Eevee🔹

Bio

Participation 5

I'm interested in effective altruism and longtermism broadly. The topics I'm interested in change over time; they include existential risks, climate change, wild animal welfare, alternative proteins, and longtermist global development.

A comment I've written about my EA origin story

Pronouns: she/her

Legal notice: I hereby release under the Creative Commons Attribution 4.0 International license all contributions to the EA Forum (text, images, etc.) to which I hold copyright and related rights, including contributions published before 1 December 2022.

"It is important to draw wisdom from many different places. If we take it from only one place, it becomes rigid and stale. Understanding others, the other elements, and the other nations will help you become whole." —Uncle Iroh

Posts 117

Comments 830

Topic contributions 136

Thank you for posting this! I've been frustrated with the EA movement's cautiousness around media outreach for a while. I think that the overwhelmingly negative press coverage in recent weeks can be attributed in part to us not doing enough media outreach prior to the FTX collapse. And it was pointed out back in July that the top Google Search result for "longtermism" was a Torres hit piece.

I understand and agree with the view that media outreach should be done by specialists - ideally, people who deeply understand EA and know how to talk to the media. But Will MacAskill and Toby Ord aren't the only people with those qualifications! There's no reason they need to be the public face of all of EA - they represent one faction out of at least three. EA is a general concept that's compatible with a range of moral and empirical worldviews - we should be showcasing that epistemic diversity, and one way to do that is by empowering an ideologically diverse group of public figures and media specialists to speak on the movement's behalf. It would be harder for people to criticize EA as a concept if they knew how broad it was.

Perhaps more EA orgs - like GiveWell, ACE, and FHI - should have their own publicity arms that operate independently of CEA and promote their views to the public, instead of expecting CEA or a handful of public figures like MacAskill to do the heavy lifting.

I've gotten more involved in EA since last summer. Some EA-related things I've done over the last year:

- Attended the virtual EA Global (I didn't register, just watched it live on YouTube)

- Read The Precipice

- Participated in two EA mentorship programs

- Joined Covid Watch, an organization developing an app to slow the spread of COVID-19. I'm especially involved in setting up a subteam trying to reduce global catastrophic biological risks.

- Started posting on the EA Forum

- Ran a birthday fundraiser for the Against Malaria Foundation. This year, I'm running another one for the Nuclear Threat Initiative.

Although I first heard of EA toward the end of high school (slightly over 4 years ago) and liked it, I had some negative interactions with the EA community early on that pushed me away from it. I spent the next 3 years exploring various social issues outside the EA community, but I had internalized EA's core principles, so I was constantly thinking about how much good I could be doing and which causes were the most important. I eventually became overwhelmed because "doing good" had become a big part of my identity, but I cared about too many different issues. A friend recommended that I check out EA again, and despite some trepidation owing to my past experiences, I did. As I got involved in the EA community again, I had an overwhelmingly positive experience. The EAs I was interacting with were kind and open-minded, and they encouraged me to get involved, whereas before, I had encountered people who seemed more abrasive.

Now I'm worried about getting burned out. I check the EA Forum way too often for my own good, and I've been thinking obsessively about cause prioritization and longtermism. I talk about my current uncertainties in this post.

Anecdotally, it seems like many employers have become more selective about qualifications, particularly in tech where the market got really competitive in 2024 - junior engineers were suddenly competing with laid-off senior engineers and FAANG bros.

Also, per their FAQ, Capital One has a policy not to select candidates who don't meet the basic qualifications for a role. One Reddit thread says this is also true for government contractors. Obviously this may vary among employers - is there any empirical evidence on how often candidates get hired without meeting 100% of qualifications, especially since 2024?

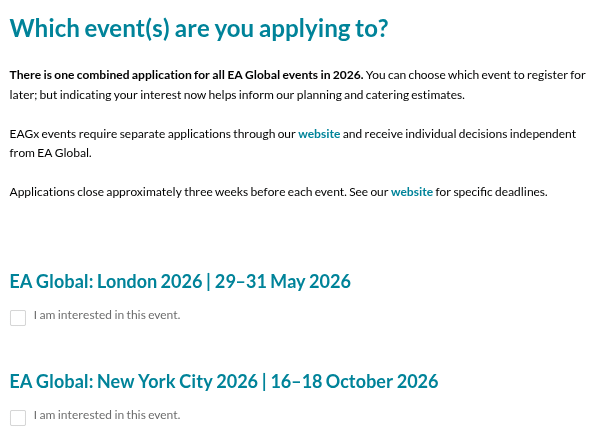

Have applications for EAG San Francisco already closed? The website states that the application deadline is February 1, but the application form only includes checkboxes for London and NYC.

This is great!

(Not sure if you already know this, but posting it in case it's helpful to anyone) Not-so-pro tip: your distributor pays only your sound recording royalties; streaming services send the mechanical and/or performance royalties for the underlying musical composition to a collective management organization (CMO), such as PRS for Music in the UK, which pays you separately.

On Spotify, composition royalties are about 1/5 of the share of revenue that Spotify pays to the owners of sound recordings, so realistically, it's probably just a few extra cents from streaming alone. But I feel like it's a good practice to claim them anyway. Enrolling a song in a CMO also enables the song to be played at live venues (e.g. if an EAG/x conference wanted to play the song, they'd license it through the CMO and you'd get paid).

How did Sam "win"? The press coverage of him since the FTX collapse has been overwhelmingly negative. The EA movement has been caught up in that as collateral damage, but some works have criticized Sam for "twisting" EA, like the podcast Spellcaster.

> ...why you just assume it will be a positive representation of EAs?

No, I'm not making any assumptions about the movies being released.

I can speak for myself: I want AGI, if it is developed, to reflect the best possible values we have currently (i.e. liberal values[1]), and I believe it's likely that an AGI system developed by an organization based in the free world (the US, EU, Taiwan, etc.) would embody better values than one developed by an organization based in the People's Republic of China. There is a widely held belief in science and technology studies that all technologies have embedded values; the most obvious way values could be embedded in an AI system is through its objective function. It's unclear to me how much these values would differ if the AGI were developed in a free country versus an unfree one, because a lot of the AI systems that the US government uses could also be used for oppressive purposes (and arguably already are used in oppressive ways by the US).

Holden Karnofsky calls this the "competition frame" - in which it matters most who develops AGI. He contrasts this with the "caution frame", which focuses more on whether AGI is developed in a rushed way than whether it is misused. Both frames seem valuable to me, but Holden warns that most people will gravitate toward the competition frame by default and neglect the caution one.

Hope this helps!

[1] Fwiw I do believe that liberal values can be improved on, especially in that they seldom include animals. But the foundation seems correct to me: centering every individual's right to life, liberty, and the pursuit of happiness.