Automating reasoning about the future at Ought

Summary

Ought’s mission is to automate and scale open-ended reasoning. Since wrapping up factored evaluation experiments at the end of 2019, Ought has built Elicit to automate the open-ended reasoning involved in judgmental forecasting.

Today, Elicit helps forecasters build distributions, track beliefs over time, collaborate on forecasts, and get alerts when forecasts change. Over time, we hope Elicit will:

- Support and absorb more of a forecaster’s thought process

- Incrementally introduce automation into that process, and

- Continuously incorporate the forecaster’s feedback to ensure that Elicit’s automated reasoning is aligned with how each person wants to think.

This blog post introduces Elicit and our focus on judgmental forecasting. It also lays out the vision we’re working towards and potential ways to get there.

Judgmental forecasting today

What is judgmental forecasting?

Judgmental forecasting refers to forecasts that rely heavily on human intuition or “qualitative” beliefs about the world. Forecasts on prediction platforms such as Good Judgment Open and Metaculus tend to be judgmental forecasts. Example questions include:

Judgmental forecasting distinguishes itself from statistical forecasting, which uses extrapolation methods like ARIMA. We need judgmental forecasting when we don’t have the right data required to train a model. This generally includes questions about low-frequency events (e.g. transformative technology, geopolitical events, or new business launches) and agent-based reasoning (e.g. business competitor behavior).

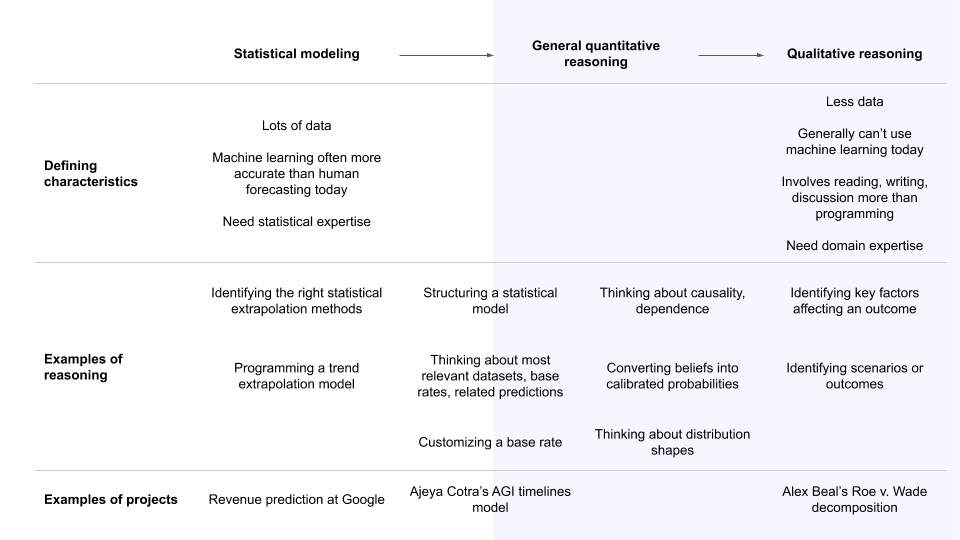

In an effort to communicate this fuzzy spectrum more concretely, we’ll share an imperfect visualization. We highlight in blue the types of reasoning we want to support first.

On the left, we have revenue forecasting at Google, where algorithms predict ad revenue in 30 second increments. We don’t plan on supporting this type of reasoning in the foreseeable future.

A bit to the right from that we have projects like Ajeya Cotra’s Draft report on transformative artificial intelligence timelines. Ajeya needed to gather data and model the trajectories of hardware prices, spending on computation, and algorithmic progress (among others), but she also used qualitative reasoning to decompose the question into compute requirements, compute availability, and so on. The experts she elicited predictions from did not build their own models, but made parameter estimates based on their prior research and expertise. We expect to be useful for parts of such projects.

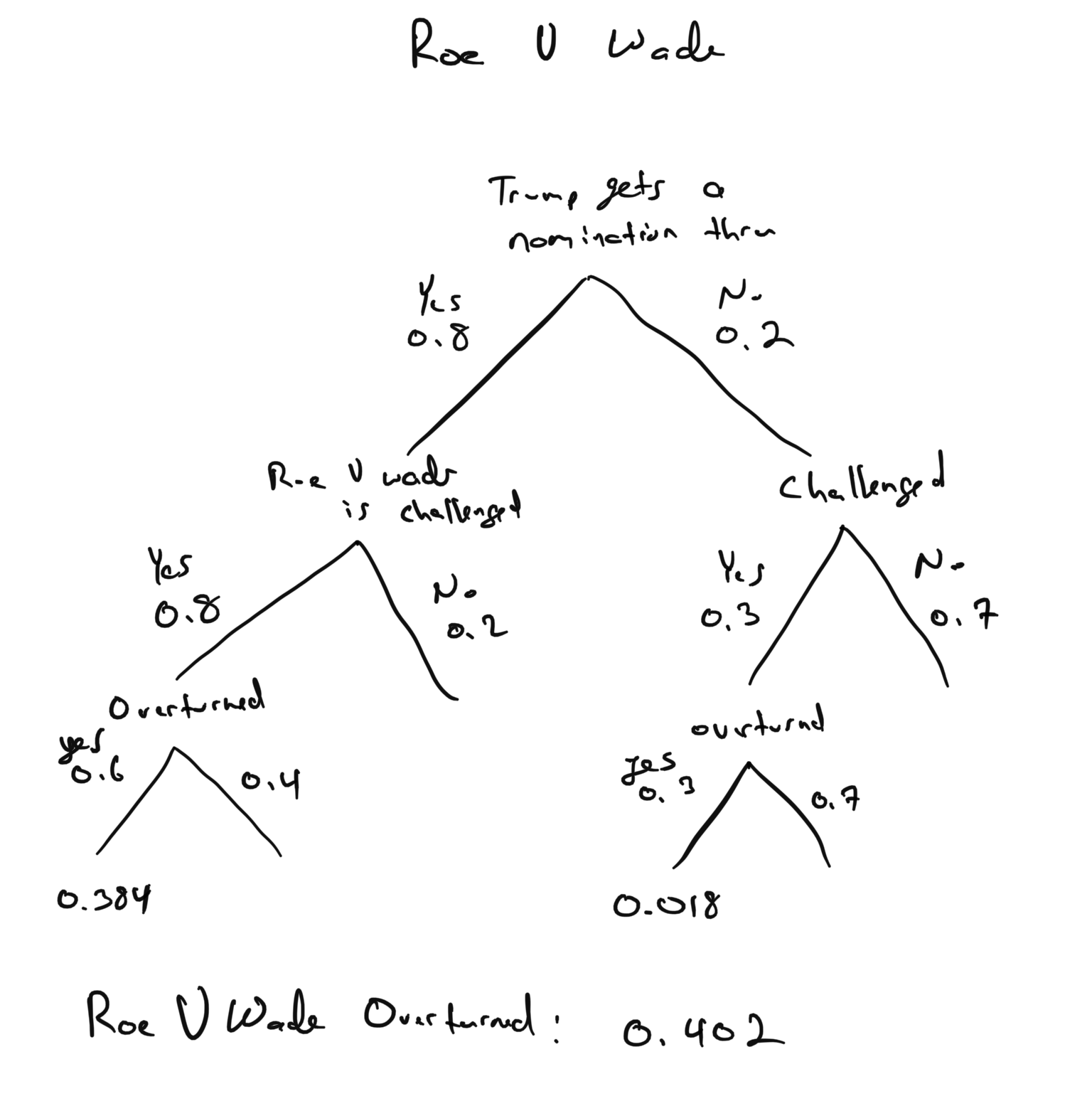

Further to the right, we have Alex Beal’s decomposition of whether Roe v. Wade will get overturned conditional on President Trump nominating a new Supreme Court Justice. Alex uses probabilistic reasoning here, but his overall decomposition is world-model based. His probabilities are not extrapolated from data but from his beliefs about Trump and the United States Supreme Court. We plan to be the most useful tool for this type of reasoning.

Reality is not as linear as the graphic above suggests: the examples do not get strictly more qualitative from left to right. Nor are types of reasoning as discrete as the graphic suggests. In practice, people often use both quantitative and qualitative reasoning. Regardless, we hope this clarifies the types of reasoning Ought focuses on.

Why should we automate judgmental forecasting?

Forecasting underpins almost all decision-making. Often, a decision is a pair of conditional forecasting questions in disguise: “Should we spend $10 million on ads this month?” breaks down into “How much revenue will we make if we spend $10 million on ads this month?” and “How much revenue will we make if we don’t buy ads?” Organizations use pairs of conditional forecasts like these to isolate the marginal impact of ads on revenue and decide whether that’s worthwhile.
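To make the "pair of conditional forecasts" framing concrete, here is a minimal sketch. The two revenue forecasts are hypothetical numbers chosen for illustration; only the $10 million ad spend comes from the example above. Comparing revenue uplift directly to cost is also a simplification, since a real decision would account for margins and uncertainty.

```python
def marginal_impact(revenue_with_action: float, revenue_without_action: float) -> float:
    """Difference between two conditional forecasts of the same quantity."""
    return revenue_with_action - revenue_without_action


def worth_doing(revenue_with_action: float, revenue_without_action: float, cost: float) -> bool:
    """The action pays off if the forecast uplift exceeds its cost."""
    return marginal_impact(revenue_with_action, revenue_without_action) > cost


# Hypothetical point forecasts in dollars; only the ad spend is from the text.
AD_SPEND = 10_000_000
forecast_with_ads = 42_000_000     # "How much revenue if we spend $10M on ads?"
forecast_without_ads = 30_000_000  # "How much revenue if we don't buy ads?"

uplift = marginal_impact(forecast_with_ads, forecast_without_ads)
decision = worth_doing(forecast_with_ads, forecast_without_ads, AD_SPEND)
```

With these made-up inputs the forecast uplift is larger than the spend, so the decision comes out in favor of buying ads. The point is that the decision is only as good as the two conditional forecasts feeding it.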

The importance of complex human reasoning couldn’t be more obvious today: the coronavirus pandemic has changed our society so dramatically that we can’t rely on past data to predict the near future. Government task forces found at times that all of the covid-19 prediction models were wrong, and had to resort to averaging them. Chief Financial Officers at public companies like Autodesk similarly found that “the current state renders all previous models useless.” Chief Marketing Officers at nimble startups like Nurx feel like throwing their computers out the window when trying to forecast demand.

In these unprecedented times, human judgment can step in to help counties like El Paso, Texas, predict covid infection peaks more accurately than purely quantitative models.

Beyond global pandemics, judgmental forecasting can help the intelligence community anticipate elections, disease outbreaks, and geopolitical dynamics. In conjunction with more quantitative modeling, it helps non-profits like Rethink Priorities estimate how much their donors will give next year. It’s necessary for organizations like the Long Now Foundation or Open Philanthropy, who want to prepare for the long-term future.

With Elicit, Ought aims to scale up the reasoning that happens in judgmental forecasting. We want to make it incredibly easy to produce good forecasts, enabling a wider range of people, companies, and teams to forecast things they don’t even imagine to be forecasting questions today. Serious forecasting should not be limited to those who can afford to pay trained forecasters. Eventually, “Will this project launch on time?” will feel as easy as figuring out the weather next week.

Human work doesn’t get us to that scale. We need to build a system that trains machines to think in the way we would if we knew more, were wiser, and had more time. Yet, we don't currently have compelling proposals for how to train machine learning systems to help people answer hard qualitative questions. Language models are usually trained with imitation learning, which probably won’t scale to significantly surpass human abilities. Reinforcement learning requires fast feedback loops and will be hard to apply to long-term forecasts that don't have this sort of feedback. To exceed human performance, we'll likely need to combine imitation learning or reinforcement learning with yet unproven approaches such as factored cognition, factored evaluation, or debate. So, in addition to being valuable in its own right, automating judgmental forecasting is a proving ground for aligned delegation of thinking more generally.

Judgmental forecasting automated

Where are we going?

Elicit is a tool for judgmental forecasting. People use Elicit to build, save, and collaborate on predictions. They also use Elicit to get alerts when prediction markets change their minds about the future.

Today, users do most of the work and Elicit automates parts of the forecasting workflow. Over time, Elicit will not just automate workflow, but increasingly support the reasoning that goes into forecasts. It will do things like point out inconsistent beliefs, suggest additional considerations, and guess at what the user really meant by their question.

This aspirational demo illustrates our current long-term vision:

In our vision, Elicit learns by imitating the thoughts and reasoning steps users share in the tool. It also gets direct feedback from users on its suggestions. Elicit progressively guesses more complex parts of the thought process, until it ends up suggesting entire decompositions, models, or explanations. As Elicit’s work gets more sophisticated, users can still dig into subcomponents of Elicit’s reasoning to evaluate parts even when they can’t evaluate the entire process end-to-end.

Elicit starts with humans doing most of the work and ends with machines doing most of the work. In the end state, the user primarily provides oversight and feedback to an AI system reasoning about the future. Having evolved with the forecaster and their constant feedback, Elicit ends up as a bespoke thought partner to each individual.

How do we get there?

As we showed in our earlier graphic, we want to support two types of reasoning in Elicit:

- Qualitative reasoning. The forecaster decomposes the question, structures a model, thinks about the causal relationships in the world, or potential outcomes of an event.

- Quantitative reasoning. The forecaster estimates numbers or the probabilistic implications of their qualitative beliefs (they specify likelihoods, distributions, etc.).

The table below shows how Elicit currently supports these two types of reasoning, and how it plans on incrementally automating them going forward.

| Qualitative reasoning | Elicit today | Elicit tomorrow |
| --- | --- | --- |
| Let users store and share notes about forecasts | • | • |
| Suggest relevant factors or subquestions influencing a forecast | | • |
| Suggest related existing questions or benchmarks | | • |
| Suggest entire decompositions of a question | | • |
| Quantitative reasoning | | |
| Associate probabilities with qualitative beliefs and notes | • | • |
| Design complex distribution shapes | • | • |
| Validate beliefs with visualization | • | • |
| Show new beliefs implied by the user’s stated beliefs | • | • |
| Express beliefs in natural language | | • |
| Estimate prior distributions | | • |

Elicit today

Today, Elicit supports qualitative reasoning by letting users add both free-form notes and notes associated with intervals and percentiles. Users can break down a question into smaller components to establish more direct links between beliefs and overall predictions.
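As an illustration of how a decomposition links component beliefs to an overall prediction, here is a minimal sketch using the law of total probability. The question, scenarios, and probabilities are hypothetical, and this is not a description of how Elicit combines components internally.

```python
def combine(scenarios):
    """Law of total probability over mutually exclusive, exhaustive scenarios.

    scenarios: list of (p_scenario, p_outcome_given_scenario) pairs.
    """
    total = sum(p for p, _ in scenarios)
    if abs(total - 1.0) > 1e-9:
        raise ValueError(f"scenario probabilities sum to {total}, expected 1")
    return sum(p * p_given for p, p_given in scenarios)


# Hypothetical decomposition of "Will this project launch on time?"
p_on_time = combine([
    (0.6, 0.9),   # key dependency ships on schedule; launch on time is then likely
    (0.4, 0.25),  # key dependency slips; launch on time is then unlikely
])
```

Writing the forecast this way makes it easier to see, once the question resolves, whether the error came from the scenario probabilities or from the conditional estimates.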

Users can track all versions of their predictions on a question. With this history, forecasters get more granular lessons each time a question resolves. Decomposing a prediction into bins, probabilities, notes, and versions isolates areas for future improvement. When they go back to reflect, users learn not just whether their prediction was right or wrong, but more directly whether they missed an important consideration or just overestimated another factor’s influence, for example.

With this same functionality, users can poll other people to get their feedback and notes on a question, as demonstrated by this AI timelines thread and this AI timelines model.

Once forecasters have organized their thoughts, Elicit makes it easy to express them quantitatively as a probability density function. Users can specify percentiles or bins and corresponding probabilities. Both are more accessible than coding a distribution in Python or identifying whether a distribution is lognormal, what the variance is, etc. Elicit is particularly useful for abnormally shaped distributions like this one on Ebola deaths before 2021, truncated at the number of deaths to date, and this multimodal distribution on SpaceX’s value in 2030.

Users don’t have to specify every part of the distribution, like they would if they were building a histogram in spreadsheets. They also don’t need to keep track of whether bins add up to 100%. Elicit will accept the messiness of overlapping bins and inconsistent beliefs.

With these features, Elicit facilitates a three-way conversation among the bins, plot, and Elicit-calculated implied beliefs. As shown in the tutorials above, users enter their probabilities and double-check them against the plots and Elicit-provided implied beliefs. They then adjust their bins accordingly. Sometimes users have stronger intuitions about specific probabilities and ranges. Other times, they have stronger intuitions about the overall shape of the curve.
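The loop between stated and implied beliefs can be sketched in a few lines. This is not Elicit's actual fitting method. It assumes the simplest possible model, a piecewise-linear CDF through the user's stated percentiles, and the function names and example numbers are hypothetical.

```python
from bisect import bisect_right


def make_cdf(percentiles):
    """Build a piecewise-linear CDF from (value, cumulative_probability) points.

    The points must include endpoints at cumulative probability 0 and 1.
    """
    points = sorted(percentiles)
    xs = [x for x, _ in points]
    ps = [p for _, p in points]
    if ps[0] != 0.0 or ps[-1] != 1.0:
        raise ValueError("need endpoints at cumulative probability 0 and 1")
    if any(a > b for a, b in zip(ps, ps[1:])):
        raise ValueError("cumulative probabilities must not decrease")

    def cdf(x):
        if x <= xs[0]:
            return 0.0
        if x >= xs[-1]:
            return 1.0
        # Index of the segment whose left endpoint is at or below x.
        i = bisect_right(xs, x) - 1
        frac = (x - xs[i]) / (xs[i + 1] - xs[i])
        return ps[i] + frac * (ps[i + 1] - ps[i])

    return cdf


def implied_probability(cdf, low, high):
    """Probability mass the distribution assigns to the interval [low, high]."""
    return cdf(high) - cdf(low)


# Hypothetical stated beliefs: 25th percentile at 20, median at 50, range 0 to 100.
cdf = make_cdf([(0, 0.0), (20, 0.25), (50, 0.5), (100, 1.0)])
belief_20_to_75 = implied_probability(cdf, 20, 75)
```

Here the user never said anything about the interval from 20 to 75, but their percentiles imply a probability for it. If that implied number feels wrong, that is a cue to go back and adjust the stated percentiles.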

Elicit tomorrow

Elicit today helps forecasters make their thinking explicit. Most of the value comes from giving people a place to organize, store, and share the thoughts they’ve generated on their own. Over time, Elicit will generate more of the thoughts, letting the forecaster play evaluator.

For example, Elicit can integrate language models to operationalize the fuzzy questions forecasters care about into the concrete questions they can measure and predict. It can also use language models to find base rates or datasets to expedite the research process, the most time-consuming part of the forecasting workflow.

We can already extract the resolution criteria and data sources from the lengthy text descriptions of Metaculus questions. We’re not far away from being able to extract relevant information from longer papers and publications.

With semantic search, Elicit will help people find relevant forecasting questions that already exist across all forecasting platforms. Better search reduces duplicate work and helps forecasters incorporate background research or existing predictions into any new question they are working on.

Eventually, we hope language models can suggest the complete list of factors - the entire decomposition - for users to review and accept / reject.

On the quantitative reasoning side, we plan to use language models to convert natural language statements into precise distributions. The ideal probability input format varies a lot for each user-question pair. Some people want to express their beliefs using bins. Percentiles are easiest for date questions. Sometimes drawing or visually adjusting curves works best. In other cases, users prefer to specify parameters such as function family, mean, and variance.

To accommodate these varied preferences, we can train a language model to convert any text-based input into a distribution and make a suggestion that the user can approve or reject. We eventually want to learn what a vague statement like “Most likely above 50” means for each user and in each context. We then want to automatically generate a suitable prior for the user to evaluate.
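To show the shape of that interface (text in, suggested constraint out, user approves or rejects), here is a toy stand-in. It uses a regular expression and a lookup table rather than a language model, and the phrase-to-probability mappings are hypothetical values that a real system would have to learn per user and per context.

```python
import re

# Hypothetical calibration: what each hedge phrase means as a probability.
DEFAULT_PHRASES = {
    "almost certainly": 0.95,
    "most likely": 0.75,
    "probably": 0.65,
    "unlikely": 0.2,
}

# Matches statements of the form "<hedge phrase> above|below <number>".
PATTERN = re.compile(
    r"^(?P<hedge>.+?)\s+(?P<side>above|below)\s+(?P<value>-?\d+(?:\.\d+)?)$",
    re.IGNORECASE,
)


def suggest_constraint(statement, phrases=DEFAULT_PHRASES):
    """Turn a statement into a suggested probability constraint, or None."""
    match = PATTERN.match(statement.strip())
    if match is None:
        return None
    hedge = match.group("hedge").lower()
    if hedge not in phrases:
        return None
    return {
        "side": match.group("side").lower(),
        "threshold": float(match.group("value")),
        "probability": phrases[hedge],
    }


suggestion = suggest_constraint("Most likely above 50")

# A different user might mean something weaker by the same words.
cautious_user = suggest_constraint("Most likely above 50", {"most likely": 0.6})
```

Passing a different phrase table for each user is the crude version of the personalization described above: the same sentence yields a different suggested constraint depending on who said it.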

Conclusion

Ought’s mission is to automate and scale open-ended reasoning. We want to make good reasoning abundant. To attain that scale, we need automation and machine learning - human work is too expensive.

Today, machine learning works best when we can gather a large amount of task-relevant data. The most impressive examples involve imitation learning on static large-scale datasets (GPT-3) and reinforcement learning in situations with fast feedback (AlphaGo). We don't yet know how to exceed human capability at judgmental forecasting and in other situations that require qualitative reasoning, have limited data, and face slow feedback loops. With Elicit, we aim to make machine learning as useful for qualitative forecasts made with limited data as it is for data-rich situations today.

In the beginning, people do most of the work and thinking in Elicit; Elicit provides simple workflow automation. At this early stage, we’re studying what good reasoning looks like and how we can automate or support it. Elicit then starts to guess at increasingly complex parts of the forecaster’s thought process, suggesting subquestions, factors, scenarios, related questions, datasets, etc. Users provide ongoing feedback; Elicit evolves with and around each user.

By automating the reasoning and adding value especially in contexts with limited data, we make high-quality thinking and forecasts available even for questions that might only come up once for one person. If we succeed, answering questions like “When will my daughter’s passport arrive?” and “When will this software project finish?” will be as easy as looking up the weather for next week.

If you’re excited about building tools for thinking about the future, there’s plenty of work to do.