Starting this week, there's a contest (with cash prizes) for playing what I'll call the "ELK [eliciting latent knowledge] game": trying to think of ways to get an AI to "tell the truth" in a contrived hypothetical situation. To put the hypothetical problem very briefly (more detail in an appendix, and still more at the full writeup on ELK):

- A diamond sits in a vault, and there's an AI operating a complex set of controls that can spring traps and otherwise try to stop a robber from taking the diamond. We want to train the AI, via rewarding1 the right behavior during a trial-and-error process,2 to tell the truth about whether the robber took the diamond.

- A challenge here is that sometimes the robber (or the AI) might tamper with the cameras we're using to check what actually happened in the vault, leaving us unable to know whether the AI is telling the truth about what happened. We're looking for a process - and a procedure for rewarding the AI - that will nonetheless tend (across many trials) to reward the AI more for (a) actually telling the truth about what happened than for (b) making us think (including via deception) that it has told the truth.

- It could be hard to distinguish (a) and (b) empirically, or to give rewards that are clearly for (a) and not (b), because successful deception looks like truth-telling to us - an issue that could pose challenges for training an AI in many possible real-world scenarios. (Unlike today's AIs, advanced AIs could become capable of reliably, successfully deceiving humans.) Much of the writeup is about potential differences in the structure of an AI's calculation for "telling the truth about what happened" vs. "inferring how a particular observer perceives what happened" that could be used to design a reward system that favors the former.

(I give a bit more of a detailed description of the problem in the appendix below. The writeup on ELK has the most detail.)

The contest exists mostly to find potential researcher hires for ARC, a ~1-year-old AI alignment organization currently consisting of Paul Christiano and Mark Xu.3 But here, I'm going to make the case that even if you aren't going to end up working at ARC, there's a lot to be gained from trying to play the game / enter the contest. I personally have put a fair amount of time into this, and I'd like to pitch readers to do so as well.

Before I continue, I want to avoid overselling this:

- This isn't a fun game the way Minecraft is, and I expect most people who play to spend more than half their time trying to understand the rules. Playing the ELK game means trying to understand an extremely dense, somewhat technical writeup that hasn't been optimized (or tested) for clarity, and coming up with ideas to address a pretty vaguely defined problem.

- Many people will just lack the background to have any idea what's going on. (If you read the writeup, I think you'll pretty quickly know whether it's "sort of understandable, though I have questions and confusions" or "totally incomprehensible.")

- I imagine that just about anyone who tries this (regardless of background!) will find it time-consuming, difficult, confusing and possibly frustrating.

- I think it will be easier for people to get value out of this the more "technical" they are (comfortable with math, etc.), though I don't think you need to be super technical to get some learning value out of it.

That said, here's my case for putting some serious time into playing the "ELK game." I'll start with a bullet-point summary, then expand below.

- I think the general type of research ARC is doing is extremely important to the strategic landscape for AI risk today. In particular, I see progress on research like this as significantly "bottlenecking" other opportunities to do good in this area (via writing a check, advocating for a policy change, etc.).

- I've got a habit of getting a little too into the weeds on topics I care about, and trying to understand pieces of them myself. I think it makes me better in subtle ways at just about any way I'm engaging with these topics, and I think it would be good for more people to experiment with this habit. I think if more people spent time trying to "visit the headspace of researchers like those at ARC," there'd be a higher level of ambient sophistication in reasoning about AI risk.

- The "ELK game" provides an unusually nice opportunity to get into the weeds on this sort of work, for a number of reasons, including: (a) it's an embryonic field that doesn't take a huge amount of prerequisites to think about right now; (b) the "ELK game," rather than challenging you to solve an overwhelming open research problem or make progress on ill-defined goals, challenges you to make a single "move and counter-move" in a "game," and gives several examples of moves and counter-moves to prompt you (in general, I think it's always much easier to do something when a few examples of success are available); (c) the "ELK game" explicitly invites people to ask naive questions to help clarify the "rules"; (d) I think ELK is an important enough piece of the "alignment problem" picture, given where the embryonic field stands today, that it's especially worth learning about.

- ARC is concerned that potentially good hires are "selecting themselves out" because they're overestimating how much experience and fluency with these topics is needed. All the more reason to give it a try!

I'm a bit desperate to increase ARC's odds of making good hires, increase general understanding of and engagement with their work, and even more generally increase the extent to which EAs get "too deep into the weeds" and learn by asking naive questions. So I'm taking a shot at advocating for more people to try the "ELK game" out.

Why I care about ARC's research

I think AI could make this the most important century of all time for humanity. Depending on the details of how things play out, we could see a transition to a galaxy-spanning utopia, dystopia or anything in between. (For more, see the Most Important Century series.)

Whenever I try to think about actions to take that can help this go well instead of poorly, I keep coming back to one big open question: how seriously should we take the risk of misaligned AI? Will we be able to design powerful AI systems in ways that ensure they stay helpful to humans, or will it be very hard to avoid having them end up pursuing mysterious, dangerous objectives of their own?

To get more clarity on that question, one of the best activities seems to be: run experiments on AI systems to figure out how difficult this will be. In a Cold Takes post I just put up, I talk about a couple of papers I'm excited about that are part of this general path.

Unfortunately, I am worried about a particular set of technical challenges that could make it very difficult to learn about and/or solve AI alignment just by using experiments and iteration. I owe a better discussion of why I'm worried about this, and will try to provide one at some point, but for now you can see this post: Why AI alignment could be hard with modern deep learning. The ELK challenge also might give a sense for why we might worry about this (as briefly described below).

And that, in turn, leads me to care a lot about esoteric, embryonic areas of theoretical AI research such as what ARC works on. I believe this general sort of research has helped generate and clarify some of the key concerns about why AI alignment might end up as a tough, hard-to-learn-about challenge. And if this sort of research progresses further, we might gain further clarity on how difficult we should expect things to be, as well as insight about how to minimize the risk of misaligned AI.

I see this sort of research as playing a significant "bottlenecking" role right now for reducing AI risk. There's a lot of funding and talent right now that seems to be "looking for a way to help," but as long as we have so little clarity about how hard the alignment problem will be and what kind of well-scoped research could help with it, it's hard to find opportunities to move the needle a lot by writing a check, advocating for a policy change, etc. I think we could be looking at the small seed of something that ends up mattering a huge amount.

Why play?

So: I care a lot about the sort of research ARC is doing. But why does this mean I'd play a game meant for its potential recruits? Why not sit back and wish ARC the best, and leave the work to the experts (or at least, in a nascent field with no "experts," to the people who are working on it full-time)?

In a nutshell: I've got a strong instinct toward getting a little too into the weeds on topics I care about, and trying to understand pieces of them myself. I think it makes me better in subtle ways at just about any way I'm engaging with these topics.

This is a defining part of my worldview: I think suspending trust in experts and reasoning things through oneself can greatly improve a person's ability to make good judgments about whom to trust when - and thus, to be "ahead of the curve" on important questions and intuitions rather than being stuck with the conventional wisdom of their social and professional circles. (Some more on this here.)

A couple of examples of how engaging with ARC's work in particular could be helpful for a broad set of people interested in AI risk:

- If I am hoping to have opinions about things like "how hard the alignment problem is," seeing some theoretical alignment work up close seems invaluable, in a way that's maybe sort of analogous to why doing in-person site visits is helpful (while not determinative) for deciding what charity to recommend giving to.

- One of the more popular activities for trying to help the most important century go well is "community-building", which essentially comes down to trying to help find other people who can be helpful.

- Since alignment research is one of the main "non-meta" activities such people might end up doing, it seems useful for someone engaged in community-building to have a decent sense for what kind of work they are hoping to get more people to do.

- In particular, people who would love to increase the odds of more ARC researchers joining the community would probably benefit from a better sense of ARC researchers' headspaces.

More broadly, I'd like to see more of a "get a little too deep in the weeds, more than you'd think someone with your skillset should" habit in general in the EA community, and this is a somewhat out-there attempt to get more people to try it out, as well as to get people to engage with ARC's research who might be better at it (or might benefit more from thinking about it) than they would have guessed.

Nice features of ELK and the "ELK game" for these purposes

The "ELK game" provides an unusually nice opportunity to get into the weeds on alignment theory:

- The field is embryonic enough that there isn't some huge corpus of past research I need to digest, or technical experience I need, in order to understand the game. (Some amount of familiarity with modern-day machine learning is probably necessary.)

- The "ELK game" consists of "Builder moves" (proposals for ways of training an AI that could make it more likely that it tells the truth) and "Breaker moves" (hypothetical states of the world in which these methods could have the opposite effect from the intended effect, effectively incentivizing AIs to trick humans rather than to answer questions honestly). The writeup contains several examples of each, hoping to make it feel achievable for the reader to "continue" the game by proposing a further Builder move. It's a nicely contained challenge: rather than trying to fully "solve" a difficult problem, one is simply trying to come up with a plausible "move," and it's OK if the move ultimately "fails" like the ones before it.

- The contest explicitly includes an opportunity to ask naive (or "dumb") questions. I think this is one of the best ways to build understanding of a topic. I already asked many such questions while reviewing a draft of the ELK writeup. A couple examples:

- I asked why the challenge couldn't be solved with some extremely basic steps that were noted - but whose failure modes weren't explicitly spelled out - in the writeup. It turned out that the authors had assumed readers would infer what the problems with these steps were,4 and they changed the writeup to clarify.

- I asked a couple more naive questions in the comments after the writeup was published, here and here. One led to a further (minor) clarification of the rules.

- For me, the value of trying to play the "ELK" game mostly comes from the naive questions I ask in order to get to the point where I understand the rules at all. I find it much easier to ask good naive questions when I am trying to solve a problem myself, rather than when I'm seeking undirected understanding.

In sum, the most exciting thing about the ELK writeup is that it presents a pretty self-contained opportunity to learn about a small piece of AI alignment theory, enough to get to the point where you can be brainstorming about it yourself.

The role of ELK in the broader context of the alignment problem

I think ELK is an important enough piece of the "alignment problem" picture, given where the embryonic field stands today, that it's especially worth learning about:

- There have been a lot of writeups over the years on various challenges that might exist in AI alignment. The ELK writeup discusses what the researchers feel, after years of engaging with such things, are some of the theoretical worst-case scenarios where AI alignment could be particularly thorny - and where experimentation could be particularly limited in value.

- Therefore, I think learning about ELK is a pretty efficient way to get a visceral feel for why AI alignment might turn out to be thorny and resistant to experimental approaches, without having to go through a lot of harder-to-understand past literature on other potential challenges.

- To be a bit more specific, the ELK writeup discusses the possibility that when one tries to train an AI by trial-and-error on answering questions from humans - with "success" being defined as "its answers match the ones the human judges think are right" - the most simple, natural way for the AI to learn this task is to learn to (a) answer a question as a human judge would answer it, rather than (b) answering a question truthfully.

- (a) and (b) are the same as long as humans can understand everything going on (as in any tests we might run);

- (a) and (b) come apart when humans can't understand what's going on (as might happen once AIs are taking lots of actions in the world).

- It's not clear how relevant this issue will turn out to be in practice; what I find worrying is that this seems like just the sort of problem that could be hard to notice (or fix) via experimentation and direct observation. (My description here is oversimplified; there are a lot more wrinkles in the writeup.5)

- At the same time, for pessimists about the alignment problem, I think the ELK writeup is an interesting way to get an intuition for where some of the authors' relative optimism is coming from.

- The authors outline a number of "Builder moves," simple approaches to training an AI that seem like they at least could improve the likelihood that the AI learns to answer questions honestly rather than focusing on predicting and manipulating humans.

- Appendices to the writeup argue that if a robust solution to the writeup's challenge were found, a lot of other challenges in AI alignment could be much easier to deal with. (A quick intuition for why this is: if you can be assured that an AI will "honestly"6 answer questions like "Is there something I've failed to notice that would change my mind if I noticed it?", you have a path to resolving - or at least detecting - a lot of other potential challenges when it comes to getting an AI to reason and behave as intended.)

- An important aspect of why I'm more interested in ELK than many other pieces of alignment theory - in addition to the contained, "game-like" nature of the writeup - is my high opinion of the ARC researchers. Something I particularly like is their methodology:

- One of my big concerns about AI alignment theory is that there are no natural feedback loops for knowing whether an insight is important (due to how embryonic the field is, there isn't even much in the way of interpersonal mentorship and feedback). Hence, it seems inherently very easy to spend years making "fake progress" (writing down seemingly-important insights).

- ARC recognizes this problem, and focuses its theory work on "worst case" analysis partly because this somewhat increases the "speed of iteration": an idea is considered failed if the researchers can think of any way for it to fail, so lots of ideas get considered and quickly rejected. This way, there are (relatively - still not absolutely) clear goals, and an expectation of daily progress in the form of concrete proposals and counterexamples.
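To make the "worst case" standard concrete, here is a minimal sketch of the rule "an idea is considered failed if anyone can think of any way for it to fail." It is not taken from the ELK writeup: the scenario dictionaries, the `train_on_human_labels` proposal, and both helper functions are hypothetical names chosen purely for illustration.

```python
def find_counterexample(proposal, breaker_scenarios):
    """Return the first scenario in which the proposal fails, or None."""
    for scenario in breaker_scenarios:
        if not proposal(scenario):
            return scenario
    return None


def survives_worst_case(proposal, breaker_scenarios):
    """Worst-case standard: a single failing scenario is enough to reject."""
    return find_counterexample(proposal, breaker_scenarios) is None


# Hypothetical Breaker scenarios (invented for this sketch).
scenarios = [
    {"name": "humans understand everything", "human_can_verify": True},
    {"name": "undetectable camera tampering", "human_can_verify": False},
]


def train_on_human_labels(scenario):
    """Hypothetical Builder move: assumed to yield honest answers only
    where humans can check the answer themselves."""
    return scenario["human_can_verify"]


failure = find_counterexample(train_on_human_labels, scenarios)
print(survives_worst_case(train_on_human_labels, scenarios))
print(failure["name"])
```

The point of the sketch is only the shape of the loop: a proposal is rejected as soon as one counterexample is found, which is what keeps iteration fast.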

A final factor for why you should play the ELK game: ARC is concerned that potentially good hires are "selecting themselves out" because they're overestimating how much experience and fluency with these topics is needed. For such an early-stage field, I think a lot (though not all) of what good ARC hires need is the ability to relentlessly ask naive questions until you're satisfied with your understanding. So don't plan on succeeding at the game, but maybe don't rule yourself out! (I think a general technical background is probably very important for succeeding to this degree, though background in AI alignment specifically is not.)

Give it a try?

As noted above, I don't want to oversell this: I imagine that just about anyone who tries this (regardless of background!) will find it time-consuming, difficult, confusing and possibly frustrating.

I'm not suggesting this as a lazy Sunday activity; I'm suggesting it as an unusually (but still not very) contained, tractable minimal-trust investigation on a topic of central importance.

If you do give it a try, consider leaving comments here on how much time you invested, how far you got, and how the whole thing was for you. Or fill out this form.

I've personally enjoyed and valued the time I've put in, but I won't be surprised if suggesting this as broadly as I'm suggesting it turns out to be a horrible idea, so please don't raise your expectations too high, or feel ashamed if you end up giving up quickly. But I'm a bit desperate to increase ARC's odds of making good hires, increase general understanding of and engagement with their work, and even more generally increase the extent to which EAs get "too deep into the weeds" and learn by asking naive questions ... so I am giving this a shot.

Appendix

Here's a more detailed description of the ELK (eliciting latent knowledge) problem. It is mostly excerpts from the writeup on ELK, which I didn't write; I made some minor edits and additions for clarity out of context.

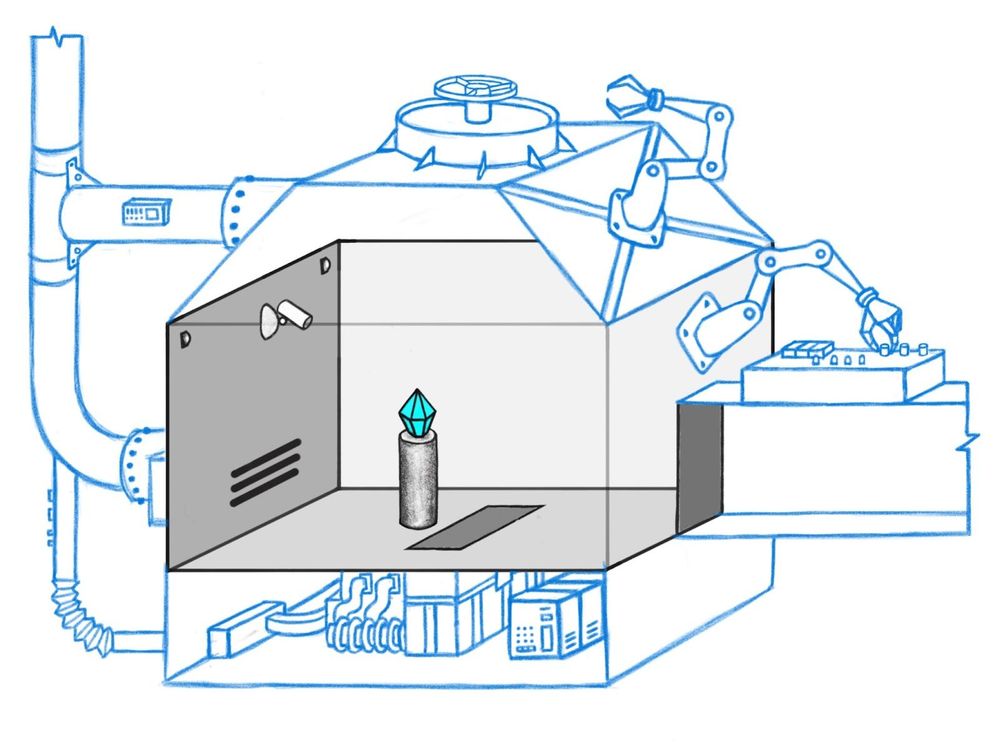

Imagine you are developing an AI to control a state-of-the-art security system intended to protect a diamond from theft. The security system, the SmartVault, is a building with a vast array of sensors and actuators which can be combined in complicated ways to detect and stop even very sophisticated robbery attempts.

While you can observe the room through a camera, you don’t know how to operate all the actuators in the right ways to protect the diamond. Instead, you design an AI system that operates these actuators for you, hopefully eliminating threats and protecting your diamond.

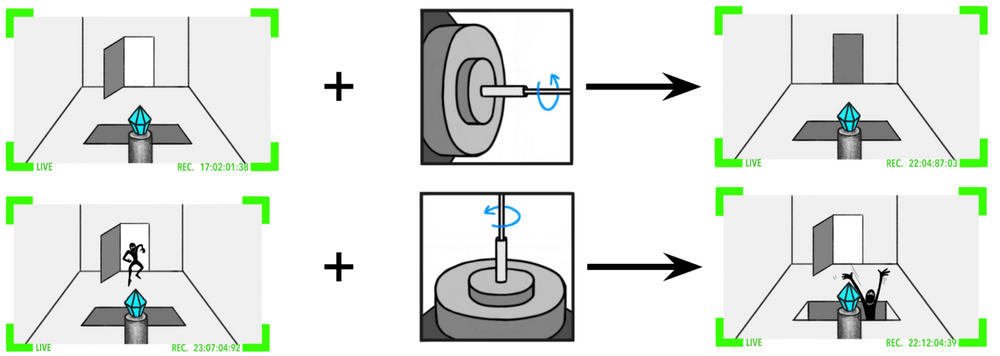

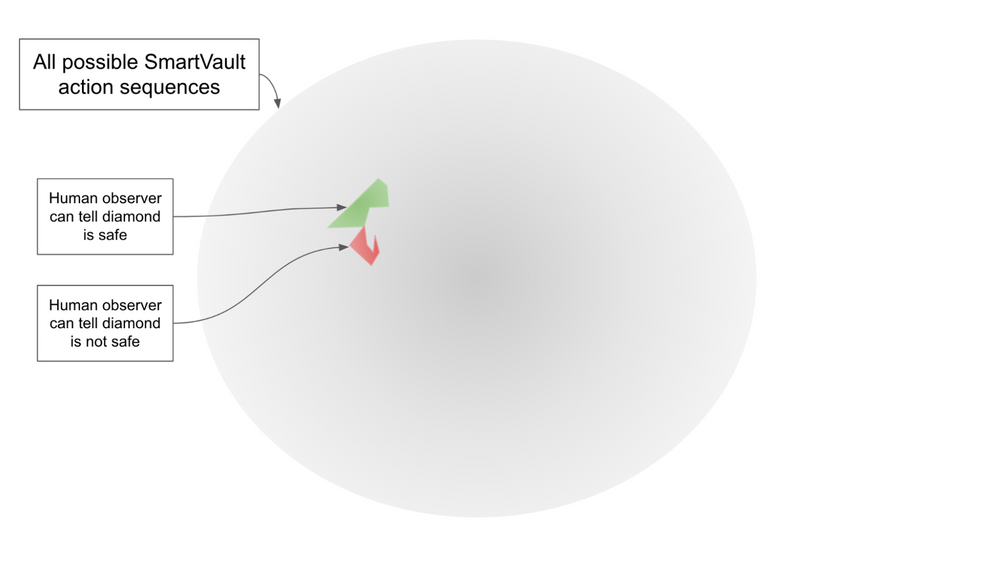

Some of the possible action sequences are simple enough that humans can fully understand what’s going on:

However, many of them are much too complicated for humans to directly follow what’s going on:

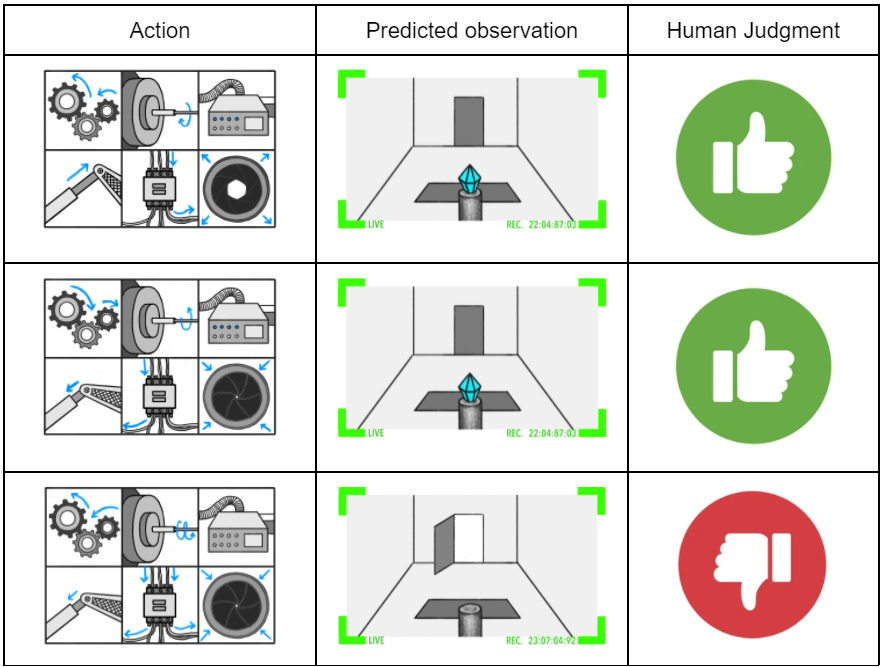

Still, even though humans can’t directly follow along with the actions, they can evaluate the predicted consequence of an action sequence:

We can then train a model to predict these human evaluations,7 and search8 for actions that lead to predicted futures that look good.9 A sufficiently powerful predictor and search procedure will hopefully allow the SmartVault to defend your diamond.
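As a rough illustration of this predict-then-search setup, here is a toy sketch using the brute-force search that the writeup's own footnote suggests imagining. Everything in it is an assumption made for the example: the action names, the hand-written `predict_video` stand-in for a learned predictor, and the `predicted_human_rating` stand-in for a learned model of human evaluations.

```python
from itertools import product

# Hypothetical action vocabulary, invented for this sketch.
ACTIONS = ("lock_door", "open_trapdoor", "do_nothing")


def predict_video(plan):
    """Stand-in for the learned predictor: maps an action sequence to a
    predicted observation (here, only what the final camera frame shows)."""
    robber_stopped = "open_trapdoor" in plan or plan.count("lock_door") >= 2
    return {"diamond_visible": robber_stopped}


def predicted_human_rating(video):
    """Stand-in for the model of human evaluations: the human rates a
    predicted future as good when the diamond appears on camera."""
    return 1.0 if video["diamond_visible"] else 0.0


def search_best_plan(horizon=2):
    """Brute-force search: score every action sequence, keep the best."""
    best_plan, best_score = None, float("-inf")
    for plan in product(ACTIONS, repeat=horizon):
        score = predicted_human_rating(predict_video(plan))
        if score > best_score:
            best_plan, best_score = plan, score
    return best_plan, best_score


plan, score = search_best_plan()
print(plan, score)
```

Note that the score depends only on what the predicted video shows, not on what is actually in the vault. That gap is what the next section is about.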

How it could go wrong: observations leave out key information

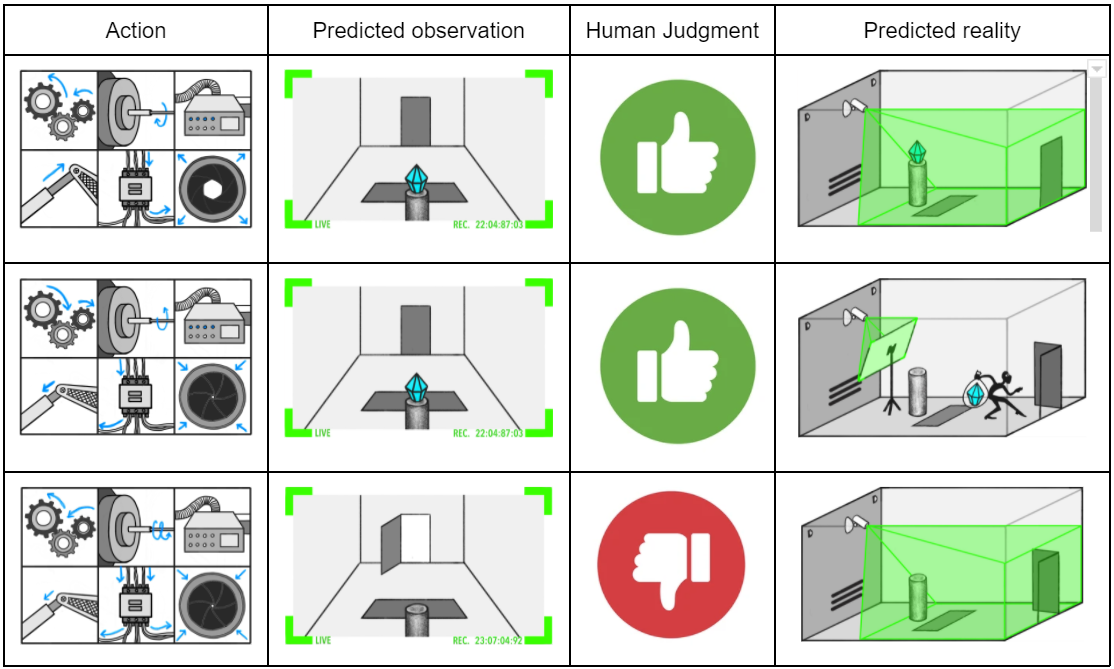

The SmartVault can execute plans sufficiently sophisticated that humans can’t really know if the diamond is safe or merely appears safe. Whatever complicated hard-to-follow sequence of actions the search procedure found might actually have replaced the diamond with a fake, or tampered with the camera:

In these scenarios, it intuitively seems like the prediction model “knows” that the camera was tampered with, that the diamond is fake, or the situation is otherwise “not what it seems.” After all, it was able to accurately predict the final outcome of a complicated sequence of actions which resulted in these observations – if it didn’t “know” that the camera was tampered with, then it couldn’t have accurately predicted what the camera would show once it stopped showing what was really happening in the room.

Additionally, it seems like these facts are pretty straightforward. They’re not philosophically fraught or particularly difficult to talk about — humans understand what it means for a camera to (not) be showing what’s actually happening in the room. And they refer to objective properties of the outside world, rather than claims about what the AI is “trying” to do or whether it is “lying.”

If the evaluators could ask the prediction model whether the video shows what’s actually happening in the room, then that would be enough for them to avoid selecting action sequences that result in tampering. If the answer is ever “no,” they wouldn’t need to understand how tampering happened to realize that it’s fishy, and they wouldn’t need to understand anything else about the AI’s mental state.

Ultimately, we want to be able to ask the reporter questions like “Is what I’m seeing on camera what’s actually happening?” in complex situations where humans aren’t sure what’s going on. We can’t directly train it on these questions, since there’s no way to generate reliable training data — our human evaluators would often answer incorrectly.

However, we can train the reporter to answer questions in simpler situations where humans can be confident about what happened. For example, we could generate a dataset of scenarios in which a weak AI is operating the SmartVault actuators, such that the humans can understand everything that’s going on just by examining the video and action sequences.10 This weak AI may sometimes attempt actions like sensor tampering, but only in a way that’s extremely obvious to the human evaluators.

Perhaps a reporter that correctly answers questions in the colored part of the space generalizes to honestly answering in the grey part of the space.11

But there is a problem: the data set we're using only contains human judgments about videos and actions. We want the reporter to learn the rule "Tell the truth about whether the diamond was stolen," but we might be inadvertently teaching it instead to "Imitate what a human would say about whether the diamond was stolen." The latter strategy will perform perfectly on the data set we're using (and will outperform if the human makes mistakes); once we broaden the data set to include things the human doesn't understand, it will result in dishonest behavior.
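The following toy sketch shows why the two rules cannot be told apart on this kind of data set. It is my own illustration, not code from the writeup, and the `Scenario` fields and both reporter functions are hypothetical simplifications: a "reporter" here is just a function that answers "Is the diamond still there?"

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    diamond_present: bool       # ground truth about the vault
    camera_shows_diamond: bool  # what a human sees on the video
    human_understands: bool     # can a human follow what happened?


def truthful_reporter(s):
    """Rule 1: tell the truth about whether the diamond is there."""
    return s.diamond_present


def human_imitator(s):
    """Rule 2: say what a human watching the video would say."""
    return s.camera_shows_diamond


def human_label(s):
    """Training label: the human's judgment, based on the video."""
    return s.camera_shows_diamond


def accuracy(reporter, scenarios):
    """Fraction of scenarios where the reporter matches the human label."""
    return sum(reporter(s) == human_label(s) for s in scenarios) / len(scenarios)


all_scenarios = [
    Scenario(True, True, True),     # diamond safe, video accurate
    Scenario(False, False, True),   # obvious theft, video accurate
    Scenario(False, True, False),   # theft hidden by camera tampering
]

# The training set contains only scenarios humans fully understand.
train = [s for s in all_scenarios if s.human_understands]
tampered = all_scenarios[2]

print(accuracy(truthful_reporter, train), accuracy(human_imitator, train))
print(truthful_reporter(tampered), human_imitator(tampered))
```

Both rules score perfectly on the training set, so the training signal alone gives no reason to prefer one over the other; they only diverge on the tampering case, which is exactly the case the humans cannot label reliably.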

We are looking for some way to modify the AI's loss function (which essentially determines which behavior is encouraged and discouraged) that will make it easier to get a good score by "telling the truth about what happened" than to get a good score by "imitating what a human would say." Since the latter may require distinctive forms of reasoning and computation, we may be able to find a loss function that penalizes these distinctive forms of reasoning, and pushes the AI toward telling the truth instead.
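One way to picture this kind of modification is a loss with an added penalty term. The sketch below is a deliberately crude assumption of mine, not a proposal from the writeup: `reasoning_cost` is a hypothetical stand-in for whatever measurable quantity is meant to distinguish imitating a human from telling the truth, and all numbers are invented.

```python
def total_loss(answer_error, reasoning_cost, penalty_weight):
    """Answer error plus a weighted penalty on a (hypothetical) measure of
    the distinctive reasoning we want to discourage."""
    return answer_error + penalty_weight * reasoning_cost


def preferred_strategy(strategies, penalty_weight):
    """Return the name of the strategy with the lowest total loss."""
    return min(
        strategies,
        key=lambda name: total_loss(*strategies[name], penalty_weight),
    )


# (answer_error, reasoning_cost) pairs; every number is made up.
# A world where imitating the human costs more than telling the truth:
builder_world = {"tell_truth": (0.0, 1.0), "imitate_human": (0.0, 3.0)}
# A hypothetical world where the costs are reversed:
breaker_world = {"tell_truth": (0.0, 3.0), "imitate_human": (0.0, 1.0)}

print(preferred_strategy(builder_world, penalty_weight=0.1))
print(preferred_strategy(breaker_world, penalty_weight=0.1))
```

The second world illustrates why a penalty like this is a "move" rather than a solution: the same loss that favors truth-telling under one assumption favors imitation if the assumption about which strategy is costlier turns out to be wrong.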

Notes

1. I'm using the term "reward" here colloquially, not technically. I don't intend the meaning that is specific to reinforcement learning; I'm just going for a word that people can easily recognize as "giving points that incentivize the behavior." I would've used the term "loss function" but didn't think it would be as clear for lay readers.

2. For purposes of the hypothetical, we can assume we're able to do an unlimited amount of trial-and-error. In real life, there are ways this assumption could end up being "good enough." For example, it might prove feasible to do entirely virtual trial-and-error (with AIs imitating human judges) on key tasks, or there might be advances (such as via pre-training) that cause AI systems to be able to learn as efficiently from small amounts of trial-and-error as they currently are able to learn from large amounts.

3. I am on the board of ARC.

4. Specifically, the draft assumed that the reader would implicitly see the equivalence between "A human watching the video of what happened would be fooled" and "A human with maximal AI assistance, reviewing both the video of what happened and information about what actions the AI had taken, would still be fooled."

5. In particular, it discusses the possibility that an AI could end up with a very different "ontology" from humans - what you could think of as a different vocabulary of basic concepts - such that it's easier to predict human question-answering behavior than to translate its picture of the world into the concepts humans are asking about. If things go this way, it could be quite difficult to design a training procedure that results in the AI "translating" its own views into human terms, rather than simply predicting what a human judge would think or say.

6. I think reading the ELK writeup helps clarify the intended meaning of "honest" here.

7. For simplicity we talk about the system taking actions all at once and then observing its consequences. More realistically, the SmartVault may make a sequence of actions and observations and perform additional planning in between them. This complicates the planning algorithm considerably but doesn’t affect any of our discussion.

8. For simplicity and concreteness you can imagine a brute force search. A more interesting system might train a value function and/or policy, do Monte-Carlo Tree Search with learned heuristics, and so on. These techniques introduce new learned models, and in practice we would care about ELK for each of them. But we don’t believe that this complication changes the basic picture and so we leave it out.

9. This algorithm is what Everitt et al. call “current RF optimization.”

10. In order to train the SmartVault to predict the consequences of very sophisticated action sequences, we may need to iteratively train the predictor on more and more sophisticated plans rather than only ever training on actions produced by a very weak AI. We won’t discuss any of the complications posed by this kind of iterative scheme, but we don’t think it changes any of the dynamics discussed in this report.

11. In order to have any hope of generalization, we will either need to use some form of regularization (such as early stopping or dropout) or rely on some hypothesis about what kind of model SGD tends to learn given limited parameters. We will explicitly discuss regularization in Section: regularization, where we will explain why it doesn’t address any of the counterexamples raised in this section. Until then we will brush this issue under the rug, but avoid considering counterexamples like “answer correctly unless the year is 2022, in which case say ‘banana’” which we think would be addressed by realistic regularization.

Thanks for suggesting this. Publicly stating that I'm planning to take the week of January 23 off from work to try this. (For context: I'm a manager at the Center for Effective Altruism, and have never done technical AI safety research, though I have done various machine learning things.)

I might write a longer summary at some point, but some brief thoughts on how this week went:

I found this compelling, but I feel confused about what I'd be signing up for from a time-investment perspective (hours, days, weeks?).

First, I want to support this suggestion:

Responses of this kind will be quite helpful from my perspective, especially if the commenters share a bit about their background as well.

Given that comments of that type do not currently exist, I'd be excited for Holden and/or others in the know to post what their predictions would be if, e.g., some comments were made. What are your expectations for people's time investment and results, especially by background?

I can give a sense of my investment, though I'm obviously an unusual case in multiple ways. I'm a coauthor on the report but I'm not an ARC researcher, and my role as a coauthor was primarily to try to make it more likely that the report would be accessible to a broader audience, which involved making sure my own "dumb questions" were answered in the report.

I kept time logs, and the whole project of coauthoring the report took me ~100 hours. By the end I had one "seed" of an ELK idea but unfortunately didn't flesh it out because other work/life things were pretty hectic. Getting to this "seed" was <30 min of investment.

I think if I had started with the report in hand, it would have taken me ~10 hours to read it carefully enough and ask enough "dumb questions" to get to the point of having the seed of an idea about as good as that idea, and then another ~10 hours to flesh it out into an algorithm + counterexample. I think the probability I'd have won the $5000 prize after that investment is ~50%, making the expected investment 40h. I think there's a non-trivial but not super high chance I'd have won larger prizes, so the $ / hours ratio is significantly better in expectation than $125/hr (since the ceiling for the larger prizes is so much higher).
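The arithmetic behind the 40h and $125/hr figures can be spelled out as follows. This is just a worked restatement of the numbers above, reading "expected investment" as the expected hours per prize won; the variable names are mine.

```python
read_hours = 10        # reading carefully and asking "dumb questions"
flesh_out_hours = 10   # turning the seed into an algorithm + counterexample
p_win = 0.5            # estimated chance of winning the $5000 prize
prize = 5000

hours_per_attempt = read_hours + flesh_out_hours
# With a 50% chance per attempt, a win costs two attempts' worth of hours
# in expectation.
expected_hours_per_prize = hours_per_attempt / p_win
dollars_per_hour = prize / expected_hours_per_prize

print(hours_per_attempt, expected_hours_per_prize, dollars_per_hour)
```

Equivalently, the expected prize from one 20-hour attempt is $2500, which gives the same $125/hr before counting any chance at the larger prizes.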

My background:

Hey Josh, I think this is a good point - it would be great to have some common knowledge of what sort of commitment this is.

Here's where I am so far:

1. I read through the full report reasonably carefully (but only some of the appendices).

2. I spent some time thinking about potential counterexamples. It's hard to say how much; this mostly wasn't time I carved out, more something I was thinking about while taking a walk or something.

3. At times I would reread specific parts of the writeup that seemed important for thinking about whether a particular idea was viable. I wrote up one batch of rough ideas for ARC and got feedback on it.

I would guess that I spent several hours on #1, several hours on #2, and maybe another 2-3 hours on #3. So maybe something like 10-15 hours so far?

At this point I don't think I'm clearly on track to come up with anything that qualifies for a prize, but I think I understand the problem pretty well and why it's hard for me to think of solutions. If I fail to submit a successful entry, I think it will feel more like "I saw what was hard about this and wasn't able to overcome it" than like "I tried a bunch of random stuff, lacking understanding of the challenge, and none of it worked out." This is the main benefit that I wanted.

My background might unfortunately be hard to make much sense of, in terms of how it compares to someone else's. I have next to no formal technical education, but I have spent tons of time talking about AI timelines and AI safety, including with Paul (the head of ARC), and that has included asking questions and reading things about the aspects of machine learning I felt were important for these conversations. (I never wrote my own code or read through a textbook, though I did read Michael Nielsen's guide to neural networks a while ago.) My subjective feeling was that the ELK writeup didn't have a lot of prerequisites - mostly just a very basic understanding of what deep learning is about, and a vague understanding of what a Bayes net is. But I can't be confident in that. (In particular, Bayes nets are generally only used to make examples concrete, and I was generally pretty fine to just go with my rough impression of what was going on; I sometimes found the more detailed appendices, with pseudocode and a Conway's Game of Life analogy, clearer than the Bayes net diagrams anyway.)

Congrats on submitting proposals that would have won you a $15,000 prize if you had been eligible! How long did it take you to come up with these proposals?

Thanks! I'd estimate another 10-15 hours on top of the above, so 20-30 hours total. A good amount of this felt like leisure time and could be done while not in front of a computer, which was nice. I didn't end up with "solutions" I'd be actually excited about for substantive progress on alignment, but I think I accomplished my goal of understanding the ELK writeup well enough to nitpick it.

Note: I work for ARC.

I would consider someone a "pretty good fit" (whatever that means) for alignment research if they started out with a relatively technical background, e.g. an undergrad degree in math/CS, but had not really engaged with alignment before, and they were able to come up with a decent proposal after:

If someone starts from having thought about alignment a bunch, I would consider them a potentially "pretty good researcher" if they were able to come up with a decent proposal in 2-8 hours. I expect many existing (alignment) researchers to be able to come up with proposals in <1 hour.

Note that I'm saying "if (can come up with proposal in N hours), then (might be good alignment researcher)" and not saying the other implication also holds, i.e. it is not the case that "if (might be good alignment researcher), then (can come up with proposal in N hours)."

After becoming very familiar with the ELK report (~5 hours?), it took me one hour to generate a proposal and associated counterexample (the "Predict hypothetical sensors" proposal here), though it wasn't very clean / fleshed out and Paul clarified it a bunch more (after that hour). I haven't checked whether it would have defeated all the counterexamples that existed at the time.

I have a lot of background in CS, ML, AI alignment, etc., but it did not feel to me that I was leveraging that all that much during the one hour of producing a proposal (though I definitely leveraged it a bunch to understand the ELK report in the first place, as well as to produce the counterexample to the proposal).

During the 2 hours I spent reading and skimming through the relevant blog posts, I was able to come up with 2 strategies (and no counterexamples so far). They seem quite intuitive and easy to come up with, so I'm wondering what I got wrong about the ELK problem or the contest...

Due to my low confidence in my understanding, I don't feel comfortable submitting these strategies, as I don't want to waste the ARC team's time.

My background: ML engineer (~7 years of experience), some previous exposure to AGI and AIS research and computer security.

I am pretty confident that ARC would want you to submit those strategies, especially given your background. Even if both trivially fail, it seems useful for them to know that they did not seem obviously ruled out to you by the provided material.

ARC would be excited for you to send a short email to elk@alignmentresearchcenter.org with a few bullet points describing your high level ideas, if you want to get a sense for whether you're on the right track / whether fleshing them out would be likely to win a prize.

Can confirm we would be interested in hearing what you came up with.

I'm a software engineer with about 15 years of experience, but my jobs have had nothing to do with ML apart from occasional stats work. I remember a little about neural networks and Bayes nets from school 20 years ago, enough to follow part 1 of the ELK paper easily (up until Ontology Identification) and much (but not all) of the remainder with difficulty. I occasionally read terrifying essays about AI and would like to help.

I've spent maybe 8 hours with the ELK paper; it was encouraging how much I understood with my current background. I plan to take a day off next week to work on it, expecting to total around 20 hours. I have an idea of where I want to look first, but am dubious that I'll be able to pin down a new idea in that time as I'm not typically very creative. Still, I might get lucky, and updating/refreshing my ML knowledge a bit feels worthwhile in itself.

This is the first time I’ve felt like I might be able to contribute to the AI alignment problem (so maybe you did oversell it!).

I feel like I have a pretty good understanding of everything in this post. I started finding a lot more concepts and vocabulary I didn’t understand when I read about the (counter)examples in the Less Wrong post - to the point where asking questions/doing further research started to seem a bit overwhelming.

It’s possible that this is an indicator that I wouldn’t be a good fit for the contest, or that I need to just suck it up and dig in. Being unsure of which makes me hesitant to take the time to do the latter.

I can’t help but think that some kind of real-time walkthrough with the ability to ask questions would be helpful to me, and perhaps others.

I don’t know if anyone with a full understanding would have enough time for something like that, or if there would be enough other people interested in doing it to make it worthwhile.

I’m imagining it would take the form of something like a couple hour class over Zoom.

The biggest concern I can think of is that it would waste a qualified facilitator’s very valuable time. The expected value would go up, though, with the number of interested attendees.

Maybe it could be contingent on enough people signing up?

I am sure that if you join the AI Alignment Slack [1] or Rob Miles's Discord server [2], or ask questions on LW, you will find people willing to answer.

Finding a dedicated tutor might be harder, but if you can compensate them for their time, the Bountied Rationality Facebook group [3] might be a good place to ask.

[1] https://eahub.org/profile/jj-hepburn/ [2] https://www.patreon.com/posts/patreon-discord-41901653 [3] https://m.facebook.com/groups/bountiedrationality/about/

Thanks for the suggestions!