I think EA Global should be open access. No admissions process. Whoever wants to go can.

I'm very grateful for the work that everyone does to put together EA Global. I know this would add much more work for them. I know it is easy for me, a person who doesn't do the work now and won't have to do the extra work, to say extra work should be done to make it bigger.

But 1,500 people attended the last EAG. Compare this to the 10,000 people at the last American Psychiatric Association conference, or the 13,000 at NeurIPS. EAG isn't small because we haven't discovered large-conference-holding technology. It's small as a design choice. When I talk to people involved, they say they want to project an exclusive atmosphere, or make sure that promising people can find and network with each other.

I think this is a bad tradeoff.

...because it makes people upset

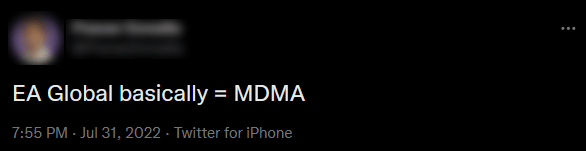

This comment (seen on Kerry Vaughan's Twitter) hit me hard:

A friend describes volunteering at EA Global for several years. Then one year they were told that not only was their help not needed, but they weren't impressive enough to be allowed admission at all. Then later something went wrong and the organizers begged them to come and help after all. I am not sure that they became less committed to EA because of the experience, but based on the look of delight in their eyes when they described rejecting the organizers' plea, it wouldn't surprise me if they did.

Not everyone rejected from EAG feels vengeful. Some people feel miserable. This year I came across the Very Serious Guide To Surviving EAG FOMO:

Part of me worries that, despite its name, it may not really be Very Serious...

...but you can learn a lot about what people are thinking by what they joke about, and I think a lot of EAs are sad because they can't go to EAG.

...because you can't identify promising people.

In early 2020 Kelsey Piper and I gave a talk to an EA student group. Most of the people there were young overachievers who had their entire lives planned out, people working on optimizing which research labs they would intern at in which order throughout their early 20s. They expected us to have useful tips on how to do this.

Meanwhile, in my early 20s, I was making $20,000/year as an intro-level English teacher at a Japanese conglomerate that went bankrupt six months after I joined. In her early 20s, Kelsey was taking leave from college for mental health reasons and babysitting her friends' kid for room and board. If either of us had been in the student group, we would have been the least promising of the lot. And here we were, being asked to advise! I mumbled something about optionality or something, but the real lesson I took away from this is that I don't trust anyone to identify promising people reliably.

...because people will refuse to apply out of scrupulosity.

I do this.

I'm not a very good conference attendee. Faced with the challenge of getting up early on a Saturday to go to San Francisco, I drag my feet and show up an hour late. After a few talks and meetings, I'm exhausted and go home early. I'm unlikely to change my career based on anything anyone says at EA Global, and I don't have any special wisdom that would convince other people to change theirs.

So when I consider applying to EAG, I ask myself whether it's worth taking up a slot that would otherwise go to some bright-eyed college student who has been dreaming of going to EAG for years and is going to consider it the highlight of their life. Then I realize I can't justify bumping that college student, and don't apply.

I used to think I was the only person who felt this way. But a few weeks ago, I brought it up in a group of five people, and two of them said they had also stopped applying to EAG, for similar reasons. I would judge both of them to be very bright and dedicated people, exactly the sort who I think the conference leadership are trying to catch.

In retrospect, "EAs are very scrupulous and sensitive to replaceability arguments" is a predictable failure mode. I think there could be hundreds of people in this category, including some of the people who would benefit most from attending.

...because of Goodhart's Law

If you only accept the most promising people, then you'll only get the people who most legibly conform to your current model of what's promising. But EA is forever searching for "Cause X" and for paradigm-shifting ideas. If you only let people whose work fits the current paradigm sit at the table, you're guaranteed not to get these.

At the 2017 EAG, I attended some kind of reception dinner with wine or cocktails or something. Seated across the table from me was a man who wasn't drinking and who seemed angry about the whole thing. He turned out to be a recovering alcoholic turned anti-alcohol activist. He knew nobody was going to pass Prohibition II or anything; he just wanted to lessen social pressure to drink and prevent alcohol from being the default option - i.e., no cocktail hours. He was able to rattle off some pretty impressive studies about the number of QALYs alcohol was costing and why he thought that reducing social expectations of drinking would be an effective intervention. I didn't end up convinced that this beat out bednets or long-termism, but his argument has stuck with me years later and influenced the way I approach social events.

This guy was a working-class recovering alcoholic who didn't fit the "promising mathematically gifted youngster" model - but that is the single conversation I think about most from that weekend, and ever since then I've taken ideas about "class diversity" and "diversity of ideas" much more seriously.

(even though the last thing we need is for one more category of food/drink to get banned from EA conferences)

...because man does not live by networking alone

In the Facebook threads discussing this topic, supporters of the current process have pushed back: EA Global is a networking event. It should be optimized for making the networking go smoothly, which means keeping out the people who don't want to network or don't have anything to network about. People who feel bad about not being invited are making some sort of category error. Just because you don't have much to network about doesn't make you a bad person!

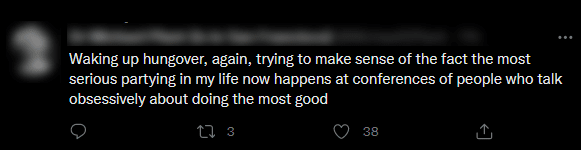

On the other hand, the conference is called "EA Global" and is universally billed as the place where EAs meet one another, learn more about the movement, and have a good time together. Everyone getting urged not to worry because it's just about networking has to spend the weekend watching all their friends say stuff like this:

Some people want to go to EA Global to network. Some people want to learn more about EA and see whether it's right for them. Some people want to update themselves on the state of the movement and learn about the latest ideas and causes. Some people want to throw themselves into the whirlwind and see if serendipity makes anything interesting happen. Some people want to see their friends and party.

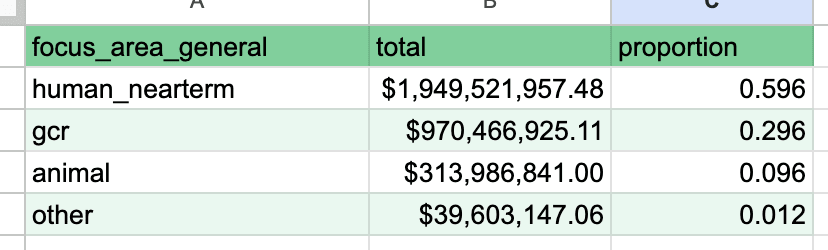

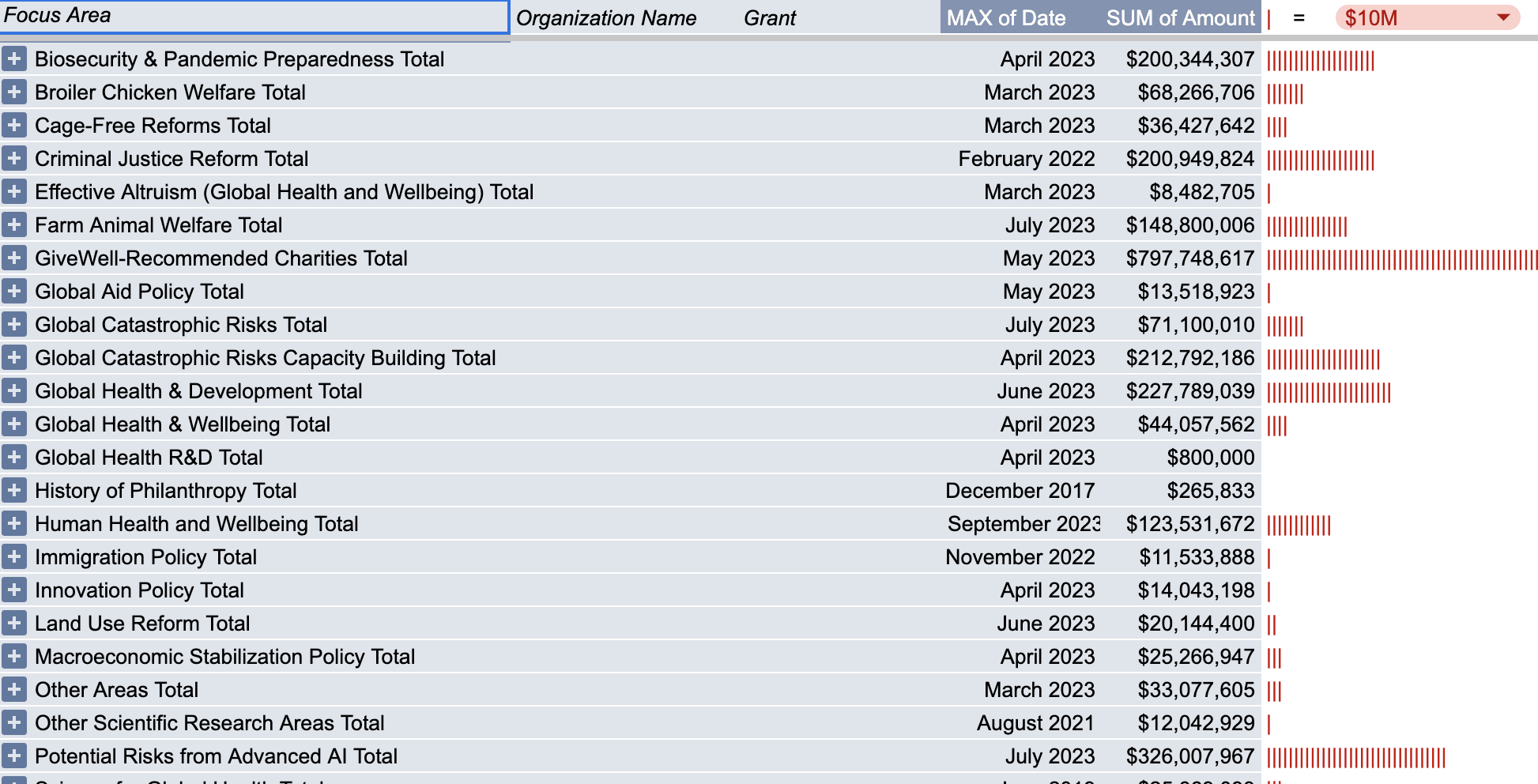

All of these people are valid. Even the last group, the people who just want to see friends and party, are valid. EA spends I-don't-even-know-how-many millions of dollars on community-building each year. And here are people who really want to participate in a great EA event, one that could change their lives and re-energize them, basically the community trying to build itself. And we're telling them no?

...because you can have your cake and eat it too.

There ought to be places for elites to hang out with other elites. There ought to be ways for the most promising people to network with each other. I just don't think these have to be all of EA Global.

For example, what if the conference itself was easy to attend, but the networking app was exclusive? People who wanted to network could apply, the 1,500 most promising could get access to the app, and they could network with each other, same as they do now. Everyone else could just go to the talks or network among themselves.

Or what if EA Global was easy to attend, but there were other conferences - the Special AI Conference, the Special Global Health Conference - that were more selective? Maybe this would even be more useful, since the Global Health people probably don't gain much from interacting with the AI people, and vice versa.

Some people on Facebook worried that they wanted to offer travel reimbursement to attendees, but couldn't afford to scale things up and give 10,000 travel reimbursement packages. So why not have 10,000 attendees, and they can apply for 1,500 travel reimbursement packages which organizers give based on a combination of need and talent? Why not make ordinary attendees pay a little extra, and subsidize even more travel reimbursements?

I don't know, there are probably other factors I don't know about. Still, it would surprise me if, all things being considered, the EA movement would be worse off by giving thousands of extra really dedicated people the chance to attend their main conference each year.

At the closing ceremony of EA Global 2017, Will MacAskill urged attendees to "keep EA weird."

I don't know if we are living up to that. Some of the people who get accepted are plenty weird. Still, I can't help thinking we are failing to fully execute that vision.

Hey everyone, on an admin note I want to announce that I'm stepping in as "Transition Coordinator." Basically, Max wanted to step down immediately, and choosing an ED even on an interim basis might take a bit, so I will be doing the minimal set of ED-like tasks to keep CEA running and start an ED search.

If things go well you shouldn’t even notice that I’m here, but you can reach me at ben.west@centreforeffectivealtruism.org if you would like to contact me personally.

Hey folks, a reminder to please be thoughtful as you comment.

The previous Nonlinear thread received almost 500 comments; many of these were productive, but there were also some more heated exchanges. Following Forum norms—in a nutshell: be kind, stay on topic, be honest—is probably even more important than usual in charged situations like these.

Discussion here could end up warped towards aggression and confusion for a few reasons, even if commenters are generally well intentioned:

Regarding this paragraph from the post:

A short note as a moderator:[1] People (understandably) have strong feelings about discussions that focus on race, and many of us found the content that the post is referencing difficult to read. This means that it's both harder to keep to Forum norms when responding to this, and (I think) especially important.

Please keep this in mind if you decide to engage in a discussion about this, and try to remember that most people on the Forum are here for collaborative discussions about doing good.

If you have any specific concerns, you can also always reach out to the moderation team at forum-moderation@effectivealtruism.org.

Mostly copying this comment from one I made on another post.

I thought we could do a thread for Giving What We Can pledgers and lessons learnt or insights since pledging!

I'll go first: I was actually really worried about how donating 10% would feel, as well as its impact on my finances - but actually it's made me much less stressed about money - to know I can still have a great standard of living with 10% less. It's actually changed the way I see money and finances and has helped me think about how I can increase my giving in future years.

If folks don't mind, a brief word from our sponsors...

I saw Cremer's post and seriously considered this proposal. Unfortunately I came to the conclusion that the parenthetical point about who comprises the "EA community" is, as far as I can tell, a complete non-starter.

My co-founder from Asana, Justin Rosenstein, left a few years ago to start oneproject.org, and that group came to believe sortition (lottery-based democracy) was the best form of governance. So I came to him with the question of how you might define the electorate in the case of a group like EA. He suggests it's effectively not possible to do well other than in the case of geographic fencing (i.e. where people have invested in living) or by alternatively using the entire world population.

I have not myself come up with a non-geographic strategy that doesn't seem highly vulnerable to corrupt intent or vote brigading. Given that the stakes are the ability to control large sums of money, having people stake some of their own (i.e. become "dues-paying" members of some kind) does not seem like a strong enough mitigation. For example, a hostile takeover almost happened to the Sierra Club in SF in 2015 (albeit fo...

It is 2AM in my timezone, and come morning I may regret writing this. By way of introduction, let me say that I dispositionally skew towards the negative, and yet I do think that OP is amongst the best if not the best foundation in its weight class. So this comment generally doesn't compare OP against the rest but against the ideal.

One way in which you could allow for somewhat democratic participation is through futarchy, i.e., using prediction markets for decision-making. This isn't vulnerable to brigading because it requires putting in proportionally more money the more influence you want to have, but at the same time this makes it less democratic.

More realistically, some proposals in that broad direction which I think could actually be implementable could be:

- allowing people to bet against particular Open Philanthropy grants producing successful outcomes.

- allowing people to bet against OP's strategic decisions (e.g., against worldview diversification)

- I'd love to see bets between OP and other organizations about whose funding is more effective, e.g., I'd love to see a bet between you and Jaan Tallinn on whose approach is better, where the winner gets some large amount (e.g., $20

Hi Dustin :)

FWIW I also don't particularly understand the normative appeal of democratizing funding within the EA community. It seems to me like the common normative basis for democracy would tend to argue for democratizing control of resources in a much broader way, rather than within the self-selected EA community. I think epistemic/efficiency arguments for empowering more decision-makers within EA are generally more persuasive, but wouldn't necessarily look like "democracy" per se and might look more like more regranting, forecasting tournaments, etc.

A couple replies imply that my research on the topic was far too shallow and, sure, I agree.

But I do think that shallow research hits different from my POV, where the one person I have worked most closely with across nearly two decades happens to be personally well researched on the topic. What a fortuitous coincidence! So the fact that he said "yea, that's a real problem" rather than "it's probably something you can figure out with some work" was a meaningful update for me, given how many other times we've faced problems together.

I can absolutely believe that a different person, or further investigation generally, would yield a better answer, but I consider this a fairly strong prior rather than an arbitrary one. I also can't point at any clear reference examples of non-geographic democracies that appear to function well and have strong positive impact. A priori, it seems like a great idea, so why is that?

The variations I've seen so far in the comments (like weighing forum karma) increase trust and integrity in exchange for decreasing the democratic nature of the governance, and if you walk all the way along that path you get to institutions.

On behalf of Chloe and in her own words, here’s a response that might illuminate some pieces that are not obvious from Ben’s post - as his post is relying on more factual and object-level evidence, rather than the whole narrative.

“Before Ben published, I found thinking about or discussing my experiences very painful, as well as scary - I was never sure with whom it was safe sharing any of this with. Now that it’s public, it feels like it’s in the past and I’m able to talk about it. Here are some of my experiences I think are relevant to understanding what went on. They’re harder to back up with chatlog or other written evidence - take them as you want, knowing these are stories more than clearly backed up by evidence. I think people should be able to make up their own opinion on this, and I believe they should have the appropriate information to do so.

I want to emphasize *just how much* the entire experience of working for Nonlinear was them creating all kinds of obstacles, and me being told that if I’m clever enough I can figure out how to do these tasks anyway. It’s not actually about whether I had a contract and a salary (even then, the issue wasn’t the amount or even the legali...

I confirm that this is Chloe, who contacted me through our standard communication channels to say she was posting a comment today.

Thank you very much for sharing, Chloe.

Ben, Kat, Emerson, and readers of the original post have all noticed that the nature of Ben's process leads to selection against positive observations about Nonlinear. I encourage readers to notice that the reverse might also be true. Examples of selection against negative information include:

- Ben has reason to exclude stories that are less objective or have a less strong evidence base. The above comment is a concrete example of this.

- There's also something related here about the supposed unreliability of Alice as a source: Ben needs to include this to give a complete picture/because other people (in particular the Nonlinear co-founders) have said this. I strongly concur with Ben when he writes that he "found Alice very willing and ready to share primary sources [...] so I don’t believe her to be acting in bad faith." Personally, my impression is that people are making an incorrect inference about Alice from her characteristics (that are perhaps correlated with source-reliability in a large population, but aren't logically related, and aren't relevant in this case).

- To the extent that you expect other people to have been silenced (e.g. via antici

😬 There's a ton of awful stuff here, but these two parts really jumped out at me. Trying to push past someone's boundaries by imposing a narrative about the type of person they are ('but you're the type of person who loves doing X!' 'you're only saying no because you're the type of person who worries too much') is really unsettling behavior.

I'll flag that this is an old remembered anecdote, and those can be unreliable, and I haven't heard Emerson or Kat's version of events. But it updates me, because Chloe seems like a pretty good source and this puzzle piece seems congruent with the other puzzle pieces.

E.g., the vibe here matches something that creeped me out a lot about Kat's text message to Alice in the OP, which is the apparent attempt to corner/railroad Alice into agreement via a bunch of threats and strongly imposed frames, followed immediately by Kat repeatedly stat...

This sounds like a terribly traumatic experience. I'm so sorry you went through this, and I hope you are in a better place and feel safer now.

Your self-worth is so, so much more than how well you can navigate what sounds like a manipulative, controlling, and abusive work environment.

It sounds like despite all of this, you've tried to be charitable to people who have treated you unfairly and poorly - while this speaks to your compassion, I know this line of thought can often lead to things that feel like you are gaslighting yourself, and I hope this isn't something that has caused you too much distress.

I also hope that Effective Altruism as a community becomes a safer space for people who join it aspiring to do good, and I'm grateful for your courage in sharing your experiences, despite it (very reasonably!...

I’m responding on behalf of the community health team at the Centre for Effective Altruism. We work to prevent and address problems in the community, including sexual misconduct.

I find the piece doesn’t accurately convey how my team, or the EA community more broadly, reacts to this sort of behavior.

We work to address harmful behavior, including sexual misconduct, because we think it’s so important that this community has a good culture where people can do their best work without harassment or other mistreatment. Ignoring problems or sweeping them under the rug would be terrible for people in the community, EA’s culture, and our ability to do good in the world.

My team didn’t have a chance to explain the actions we’ve already taken on the incidents described in this piece. The incidents described here include:

We’ll be going through the piece to see if there are any situations we might be able to address further, but in most of them there’s not enough information to do so. If you ...

There's a lot of discussion here about why things don't get reported to the community health team, and what they're responsible for, so I wanted to add my own bit of anecdata.

I'm a woman who has been closely involved with a particularly gender-imbalanced portion of EA for 7 years, who has personally experienced and secondhand heard about many issues around gender dynamics, and who has never reported anything to the community health team (despite several suggestions from friends to). Now I'm considering why.

Upon reflection, here are a few reasons:

- Early on, some of it was naiveté. I experienced occasional inappropriate comments or situations from senior male researchers when I was a teenager, but assumed that they could never be interested in me because of the age and experience gap. At the time I thought that I must be misinterpreting the situation, and only see it the way I do now with the benefit of experience and hindsight. (I never felt unsafe, and if I had, would have reported it or left.)

- Often, the behavior felt plausibly deniable. "Is this person asking me to meet at a coffeeshop to discuss research or to hit on me? How about meeting at a bar? Going for a walk on the be...

To give a little more detail about what I think gave wrong impressions -

Last year as part of a longer piece about how the community health team approaches problems, I wrote a list of factors that need to be balanced against each other. One that’s caused confusion is “Give people a second or third chance; adjust when people have changed and improved.” I meant situations like “someone has made some inappropriate comments and gotten feedback about it,” not something like assault. I’m adding a note to the original piece clarifying.

What proportion of the incidents described was the team unaware of?

I appreciate you taking the effort to write this. However, like other commentators I feel that if these proposals were implemented, EA would just become the same as many other left-wing social movements, and, as far as I can tell, would basically become the same as standard forms of left-wing environmentalism which are already a live option for people with this type of outlook, and get far more resources than EA ever has. I also think many of the proposals here have been rejected for good reason, and that some of the key arguments are weak.

- You begin by citing the Cowen quote that "EAs couldn't see the existential risk to FTX even though they focus on existential risk". I think this is one of the more daft points made by a serious person on the FTX crash. Although the words 'existential risk' are the same here, they have completely different meanings, one being about the extinction of all humanity or things roughly as bad as that, and the other being about risks to a particular organisation. The problem with FTX is that there wasn't enough attention to existential risks to FTX and the implications this would have for EA. In contrast, EAs have put umpteen pers

I don't think I am a great representative of EA leadership, given my somewhat bumpy relationship and feelings to a lot of EA stuff, but I nevertheless think I have a bunch of the answers that you are looking for:

The Coordination Forum is a very loosely structured retreat that's been happening around once a year. At least the last two that I attended were structured completely as an unconference with no official agenda, and the attendees just figured out themselves who to talk to, and organically wrote memos and put sessions on a shared schedule.

At least as far as I can tell basically no decisions get made at Coordination Forum, and its primary purpose is building trust and digging into gnarly disagreements between different people who are active in EA community building, and who seem to get along well with the others attending (with some bal...

The 15 billion figure comes from Will's text messages themselves (pages 6-7). Will sends Elon a text about how SBF could be interested in going in on Twitter, then Elon Musk asks, "Does he have huge amounts of money?" and Will replies, "Depends on how you define 'huge.' He's worth $24B, and his early employees (with shared values) bump that up to $30B. I asked how much he could in principle contribute and he said: '~1-3 billion would be easy, 3-8 billion I could do, ~8-15b is maybe possible but would require financing.'"

It seems weird to me that EAs would think going in with Musk on a Twitter deal would be worth $3-10 billion, let alone up to 15 (especially of money that at the ti...

Hey, I wanted to clarify that Open Phil gave most of the funding for the purchase of Wytham Abbey (a small part of the costs were also committed by Owen and his wife, as a signal of “skin in the game”). I run the Longtermist EA Community Growth program at Open Phil (we recently launched a parallel program for EA community growth for global health and wellbeing, which I don’t run) and I was the grant investigator for this grant, so I probably have the most context on it from the side of the donor. I’m also on the board of the Effective Ventures Foundation (EVF).

Why did we make the grant? There are two things I’d like to discuss about this, the process we used/context we were in, and our take on the case for the grant. I’ll start with the former.

Process and context: At the time we committed the funding (November 2021, though the purchase wasn’t completed until April 2022), there was a lot more apparent funding available than there is today, both from Open Phil and from the Future Fund. Existential risk reduction and related efforts seemed to us to have a funding overhang, and we were actively looking for more ways to spend money to support more good work, e...

Hi - Thanks so much for writing this. I'm on holiday at the moment so have only been able to quickly skim your post and paper. But, having got the gist, I just wanted to say:

(i) It really pains me to hear that you lost time and energy as a result of people discouraging you from publishing the paper, or that you had to worry over funding on the basis of this. I'm sorry you had to go through that.

(ii) Personally, I'm excited to fund or otherwise encourage engaged and in-depth "red team" critical work on either (a) the ideas of EA, longtermism or strong longtermism, or (b) what practical implications have been taken to follow from EA, longtermism, or strong longtermism. If anyone reading this comment would like funding (or other ways of making their life easier) to do (a) or (b)-type work, or if you know of people in that position, please let me know at will@effectivealtruism.org. I'll try to consider any suggestions, or put the suggestions in front of others to consider, by the end of January.

I'm one of the people (maybe the first person?) who made a post saying that (some of) Kathy's accusations were false. I did this because those accusations were genuinely false, could have seriously damaged the lives of innocent people, and I had strong evidence of this from multiple very credible sources.

I'm extremely prepared to defend my actions here, but prefer not to do it in public in order to not further harm anyone else's reputation (including Kathy's). If you want more details, feel free to email me at scott@slatestarcodex.com and I will figure out how much information I can give you without violating anyone's trust.

I'm glad you made your post about how Kathy's accusations were false. I believe that was the right thing to do -- certainly given the information you had available.

But I wish you had left this sentence out, or written it more carefully:

It was obvious to me reading this post that the author made a really serious effort to stay constructive. (Thanks for that, Maya!) It seems to me that we should recognize that, and you're erasing an important distinction when you categorize the OP with imprudent tumblr call-out posts.

If nothing else, no one is being called out by name here, and the author doesn't link any of the tumblr posts and Reddit threads she refers to.

I don't think causing reputational harm to any individual was the author's intent in writing this. Fear of unfair individual reputational harm from what's written here seems a bit unjustified.

EDIT: After some time to cool down, I've removed that sentence from the comment, and somewhat edited this comment which was originally defending it.

I do think the sentence was true. By that I mean that (this is just a guess, not something I know from specifically asking them) the main reason other people were unwilling to post the information they had, was because they were worried that someone would write a public essay saying "X doesn't believe sexual assault victims" or "EA has a culture of doubting sexual assault victims". And they all hoped someone else would go first to mention all the evidence that these particular rumors were untrue, so that that person could be the one to get flak over this for the rest of their life (which I have, so good prediction!), instead of them. I think there's a culture of fear around these kinds of issues that it's useful to bring to the foreground if we want to model them correctly.

But I think you're gesturing at a point where if I appear to be implicitly criticizing Maya for bringing that up, fewer people will bring things like that up in the future, and even if this particular episode was false, many similar ones will be true, so her bri...

I want to strong agree with this post, but a forum glitch is preventing me from doing so, so mentally add +x agreement karma to the tally. [Edit: fixed and upvoted now]

I have also heard from at least one very credible source that at least one of Kathy's accusations had been professionally investigated and found without any merit.

Maybe also worth adding that the way she wrote the post would in a healthy person be intentionally misleading, and was at least incredibly careless for the strength of accusation. E.g., there was some line to the effect of 'CFAR are involved in child abuse', where the claim was link-highlighted in a way that strongly suggested corroborating evidence but, as in that paraphrase, the link in fact just went directly to whatever the equivalent website was then for CFAR's summer camp.

It's uncomfortable berating the dead, but much more important to preserve the living from incredibly irresponsible aspersions like this.

Hi Carla and Luke, I was sad to hear that you and others were concerned that funders would be angry with you or your institutions for publishing this paper. For what it's worth, raising these criticisms wouldn't count as a black mark against you or your institutions in any funding decisions that I make. I'm saying this here publicly in case it makes others feel less concerned that funders would retaliate against people raising similar critiques. I disagree with the idea that publishing critiques like this is dangerous / should be discouraged.

+1 to everything Nick said, especially the last sentence. I'm glad this paper was published; I think it makes some valid points (which doesn't mean I agree with everything), and I don't see the case that it presents any risks or harms that should have made the authors consider withholding it. Furthermore, I think it's good for EA to be publicly examined and critiqued, so I think there are substantial potential harms from discouraging this general sort of work.

Whoever told you that funders would be upset by your publishing this piece, they didn't speak for Open Philanthropy. If there's an easy way to ensure they see this comment (and Nick's), it might be helpful to do so.

+1, EA Funds (which I run) is interested in funding critiques of popular EA-relevant ideas.

This was incredibly upsetting for me to read. This is the first time I've ever felt ashamed to be associated with EA. I apologize for the tone of the rest of the comment, can delete it if it is unproductive, but I feel a need to vent.

One thing I would like to understand better is to what extent this is a bay area issue versus EA in general. My impression is that a disproportionate fraction of abuse happens in the bay. If this suspicion is true, I don't know how to put this politely, but I'd really appreciate it if the bay area could get its shit together.

In my spare time I do community building in Denmark. I will be doing a workshop for the Danish academy of talented high school students in April. How do you imagine the academy organizers will feel seeing this in TIME magazine?

What should I tell them? "I promise this is not an issue in our local community"?

I've been extremely excited to prepare this event. I would get to teach Denmark's brightest high schoolers about hierarchies of evidence, help them conduct their own cost-effectiveness analyses, and hopefully inspire a new generation to take action to make the world a better place.

Now I have to worry about whether it would be more appropriate to send the organizers a heads up informing them about the article and give them a chance to reconsider working with us.

I frankly feel unequipped to deal with something like this.

A response to why a lot of the abuse happens in the Bay Area:

"I am one of the people in the Time Mag article about sexual violence in EA. In the video below I clarify some points about why the Bay Area is the epicenter of so many coercive dynamics, including the hacker house culture, which are like frat houses backed by billions in capital, but without oversight of HR departments or parent institutions. This frat house/psychedelic/male culture, where a lot of professional networking happens, creates invisible glass ceilings for women."

tweet: https://twitter.com/soniajoseph_/status/1622002995020849152

Hi! I listened to your entire video. It was very brave and commendable. I really hope you've started something that will help get EA and the Bay Area rationalist scene into a much healthier and more impactful place. I think your analysis of the problem is very sharp. Thank you for coming forward and doing what you did.

Zooming out from this particular case, I’m concerned that our community is both (1) extremely encouraging and tolerant of experimentation and poor, undefined boundaries and (2) very quick to point the finger when any experiment goes wrong. If we don’t want to have strict professional norms I think it’s unfair to put all the blame on failed experiments without updating the algorithm that allows people to embark on these experiments with community approval.

To be perfectly clear, I think this community has poor professional boundaries and a poor understanding of why normie boundaries exist. I would like better boundaries all around. I don’t think we get better boundaries by acting like a failure like this is due to character or lack of integrity instead of bad engineering. If you wouldn’t have looked at it before it imploded and thought the engineering was bad, I think that’s the biggest thing that needs to change. I’m concerned that people still think that if you have good enough character (or are smart enough, etc), you don’t need good boundaries and systems.

Yep, I think this is a big problem.

More generally, I think a lot of EAs give lip service to the value of people trying weird new ambitious things, "adopt a hits-based approach", "if you're never failing then you're playing it too safe", etc.; but then we harshly punish visible failures, especially ones that are the least bit weird. In cases like those, I think the main solution is to be more forgiving of failures, rather than to give up on ambitious projects.

From my perspective, none of this is particularly relevant to what bothers me about Ben's post and Nonlinear's response. My biggest concern about Nonlinear is their attempt to pressure people into silence (via lawsuits, bizarre veiled threats, etc.), and "I really wish EAs would experiment more with coercing and threatening each other" is not an example of the kind of experimentalism I'm talking about when I say that EAs should be willing to try and fail at more things (!).

"Keep EA weird" does not entail "have low ethical standards... (read more)

It's fair enough to feel betrayed in this situation, and to speak that out.

But given your position in the EA community, I think it's much more important to put effort towards giving context on your role in this saga.

Some jumping-off points:

- Did you consider yourself to be in a mentor / mentee relationship with SBF prior to the founding of FTX? What was the depth and cadence of that relationship?

- e.g. from this Sequoia profile (archived as they recently pulled it from their site):

- What diligence did you / your team do on FTX before agreeing to join the Future Fund as an advisor?

... (read more)

"The math, MacAskill argued, means that if one’s goal is to optimize one’s life for doing good, often most good can be done by choosing to make the most money possible—in order to give it all away. “Earn to give,” urged MacAskill.

... And MacAskill—Singer’s philosophical heir—had the answer: The best way for him to maximize good in the world would be to maximize his wealth. SBF listened, nodding, as MacAskill made his pitch. The earn-to-give logic was airtight. It was, SBF realized, applied utilitarianism. Knowing what he had to do, SBF simply said, “Yep. That makes sense.”"

[Edit after months: While I still believe these are valid questions, I now think I was too hostile, overconfident, and not genuinely curious enough.] One additional thing I’d be curious about:

You played the role of a messenger between SBF and Elon Musk in a bid for SBF to invest up to 15 billion of (presumably mostly his) wealth in an acquisition of Twitter. The stated reason for that bid was to make Twitter better for the world. This has worried me a lot over the last weeks. It could have easily been the most consequential thing EAs have ever done and there has, to my knowledge, never been a thorough EA debate that signalled that this would be a good idea.

What was the reasoning behind the decision to support SBF by connecting him to Musk? How many people from FTXFF or EA at large were consulted to figure out if that was a good idea? Do you think that it still made sense at the point you helped with the potential acquisition to regard most of the wealth of SBF as EA resources? If not, why did you not inform the EA community?

Source for claim about playing a messenger: https://twitter.com/tier10k/status/1575603591431102464?s=20&t=lYY65-TpZuifcbQ2j2EQ5w

I don't think EAs should necessarily require a community-wide debate before making major decisions, including investment decisions; sometimes decisions should be made fast, and often decisions don't benefit a ton from "the whole community weighs in" over "twenty smart advisors weighed in".

But regardless, seems interesting and useful for EAs to debate this topic so we can form more models of this part of the strategy space -- maybe we should be doing more to positively affect the world's public fora. And I'd personally love to know more about Will's reasoning re Twitter.

I think it's important to note that many experts, traders, and investors did not see this coming, or they could have saved/made billions.

It seems very unfair to ask fund recipients to significantly outperform the market and most experts, while having access to way less information.

See this Twitter thread from Yudkowsky

Edit: I meant to refer to fund advisors, not (just) fund recipients

Also from the Sequoia profile: "After SBF quit Jane Street, he moved back home to the Bay Area, where Will MacAskill had offered him a job as director of business development at the Centre for Effective Altruism." It was precisely at this time that SBF launched Alameda Research, with Tara Mac Aulay (then the president of CEA) as a co-founder (https://www.bloomberg.com/news/articles/2022-07-14/celsius-bankruptcy-filing-shows-long-reach-of-sam-bankman-fried).

To what extent was Will or any other CEA figure involved with launching Alameda and/or advising it?

One specific question I would want to raise is whether EA leaders involved with FTX were aware of or raised concerns about non-disclosed conflicts of interest between Alameda Research and FTX.

For example, I strongly suspect that EAs tied to FTX knew that SBF and Caroline (CEO of Alameda Research) were romantically involved (I strongly suspect this because I have personally heard Caroline talk about her romantic involvement with SBF in private conversations with several FTX fellows). Given the pre-existing concerns about the conflicts of interest between Alameda Research and FTX (see examples such as these), if this relationship were known to be hidden from investors and other stakeholders, should this not have raised red flags?

Hi Scott — I work for CEA as the lead on EA Global and wanted to jump in here.

Really appreciate the post — having a larger, more open EA event is something we’ve thought about for a while and are still considering.

I think there are real trade-offs here. An event that’s more appealing to some people is more off-putting to others, and we’re trying to get the best balance we can. We’ve tried different things over the years, which can lead to some confusion (since people remember messaging from years ago) but also gives us some data about what worked well and badly when we’ve tried more open or more exclusive events.

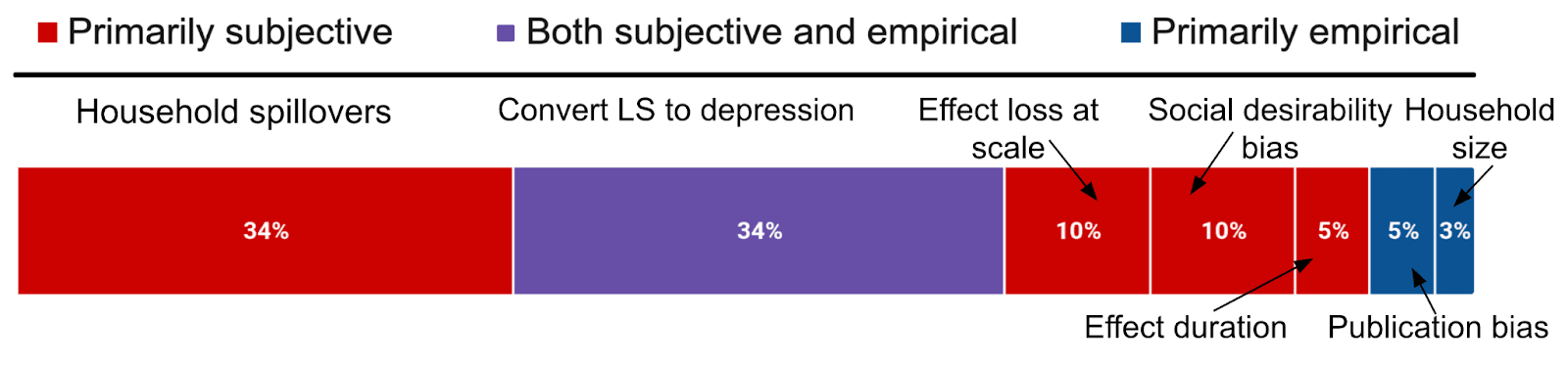

- We’ve asked people’s opinion on this. When we’ve polled our advisors including leaders from various EA organizations, they’ve favored more selective events. In our most recent feedback surveys, we’ve asked attendees whether they think we should have more attendees. For SF 2022, 34% said we should increase the number, 53% said it should stay the same, and 14% said it should be lower. Obviously there’s selection bias here since these are the people who got in, though.

- To your “...because people will refuse to apply out of scrupulosity” point — I want to clarify tha

... (read more)

FWIW I generally agree with Eli's reply here. I think maybe EAG should 2x or 3x in size, but I'd lobby for it to not be fully open.

Thanks for commenting, Eli.

I'm a bit confused by one of your points here. You say: "I want to clarify that this isn’t how our admissions process works, and neither you nor anyone else we accept would be bumping anyone out of a spot". OK, cool.

However, when I received my acceptance email to EAG it included the words "If you find that you can’t make it to the event after all, please let us know so that we can give your spot to another applicant."

That sure sounds like a request that you make when you have a limited number of spots and accepting one person means bumping another.

To be clear, I think it's completely reasonable to have a set number of places - logistics are a thing, and planning an event for an unknown number of people is extremely challenging. I'm just surprised by your statement that it doesn't work that way.

I also want to make a side note that I strongly believe that making EA fun is important. The movement asks people to give away huge amounts of money, reorient their whole careers, and dedicate themselves to changing the world. Those are big asks! It's very easy for people to just not do them!

It's hard to get people to voluntarily do even small, easy things when they feel unappreciated or excluded. I agree that making EAs happy is not and should not be a terminal value but it absolutely should be an instrumental value.

The timeline (in PT time zone) seems to be:

Jan 13, 12:46am: Expo article published.

Jan 13, 4:20am: First mention of this on the EA Forum.

Jan 13, 6:46am: Shakeel Hashim (speaking for himself and not for CEA; +110 karma, +109 net agreement as of the 15th) writes, "If this is true it's absolutely horrifying. FLI needs to give a full explanation of what exactly happened here and I don't understand why they haven't. If FLI did knowingly agree to give money to a neo-Nazi group, that’s despicable. I don't think people who would do something like that ought to have any place in this community."

Jan 13, 9:18pm: Shakeel follows up, repeating ~~that he sees no reason why FLI wouldn't have already made a public statement~~ that it's really weird that FLI hasn't already made a public statement, and raises the possibility that FLI has maybe done sinister questionably-legal things and that's why they haven't spoken up.

Jan 14, 3:43am: You (titotal) comment, "If the letter is genuine (and they have never denied that it is), then someone at FLI is either grossly incompetent or malicious. They need to address this ASAP."

Jan 14, 8:16am: Jason comments (+15 karma, +13 net agreement a... (read more)

Thanks for calling me out on this — I agree that I was too hasty to call for a response.

I’m glad that FLI has shared more information, and that they are rethinking their procedures as a result of this. This FAQ hasn’t completely alleviated my concerns about what happened here — I think it’s worrying that something like this can get to the stage it did without it being flagged (though again, I'm glad FLI seems to agree with this). And I also think that it would have been better if FLI had shared some more of the FAQ info with Expo too.

I do regret calling for FLI to speak up sooner, and I should have had more empathy for the situation they were in. I posted my comments not because I wanted to throw FLI under the bus for PR reasons, but because I was feeling upset; coming on the heels of the Bostrom situation I was worried that some people in the EA community were racist or at least not very sensitive about how discussions of race-related things can make people feel. At the time, I wanted to do my bit to make it clear — in particular to other non-white people who felt similarly to me — that EA isn’t racist. But I could and should have done that in a much better way. I’m sorry.

FTX collapsed on November 8th; all the key facts were known by the 10th; CEA put out their statement on November 12th. This is a totally reasonable timeframe to respond. I would have hoped that this experience would make CEA sympathetic to a fellow EA org (with much less resources than CEA) experiencing a media crisis rather than being so quick to condemn.

I'm also not convinced that a Head of Communications, working for an organization with a very restrictive media policy for employees, commenting on a matter of importance for that organization, can really be said to be operating in a personal capacity. Despite claims to the contrary, I think it's pretty reasonable to interpret these as official CEA communications. Skill at a PR role is as much about what you do not say as what you do.

The eagerness with which people rushed to condemn is frankly a warning sign for involution. We have to stop it with the pointless infighting or it's all we will end up doing.

Maya, I’m so sorry that things have made you feel this way. I know you’re not alone in this. As Catherine said earlier, either of us (and the rest of the community health team) are here to talk and try to support.

I agree it’s very important that no one should get away with mistreating others because of their status, money, etc. One of the concerns you raise related to this is an accusation that Kathy Forth made. When Kathy raised concerns related to EA, I investigated all the cases where she gave me enough information to do so. In one case, her information allowed me to confirm that a person had acted badly, and to keep them out of EA Global.

At one point we arranged for an independent third party attorney who specialized in workplace sexual harassment claims to investigate a different accusation that Kathy made. After interviewing Kathy, the accused person, and some other people who had been nearby at the time, the investigator concluded that the evidence did not support Kathy’s claim about what had happened. I don’t think Kathy intended to misrepresent anything, but I think her interpretation of what happened was different than what most people’s would have been.

I do want pe... (read more)

I’m part of Anima International’s leadership as Director of Global Development (so please note that Animal Charity Evaluators’ negative view of the leadership quality is, among others, about me).

As the author noted, this topic is politically charged and additionally, as Anima International, we consider ourselves ‘a side’, so our judgment here may be heavily biased. This is why, even though we read this thread, we are quite hesitant to comment.

Nevertheless, I can offer a few factual points here that will clear some of the author’s confusion or that people got wrong in the comments.

We asked ACE for their thoughts on these points to make sure we are not misconstruing what happened due to a biased perspective. After a short conversation with Anima International, ACE preferred not to comment. They declined to correct what they feel is factually incorrect and instead let us know that they will post a reply to my post to avoid confusion, which we welcome.

1.

The author wrote: “it's possible that some Anima staff made private comments that are much worse than what is public”

While I don’t want to comment or judge whether comments are better or worse, we specifically asked ACE to publish all... (read more)

As AI heats up, I'm excited and frankly somewhat relieved to have Holden making this change. While I agree with 𝕮𝖎𝖓𝖊𝖗𝖆's comment below that Holden had a lot of leverage on AI safety in his recent role, I also believe he has a vast amount of domain knowledge that can be applied more directly to problem solving. We're in shockingly short supply of that kind of person, and the need is urgent.

Alexander has my full confidence in his new role as the sole CEO. I consider us incredibly fortunate to have someone like him already involved and prepared to succeed as the leader of Open Philanthropy.

I know that lukeprog's comment is mostly replying to the insecurity about lack of credentials in the OP. Still, the most upvoted answer seems a bit ironic in the broader context of the question:

If you read the comment without knowing Luke, you might be like "Oh yeah, that sounds encouraging." Then you find out that he wrote this excellent 100++ page report on the neuroscience of consciousness, which is possibly the best resource on this on the internet, and you're like "Uff, I'm f***ed."

Luke is (tied with Brian Tomasik) the most genuinely modest person I know, so it makes sense that it seems to him like there's a big gap between him and even smarter people in the community. And there might be, maybe. But that only makes the whole situation even more intimidating.

It's a tough spot to be in and I only have advice that maybe helps make the situation tolerable, at least.

Related to the advice about Stoicism, I recommend viewing EA as a game with varying levels of difficulty.

... (read more)

While I understand that people generally like Owen, I believe we need to ensure that we are not overlooking the substance of his message and giving him an overly favorable response.

Owen's impropriety may be extensive. Just because one event was over 5 years ago, does not mean that the other >=3 events were (and if they were, one expects he would tell us). Relatedly, if it indeed was the most severe mistake of this nature, there may have been more severe mistakes of somewhat different kinds. There may yet be further events that haven't yet been reported to, or disclosed by Owen, and indeed, on the outside view, most events would not be suchly reported.

What makes things worse is the kind of career Owen has pursued over the last 5+ years. Owen's work centered on: i) advising orgs and funders, ii) hiring junior researchers, and iii) hosting workshops, often residential, and with junior researchers. If as Owen says, you know as of 2021-22 that you have deficiencies in dealing with power dynamics, and there have been a series of multiple events like this, then why are you still playing the roles described in (i-iii)? His medium term career trajectory, even relative to other EAs, is in... (read more)

I want to make a small comment on your phrase "it could have a chilling effect on those who have their own cases of sexual assault to report." Owen has not committed sexual assault, but sexual harassment. If this imperfect wording was an isolated incident, I wouldn't have said anything, but in every sexual misconduct comment thread I've followed on the forum, people have said sexual assault when they mean sexual harassment, and/or rape when they mean sexual assault. I was a victim of sexual abuse both growing up and as an adult, so I'm aware that there are big differences between the three, and feel it would be helpful to be mindful of our wording.

As someone with a fairly upvoted comment expressing a different perspective than yours, I want to mention that personally I had never heard of Owen until this post except for the disturbing description in the Time article, and that personally I have no interest in advancing my career based on any of my political opinions, so his power is irrelevant to me. While I appreciate that the last section of your comment came from a place of wanting to be supportive towards early career people like me, I think it oversimplifies the issues and found it a bit condescending. I’m trying to encourage women in my position to speak up more because we have important things to say.

I think it's likely that the difference in the replies to this post and the replies to the official statement by EV UK are from people not reading the link in the EV UK post, and so not getting the full context of the statement.

Edit: Also, if I was trying to impress Owen, wouldn't I be agreeing with his current perspective instead of arguing that he had over-updated?

With apologies, I would like to share some rather lengthy comments on the present controversy. My sense is that they likely express a fairly conventional reaction. However, I have not yet seen any commentary that entirely captures this perspective. Before I begin, I perhaps also ought to apologise for my decision to write anonymously. While none of my comments here are terribly exciting, I would like to think, I hope others can still empathise with my aversion to becoming a minor character in a controversy of this variety.

Q: Was the message in question needlessly offensive and deserving of an apology?

Yes, it certainly was. By describing the message as "needlessly offensive," what I mean to say is that, even if Prof. Bostrom was committed to making the same central point that is made in the message, there was simply no need for the point to be made in such an insensitive manner. To put forward an analogy, it would be needlessly offensive to make a point about free speech by placing a swastika on one’s shirt and wearing it around town. This would be a highly insensitive decision, even if the person wearing the swastika did not hold or intend to express any of the views associated wit... (read more)

For context, I'm black (Nigerian in the UK).

I'm just going to express my honest opinions here:

The events of the last 48 hours (slightly) raised my opinion of Nick Bostrom. I was very relieved that Bostrom did not compromise his epistemic integrity by expressing more socially palatable views that are contrary to those he actually holds.

I think it would be quite tragic to compromise honestly/accurately reporting our beliefs when the situation calls for it to fit in better. I'm very glad Bostrom did not do that.

As for the contents of the email itself, while very untasteful, they were sent in a particular context to be deliberately offensive and Bostrom did regret it and apologise for it at the time. I don't think it's useful/valuable to judge him on the basis of an email he sent a few decades ago as a student. The Bostrom that sent the email did not reflectively endorse its contents, and current Bostrom does not either.

I'm not interested in a discussion on race & IQ, so I deliberately avoided addressing that.

I. It might be worth reflecting upon how large a part of this seems tied to something like "climbing the EA social ladder".

E.g. just from the first part, emphasis mine

Replace "EA" by some other environment with prestige gradients, and you have something like a highly generic social climbing guide. Seek cool kids, hang around them, go to exclusive parties, get good at signalling.

II. This isn't to say this is bad. Climbing the ladder to some extent could be instrumentally useful, or even necessary, for an ability to do some interesting things, sometimes.

III. But note the hidden costs. Climbing the social ladder can trade off against building things. Learning all the Berkeley vibes can trade off against, e.g., learning the math actually useful for understanding agen... (read more)

Turning to the object level: I feel pretty torn here.

On the one hand, I agree the business with CARE was quite bad and share all the standard concerns about SJ discourse norms and cancel culture.

On the other hand, we've had quite a bit of anti-cancel-culture stuff on the Forum lately. There's been much more of that than of pro-SJ/pro-DEI content, and it's generally got much higher karma. I think the message that the subset of EA that is highly active on the Forum generally disapproves of cancel culture has been made pretty clearly.

I'm sceptical that further content in this vein will have the desired effect on EA and EA-adjacent groups and individuals who are less active on the Forum, other than to alienate them and promote a split in the movement, while also exposing EA to substantial PR risk. I think a lot of more SJ-sympathetic EAs already feel that the Forum is not a space for them – simply affirming that doesn't seem to me to be terribly useful. Not giving ACE prior warning before publishing the post further cements an adversarial us-and-them dynamic I'm not very happy about.

I don't really know how that cashes out as far as this post and posts like it are concerned. Biting one's tongue about what does seem like problematic behaviour would hardly be ideal. But as I've said several times in the past, I do wish we could be having this discussion in a more productive and conciliatory way, which has less of a chance of ending in an acrimonious split.

I agree with the content of your comment, Will, but feel a bit unhappy with it anyway. Apologies for the unpleasantly political metaphor, but as an intuition pump imagine the following comment.

"On the one hand, I agree that it seems bad that this org apparently has a sexual harassment problem. On the other hand, there have been a bunch of posts about sexual misconduct at various orgs recently, and these have drawn controversy, and I'm worried about the second-order effects of talking about this misconduct."

I guess my concern is that it seems like our top priority should be saying true and important things, and we should err on the side of not criticising people for doing so.

More generally I am opposed to "Criticising people for doing bad-seeming thing X would put off people who are enthusiastic about thing X."

Another take here is that if a group of people are sad that their views aren't sufficiently represented on the EA forum, they should consider making better arguments for them. I don't think we should try to ensure that the EA forum has proportionate amounts of pro-X and anti-X content for all X. (I think we should strive to evaluate content fairly; this involves not being more or less enthusiastic about content about views based on its popularity (except for instrumental reasons like "it's more interesting to hear arguments you haven't heard before").)

EDIT: Also, I think your comment is much better described as meta level than object level, despite its first sentence.

"On the other hand, we've had quite a bit of anti-cancel-culture stuff on the Forum lately. There's been much more of that than of pro-SJ/pro-DEI content, and it's generally got much higher karma. I think the message that the subset of EA that is highly active on the Forum generally disapproves of cancel culture has been made pretty clearly"

Perhaps. However, this post makes specific claims about ACE. And even though these claims have been discussed somewhat informally on Facebook, this post provides a far more solid writeup. So it does seem to be making a significantly new contribution to the discussion and not just rewarming leftovers.

It would have been better if Hypatia had emailed the organisation ahead of time. However, I believe ACE staff members might have already commented on some of these issues (correct me if I'm wrong). And it's more of a good practice than a strict requirement - I totally understand the urge to just get something out there.

"I'm sceptical that further content in this vein will have the desired effect on EA and EA-adjacent groups and individuals who are less active on the Forum, other than to al... (read more)

Over the course of me working in EA for the last 8 years I feel like I've seen about a dozen instances where Will made quite substantial tradeoffs where he traded off both the health of the EA community, and something like epistemic integrity, in favor of being more popular and getting more prestige.

Some examples here include:

- When he was CEO while I was at CEA he basically didn't really do his job at CEA but handed off the job to Tara (who was a terrible choice for many reasons, one of which is that she then co-founded Alameda and after that went on to start another fraudulent-seeming crypto trading firm as far as I can tell). He then spent like half a year technically being CEO but spending all of his time being on book tours and talking to lots of high net-worth and high-status people.

- I think Doing Good Better was already substantially misleading about the methodology that the EA community has actually historically used to find top interventions. Indeed it was very "randomista" flavored in a way that I think really set up a lot of people to be disappointed when they encountered EA and then realized that actual cause prioritization is nowhere close to this formalized an

... (read more)

Fwiw I have little private information but think that:

Also thanks Habryka for writing this. I think surfacing info like this is really valuable and I guess it has personal costs to you.

Epistemic status: Probably speaking too strongly in various ways, and probably not with enough empathy, but also feeling kind of lonely and with enough pent-up frustration about how things have been operating that I want to spend some social capital on this, and want to give a bit of a "this is my last stand" vibe.

It's been a few more days, and I do want to express frustration with the risk-aversion and guardedness I have experienced from CEA and other EA organizations in this time. I think this is a crucial time to be open, and to stop playing dumb PR games that are, in my current tentative assessment of the situation, one of the primary reasons why we got into this mess in the first place.

I understand there is some legal risk, and I am trying to track it myself quite closely. I am also worried that you are trying to run a strategy of "try to figure out everything internally and tell nice narratives about where we are all at afterwards", and I think the trouble that strategy has already gotten us into is so great that I don't think now is the time to double down on it.

Please, people at CEA and other EA organizations, come and talk to the community. Explore with us what ... (read more)

I feel there's a bit of a "missing mood" in some of the comments here, so I want to say:

I felt shocked, hurt, and betrayed at reading this. I never expected the Oxford incident to involve someone so central and well-regarded in the community, and certainly not Owen. Other EAs I know who knew Owen and the Oxford scene better are even more deeply hurt and surprised by this. (As other commenters here have already attested, tears have not been uncommon.)

Despite the length and thoughtfulness of the apology, it's difficult for me to see how someone who was already in a position of power and status in EA -- a community many of us see as key to the future of humanity -- behaved in a way that seems so inappropriate and destructive. I'm angry not only at the harm that was done to women trying to do good in the world, but also to the health, reputation, and credibility of our community. We deserve better from our leaders.

I really sympathize with all the EAs -- especially women -- who feel betrayed and undermined by this news. To all of you who've had bad experiences like this in EA -- I'm really sorry. I hope we can do better. I think we can do better -- I think we already have the seed... (read more)

I appreciate you writing this. To me, this clarifies something. (I'm sorry, there's a rant incoming, and if this community needs its hand held through these particular revelations, I'm not the one):

It seems like many EAs still (despite SBF) didn't put significant probability on the person from that particular Time incident being a very well-known and trusted man in EA, such as Owen. This despite the SBF scandal and despite (to me) this incident being the most troubling incident in the Time piece by far which definitely sounded to be attached to a "real" EA more than any of the others (I say as someone who still has significant problems with the Time piece). Some of us had already put decent odds on the probability that this was an important figure doing something that was at least thoughtless and ended up damaging the EA movement... I mean the woman who reported him literally tried to convey that he was very well-connected and important.

It seems like the community still has a lot to learn from the surprise of SBF about problematic incidents and leaders in general: No one expects their friends or leaders are gonna be the ones who do problematic things. That includes us. Update no... (read more)

[Epistemic status: I've done a lot of thinking about these issues previously; I am a female mathematician who has spent several years running mentorship/support groups for women in my academic departments and has also spent a few years in various EA circles.]

I wholeheartedly agree that EA needs to improve with respect to professional/personal life mixing, and that these fuzzy boundaries are especially bad for women. I would love to see more consciousness and effort by EA organizations toward fixing these and related issues. In particular I agree with the following:

> Not having stricter boundaries for work/sex/social in mission focused organizations brings about inefficiency and nepotism [...]. It puts EA at risk of alienating women / others due to reasons that have nothing to do with ideological differences.

However, I can't endorse the post as written, because there's a lot of claims made which I think are wrong or misleading. Like: Sure, there are poly women who'd be happier being monogamous, but there are also poly men who'd be happier being monogamous, and my own subjective impression is that these are about equally common. Also, "EA/rationalism and redpill fit like yin and y... (read more)

We (the Community Health team at CEA) would like to share some more information about the cases in the TIME article, and our previous knowledge of these cases. We’ve put these comments in the approximate order that they appear in the TIME article.

Re: Gopalakrishnan’s experiences

We read her post with concern. We saw quite a few supportive messages from community members, and we also tried to offer support. Our team also reached out to Gopalakrishnan in a direct message to ask if she was interested in sharing more information with us about the specific incidents.

Re: The man who

We don’t know this person’s identity for sure, but one of these accounts resembles a previous public accusation made against a person who used to be involved in the rationality community. He has been banned from CEA events for almost 5 years, and we understand he has been banned from some other EA spaces. He has been a critic of the EA movemen... (read more)

Brief update: I am still in the process of reading this. At this point I have given the post itself a once-over, and begun to read it more slowly (and looking through the appendices as they're linked).

I think any and all primary sources that Kat provides are good (such as the page of records of transactions). I am also grateful that they have not deanonymized Alice and Chloe.

I plan to compare the things that this post says directly against specific claims in mine, and acknowledge anything where I was factually inaccurate. I also plan to do a pass where I figure out which claims of mine this post responds to and which it doesn’t, and I want to reflect on the new info that’s been entered into evidence and how it relates to the overall picture.

It probably goes without saying that I (and everyone reading) want to believe true things and not false things about this situation. If I made inaccurate statements I would like to know that and correct them.

As I wrote in my follow-up post, I am not intending to continue spear-heading an investigation into Nonlinear. However this post makes some accusations of wrongdoing on my part, which I intend to respond to, and of course for... (read more)

I had missed that; thank you for pointing it out!

While using quotation marks for paraphrase or when recounting something as best as you recall is occasionally done in English writing, primarily in casual contexts, I think it's a very poor choice for this post. Lots of people are reading this trying to decide who to trust, and direct quotes and paraphrase have very different weight. Conflating them, especially in a way where many readers will think the paraphrases are direct quotes, makes it much harder for people to come away from this document with a more accurate understanding of what happened.

Perhaps using different markers (ex: "«" and "»") for paraphrase would make sense here?

I am one of the people mentioned in the article. I'm genuinely happy with the level of compassion and concern voiced in most of the comments on this article. Yes, while a lot of the comments are clearly concerned that this is a hard and difficult issue to tackle, I’m appreciative of the genuine desire of many people to do the right thing here. It seems that at least some of the EA community has a drive towards addressing the issue and improving from it rather than burying the issue as I had feared.

A couple of points, my spontaneous takeaways upon reading the article and the comments:

- This article covers bad actors in the EA space, and how hard it is to protect the community from them. This doesn't mean that all of EA is toxic, but rather the article is bringing to light the fact that bad actors have been tolerated and even defended in the community to the detriment of their victims. I'm sensing from the comments that non-Bay Area EA may have experienced less of this phenomenon. If you read this article and are absolutely shocked and disgusted, then I think you experienced a different selection of EA than I have. I know many of my peers will read this article and feel unc

...

I feel like this post mostly doesn't talk about what feels to me like the most substantial downside of trying to scale up spending in EA and of the increased availability of funding.

I think the biggest risk of the increased availability of funding, and general increase in scale, is that it will create a culture where people will be incentivized to act more deceptively towards others and that it will attract many people who will be much more open to deceptive action in order to take resources we currently have.

Here are some paragraphs from an internal memo I wrote a while ago that tried to capture this:

========

...

Reading this, I guess I'll just post the second half of this memo that I wrote here as well, since it has some additional points that seem valuable to the discussion:

...

James courteously shared a draft of this piece with me before posting. I really appreciate that, as well as his substantive, constructive feedback.

1. I blundered

The first thing worth acknowledging is that he pointed out a mistake that substantially changes our results. And for that, I’m grateful. It goes to show the value of having skeptical external reviewers.

He pointed out that Kemp et al., (2009) finds a negative effect, while we recorded its effect as positive — meaning we coded the study as having the wrong sign.

What happened is that MH outcomes are often "higher = bad", and subjective wellbeing is "higher = better", so we note this in our code so that all effects that imply benefits are positive. What went wrong was that we coded Kemp et al., (2009), which used the GHQ-12 as "higher = bad" (which is usually the case) when the opposite was true. Higher equalled good in this case because we had to do an extra calculation to extract the effect [footnote: since there was baseline imbalance in the PHQ-9, we took the difference in pre-post changes], which flipped the sign.
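To make the sign-coding issue concrete, here is a minimal sketch of the kind of harmonization step described above. It is not our actual analysis code: the `StudyEffect` class, the `harmonize` function, and the numbers are hypothetical and only illustrate how a wrong direction flag turns a negative effect into a positive one.

```python
from dataclasses import dataclass


@dataclass
class StudyEffect:
    name: str
    raw_effect: float       # effect as extracted from the study (hypothetical value)
    higher_is_better: bool  # direction of the extracted quantity


def harmonize(study: StudyEffect) -> float:
    """Return the effect under the convention 'positive = benefit'."""
    # Flip the sign only when higher scores mean worse outcomes.
    return study.raw_effect if study.higher_is_better else -study.raw_effect


# Same extracted effect, two different direction flags (illustrative numbers only).
correctly_coded = StudyEffect("example study", raw_effect=-0.2, higher_is_better=True)
miscoded = StudyEffect("example study", raw_effect=-0.2, higher_is_better=False)

print(harmonize(correctly_coded))  # stays negative: the study implies harm
print(harmonize(miscoded))         # flipped to positive: wrongly recorded as a benefit
```

The direction flag describes the quantity that was actually extracted, not the instrument in general, so an extra calculation such as a difference in pre-post changes can reverse the direction even when the scale itself is usually "higher = bad".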

This correction would reduce the spillover effect from 53% to 38% and reduce the cost-effectiveness comparison from 9.5... (read more)

Strong upvote for both James and Joel for modeling a productive way to do this kind of post -- show the organization a draft of the post first, and give them time to offer comments on the draft + prepare a comment for your post that can go up shortly after the post does.

Thank you Max for your years of dedicated service at CEA. Under your leadership as Executive Director, CEA grew significantly, increased its professionalism, and reached more people than it had before. I really appreciate your straightforward but kind communication style, humility, and eagerness to learn and improve. I'm sorry to see you go, and wish you the best of luck in whatever comes next.

Predictably, I disagree with this in the strongest possible terms.

If someone says false and horrible things to destroy other people's reputation, the story is "someone said false and horrible things to destroy other people's reputation". Not "in some other situation this could have been true". It might be true! But discussion around the false rumors isn't the time to talk about that.

Suppose the shoe was on the other foot, and some man (Bob), made some kind of false and horrible rumor about a woman (Alice). Maybe he says that she only got a good position in her organization by sleeping her way to the top. If this was false, the story isn't "we need to engage with the ways Bob felt harmed and make him feel valid." It's not "the Bob lied lens is harsh and unproductive". It's "we condemn these false and damaging rumors". If the headline story is anything else, I don't trust the community involved one bit, and I would be terrified to be associated with it.

I understand that sexual assault is especially scary, and that it may seem jarring to compare it to less serious accusations like Bob's. But the original post says we need to express emotions more, and I wanted to try to convey an emot... (read more)

I think a very relevant question to ask is: how come none of the showy self-criticism contests and red-teaming exercises came up with this? A good amount of time, money, and energy was put into such things, and if the exercises are not in fact uncovering the big problems lurking in the movement, then that suggests some issues.

If this comment is more about "how could this have been foreseen", then this comment thread may be relevant. I should note that hindsight bias means that it's much easier to look back and assess problems as obvious and predictable ex post, when powerful investment firms and individuals who also had skin in the game also missed this.

TL;DR:

1) There were entries that were relevant (this one also touches on it briefly)

2) They were specifically mentioned

3) There were comments relevant to this. (notably one of these was apparently deleted because it received a lot of downvotes when initially posted)

4) There have been at least two other posts on the forum prior to the contest that engaged with this specifically

My tentative take is that these issues were in fact identified by various members of the community, but there isn't a good way of turning identified issues into constructive actions - the status quo is we just have to trust that organisations have good systems in place for this, and that EA leaders are sufficiently careful and willing to make changes or consider them seriously, such that all the community needs to do is "raise the issue". And I think looking at the system... (read more)

Am I right in thinking that, if it weren't for the Time article, there's no reason to think that Owen would ever have been investigated and/or removed from the board?

While this is all being sorted and we figure out what is next, I would like to emphasize wishes of wellness and care for the many impacted by this.