All of DM's Comments + Replies

I broadly agree with this and have also previously made a case for Wikipedia editing on the Forum: https://forum.effectivealtruism.org/posts/FebKgHaAymjiETvXd/wikipedia-editing-is-important-tractable-and-neglected

As a caveat, there are some nuances to Wikipedia editing to make sure you're following community standards, which I've tried to lay out in my post. In particular, before investing a lot of time writing a new article, you should check if someone else tried that before and/or if the same content is already covered elsewhere. For example, there have been previous unsuccessful efforts to create an 'Existential risk' Wikipedia article. Those attempts failed in part because relevant content is already covered on the 'Global catastrophic risks' article.

One other relevant resource I'd recommend is Will and Toby's joint keynote speech at the 2016 EA Global conference in San Francisco. It discusses some of the history of EA (focusing on the Oxford community in particular) and some historical precursors: https://youtu.be/VH2LhSod1M4

I enjoyed reading this and would love to see more upbeat and celebratory posts like this. The EA community is very self-critical (which is good!) but we shouldn't lose sight of all the awesome things community members accomplish.

I recently had to make an important and urgent career decision and found it tremendously valuable to speak with several dozen wonderful people about this at EA Global SF. I'm immensely grateful both to the people giving me advice and to CEA for organizing my favorite EA Global yet.

Going very broad, I'd recommend going through the EA Forum Topics Wiki and considering the concepts included there. Similarly, you may look at the posts that make up the EA Handbook and look for suitable concepts there.

For inspiration, here are some other examples of TEDx talks given by EAs:

1. Beth Barnes (2015): "Effective Altruism"

2. Gabriella Overödder (2019): "How Using Science Can Radically Increase Your Social Impact"

3. Linh Chi Nguyen (2020): "5 Lessons for choosing an impactful career"

Feel free to add others below that I'm not aware of.

From a quick search:

Choosing for effective altruism | Helen Toner | TEDxUniMelb

Sustainable Practice: Reconciling Art and Effective Altruism | Kev Nemelka | TEDxBerkleeValencia

Want to Change the World? Start with Your Career! | EA | Amarins Veringa | TEDxLeidenUniversity

Thinking like an effective altruist: Roxanne Heston at TEDxTU

What’s the world’s most pressing problem | Stefan Torges | TEDxFS

For how much can you save a life? | Amon Elders | TEDxYouth@Maastricht

At the risk of self-promotion, I wrote a motivational essay on EA a few years ago, Framing Effective Altruism as Overcoming Indifference.

Toby Ord explains several related distinctions very clearly in his paper 'The Edges of Our Universe'. Highly recommended: https://arxiv.org/abs/2104.01191

Copied from my post: Notes on "The Myth of the Nuclear Revolution" (Lieber & Press, 2020)

...I recently completed a graduate school class on nuclear weapons policy, where we read the 2020 book “The Myth of the Nuclear Revolution: Power Politics in the Atomic Age” by Keir A. Lieber and Daryl G. Press. It is the most insightful nuclear security book I have read to date and while I disagree with some of the book’s outlook and conclusions, it is interesting and well written. The book is also very accessible and fairly short (180 pages). In sum, I believe more

In "The Definition of Effective Altruism", William MacAskill writes that

"Effective altruism is often considered to simply be a rebranding of utilitarianism, or to merely refer to applied utilitarianism...It is true that effective altruism has some similarities with utilitarianism: it is maximizing, it is primarily focused on improving wellbeing, many members of the community make significant sacrifices in order to do more good, and many members of the community self-describe as utilitarians.

But this is very different from effective altruism being the...

The following paper is relevant: Pummer & Crisp (2020). Effective Justice, Journal of Moral Philosophy, 17(4):398-415.

From the abstract:

"Effective Justice, a possible social movement that would encourage promoting justice most effectively, given limited resources. The latter minimal view reflects an insight about justice, and our non-diminishing moral reason to promote more of it, that surprisingly has gone largely unnoticed and undiscussed. The Effective Altruism movement has led many to reconsider how best to help others, but relatively little ...

Great video, I was very happy seeing Kurzgesagt promote this frame! Also relevant: https://forum.effectivealtruism.org/posts/ckPSrWeghc4gNsShK/good-news-on-climate-change

Great post! While I agree with your main claims, I believe the numbers for the multipliers (especially in aggregate and for ex ante impact evaluations) are nowhere near as extreme in reality as your article suggests for the reasons that Brian Tomasik elaborates on in these two articles:

(i) Charity Cost-Effectiveness in an Uncertain World

(ii) Why Charities Usually Don't Differ Astronomically in Expected Cost-Effectiveness

I mostly agree; the uncertain flow-through effects of giving socks to one's colleagues totally overwhelm the direct impact and are probably at least 1/1000 as big as the effects of being a charity entrepreneur (when you take the expected value according to our best knowledge right now). If Ana is trying to do good by donating socks, instead of saying she's doing 1/20,000,000th the good she could be, perhaps it's more accurate to say that she has an incorrect theory of change and is doing good (or harm) by accident.

I think the direct impacts of the best int...

Excellent post! I really appreciate your proposal and framing for a book on utilitarianism. In line with your point, William MacAskill and Richard Yetter Chappell also perceived a lack of accessible, modern, and high-quality resources on utilitarianism (and related ideas), motivating the creation of utilitarianism.net, an online textbook on utilitarianism. The website has been getting a lot of traction over the past year, and it's still under development (including plans to experiment with non-text media and translations into other languages).

Thanks for working on this website, it's a great idea!

Possible additions to your list of books (I've only read the first one so forgive me if they aren't as good/relevant as I think they are):

- Utilitarianism: A Very Short Introduction (de Lazari Radek and Singer)

- The Methods of Ethics (Sidgwick - I'm told quite long and dense though)

- The Point of View of the Universe: Sidgwick and Contemporary Ethics (de Lazari Radek and Singer)

- Utilitarianism: A Guide for the Perplexed (Bykvist 2010)

- Understanding Utilitarianism (Mulgan 2014)

- Hedonistic Utilitarianism (Tännsjö

Strong upvote! I found reading this very interesting and the results seem potentially quite useful to inform EA community building efforts.

Hi Timothy, it's great that you found your way here! There's a vibrant German EA community (including an upcoming conference in Berlin in September/October that you may want to join).

Regarding your university studies, I essentially agree with Ryan's comment. However, while studying in the UK and US can be great, I appreciate that doing so may be daunting and financially infeasible for many young Germans. If you decide to study in Germany and are more interested in the social sciences than in the natural sciences, I would encourage you (like Ryan) to ...

I want to express my deep gratitude to you, Patrick, for running EA Radio for all these years! 🙏 Early in my EA involvement (2015-16), I listened to all the EA Radio talks available at the time and found them very valuable.

In my experience, many EAs have a fairly nuanced perspective on technological progress and aren't unambiguous techno-optimists.

For instance, a substantial fraction of the community is very concerned about the potential negative impacts of advanced technologies (AI, biotech, solar geoengineering, cyber, etc.) and actively works to reduce the associated risks.

Moreover, some people in the community have promoted the idea of "differential (technological) progress" to suggest that we should work to (i) accelerate risk-reducing, welfare-enhancing tec...

Utilitarianism.net has also recently published an article on Arguments for Utilitarianism, written by Richard Yetter Chappell. (I'm sharing this article since it may interest readers of this post)

Thanks, it's valuable to hear your more skeptical view on this point! I included it after several reviewers of my post brought it up, and I still think it was probably worth including as one of several potential self-interested benefits of Wikipedia editing.

I was mainly trying to draw attention to the fact that it is possible to link a Wikipedia user account to a real person and that it is worth considering whether to include it in certain applications (something I've done in previous applications). I still think Wikipedia editing is a decent signal ...

Thanks for this comment, Michael! I agree with all the points you make and should have been more careful to compare Wikipedia editing against the alternatives (I began doing this in an earlier draft of this post and then cut it because it became unwieldy).

In my experience, few EAs I've talked to have ever seriously considered Wikipedia editing. Therefore, my main objective with this post was to get more people to recognize it as one option of something valuable they might do with a part of their time; I wasn't trying to argue that Wikipedia editing i...

I strongly agree that we should learn our lessons from this incident and seriously try to avoid any repetition of something similar. In my view, the key lessons are something like:

- It's probably best to avoid paid Wikipedia editing

- It's crucial to respect the Wikipedia community's rules and norms (I've really tried to emphasize this heavily in this post)

- It's best to really approach Wikipedia editing with a mindset of "let's look for actual gaps in quality and coverage of important articles" and avoid anything that looks like promotional editing

I think it wou...

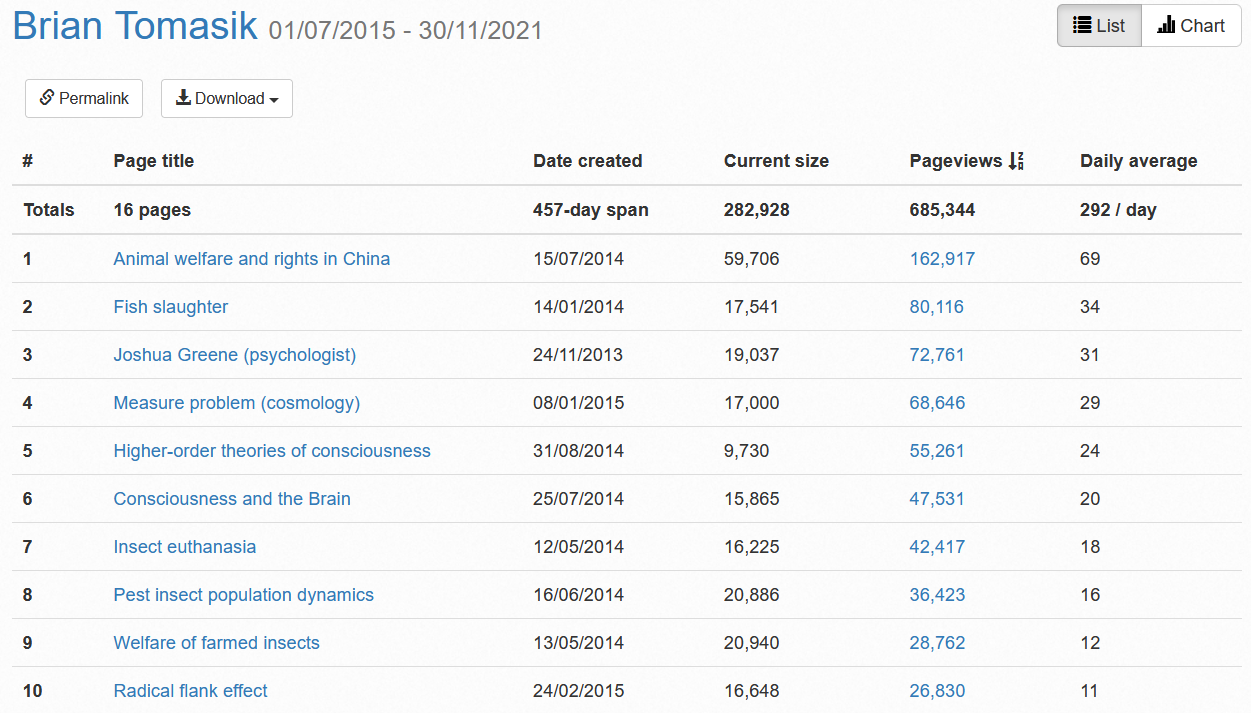

As an example, look at this overview of the Wikipedia pages that Brian Tomasik has created and their associated pageview numbers (screenshot of the top 10 pages below). The pages created by Brian mostly cover very important (though fringe) topics and attract ~ 100,000 pageviews every year. (Note that this overview ignores all the pages that Brian has edited but didn't create himself.)

Someone (who is not me) just started a proposal for a WikiProject on Effective Altruism! To be accepted, this proposal will need to be supported by at least 6-12 active Wikipedia editors. If you're interested in contributing to such a WikiProject, please express "support" for the proposal on the proposal page.

The proposal passed!! Everyone who's interested should add themselves as a participant on the official wikiproject! https://en.wikipedia.org/wiki/Wikipedia:WikiProject_Effective_Altruism

This is the best tool I know of to get an overview of Wikipedia article pageview counts (as mentioned in the post); the only limitation with it is that pageview data "only" goes back to 2015.

Create a page on biological weapons. This could include, for instance,

- An overview of offensive BW programs over time (when they were started, stopped, funding, staffing, etc.; perhaps with a separate section on the Soviet BW program)

- An overview of different international treaties relating to BW, including timelines and membership over time (i.e., the Geneva Protocol, the Biological Weapons Convention (BWC), Australia Group, UN Security Council Resolution 1540)

- Submissions of Confidence-Building Measures in the BWC over time (including as a percentage of the

(This does sound useful, though I'd note this is also a relatively sensitive area and OWID are - thankfully! - a quite prominent site, so OWID may wish to check in with global catastrophic biorisk researchers regarding whether anything they'd intend to include on such a page might be best left out.)

Thank you! Just FYI, on (6) we have:

For many people interested in but not yet fully committed to biosecurity, it may make more sense to choose a more general master's program in international affairs/security and then concentrate on biosecurity/biodefense to the extent possible within their program.

Some of the best master's programs to consider to this end:

- Georgetown University: MA in Security Studies (Washington, DC; 2 years)

- Johns Hopkins University: MA in International Relations (Washington, DC; 2 years)

- Stanford University: Master's in International Policy (2 years)

- King's College Lon

Georgetown University offers a 2-semester MSc in "Biohazardous Threat Agents & Emerging Infectious Diseases". Course description from the website: "a one year program designed to provide students with a solid foundation in the concepts of biological risk, disease threat, and mitigation strategies. The curriculum covers classic biological threats agents, global health security, emerging diseases, technologies, CBRN risk mitigation, and CBRN security."

Website traffic was initially low (21k pageviews by 9k unique visitors from March to December 2020) but has since been gaining steam (40k pageviews by 20k unique visitors in 2021 to date) as the website's search performance has improved. We expect traffic to continue growing significantly as we add more content, gather more backlinks, and rise in the search rankings. For comparison, the Wikipedia article on utilitarianism has received ~ 480k pageviews in 2021 to date, which suggests substantial room for growth for utilitarianism.net.

I'm not sure what counts as 'astronomically' more cost effective, but if it means ~1000x more important/cost-effective I might agree with (ii).

This may be the crux - I would not count a ~ 1000x multiplier as anywhere near "astronomical" and should probably have made this clearer in my original comment.

Claim (i), that the value of the long-term (in terms of lives, experiences, etc.) is astronomically larger than the value of the near-term, refers to differences in value of something like 10^30x.

All my comment was meant to say is that it seems hi...

I'd like to point to the essay Multiplicative Factors in Games and Cause Prioritization as a relevant resource for the question of how we should apportion the community's resources across (longtermist and neartermist) causes:

...TL;DR: If the impacts of two causes add together, it might make sense to heavily prioritize the one with the higher expected value per dollar. If they multiply, on the other hand, it makes sense to more evenly distribute effort across the causes. I think that many causes in the effective altruism sphere interact more multip
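As a rough illustration of the additive versus multiplicative point in the quoted TL;DR, here is a toy sketch in Python. Everything in it is an assumption made for illustration and is not taken from the essay: the function name `best_split`, the per-dollar values `a` and `b`, the linear returns within each cause, and the simple grid search over budget shares.

```python
def best_split(value, a=3.0, b=1.0, steps=100):
    """Return the budget share for cause A (between 0 and 1) that maximizes
    value(impact_a, impact_b), where impact_a = a * share and
    impact_b = b * (1 - share).

    a and b are hypothetical per-dollar values; returns within each cause
    are assumed to be linear, which is a strong simplification.
    """
    shares = [i / steps for i in range(steps + 1)]
    return max(shares, key=lambda x: value(a * x, b * (1 - x)))


def additive(impact_a, impact_b):
    # Impacts of the two causes simply add together.
    return impact_a + impact_b


def multiplicative(impact_a, impact_b):
    # Impacts of the two causes multiply.
    return impact_a * impact_b


add_share = best_split(additive)
mult_share = best_split(multiplicative)
print("additive model, best share for cause A:", add_share)
print("multiplicative model, best share for cause A:", mult_share)
```

In this toy setup the additive model puts the whole budget into the cause with the higher per-dollar value, while the multiplicative model splits the budget evenly even though cause A is assumed to be three times as valuable per dollar. Real causes presumably sit somewhere between these two extremes, so this is only meant to make the intuition concrete.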

Please see my above response to jackmalde's comment. While I understand and respect your argument, I don't think we are justified in placing high confidence in this model of the long-term flowthrough effects of near-term targeted interventions. There are many similar more-or-less plausible models of such long-term flowthrough effects, some of which would suggest a positive net effect of near-term targeted interventions on the long-term future, while others would suggest a negative net effect. Lacking strong evidence that would allow us to accurately ...

Yep, not placing extreme weight. Just medium levels of confidence that, when summed over, add up to something pretty low or maybe mildly negative. I definitely am not like 90%+ confident that the flowthrough effects are negative.

No, we probably don’t. All of our actions plausibly affect the long-term future in some way, and it is difficult to (be justified to) achieve very high levels of confidence about the expected long-term impacts of specific actions. We would require an exceptional degree of confidence to claim that the long-term effects of our specific longtermist intervention are astronomically (i.e. by many orders of magnitude) larger than the long-term effects of some random neartermist interventions (or even doing nothing at all). Of course, this claim is perfectly...

Agreed, I'd love this feature! I also frequently rely on pageview statistics to prioritize which Wikipedia articles to improve.

There is a big difference between (i) the very plausible claim that the value of the long-term (in terms of lives, experiences, etc.) is astronomically larger than the value of the near-term, and (ii) the rather implausible claim that interventions targeted at improving the long-term are astronomically more important/cost-effective than those targeted at improving the near-term. It seems to me that many longtermists believe (i) but that almost no-one believes (ii).

Basically, in this context the same points apply that Brian Tomasik made in his essay "Why Ch...

I tentatively believe (ii), depending on some definitions. I'm somewhat surprised to see Ben and Darius implying it's a really weird view, and it makes me wonder what I'm missing.

I don't want the EA community to stop working on all non-longtermist things. But the reason is because I think many of those things have positive indirect effects on the EA community. (I just mean indirect effects on the EA community, and maybe on the broader philanthropic community, I don't mean indirect effects more broadly in the sense of 'better health in poor countries' --> '...

It seems to me that many longtermists believe (i) but that almost no-one believes (ii).

Really? This surprises me. Combine (i) with the belief that we can tractably influence the far future and don't we pretty much get to (ii)?

I really appreciated the many useful links you included in this post and would like to encourage others to strive to do the same when writing EA Forum articles.

Happy to have you here, Linda! It sounds like you have some really important skills to offer, and I hope you will find great opportunities to apply them.

The listed application documents include a "Short essay (≤500 words)" without further details. Can you say more about what this entails and what you are looking for?

Are non-US citizens who hold a US work authorization disadvantaged in the application process even if they seek to enter a US policy career (and perhaps aim to become naturalized eventually)?

There is Eliezer Yudkowsky's Harry Potter fan fiction "Harry Potter and the Methods of Rationality" (HPMOR), which conveys many ideas and concepts that are relevant to EA: http://www.hpmor.com/

Please note that there is also a fan-produced audio version of HPMOR: https://hpmorpodcast.com/

Great initiative! Unfortunately, I cannot seem to find the podcast on either of my two podcast apps (BeyondPod and Podcast Addict). Do you plan to make the podcast available across all major platforms?

Awesome episode! I really enjoyed listening to it when it came out and was excited for Sam's large audiences across Waking Up and Making Sense to learn about EA in this way.
