All of MikeJohnson's Comments + Replies

Great post, and agreed about the dynamics involved. I worry the current EA synthesis has difficulty addressing this class of criticism (power corrupts, transactional donations, geeks/mops/sociopaths), but perhaps we haven’t seen EA’s final form.

As a small comment, I believe discussions of consciousness and moral value tend to downplay the possibility that most consciousness may arise outside of what we consider the biological ecosystem.

It feels a bit silly to ask “what does it feel like to be a black hole, or a quasar, or the Big Bang,” but I believe a proper theory of consciousness should have answers to these questions.

We don’t have that proper theory. But I think we can all agree that these megaphenomena involve a great deal of matter/negentropy and plausibly some interesting self-organized microstructure, though that’s purely conjecture. If we’re charting out EV, let’s keep the truly big numbers in mind (even if we don’t know how to count them yet).

Thank you for this list.

#2: I left a comment on Matthew’s post that I feel is relevant: https://forum.effectivealtruism.org/posts/CRvFvCgujumygKeDB/my-current-thoughts-on-the-risks-from-seti?commentId=KRqhzrR3o3bSmhM7c

#16: I gave a talk for Mathematical Consciousness Science in 2020 that covers some relevant items: I’d especially point to 7,8,9,10 in my list here: https://opentheory.net/2022/04/it-from-bit-revisited/

#18+#20: I feel these are ultimately questions for neuroscience, not psychology. We may need a new sort of neuroscience to address...

I posted this as a comment to Robin Hanson’s “Seeing ANYTHING Other Than Huge-Civ Is Bad News” —

————

I feel these debates are too agnostic about the likely telos of aliens (whether grabby or not). Being able to make reasonable conjectures here will greatly improve our a priori expectations and our interpretation of available cosmological evidence.

Premise 1: Eventually, civilizations progress until they can engage in megascale engineering: Dyson spheres, etc.

Premise 2: Consciousness is the home of value: Disneyland with no children is valueless.

Premise 2.1: ...

Great, thank you for the response.

On (3) — I feel AI safety as it’s pursued today is a bit disconnected from other fields such as neuroscience, embodiment, and phenomenology. I.e. the terms used in AI safety don’t try to connect to the semantic webs of affective neuroscience, embodied existence, or qualia. I tend to take this as a warning sign: all disciplines ultimately refer to different aspects of the same reality, and all conversations about reality should ultimately connect. If they aren’t connecting, we should look for a synthesis such that they do.

T...

- What do you see as Aligned AI’s core output, and what is its success condition? What do you see the payoff curve being — i.e. if you solve 10% of the problem, do you get [0%|10%|20%] of the reward?

- I think a fresh AI safety approach may (or should) lead to fresh reframes on what AI safety is. Would your work introduce a new definition for AI safety?

- Value extrapolation may be intended as a technical term, but intuitively these words also seem inextricably tied to both neuroscience and phenomenology. How do you plan on interfacing with these fields? What key

I consistently enjoy your posts, thank you for the time and energy you invest.

Robin Hanson is famous for critiques in the form of “X isn’t about X, it’s about Y.” I suspect many of your examples may fit this pattern. To wit, Kwame Appiah wrote that “in life, the challenge is not so much to figure out how best to play the game; the challenge is to figure out what game you’re playing.” Andrew Carnegie, for instance, may have been trying to maximize status, among his peers or his inner mental parliament. Elon Musk may be playing a complicated game with SpaceX...

Most likely infectious diseases also play a significant role in aging; I have seen some research suggesting that major health inflection points are often associated with an infection.

I like your post and strongly agree with the gist.

DM me if you’re interested in brainstorming alternatives to the vaccine paradigm (which seems to work much better for certain diseases than others).

Generally speaking, I agree with the aphorism “You catch more flies with honey than vinegar;”

For what it’s worth, I interpreted Gregory’s critique as an attempt to blow up the conversation and steer away from the object level, which felt odd. I’m happiest speaking of my research, and fielding specific questions about claims.

Welcome, thanks for the good questions.

Asymmetries in stimuli seem crucial for getting patterns through the “predictive coding gauntlet.” I.e., that which can be predicted can be ignored. We demonstrably screen perfect harmony out fairly rapidly.

The crucial context for STV on the other hand isn’t symmetries/asymmetries in stimuli, but rather in brain activity. (More specifically, as we’re currently looking at things, in global eigenmodes.)

With a nod back to the predictive coding frame, it’s quite plausible that the stimuli that create the most internal sym...

Hi Abby, I understand. We can just make the best of it.

1a. Yep, definitely. Empirically we know this is true from e.g. Kringelbach and Berridge’s work on hedonic centers of the brain; what we’d be interested in looking into would be whether these areas are special in terms of network control theory.

1c. I may be getting ahead of myself here: the basic approach to testing STV we intend is looking at dissonance in global activity. Dissonance between brain regions likely contributes to this ‘global dissonance’ metric. I’m also interested in measuring dissonance...

Good catch; there’s plenty that our glossary does not cover yet. This post is at 70 comments now, and I can just say I’m typing as fast as I can!

I pinged our engineer (who has taken the lead on the neuroimaging pipeline work) about details, but as the collaboration hasn’t yet been announced I’ll err on the side of caution in sharing.

To Michael — here’s my attempt to clarify the terms you highlighted:

- Neurophysiological models of suffering try to dig into the computational utility and underlying biology of suffering

-> existing theories talk about what emo...

Hi Abby, thanks for the questions. I have direct answers to 2,3,4, and indirect answers to 1 and 5.

1a. Speaking of the general case, we expect network control theory to be a useful frame for approaching questions of why certain sorts of activity in certain regions of the brain are particularly relevant for valence. (A simple story: hedonic centers of the brain act as ‘tuning knobs’ toward or away from global harmony. This would imply they don’t intrinsically create pleasure and suffering, merely facilitate these states.) This paper from the Bassett lab is ...

Hi Mike,

Thanks again for your openness to discussion, I do appreciate you taking the time. Your responses here are much more satisfying and comprehensible than your previous statements, it's a bit of a shame we can't reset the conversation.

1a. I am interpreting this as you saying there are certain brain areas that, when activated, are more likely to result in the experience of suffering or pleasure. This is the sort of thing that is plausible and possible to test.

1b. I think you are making a mistake by thinking of the brain like a musical inst...

Hi Samuel, I think it’s a good thought experiment. One prediction I’ve made is that one could make an agent such as that, but it would be deeply computationally suboptimal: it would be a system that maximizes disharmony/dissonance internally, but seeks out consonant patterns externally. Possible to make but definitely an AI-complete problem.

Just as an idle question, what do you suppose the natural kinds of phenomenology are? I think this can be a generative place to think about qualia in general.

Hi Abby,

I feel we’ve been in some sense talking past each other from the start. I think I bear some of the responsibility for that, based on how my post was written (originally for my blog, and more as a summary than an explanation).

I’m sorry for your frustration. I can only say I’m not intentionally trying to frustrate you, but that we appear to have very different styles of thinking and writing and this may have caused some friction, and I have been answering object-level questions from the community as best I can.

I really appreciate you putting it like this, and endorse everything you wrote.

I think sometimes researchers can get too close to their topics and collapse many premises and steps together; they sometimes sort of ‘throw away the ladder’ that got them where they are, to paraphrase Wittgenstein. This can make it difficult to communicate to some audiences. My experience on the forum this week suggests this may have happened to me on this topic. I’m grateful for the help the community is offering on filling in the gaps.

Hi Samuel,

I’d say there’s at least some diversity of views on these topics within QRI. When I introduced STV in PQ, I very intentionally did not frame it as a moral hypothesis. If we’re doing research, best to keep the descriptive and the normative as separate as possible. If STV is true it may make certain normative frames easier to formulate, but STV itself is not a theory of morality or ethics.

One way to put this is that when I wear my philosopher’s hat, I’m most concerned about understanding what the ‘natural kinds’ (in Plato’s terms) of qualia are. If...

Hi all, I messaged with Holly a bit about this, and what she shared was very helpful. I think a core part of what happened was a mismatch of expectations: I originally wrote this content for my blog and QRI’s website, and the tone and terminology were geared toward “home team content”, not “away team content”. Some people found both the confidence and the somewhat dense terminology off-putting, and I think it’s reasonable of them to raise questions. As a takeaway, I’ve updated that crossposting involves some pitfalls and intend to do things differently next time.

I take Andrés’s point to be that there’s a decently broad set of people who took a while to see merit in STV, but eventually did. One can say it’s an acquired taste, something that feels strange and likely wrong at first, but is surprisingly parsimonious across a wide set of puzzles. Some of our advisors approached STV with significant initial skepticism, and it took some time for them to come around. That there are at least a few distinguished scientists who like STV isn’t proof it’s correct, but may suggest withholding some forms of judgment.

Andrés’s STV presentation to Imperial College London’s psychedelics research group is probably the best public resource I can point to on this right now. I can say after these interactions it’s much more clear that people hearing these claims are less interested in the detailed structure of the philosophical argument, and more in the evidence, and in a certain form of evidence. I think this is very reasonable and it’s something we’re finally in a position to work on directly: we spent the last ~year building the technical capacity to do the sorts of studies we believe will either falsify or directly support STV.

Hi Holly, I’d say the format of my argument there would be enumeration of claims, not e.g. trying to create a syllogism. I’ll try to expand and restate those claims here:

A very important piece of this is assuming there exists a formal structure (formalism) to consciousness. If this is true, STV becomes a lot more probable. If it isn’t, STV can’t be the case.

Integrated Information Theory (IIT) is the most famous framework for determining the formal structure to an experience. It does so by looking at the causal relationships between components of a system; ...

I feel like your explanations are skipping a bunch of steps that would help folks understand where you're coming from. FWIW, here's how I make sense of STV:

- Neuroscience can tell us that some neurons light up when we eat chocolate, but it doesn't tell us what it is about the delicious experience of chocolate that makes it so wonderful. "This is what sugar looks like" and "this is the location of the reward center" are great descriptions of parts of the process, but they don't explain why certain patterns of neural activations feel a certain way.

- Everyone agr

Just a quick comment in terms of comment flow: there’s been a large amount of editing of the top comment, and some of the replies that have been posted may not seem to follow the logic of the comment they’re attached to. If there are edits to a comment that you wish me to address, I’d be glad if you made a new comment. (If you don’t, I don’t fault you but I may not address the edit.)

Hi Charles, I think several people (myself, Abby, and now Greg) were put in some pretty uncomfortable positions across these replies. By posting, I open myself to replies, but I was pretty surprised by some of the energy of the initial comments (as apparently were others; both Abby and I edited some of our comments to be less confrontational, and I’m happy with and appreciate that).

Happy to answer any object level questions you have that haven’t been covered in other replies, but this remark seems rather strange to me.

Hi Michael, I appreciate the kind effortpost, as per usual. I’ll do my best to answer.

- This is a very important question. To restate it in several ways: what kind of thing is suffering? What kind of question is ‘what is suffering’? What would a philosophically satisfying definition of suffering look like? How would we know if we saw it? Why does QRI think existing theories of suffering are lacking? Is an answer to this question a matter of defining some essence, or defining causal conditions, or something else?

Our intent is to define phenomenological valenc...

Hi Seb, I appreciate the honest feedback and kind frame.

I can say that it’s difficult to write a short piece that will please a diverse audience, but that ducks the responsibility of the writer.

You might be interested in my reply to Linch which notes that STV may be useful even if false; I would be surprised if it were false but it wouldn’t be an end to qualia research, merely a new interesting chapter.

I spoke with the team today about data, and we just got a new batch this week we’re optimistic has exactly the properties we’re looking for (meditativ...

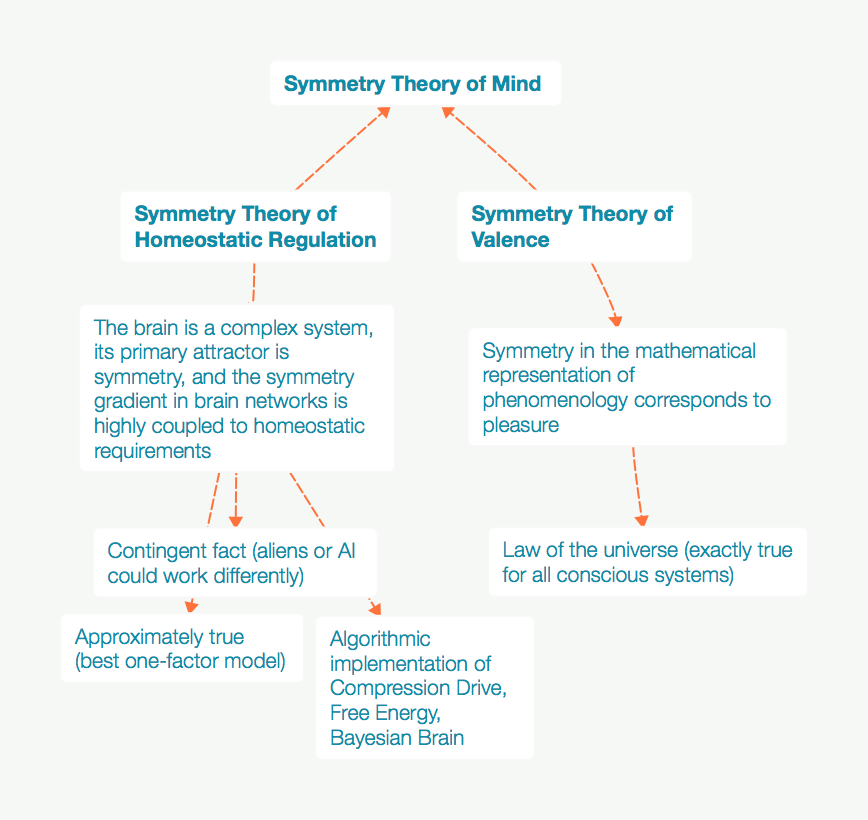

Hi Linch, that’s very well put. I would also add a third possibility (c), which is “is STV false but generative.” — I explore this a little here, with the core thesis summarized in this graphic:

I.e., STV could be false in a metaphysical sense, but insofar as the brain is a harmonic computer (a strong reframe of CSHW), it could be performing harmonic gradient descent. Fully expanded, there would be four cases:

STV true, STHR true

STV true, STHR false

STV false, STHR true

STV false, STHR false

Of course, ‘true and false’ are easier to navigate if we can speak of ...

This is in fact the claim of STV, loosely speaking; that there is an identity relationship here. I can see how it would feel like an aggressive claim, but I’d also suggest that positing identity relationships is a very positive thing, as they generally offer clear falsification criteria. Happy to discuss object-level arguments as presented in the linked video.

Hi Mike, I really enjoy your and Andrés's work, including STV, and I have to say I'm disappointed by how the ideas are presented here, and entirely unsurprised at the reaction they've elicited.

There's a world of a difference between saying "nobody knows what valence is made out of, so we're trying to see if we can find correlations with symmetries in imaging data" (weird but fascinating) and "There is an identity relationship between suffering and disharmony" (time cube). I know you're not time cube man, because I've read lots of other QRI output over the ...

Thanks for adjusting your language to be nicer. I wouldn’t say we’re overwhelmingly confident in our claims, but I am overwhelmingly confident in the value of exploring these topics from first principles, and although I wish I had knockout evidence for STV to share with you today, that would be Nobel Prize tier and I think we’ll have to wait and see what the data brings. For the data we would identify as provisional support, this video is likely the best public resource at this point:

This sounds overwhelmingly confident to me, especially since you have no evidence to support either of these claims.

If there is dissonance in the brain, there is suffering; if there is suffering, there is dissonance in the brain. Always.

I’d say that’s a fair assessment — one wrinkle that isn’t a critique of what you wrote, but seems worth mentioning, is that it’s an open question if these are the metrics we should be optimizing for. If we were part of academia, citations would be the de facto target, but we have different incentives (we’re not trying to impress tenure committees). That said, the more citations the better of course.

As you say, if STV is true, it would essentially introduce an entirely new subfield. It would also have implications for items like AI safety and those may outw...

Thanks, valence. I do think the ‘hits-based giving’ frame is important to develop, although I understand it doesn’t have universal support, as some of the implications may be difficult to navigate.

And thanks for appreciating the problem; it’s sometimes hard for me to describe how important the topic feels and all the reasons for working on it.

Hi Linch, cool idea.

I’d suggest that 100 citations can be a rather large number for papers, depending on what reference class you put us in, 3000 larger still; here’s an overview of the top-cited papers in neuroscience for what it’s worth: https://www.frontiersin.org/articles/10.3389/fnhum.2017.00363/full

Methods papers tend to be among the most highly cited, and e.g. Selen Atasoy’s original work on CSHW has been cited 208 times, according to Google Scholar. Some more recent papers are at significantly less than 100, though this may climb over time.

Anyway m...

Hi Abby, to give a little more color on the data: we’re very interested in CSHW as it gives us a way to infer harmonic structure from fMRI, which we’re optimistic is a significant factor in brain self-organization. (This is still a live hypothesis, not established fact; Atasoy is still proving her paradigm, but we really like it.)

We expect this structure to be highly correlated with global valence, and to show strong signatures of symmetry/harmony during high-valence states. The question we’ve been struggling with as we’ve been building this hypothesis is ...

Hi Gregory, I’ll own that emoticon. My intent was not to belittle, but to show I’m not upset and I’m actually enjoying the interaction. To be crystal clear, I have no doubt Hoskin is a sharp scientist and cast no aspersions on her work. Text can be a pretty difficult medium for conveying emotions (things can easily come across as either flat or aggressive).

Hi Abby, to be honest the parallel between free-energy-minimizing systems and dissonance-minimizing systems is a novel idea we’re playing with (or at least I believe it’s novel; my colleague Andrés coined it, to my knowledge) and I’m not at full liberty to share all the details before we publish it. I think it’s reasonable to doubt this intuition, and we’ll hopefully be assembling more support for it soon.

To the larger question of neural synchrony and STV, a good collection of our argument and some available evidence would be our talk to Robin Carha...

Hi Harrison, that’s very helpful. I think it’s a challenge to package fairly technical and novel research into something that’s both precise and intuitive. Definitely agree that “harmony” is an ambiguous concept.

One of the interesting aspects of this work is it does directly touch on issues of metaphysics and ontology: what are the natural kinds of reality? What concepts ‘carve reality at the joints’? Most sorts of research can avoid dealing with these questions directly, and just speak about observables and predictions. But since part of what we’re doing ...

I’m glad to hear you feel good about your background and are filled with confidence in yourself and your field. I think the best work often comes from people who don’t at first see all the challenges involved in doing something, because often those are the only people who even try.

At first I was a little taken aback by your tone, but to be honest I’m a little amused by the whole interaction now.

The core problem with EEG is that the most sophisticated analyses depend on source localization (holographic reconstruction of brain activity), and accurate s...

- In brief, asynchrony levies a complexity and homeostatic cost that harmony doesn’t. A simple story here is that dissonant systems shake themselves apart; we can draw a parallel between dissonance in the harmonic frame and free energy in the predictive coding frame.

I appreciate your direct answer to my question, but I do not understand what you are trying to say. I am familiar with Friston and the free-energy principle, so feel free to explain your theory in those terms. All you are doing here is saying that the brain has some reason to reduce “dissonance i...

Hi Mike,

I am comfortable calling myself "somebody who knows a lot about this field", especially in relation to the average EA Forum reader, our current context.

I respect Karl Friston as well, I'm looking forward to reading his thoughts on your theory. Is there anything you can share?

The CSHW stuff looks potentially cool, but it's separate from your original theory, so I don't want to get too deep into it here. The only thing I would say is that I don't understand why the claims of your original theory cannot be investigated using standard...

Hi Jpmos, really appreciate the comments. To address the question of evidence, this is a fairly difficult epistemological situation but we’re working with high-valence datasets from Daniel Ingram & Harvard, and Imperial College London (jhana data, and MDMA data, respectively) and looking for signatures of high harmony.

Neuroimaging is a pretty messy thing, there are no shortcuts to denoising data, and we are highly funding constrained, so I’m afraid we don’t have any peer-reviewed work published on this yet. I can say that initial results seem fai...

Hi Harrison, appreciate the remarks. My response would be more-or-less an open-ended question: do you feel this is a valid scientific mystery? And, what do you feel an answer would/should look like? I.e., correct answers to long-unsolved mysteries might tend to be on the weird side, but there’s “useful generative clever weird” and “bad wrong crazy timecube weird”. How would you tell the difference?

Haha, I certainly wouldn't label what you described/presented as "timecube weird." To be honest, I don't have a very clear cut set of criteria, and upon reflection it's probable that the prior is a bit over-influenced by my experiences with some social science research and theory as opposed to hard science research/theory. Additionally, it's not simply that I'm skeptical of whether the conclusion is true, but more generally my skepticism heuristics for research is about whether whatever is being presented is "A) novel/in contrast with existing theories or ...

Hi Abby, I’m happy to entertain well-meaning criticism, but it feels like your comment rests fairly heavily on credentialism and does not seem to offer any positive information, nor does it feel like high-level criticism (“their actual theory is also bad”). If your background is as you claim, I’m sure you understand the nuances of “proving” an idea in neuroscience, especially with regard to NCCs (neural correlates of consciousness); neuroscience is also large enough that “I published a peer reviewed fMRI paper in a mainstream journal” isn’t a particularl...

Hi Mike! I appreciate your openness to discussion even though I disagree with you.

Some questions:

1. The most important question: Why would synchrony between different brain areas involved in totally different functions be associated with subjective wellbeing? I fundamentally don't understand this. For example, asynchrony has been found to be useful in memory as a way of differentiating similar but different memories during encoding/rehearsal/retrieval. It doesn't seem like a bad thing that the brain has a reason to reduce, the way it has ...

I like this theme a lot!

In looking at longest-term scenarios, I suspect there might be useful structure and constraints available if we take seriously the idea that consciousness is a likely optimization target of sufficiently intelligent civilizations. I offered the following on Robin Hanson's blog:

Premise 1: Eventually, civilizations progress until they can engage in megascale engineering: Dyson spheres, etc.

Premise 2: Consciousness is the home of value: Disneyland with no children is valueless.

Premise 2.1: Over the long term we should expect...

Hi Daniel,

Thanks for the reply! I am a bit surprised at this:

Getting more clarity on emotional valence does not seem particularly high-leverage to me. What's the argument that it is?

The quippy version is that, if we’re EAs trying to maximize utility, and we don’t have a good understanding of what utility is, more clarity on such concepts seems obviously insanely high-leverage. I’ve written about specific relevance to FAI here: https://opentheory.net/2015/09/fai_and_valence/ Relevance to building a better QALY here: https://opentheory.net/2015/06/effecti...

Hi Daniel,

Thanks for the remarks! Prioritization reasoning can get complicated, but to your first concern:

Is emotional valence a particularly confused and particularly high-leverage topic, and one that might plausibly be particularly conducive to getting clarity on? I think it would be hard to argue in the negative on the first two questions. Resolving the third question might be harder, but I’d point to our outputs and increasing momentum. I.e. one can levy your skepticism on literally any cause, and I think we hold up excellently in a relative sense. We ma...

Is emotional valence a particularly confused and particularly high-leverage topic, and one that might plausibly be particularly conducive to getting clarity on? I think it would be hard to argue in the negative on the first two questions. Resolving the third question might be harder, but I’d point to our outputs and increasing momentum. I.e. one can levy your skepticism on literally any cause, and I think we hold up excellently in a relative sense. We may have to jump to the object-level to say more.

I don't think I follow. Getting more clarity on emoti...

Congratulations on the book! I think long works are surprisingly difficult and valuable (both to author and reader) and I'm really happy to see this.

My intuition on why there's little discussion of core values is a combination of "a certain value system [is] tacitly assumed" and "we avoid discussing it because ... discussing values is considered uncooperative." To wit, most people in this sphere are computationalists, and the people here who have thought the most about this realize that computationalism inherently denies the p...

Thanks, Mike!

Great questions. Let me see whether I can do them justice.

If you could change people's minds on one thing, what would it be? I.e. what do you find the most frustrating/pernicious/widespread mistake on this topic?

Three important things come to mind:

1. There seems to be this common misconception that if you hold a suffering-focused view, then you will, or at least you should, endorse forms of violence that seem abhorrent to common sense. For example, you should consider it good when people get killed (because it prevents future suffering fo...

A core 'hole' here is metrics for malevolence (and related traits) visible to present-day or near-future neuroimaging.

Briefly -- Qualia Research Institute's work around connectome-specific harmonic waves (CSHW) suggests a couple angles:

(1) proxying malevolence via the degree to which the consonance/harmony in your brain is correlated with the dissonance in nearby brains;

(2) proxying empathy (lack of psychopathy) by the degree to which your CSHWs show integration/coupling with the CSHWs around you.

Both of these analyses could be done today, ...

Very important topic! I touch on McCabe's work in Against Functionalism (EA forum discussion); I hope this thread gets more airtime in EA, since it seems like a crucial consideration for long-term planning.

Hey Pablo! I think Andres has a few up on Metaculus; I just posted QRI's latest piece of neuroscience here, which has a bunch of predictions (though I haven't separated them out from the text):

https://opentheory.net/2019/11/neural-annealing-toward-a-neural-theory-of-everything/

I think it would be worthwhile to separate these out from the text, and (especially) to generate predictions that are crisp, distinctive, and can be resolved in the near term. The QRI questions on Metaculus are admirably crisp (and fairly near term), but not distinctive (they are about whether certain drugs will be licensed for certain conditions, or whether evidence will emerge supporting drug X for condition Y, which offer very limited evidence for QRI's wider account 'either way').

This is somewhat more promising from your most recent post:

...I’d expect

Speaking broadly, I think people underestimate the tractability of this class of work, since we’re already doing this sort of inquiry under different labels. E.g.,

In large part, I don’t think these have been supported as longtermis...