All of Ruby's Comments + Replies

I think it matters a lot to be precise with claims here. If someone believes that any case of people with power over others asking them to commit crimes is damning, then all we need to establish is that this happened. If it's understood that whether this was bad depends on the details, then we need to get into the details. Jack's comment was not precise so it felt important to disambiguate (and make the claim I think is correct).

There are a lot of dumb laws. Without saying it was right in this case, I don't think that's categorically a big red line.

Thanks, this also made me pause. I can imagine some occasions where you might encourage employees to break the law (although this still seems super ethically fraught) - for example, some direct action in e.g. animal welfare. However, the examples here are 'to gain recreational and productivity drugs' and 'to drive around doing menial tasks'.

So if you're saying "it isn't always unambiguously ethically wrong to encourage employees to commit crimes" then I guess yes, in some very limited cases I can see that.

But if you're saying "in these instances it was honest, honourable and conscientious to encourage employees to break the law" then I very strongly disagree.

Or if it's majority false, pick out the things you think are actually true, implying everything else you contest!

I would think you could go through the post and list out 50 bullet points of what you plan to contest in a couple of hours.

My guess is it was enough time to say which claims you objected to and sketch out the kind of evidence you planned to bring. And Ben judged that your response didn't indicate you were going to bring anything that would change his mind enough that the info he had was worth sharing. E.g. you seemed to focus on showing that Alice couldn't be trusted, but Ben felt that this would not refute enough of the other info he had collected / the kinds of refutation (e.g. only a $50 fine for driving without a license, she brought back illegal substances anyway) were not com...

I think asking your friends to vouch for you is quite possibly okay, but that people should disclose there was a request.

It's different evidence between "people who know you who saw this felt motivated to share their perspective" vs "people showed up because it was requested".

I appreciate the frame of this post and the question it proposes; it's worth considering. The questions I'd want to address before fully buying it, though, are:

1) Are the standards of investigative journalism actually good for their purpose? Or did they get distorted along the way for the same reason lots of regulated/standardized things do (e.g. building codes)?

2) Supposing they're good for their purpose, do they really apply not just in mainstream media but also in a smaller community?

I think answering (2), we really do have a tricky false positive/false negative t...

I can follow that reasoning.

I think what you get with fewer dedicated people is people with the opportunity for a build-up of deep moderation philosophy and also experience handling tricky cases. (Even after moderating for a really long time, I still find myself building those and benefitting from stronger investment.)

Quick thought after skimming, so forgive me if this was already addressed. Why is the moderator position for ~3 hours? Why not get full-time people (or at least half-time), or go for 3 hours minimum? Mostly I expect fewer people spending more time doing the task will be better than more people doing it less.

I think this post falls short of arguing compellingly for the conclusion.

- It brings 1 positive example of a successful movement that didn't schism early on, and 2 examples of large movements that did schism and then had trouble.

- I don't think it's illegitimate to bring suggestive examples vs a systematic review of movement trajectories, but I think it should be admitted that cherry-picking isn't hard for three examples.

- There's no effort expended to establish equivalence between EA and its goals and Christianity, Islam, or Atheism at the gears level of what they

When I think about being part of the movement or not, I'm not asking whether I feel welcomed, valued, or respected. I want to feel confident that it's a group of people who have the values, culture, models, beliefs, epistemics, etc that means being part of the group will help me accomplish more of my values than if I didn't join the group.

Or in other words, I'd rather push uphill to join an unwelcoming group (perhaps very insular) whose ability to do good I have confidence in, than join a group that is all open arms and validation, but I don't think w...

If you indicate to X group, directly or otherwise, that they're not welcome in your community, then most people who identify with X are probably gonna take you at your word and stop showing up. Some people might be like you and be willing to push past the unwelcomeness for the greater good, but these people are rare, and are not numerous enough to prevent a schism.

Ultimately, you can't make a place welcoming for every single identity without sacrificing things. If the X is "neo-nazis", then trying to make the place welcoming for them is a mistake that would drive out everyone else. But if X is like, "Belgians", then all you have to do is not be racist towards Belgians.

I think I agree with your clarification and was in fact conflating the mere act of speaking with strong emotion with speaking in a way that felt more like a display. Yeah, I do think it's a departure from naive truth-seeking.

In practice, I think it is hard, though I do think it is hard for the second order reasons you give and others. Perhaps an ideal is people share strong emotion when they feel it, but in some kind of format/container/manner that doesn't shut down discussion or get things heated. "NVC" style, perhaps, as you suggest.

Hey Shakeel,

Thank you for making the apology, you have my approval for that! I also like your apology on the other thread – your words are hopeful for CEA going in a good direction.

Some feedback/reaction from me that I hope is helpful. In describing your motivation for the FLI comment, you say that it was not to throw FLI under the bus, but because of your fear that some people would think EA is racist, and you wanted to correct that. To me, that is a political motivation, not much different from a PR motivation.

To gesture at the difference (in my ontology...

To me, the ideal spirit is "let me add my cognition to the collective so we all arrive at true beliefs" rather than "let me tug the collective beliefs in the direction I believe is correct" or "I need to ensure people believe the correct thing."

I like this a lot.

I'll add that you can just say out loud "I wish other people believed X" or "I think the correct collective belief here would be X", in addition to saying your personal belief Y.

(An example of a case where this might make sense: You think another person or group believes Z, and you think they...

I came to the comments here to also comment quickly on Kathy Forth's unfortunate death and her allegations. I knew her personally (she subletted in my apartment in Australia for 7 months in 2014, but more meaningfully in terms of knowing her, we also overlapped at Melbourne meetups many times, and knew many mutual people). Like Scott, I believe she was not making true accusations (though I think she genuinely thought they were true).

I would have said more, but will follow Scott's lead in not sharing more details. Feel free to DM me.

Those accusations seem of a dramatically more minor and unrelated nature and don't update me much at all that allegations of mistreatment of employees are more likely.

The couple arguments against this do not likely hold up against the vast utility discrepancies from resource allocations...

This kind of utilitarian reasoning seems not too different from the kind that would get one to commit fraud to begin with. I don't think whether it's legally required to return or not makes the difference – morality does not depend on laws. If someone else steals money from a bank and gives it to me, I won't feel good about using that money even if I don't have to give it back and will use it much better.

Sounds an awful lot like LessWrong, but competition can be healthy[1] ;)

[1] I think this is less likely to be true of things like "places of discussion" because of splitting the conversation / eroding common knowledge, but I think it's fine/maybe good to experiment here.

I didn't scrutinize, but at a high level, the new intro article is the best I've seen yet for EA. Very pleased to see it!

I think 20% might be a decent steady-state but at the start of their involvement I think I'd like to see new aspiring community builders do something like six months on intensive object-level work/research.

Fwiw, my role is similar to yours, and granted that LessWrong has a much stronger focus on Alignment, but I currently feel that a very good candidate for the #1 reason that I will fail to steer LW to massive impact is that I'm not and haven't been an Alignment researcher (and perhaps Oli hasn't been either, but he's a lot more engaged with the field than I am).

Again, thanks for taking the time to engage.

I think this post is maybe a format that the EA Forum hasn't done before, but this is intended to be a repository of advice that's crowd-sourced. This is also maybe not obvious because I "seeded" it with a lot of content I thought was worth sharing (and also to make it less sad if it didn't get many contributions – so far a few).

As I wrote:

...I've seeded this post with a mix of advice, experience, and resources from myself and a few friends, plus various good content I found on LessWrong through the Relationships ta

Hi Larks, thanks for taking the time to engage.

I'm not sure how relevant this is to the EA forum?

I personally think that for Effective Altruists to be effective, they need to be healthy/well-adjusted/flourishing humans and therefore something as crucial as good relationship advice ought to be shared on the EA Forum (much the same as productivity, agency, or motivation advice).

I didn't mention it in the post, but part of the impetus for this post came from Julia's recent Power Dynamics between people in EA post that discusses relationships, and it seemed ...

In terms of thinking about why solutions haven't been attempted, I'll plug Inadequate Equilibria. Though it probably provides a better explanation for why problems in the broader world haven't been addressed. I don't think the EA world is yet in an equilibrium and so things don't get done because {it's genuinely a bad idea, it seems like the thing you shouldn't be unilateral on and no one has built consensus, sheer lack of time}.

Good comment!!

Most ideas for solving problems are bad, so your prior should be that if you have an idea, and it's not being tried, probably the idea is bad;

A key thing here is to be able to accurately judge whether the idea would be harmful if tried or not. "Prior is bad idea != EV is negative". If the idea is a random research direction, probably won't hurt anyone if you try it. On the other hand, for example, certain kinds of community coordination attempts deplete a common resource and interfere with other attempts, so the fact no one else is acting is ...

For LessWrong, we've thought about some kind of "karma over views" metrics for a while. We experimented a few years ago but it proved to be a hard UI design challenge to make it work well. Recently we've thought about having another crack at it.

Yes! This. Thank you for writing.

I often get asked why LessWrong doesn't hire contractors in the meantime while we're hiring, and this is the answer. In particular the fact that getting contractors to do good work would require all of the onboarding that getting a team member to do good work would require.

I don't mean that I expect EA Forum software to replace Swapcard for EAG itself, probably; just that the goal is to provide similar functionality all year round.

My understanding (which could be wrong, and I hope they don't mind me mentioning it on their behalf) is that the EA Forum dev team is working to build Swapcard functionality into the forum, including the ability to import your Swapcard data.

In the meantime, I agree with the OP.

I bet that if they are impressive to you (and your judgment is reasonable), you can convince grantmakers at present.

Thank you for the detailed reply!

I agree that Earning to Give may make sense if you're neartermist or don't share the full moral framework. This is why my next sentence begins "if you'd be donating to longtermist/x-risk causes." I could have emphasized these caveats more.

I will say that if a path is not producing value, I very much want to demotivate people pursuing that path. They should do something else! One should only be motivated for things that deserve motivation.

I've looked at the posts you shared and I don't find them compelling.

I think the ...

[Speaking from LessWrong here:] based on our experiments so far, I think there's a fair amount more work to be done before we'd want to widely roll out a new voting system. Unfortunately for this feature, development is paused while we work on some other stuff.

I also see that a lot of the issues were predictable from last year's comments but were not addressed.

This is my fault. I was the lead organizer for Petrov Day this year, though I wasn't an organizer in previous years. I recalled that there were issues with ambiguity last year, which I attempted to address (albeit quite unsuccessfully). However, I didn't go through and read/re-read all of the comments from last year. If I had done so, I might have corrected more of the design.

I'm sorry for the negative experience you had due to the poor design. I do thi...

LessWrong mod speaking here. Just wanted to confirm that everything written here is correct.

To be clear, only the identity of the account that enters a valid code will be shared.

There is already a (clunky) feature that enables this.

If you hyperlink text with a tag URL that has the URL parameter ?useTagName=true, the hyperlinked text will be replaced by whatever the current name of the tag is.

E.g. if the tag is called "Global dystopia", and I put a hyperlink in a post or other tag with the URL /global-distoptia?useTagName=true, and the tag then gets renamed to "Dystopia":

- The old URL will still work

- The text "Global dystopia" will be replaced with the current name "Dystopia"

See: https://www.lesswrong.com/posts/E6CF8JCQAWqqhg7ZA/wiki-tag-fa...
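To make the behavior described above concrete, here is a minimal sketch of how the link text could be chosen at render time. This is not the actual LessWrong/EA Forum implementation: the function `resolve_link_text` and the lookup table `CURRENT_TAG_NAMES` are hypothetical names invented for illustration, and the slug is copied as written in the example above. The only things taken from the comment are the `useTagName=true` parameter and the "Global dystopia" to "Dystopia" rename.

```python
from urllib.parse import urlsplit, parse_qs

# Hypothetical lookup: tag URL path -> the tag's *current* name.
# The old path still resolves after the rename, as described above.
CURRENT_TAG_NAMES = {
    "/global-distoptia": "Dystopia",
}


def resolve_link_text(href: str, link_text: str, tag_names: dict[str, str]) -> str:
    """Return the text to display for a hyperlink.

    If the link carries useTagName=true and points at a known tag,
    show the tag's current name; otherwise keep the author's text.
    """
    parts = urlsplit(href)
    params = parse_qs(parts.query)
    wants_tag_name = params.get("useTagName") == ["true"]
    if wants_tag_name and parts.path in tag_names:
        return tag_names[parts.path]
    return link_text


# With the parameter, the stale text is swapped for the current tag name.
renamed = resolve_link_text(
    "/global-distoptia?useTagName=true", "Global dystopia", CURRENT_TAG_NAMES
)

# Without the parameter, the text the author typed is left alone.
unchanged = resolve_link_text(
    "/global-distoptia", "Global dystopia", CURRENT_TAG_NAMES
)
```

The design point is just that the replacement is opt-in per link, so ordinary hyperlinks to a tag keep whatever text the author wrote.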

Collaborative calendar/schedule for the event is now live! https://docs.google.com/spreadsheets/d/1xUToQ-Wu6w-Uaow7q8Bo5s61beWWRJhIh9P-DNAvx4Q/edit?usp=sharing

Please add any events or activities you'd like to run. Comment here or in the doc if you have questions, e.g. about good places to host your session.

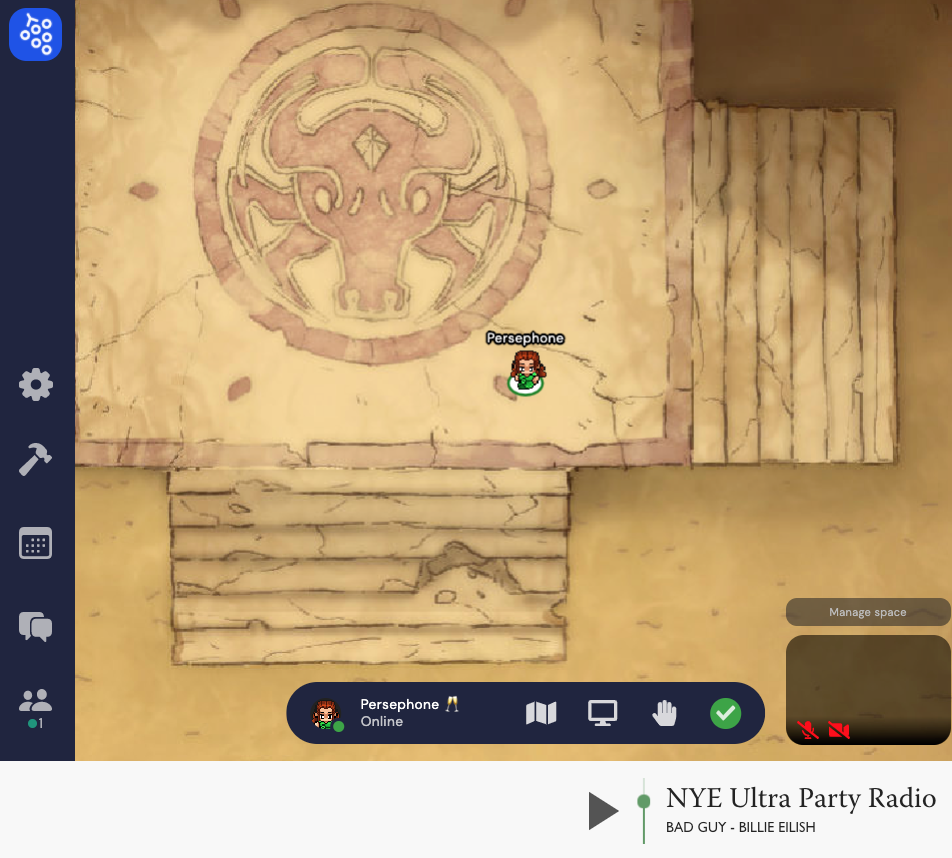

The Ultra Party Radio (TM) has been constructed (bottom right of attached image). We'll be streaming tunes to the entire Garden from our own server, but the music will be optimized for the ballroom dancefloor.

What music would you like to hear? Please comment with:

- Genres

- Specific song requests

- Playlists you might like us to use or borrow from

Some images of the party locations to pump the imagination:

We've now designated many activities to many different regions of the Walled Garden. If you're interested in hosting or attending a specific activity, please comment. The organizers can help you set it up and put it on the Official Party Schedule.

The following are scheduled throughout the party, but it seems great to have more specific things scheduled for like-interested people to join.

Ballroom: dancing, toasts & roasts, countdown

Violet Study: meet new people

Moloch Maze: games, e.g., poker, Among Us

Great Library (1st floor): deep philosophical conver...

RobertM and I are having a "dialogue"[1] on LessWrong with a lot of focus on whether it was appropriate for this to be posted when it was and with info collected so far (e.g. not waiting for Nonlinear response).

What is the optimal frontier for due diligence?

Just wanted to say (without commenting on the points in the dialogue) that I appreciate you and Robert having this discussion, and I think the fact you're having it is an example of good epistemics.