All of ryancbriggs's Comments + Replies

Fair. I struggle with how to incorporate animals into the capabilities approach, and while I appreciate Martha Nussbaum turning her attention here I was also wary of list-based approaches so it doesn't help me too much.

I think this is one of those posts where the question is ultimately more valuable than the answer. And to be clear that isn't a criticism and I upvoted the post. I appreciate posts that push people to think about important questions, even if our best guess answers are not currently very compelling.

I strongly agree with your main point on uncertainty, and I'll defer to you on the (lack of) consensus among happiness researchers on the question of whether or not life is getting better for humans given their paradigm.

However, I think one can easily ground out the statement "There’s compelling evidence that life has gotten better for humans recently" in ways that do not involve subjective wellbeing and if one does so then the statement is quite defensible.

Good question. It’s worth recalling that Sam and Finn’s JDE actually finds small positive (significant) effects of aid on many governance outcomes. I’m not sure I actually believe that those positive effects exist, but it’s important to see that my claims above don’t hinge on over-interpreting (imprecise) nulls. Also I realize you know this, but for others it’s good to remember that classical measurement error in the DV will increase noise but will not introduce bias.
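The measurement error point can be checked with a small simulation. This is only a sketch under stated assumptions: the true slope, the sample size, and the noise levels are all hypothetical, and nothing here comes from the aid literature. It adds classical (mean-zero, independent) noise to the dependent variable and compares the OLS slope and its standard error with and without that noise.

```python
import random
import statistics

def ols_slope(x, y):
    """Slope of a one-regressor OLS fit: cov(x, y) / var(x)."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def slope_se(x, y):
    """Conventional (homoskedastic) standard error of the OLS slope."""
    n = len(x)
    mx, my = statistics.fmean(x), statistics.fmean(y)
    b = ols_slope(x, y)
    a = my - b * mx
    rss = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    return (rss / (n - 2) / sxx) ** 0.5

rng = random.Random(0)
TRUE_SLOPE = 0.5   # hypothetical effect of x on the outcome
N = 5000           # hypothetical sample size

x = [rng.gauss(0, 1) for _ in range(N)]
y_true = [TRUE_SLOPE * xi + rng.gauss(0, 1) for xi in x]
# Classical measurement error: mean zero, independent of x and of y_true.
y_noisy = [yi + rng.gauss(0, 2) for yi in y_true]

slope_clean, se_clean = ols_slope(x, y_true), slope_se(x, y_true)
slope_noisy, se_noisy = ols_slope(x, y_noisy), slope_se(x, y_noisy)

print(f"clean DV: slope={slope_clean:.3f}, se={se_clean:.3f}")
print(f"noisy DV: slope={slope_noisy:.3f}, se={se_noisy:.3f}")
```

Both slopes should land near the assumed true value, while the standard error is larger with the noisy outcome. Measurement error in a regressor is a different case and does bias the estimate.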

The article in footnote 3 is also an example of other work I (in the interest of brevity) ...

That is correct. I think it is pretty uncontentious to say that the people who study this in general worry less about these private charity-type interventions than they do about ODA.

Fair.

I used the term to mean ODA, as did all of the authors that I cited. Basically, this would capture grants and concessional (below market rate) loans that are aimed at promoting economic development or welfare (so not military aid). From a donor's POV, it includes money that it gives to multilateral organizations like the WB that then pass the grant on as well as classic bilateral money. From the recipient's POV, it includes money from bilateral donors and from multilaterals. It does not include non-concessional loans or private charity. Money can be O...

Thanks for the comments!

Off the top: I'm not an expert on Afghanistan and it wouldn't be overly surprising to me if we could find specific times in specific countries when aid did affect politics. Maybe post-invasion Afghanistan is one. All that said, my personal bet would be that aid just isn't doing much in Afghanistan.

Now if the question is "does aid work well in Afghanistan?" then I'd guess the answer is "no." I fully believe that politics can interact with aid to make aid more or less effective, especially in the sense that aid to very badly governed ...

I really appreciate you putting in the work and being so diligent Gregory. I did very little here, though I appreciate your kind words. Without you seriously digging in, we’d have a very distorted picture of this important area.

That makes some sense to me. She should have an easier time of this (than Sen-ish people like me) because she’s willing to just write a list of the eg 10 most important capabilities for humans. If you’re willing to do that, then it almost seems easier to do it for animals. I’ll listen to the podcast and should read the book. Thanks for the pointer.

I liked this post. It was thought provoking.

I just wanted to note that you are correct in highlighting the “human” part in my post on the capability approach. To me, capabilities are the best way to think about human welfare but some variant of utilitarianism is the best way to think about the welfare of (most?) animals, but I’ve no good way to exchange between those and I find that unsatisfying.

I like this idea in the abstract.

One implementation detail that I worry about is how much friction would exist in the micro-transaction. For example, if I do this on my iPhone would it just go <ching> and then make the donation, or would it then pop up a little screen saying "pick your method of payment, then I double press the side button for apple pay, then it scans my face and I wait a beat". I think it has to be the latter given iOS platform constraints (to stop scammers from just taking people's money), but I think that might greatly reduce the "fun, friction-free" thing that I see as the draw here.

Yeah, good points. You may well be right.

I think point 2 is highly questionable though. Just from an information aggregation POV, it seems like we should want key public goods providers to be open to all ideas and to do rather little to filter or privilege some ideas. For example, the forum should not elevate posts on animals or poverty or AI or whatever (and they don't). I've been upset with 80k for this.

I think HLI provides a good example of how this should be done. If you want to push EA in a direction, do that as a spoke and try to sway people to your ...

Re: what goes wrong with the market metaphor: I mostly just think it raises all sorts of questions about whether or not the relevant assumptions hold to model this like an efficient market. Even if the answer is yes (and I'm skeptical), I think the fact that it pushes my (and seemingly other people's) thoughts there isn't ideal. It feels like a distraction from the core issue you're pointing to.

I think this is probably better framed as a governance problem. I think you're asking institutions that provide public goods to the "spokes" of EA to not pick favour...

If people care maybe I can look into this more seriously and write up something longer, but I find it quite unlikely that their claim is correct. I think many of your numbered points are likely correct, but I bet 3 is significant. CEA is tough to do well, and easy to shape.

That said, wasting really is a serious concern and might be quite cheap to treat so if UNICEF was going to be highly cost-effective it might be here.

Thank you for this Michael. I don't think I agree with the market metaphor, but I do think that EA is "letting this crisis go to waste" and that that is unfortunate. I'm glad you're drawing attention to it.

My thoughts are not well-formed, but I agree that the current setup—while it makes sense historically—is not well suited for the present. Like you, I think that it would be beneficial to have more of a separation between object-level organizations focusing on specific cause areas and central organizations that are basically public goods providers for obj...

This is really sad and shocking. His family, colleagues, and students have my sincere condolences.

For people that didn't know him, one thing that stood out about him was his extreme generosity in helping students and junior colleagues. If you want to read some of their small tributes, the replies and quote tweets here are full of political scientists and others sharing stories. He will be deeply missed.

I think this is an excellent idea. As others have noted, I think there is alpha in reaching new people without any EA branding and pitching helping distant others to them. Doing the basics well. Also, seeing your webpage made me realize how much I've lowered my standards on design for a lot of EA content. It's quite nice.

My main (small) criticism is that I was confused by the name. I think you should more clearly explain it. I kept imagining black pepper, not a chili pepper. Maybe there is a way to incorporate the image of a hot pepper somewhere? It didn't feel bad, exactly, but random and odd.

For what it is worth, even people that one might think would know better, like professors of international development, really get these sorts of questions wrong.

I agree. I think 80k et al pushing longtermist philosophy hard was a mistake. It clearly turns some people off and it seems most actual longtermist projects (eg around pandemics or AI) are justifiable without any longtermist baggage.

Glad you found this interesting, and you have my sympathies as another walking phone writer.

can't we square your intuition that the second child's wellbeing is better with preference satisfaction by noting that people often have a preference to have the option to do things they don't currently prefer

A few people have made similar comments about preference structures. I can give perhaps a sharper example that addresses this specific point. I left a lot out of my original post in the interest of brevity, so I'm happy to expand more in the comments.

Probably ...

I will probably have longer comments later, but just on the fixed effects point, I feel it’s important to clarify that they are sometimes used in this kind of situation (when one fears publication bias or small study-type effects). For example, here is a slide deck from a paper presentation with three *highly* qualified co-authors. Slide 8 reads:

- To be conservative, we use ‘fixed-effect’ MA or our new unrestricted WLS—Stanley and Doucouliagos (2015)

- Not random-effects or the simple average: both are much more biased if there is publication bias (PB).

- Fixed-ef
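One way to see the mechanism behind the slide's claim is a toy calculation. This is a sketch under stated assumptions: the six effect sizes and standard errors below are hypothetical, chosen so that the smaller (noisier) studies report larger effects, which is the pattern publication bias tends to produce. It compares the simple average, the inverse-variance fixed-effect estimate, and a random-effects estimate that uses the DerSimonian-Laird between-study variance.

```python
import statistics

# Hypothetical studies: precise studies find small effects,
# imprecise studies find large ones.
effects = [0.10, 0.12, 0.35, 0.50, 0.60, 0.80]
std_errors = [0.05, 0.06, 0.15, 0.20, 0.25, 0.30]

def fixed_effect(effects, std_errors):
    """Inverse-variance weighted mean."""
    weights = [1 / se ** 2 for se in std_errors]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)

def dersimonian_laird_tau2(effects, std_errors):
    """DerSimonian-Laird estimate of between-study variance, floored at zero."""
    weights = [1 / se ** 2 for se in std_errors]
    fe = fixed_effect(effects, std_errors)
    q = sum(w * (y - fe) ** 2 for w, y in zip(weights, effects))
    c = sum(weights) - sum(w ** 2 for w in weights) / sum(weights)
    return max(0.0, (q - (len(effects) - 1)) / c)

def random_effects(effects, std_errors):
    """Weighted mean with weights 1 / (se^2 + tau^2)."""
    tau2 = dersimonian_laird_tau2(effects, std_errors)
    weights = [1 / (se ** 2 + tau2) for se in std_errors]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)

simple_mean = statistics.fmean(effects)
fe_estimate = fixed_effect(effects, std_errors)
re_estimate = random_effects(effects, std_errors)
tau2 = dersimonian_laird_tau2(effects, std_errors)

print(f"simple mean:    {simple_mean:.3f}")
print(f"random effects: {re_estimate:.3f} (tau^2 = {tau2:.4f})")
print(f"fixed effect:   {fe_estimate:.3f}")
```

Adding the between-study variance to every study's variance flattens the weights, so the random-effects estimate moves toward the simple average and gives the small studies more say. With data shaped like this, the fixed-effect estimate stays closest to what the precise studies report.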

Glad the post was useful.

- The capability approach is widely used as a "north star" guiding much development practice and research. Amartya Sen has been very influential in this community. The MDPI is pretty new, but it has at least a little purchase at the UNDP and World Bank, among others. It's probably worth at this point reiterating that I'd say the MDPI is "capability inspired" rather than "the capability approach in practice."

- On the question of data: it's the opposite. I'm quite confident that those questions were chosen because we have a reasonable

Thank you for this writeup. I enjoyed reading it.

As someone who is pretty convinced by the capability approach, one thing that I feel is somewhat missing from this (well done) exercise is a consideration of the foreclosing of options that a child marriage entails for the girl. Even if the girl grows up happy and doesn't have the problems that you sought out measures for (schooling, experiencing violence), her life options may have been quite massively curtailed by being entered into this hard-to-break legal and social arrangement before she was an a...

Thank you for this excellent summary! I can try to add a little extra information around some of the questions. I might miss some questions or comments, so do feel free to respond if I missed something or wrote something that was confusing.

--

On alignment with intuitions as being "slightly iffy as an argument": I basically agree, but all of these theories necessarily bottom out somewhere and I think they all basically bottom out in the same way (e.g. no one is a "pain maximizer" because of our intuitions around pain being bad). I think we want to be careful...

To answer your question directly: yes, but I did not when I was young. I'm pretty steeped in Abrahamic cultural influences. That said, I do not think the post presumes anything about universal religious experiences or anything like that.

However, I'd probably express these ideas a little bit differently if I had to do it again now. Mainly, I'd try harder to separate two ideas. While I'm as convinced as ever that "messianic AI"-type claims are very likely wrong, I think the fact that lots of people make claims of that form may just show that they're from cul...

That's so reasonable.

I think that we can all agree that the analysis was done in an atypical way (perhaps for good reason), that it was not as rigorous as many people expected, and that it had a series of omissions or made atypical analytical moves that (perhaps inadvertently) made SM look better than it will look once that stuff is addressed. I don't think anyone can speak yet to the magnitude of the adjustment when the analysis is done better or in a standard way.

But I'd welcome especially Joel's response to this question. It's a critical question and it's worth hearing his take.

Thank you for sharing these Joel. You've got a lot going on in the comments here, so I'm going to only make a few brief specific comments and one larger one. The larger one relates to something you've noted elsewhere in the thread, which is:

"That the quality of this analysis was an attempt to be more rigorous than most shallow EA analyses, but definitely less rigorous than an quality peer reviewed academic paper. I think this [...] is not something we clearly communicated."

This work forms part of the evidence base behind some strong claims from HLI about wher...

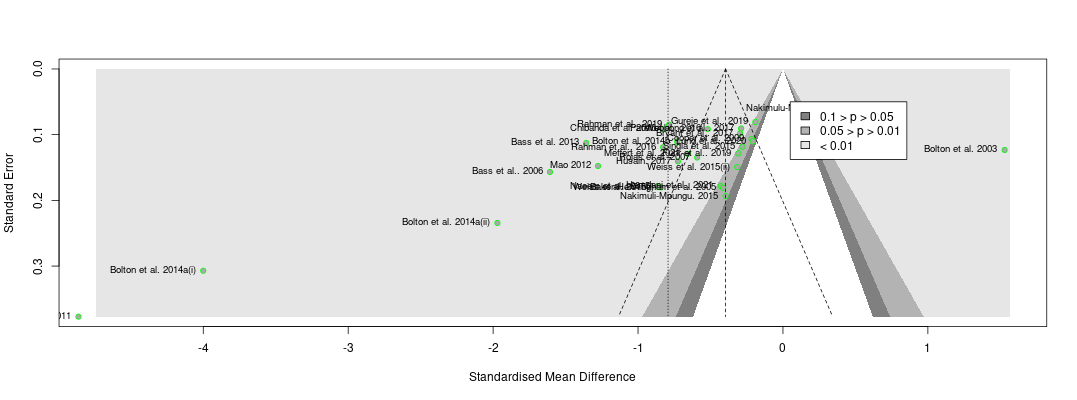

Thank you for responding Jason. That makes sense. The analysis under question here was done in Oct 2021, so I do think there was enough time to check a funnel plot for publication bias or odd heterogeneity. I really do think it's a bad look if no one checked for this, and it's a worse look if people checked and didn't report it. This is why I hope the issue is something like data entry.

Your core point is still fair though: There might be other explanations for this that I'm not considering, so while waiting for clarification from HLI I should be clea...

Hi Ryan,

Our preferred model uses a meta-regression with the follow-up time as a moderator, not the typical "average everything" meta-analysis. Because of my experience presenting the cash transfers meta-analysis, I wanted to avoid people fixating on the forest plot and getting confused about the results since it's not the takeaway result. But in hindsight I think it probably would have been helpful to include the forest plot somewhere.

I don't have a good excuse for the publication bias analysis. Instead of making a funnel plot I embarked on a quest t...

I'd love to hear which parts of my comment people disagree with. I think the following points, which I tried to make in my comment, are uncontentious:

- The plots I requested are indeed informative, and they cast some doubt on the credibility of the original meta-analysis

- Basic meta-analysis plots like a forest or funnel plot, which are incredibly common in meta-analyses, should have been provided by the authors rather than made by community members

- Relatedly, transparency in the strength and/or quality of evidence underpinning charity recommendation is good (n

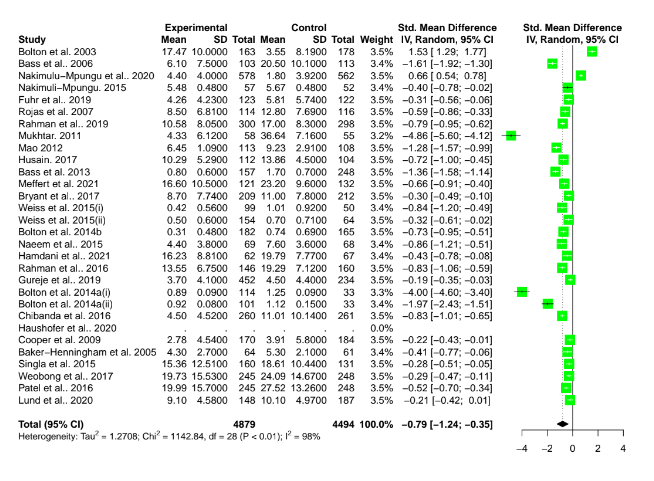

This is why we like to see these plots! Thank you Gregory, though this should not have been on you to do.

Having results like this underpin a charity recommendation and not showing it all transparently is a bad look for HLI. Hopefully there has been a mistake in your attempted replication and that explains e.g. the funnel plot. I look forward to reading the responses to your questions to Joel.

I believe that’s generally outside the model. It’s like asking if people have preferences about the ranking of their preferences.

I found (I think) the spreadsheet for the included studies here. I did a lazy replication (i.e. excluding duplicate follow-ups from studies, only including the 30 studies where 'raw' means and SDs were extracted, then plugging this into metamar). I copy and paste the (random effects) forest plot and funnel plot below - doubtless you would be able to perform a much more rigorous replication.

Thank you for this. I might have more to say later when I read all this more carefully, but I couldn’t find either a forest plot or a funnel plot from the meta-analysis in the report (sorry if I missed it). Could you share those or point me to where they exist? They’re both useful for understanding what is going on in the data.

I should also say, if there is a replication package available for the analysis (I didn’t see one) then I should be able to do this myself and I can share the results here.

If we all agree that this topic matters, then it is pretty important to share this kind of normal diagnostic info. For example, the recent water disinfectant meta-analysis by Michael Kremer’s team shows both graphs. https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4071953
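For readers who have not used these diagnostics, here is a rough sketch of the asymmetry check that usually goes alongside a funnel plot, Egger's regression. The study-level numbers are hypothetical and are not taken from the analysis under discussion. Each study's standardized effect (effect divided by its standard error) is regressed on its precision (one over the standard error); an intercept far from zero suggests that small studies report systematically different effects.

```python
import statistics

def egger_intercept(effects, std_errors):
    """Intercept from regressing effect/se on 1/se (Egger's regression)."""
    z = [y / se for y, se in zip(effects, std_errors)]
    precision = [1 / se for se in std_errors]
    mp, mz = statistics.fmean(precision), statistics.fmean(z)
    sxy = sum((p - mp) * (zi - mz) for p, zi in zip(precision, z))
    sxx = sum((p - mp) ** 2 for p in precision)
    slope = sxy / sxx
    return mz - slope * mp

std_errors = [0.05, 0.06, 0.15, 0.20, 0.25, 0.30]

# Hypothetical asymmetric case: noisier studies report larger effects.
asymmetric_effects = [0.10, 0.12, 0.35, 0.50, 0.60, 0.80]
# Hypothetical symmetric case: every study reports the same effect.
symmetric_effects = [0.20] * len(std_errors)

intercept_asym = egger_intercept(asymmetric_effects, std_errors)
intercept_sym = egger_intercept(symmetric_effects, std_errors)

print(f"Egger intercept, asymmetric data: {intercept_asym:.2f}")
print(f"Egger intercept, symmetric data:  {intercept_sym:.2f}")
```

A real analysis would also report a standard error and test for the intercept, and would draw the funnel plot itself (effect on the x-axis, standard error on an inverted y-axis), since the plot can show heterogeneity that a single number hides.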

Thanks for these questions.

I think that there are two main points where we disagree: first on paternalism and second on prioritizing mental states. I don't expect I will convince you, or vice versa, but I hope that a reply is useful for the sake of other readers.

On paternalism, what makes the capability approach anti-paternalistic is that the aim is to give people options, from which they can then do whatever they want. Somewhat loosely (see fn1 and discussion in text), for an EA the capability approach means trying to max their choices. If instead o...

Thank you for the kind words. I'm a little confused by this sentence:

I do think "women being happier despite having less options" is an interesting case, but women would still likely prefer to be in a mental state where they have more options.

I'll try to respond to what I see as the overall question of the comment (feel free to correct me if I didn't get it): If we assume someone has the thing they like in their choice set, why is having more choices good?

I think there are two answers to this, theoretical and pragmatic. I struggle sometimes to explain the ...

Good questions.

I tried to address the first one in the second part of the Downsides section. It is indeed the case that while the list of capability sets available to you is objective, your personal ranking of them is subjective and the weights can vary quite a bit. I don't think this problem is worse than the problems other theories face (turns out adding up utility is hard), but it is a problem. I don't want to repeat myself too much, but you can respond to this by trying to make a minimal list of capabilities that we all value highly (Nussbaum), or...

There are two meanings of "we don't know how to increase growth." One is that literally no one knows what Malawi could do to increase growth. That's very likely wrong. The second is "given all of the social and (especially) political constraints in existence right now, no one knows what marginal thing they could actually do that would increase growth in Malawi." I think that's basically right. Leaders in LICs themselves generally know, or have advisors that know, which policies could increase growth. If a LIC isn't growing it very likely isn't because lead...

Good question. Kevin and Robin Grier make a similar argument. I mostly buy it, but I think there are two very important caveats.

First, for a long time (like from Nic VDWs politics of permanent crisis if not earlier) a mainstream argument has been that SAPs were bad not because the policies were bad—many were straightforwardly, obviously good—but because they were nearly impossible to implement given the politics of the countries undergoing crisis. In response, many countries just didn't implement the policies, or they kind of half implemented them and foot...

This is a nice post that should help people better grasp the magnitudes of income gaps across countries and the importance of growth. I'd only add two things:

- Regarding growth, the issue in LICs usually isn't actually speeding up growth, it's sustaining growth. Basically every country has experienced periods of Chinese level growth rates (Malawi's GDP growth data here). The main issue is that in poorer countries these spells are shorter and they are often followed by periods of negative growth.

- The development community is very much aware of these arguments,

I just finished reading all of it, and it was very enjoyable. Kudos to the editors and contributors. For all I know this is complicated and not worth it, but I would really appreciate if there was a way that I could subscribe to this in Apple News+. I like the offline, not-in-browser reading mode and I'd like to slot this reading alongside other long form magazines mentally. I have no clue how Apple News works on the back end, but the magazines must get money out of this. Finally, getting on Apple News might help you extend your audience.

I might be missing the part of my brain that makes these concerns make sense, but this would roughly be my answer: Imagine that you and everyone in your household consume water with lead in it every day. You have the chance to learn if there is lead in the water. If you learn that there is, you'll feel very bad but also you'll be able to change your source of water going forward. If you learn that there is not, you'll no longer have this nagging doubt about the water quality. I think learning about EA is kind of like this. It will be right or wrong to ea...

The viridis package is good for colourblindness and is also pretty: https://cran.r-project.org/web/packages/viridis/index.html

I have a similar intuition, but I think for me it isn’t that I think far future lives should be discounted. Rather, it’s that I think the uncertainty on basically everything is so large at that time scale (more than 1k years in the future) that it feels like the whole exercise is kind of a joke. To be clear: I’m not saying this take is right, but at a gut level I feel it very strongly.

My claiming it's uncontentious is based on working in this research area and talking to lots of researchers about it. When asked, most say they're less worried about charity than ODA causing these sorts of governance issues. Now I get that your question is "why?" and my answer here is more tentative, because I don't know what is going on in their heads.

I do think "size of flow" is a big part of it. I'd guess "large flow" is a necessary but not sufficient condition for governance issues, and absent something like a big GiveDirectly UBI-type thing the s...