TL;DR: EA organizations should implement a public impact dashboard to make their impact visible and to create a data-driven, transparency-focused culture.

Rigor: Quick and short post. I would appreciate feedback and further thoughts on the concept in the comments. Thank you to Jonathan Rystrøm, Richard Ren, and Maris Sala for feedback.

Transparency and impact assessments

Transparency is a virtue in EA, where we constantly attempt to evaluate where we can make the most impact. Several posts have pointed out how we can do better evaluations with more transparency, e.g. FTX/CEA - show us your numbers, AI safety impact review, and shallow evaluations of longtermist organizations, among others.

The EA community is usually very transparent and seeks every opportunity for criticism, e.g. sharing mistakes (GiveWell, CEA, 80k, Giving What We Can) and red-teaming. In this post, I will argue for a standardized data-based transparency approach to make transparency in EA easily accessible for everyone.

Solution: Open organizations

Specifically, EA organizations should try to create a subpage called /open on their websites with a public impact data dashboard that lets everyone see how they work towards impact and what the organization’s key impact metrics are. Such dashboards already exist at several larger funding organizations in EA, but they can be created for most other EA organizations too (with a bit of work that is well worth it).

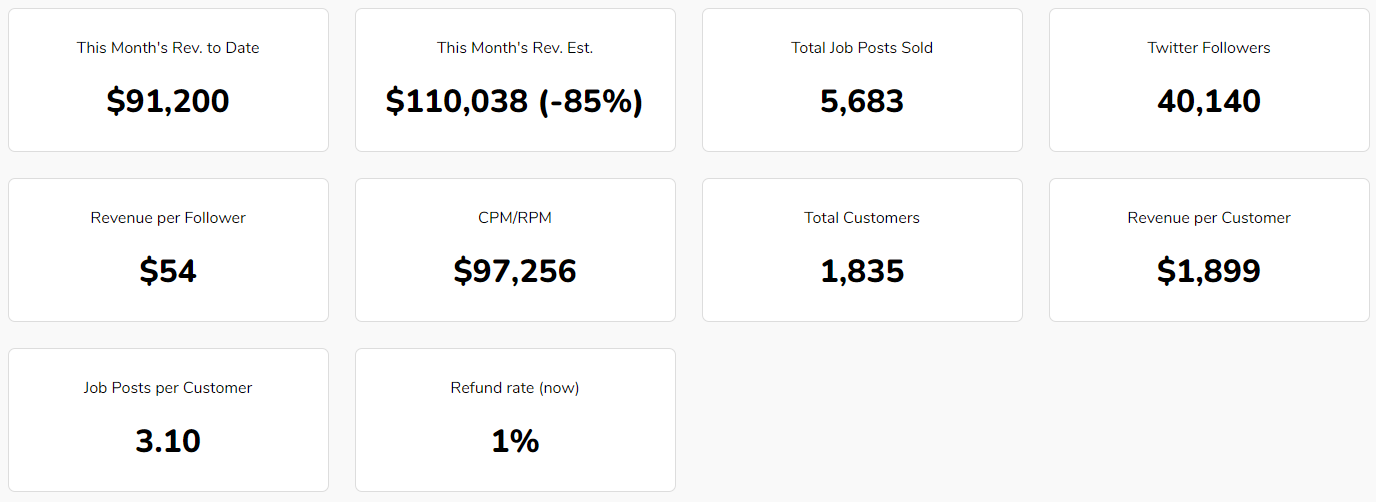

Examples include GiveWell’s wonderful open sites, EA Funds’ donations dashboard and project payouts reports, and OpenPhil’s grants database and principles of sharing detailed reports and project updates. Beyond EA, inspiration can be found in Buffer’s transparency journey, SimpleAnalytics, Remote OK, and Friendly, among others.

If you have previously created such a page, a comment on how much time you spent on it would be quite valuable.

Sharing a company’s normally secret metrics in an /open format allows for a better overview of how that organization is run, its ethics, and more.

The specific metrics tracked and shared differ widely from project to project, which is why the /open format lets you share whatever you believe best showcases your impact. /open can even be a simple text writeup of your current progress and why you think the project has substantial impact. A writeup is fine if your organization does not have the data capabilities to store and share impact metrics.

Some of the benefits of creating a /open page are:

- Many frequently asked questions about organizations can be answered quickly and in passing compared to the alternative deep dive or direct contact that will take up valuable time. Including a FAQ, roadmap or other organizational information can be very valuable here. Just try to keep it simple and visual so visitors don’t get overwhelmed with information.

- We end up with a better understanding of the actual metrics or considerations evaluated for impact internally at EA organizations and can apply scrutiny to these and their optimization.

- It creates a culture of transparency, which is useful when we try to figure out which actions we can take in this world are the most high-impact.

- It forces us to think about our key impact metrics and how we can measure them properly, resulting in good growth practices.

- It creates a public accountability effect for organizations to do the most good.

Criticism and responses

But if we focus on numbers, won’t we just end up improving the numbers instead of our impact?

Yes, and that is why we should choose good representative metrics. I recommend reading the book Lean Analytics (find relations to your own organization's processes in the linked summary). It is focused on for-profit organizations but has good insights into how we can choose good metrics. When metrics are well-chosen, the value of tracking them can be immense. Often, we will also showcase different metrics from the ones we measure internally, depending on what provides the best cursory overview of our impact.

There are no immediate data-based results in longtermist work (!)

This is a valid concern. I will argue that we should strive to figure out good ways to measure our work’s impact either way, e.g. citations, hypotheses tested, and other estimated qualitative metrics (very case-specific). But if that is not possible, an impact essay on a /open subpage can give immediate understanding of the organization’s value in a similar (albeit more qualitative) fashion.

Won’t this take away time from our actual work? We cannot dedicate resources to that.

Setting goals and tracking them can often help focus your work and ensure alignment with your goals. Additionally, impact evaluations are often done by external organizations that need deep insight into the organization being evaluated. These evaluations will probably take substantially longer if you do not keep track of your metrics or have open reporting. So all in all, it will probably save the community time in the end.

But we don’t have any data to showcase our results at the moment.

Not having data to show can arise in two cases: (1) you don’t have data yet, or (2) you are not tracking any data. Case (1) is fine: you can just do a writeup or focus on getting to a stage where you will have data. Case (2) is a bit more serious, since it implies that no metrics have been selected in the first place. This is probably a risk to understanding your actual impact and should be reevaluated. In some cases it is justifiable, but these cases are few and far between.

Won’t organizations just select the best metrics?

I hope we will see that data dashboards allow more scrutiny of which metrics are selected, discourage manipulation of them, and create a culture of learning between organizations on how to track impact and altruistic work. It also seems like update posts that justify funding are more subject to motivated reasoning than such public data dashboards, even though update posts are currently one of our best options.

We work with info hazards and publishing anything might be a risk.

If you believe publishing an impact dashboard is a bad idea because of info hazards, then don't do it. Often, though, it seems like these dashboards can be created with only productivity metrics and without sharing any info hazards, although this definitely makes them harder to design.

Example tools

As an exercise in probing how hard it would be to implement /open, we created this pre-alpha subpage for a cursory overview of which tools you can employ.

For data visualization, Google Data Studio seems quite capable and integrates easily with all Google data sources, but it can connect to other apps as well. These visualizations can be embedded easily as an iframe. By default, SimpleAnalytics provides visualization possibilities as well. You can also put screenshots of Excel or Google Sheets graphs on the page, and I imagine there are many other options.

For data collection, I recommend SimpleAnalytics for privacy, while Google Analytics is a standard and free choice. Otherwise, the main concern is to have tools that provide good options for integration through Zapier, Aurelia, NoCodeAPI, LeadsBridge, IFTTT, and others, so you can connect to Google Data Studio or another visualization tool without code. Good options for data tracking in digital banking (e.g. for funds) are Wise, Revolut, and PayPal, while many other banks have public APIs that can usually be connected through the integrations mentioned above.

Most other web apps can also provide you with a surprising amount of data through these integration tools. Check out the Zapier integrations list and see if your currently used web apps are in there.

And again, if you are not comfortable implementing these, writing out a simple updated statement and/or static visualizations of how your organization creates impact is all that is needed as a first step.
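To give a sense of how small that first step can be, here is a minimal sketch in Python that turns a short statement and a handful of metrics into a static HTML page you could upload as /open. It is only an illustration, not a tool we offer: the function name `render_open_page`, the organization name, and every metric and number below are placeholders I made up for the example.

```python
from datetime import date
from html import escape


def render_open_page(org_name, statement, metrics, updated):
    """Return a static HTML string for a simple /open page.

    metrics is a list of (label, value, note) tuples. All text is
    HTML-escaped so stray characters cannot break the page.
    """
    rows = "\n".join(
        "      <tr><td>{}</td><td>{}</td><td>{}</td></tr>".format(
            escape(str(label)), escape(str(value)), escape(str(note))
        )
        for label, value, note in metrics
    )
    return f"""<!DOCTYPE html>
<html lang="en">
  <head><meta charset="utf-8"><title>{escape(org_name)} /open</title></head>
  <body>
    <h1>{escape(org_name)}: open impact page</h1>
    <p>{escape(statement)}</p>
    <table>
      <tr><th>Metric</th><th>Value</th><th>Note</th></tr>
{rows}
    </table>
    <p>Last updated: {updated.isoformat()}</p>
  </body>
</html>
"""


if __name__ == "__main__":
    # Placeholder values for illustration only, not real data.
    page = render_open_page(
        org_name="Example Org",
        statement="One paragraph on how we think our work creates impact.",
        metrics=[
            ("Workshops run", 12, "placeholder"),
            ("Participants", 340, "placeholder"),
        ],
        updated=date(2022, 1, 1),
    )
    # Print the page; redirect the output to a file to publish it.
    print(page)
```

Running the script and redirecting its output to a file such as open.html gives you something you can publish as-is. Updating the page then means editing the statement and the metrics list and running it again.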

Post your /open pages and toolsets in the comments when you create them so we can learn from each other - good luck and have fun!

I agree that open sourcing data seems good to me, just to allow people to have an accurate view of the ecosystem. This only seems better as we grow.

I am curious what the sticking points are.

Glad you like it! I can imagine a few, but from the platforms I've heard from, the sticking points are usually the existing data infrastructure setup and the data/software capabilities needed to build it. I hope that emphasizing the value of an impact writeup mitigates this, however.

May I ask if you spoke to any org and heard their considerations?

It seems to me like "offering tools" for example - is not solving a real problem that orgs actually have. But you know better than me

Based on our previous conversations, I'm curious if you mean the /open page or aisafetyideas.com. For the /open; no, I have not talked with any major orgs about this and this is not a tool we will be offering. For aisafetyideas.com, the focus on that is the result of a lot of interviews and discussions with people in the field[1] :)

Though it is also not the only thing we are focusing on at the moment!

Ah,

I was talking about the current post, "/open"