Mart_Korz

Bio


Hey! I am Mart, I learned about EA a few years back through LessWrong. Currently, I am pursuing a PhD in the theory of quantum technologies and learning more about doing good better in the EA Ulm local group and the EA Math and Physics professional group.


Thanks! I somehow managed to miss their pledge when looking at their website.

It does seem to be somewhat different from e.g. the 10% pledge: At least according to the current formulation, it is not a public or lifetime pledge but rather a one-year commitment:

This coming year, I pledge to give [percentage field] of my income to organizations effectively helping people in extreme poverty.

As an approach, this sounds very reasonable for TLYCS. I imagine that a lifetime pledge that adjusts to one's income level is harder to resonate with emotionally and might feel like too large a commitment for many. And recommitting to the pledge yearly might make it easy for people to adjust their giving to their current income without needing to think about the maths.

Your table is very similar to the one Peter Singer presents close to the end of The Life You Can Save. I do like the mathematical clarity of your approach, and we now have an intuitive explanation for where the numbers come from - very nice!

Honestly, now that I write this, it seems strange that there is no pledge that matches this way of thinking. Your suggestion could be a nice complement to the existing 10% Pledge and Further Pledge, and it is a little surprising that I have not heard of people actively donating according to Singer's table. Maybe it is just a lot harder to explain than the other pledges, and this kind of defeats the point of a public pledge?

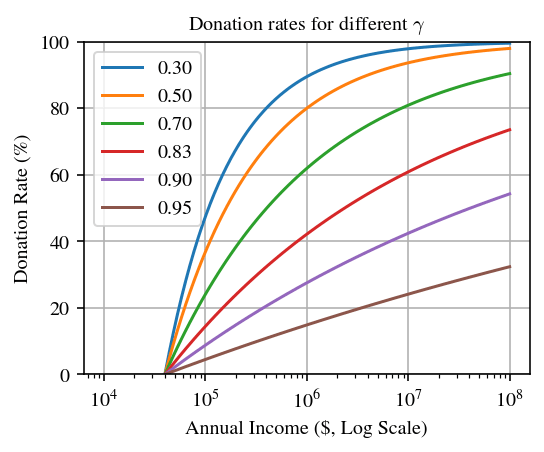

In case someone else wonders what the donation rate would look like for other choices of :

I think a pledge which puts the bar for zero donations at the US-median income [edit: oops, I got that wrong and it is not the median income value. I still think that my point is directionally right] is a little strange – even in the US, many pledgers would never reach non-zero pledged donations and this seems off for a pledge that has income-sensitivity as a central property. Intuitively, a softer rule like "in any case, aim for 1% donations unless you really need all income" or a different reference for the zero-donations bar would be more wholesome.

A very cool idea and nice implementation, thanks for sharing! I sympathize a lot with the idea and "we take responsibility to correct negative consequences of our actions where feasible" could be a good norm to coordinate around.

Some comments

I want the website to give confident, simple numbers so people don't have to think about the uncertainty ranges. I want them to be given a simple number with no uncertainty, and explain the uncertainty further into the site if they dig further.

I think this makes a lot of sense! It can be tricky to get the balance right and at the moment I think some formulations that try to emphasize clarity err in the direction of being too confident - but I fully understand that it is a lot of effort to do these things well.

Formulations on the website:

Inspired by effective altruism principles, we've identified the four areas where your money can do the most good—and calculated exactly how much it takes to balance your share.

The formulation "the four cause areas where your money can do the most good" seems mistaken? If I got this right, the reasoning is to effectively undo (offset) the harms associated with one's personal lifestyle for major areas individually. The effectiveness mostly enters when deciding on which org to donate to within the chosen cause area. Of course, to some degree, the cause areas are still chosen with effectiveness in mind but I think that a different formulation could capture the reasoning better. A suggestion might be

"Inspired by effective altruism principles, for each of the major areas where our lifestyle comes along with negative impact, we've identified where your money can do the most good—and calculated how much it takes to balance your share."

Offset your impact

I feel the formulation "undo/offset my impact" is a little unfortunate as, in my mind, impact mainly is related to intended and thus positive consequences. It takes a little extra concentration to realize that in this case I do want to get rid of the impact. On the other hand, I cannot find a similarly short alternative formulation. Maybe "rectify your impact" could work?

Hiring is also hard if you're progressively eliminating candidates across rounds, because you never can measure the candidates you rejected. The candidate pool is always biased by who you chose to advance already. This makes me feel like I'm never collecting particularly useful data on hiring in hiring rounds. I don't ever learn how good the people I rejected were!

Isn't this avoidable? I could imagine a system where you admit a small, randomly chosen percentage of "rejected" candidates to the next hiring round and, if they do well there, allow them into the third round. I have essentially no experience with how hiring works, but it seems to me that this would i) increase the effort that goes into hiring only moderately, ii) still seem reasonably fair to the candidates, and iii) give you some information on what your selection process actually selects for.
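To make the idea above a bit more concrete, here is a minimal Python sketch of one screening round. Everything in it is an assumption for illustration: the function name `select_for_next_round`, the `passed` callback standing in for the usual screening decision, and the `audit_fraction` parameter are all hypothetical, and a real process would need more care (for example, keeping reviewers blind to who was advanced at random).

```python
import math
import random


def select_for_next_round(candidates, passed, audit_fraction=0.1, seed=None):
    """Advance all candidates who pass, plus a random sample of those who did not.

    candidates     -- list of candidate identifiers (hypothetical)
    passed         -- function returning True if a candidate passes this round
    audit_fraction -- share of rejected candidates to advance anyway (assumed value)
    Returns (advanced, audit), where `audit` is the randomly advanced subset.
    """
    rng = random.Random(seed)
    accepted = [c for c in candidates if passed(c)]
    rejected = [c for c in candidates if not passed(c)]

    # Number of rejected candidates to advance at random, capped at the pool size.
    n_audit = min(len(rejected), math.ceil(audit_fraction * len(rejected)))
    audit = rng.sample(rejected, n_audit)

    return accepted + audit, audit


# Toy usage: 20 candidates, the screening rule passes those with id >= 12.
advanced, audit = select_for_next_round(
    list(range(20)), passed=lambda c: c >= 12, audit_fraction=0.25, seed=0
)
print(len(advanced), sorted(audit))
```

Comparing how the `audit` group performs in later rounds with how the regularly accepted group performs is what would give some signal about what the earlier round selects for.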

Holden Karnofsky has described his current thoughts on the topic of "how to help with longtermism/AI" on the 80,000 Hours podcast, and there are some important changes:

And then it’s like, what do you do to help? When I was writing my blog post series, “The most important century,” I freely admitted the lamest part was, so what do I do? I had this blog post called “Call to vigilance” instead of “Call to action” — because I was like, I don’t have any actions for you. You can follow the news and wait for something to happen, wait for something to do.

I think people got used to that. People in the AI safety community got used to the idea that the thing you do in AI safety is you either work on AI alignment — which at that time means you theorise, you try to be very conceptual; you don’t actually have AIs that are capable enough to be interesting in any way, so you’re solving a lot of theoretical problems, you’re coming up with research agendas someone could pursue, you’re torturously creating experiments that might sort of tell you something, but it’s just almost all conceptual work — or you’re raising awareness, or you’re community building, or you’re message spreading.

These are kind of the things you can do. In order to do them, you have to have a high tolerance for just going around doing stuff, and you don’t know if it’s working. You have to be kind of self-driven.

He goes on to clarify that today, he sees many ways to contribute that are much more straightforward:

[...]. So that’s the state we’ve been in for a long time, and I think a lot of people are really used to that, and they’re still assuming it’s that way. But it’s not that way. I think now if you work in AI, you can do a lot of work that looks much more like: you have a thing you’re trying to do, you have a boss, you’re at an organisation, the organisation is supporting the thing you’re trying to do, you’re going to try and do it. If it works, you’ll know it worked. If it doesn’t work, you’ll know it didn’t work. And you’re not just measuring success in whether you convinced other people to agree with you; you’re measuring success in whether you got some technical measure to work or something like that.

Then, from ~1:20:00 the topic continues with "Great things to do in technical AI safety"

This is an interesting idea, thanks for sharing!

While thinking about your suggestion a little, I learned about RUTF (peanut-based ready-to-eat meals used to treat child malnutrition), which appears to be rather well established. Going by the UNICEF 2024 numbers, they distributed enough RUTF to feed half a million people throughout the year if we go by calories.[1]

According to UNICEF:RUTF-price-data, a box of 150 meals (92 g each, which should correspond to ~500 kcal) costs them around $50 (using 2023 numbers and rounding up). Scaled to 2,000 kcal per day, i.e. four meals, this corresponds to about $1.33 per day for nutrition.
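For anyone who wants to check or vary the arithmetic, here is a small Python sketch. The input numbers are the rounded figures quoted above; the function and parameter names are made up for illustration.

```python
def rutf_cost_per_day(
    box_price_usd=50.0,      # price per box, 2023 figure rounded up (from the comment)
    meals_per_box=150,       # meals (92 g each) per box
    kcal_per_meal=500,       # approximate energy content of one meal
    target_kcal_per_day=2000,
):
    """Return the daily cost in USD of covering the calorie target with RUTF."""
    price_per_meal = box_price_usd / meals_per_box
    meals_per_day = target_kcal_per_day / kcal_per_meal
    return price_per_meal * meals_per_day


print(f"${rutf_cost_per_day():.2f} per day")  # prints "$1.33 per day"
```

Changing `target_kcal_per_day` or `box_price_usd` shows how sensitive the estimate is to these rough inputs.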

A few important aspects of RUTF differ from your suggestion (it is aimed at temporary treatment of malnutrition in children rather than general nutrition for all ages, the prices above are not consumer prices, the main ingredients differ, and RUTF avoids the need to add safe water, which might not be easily available), but it seems to me that this supports your cost estimate, at least for large-scale purchases.

I think that together with existing consumer brands for meal-replacement powders, RUTF could be a second great reference for a similar and established idea :)

[1] Of course, RUTF is used as a temporary treatment, so the amount corresponds to a potential of 5.1 million treated children according to the linked dashboard.

I did not downvote myself, but to me the comment from idea21 seems off-topic to the post itself, which is a (not very strong) negative.