tobyj


What are promising folk–elite interventions?

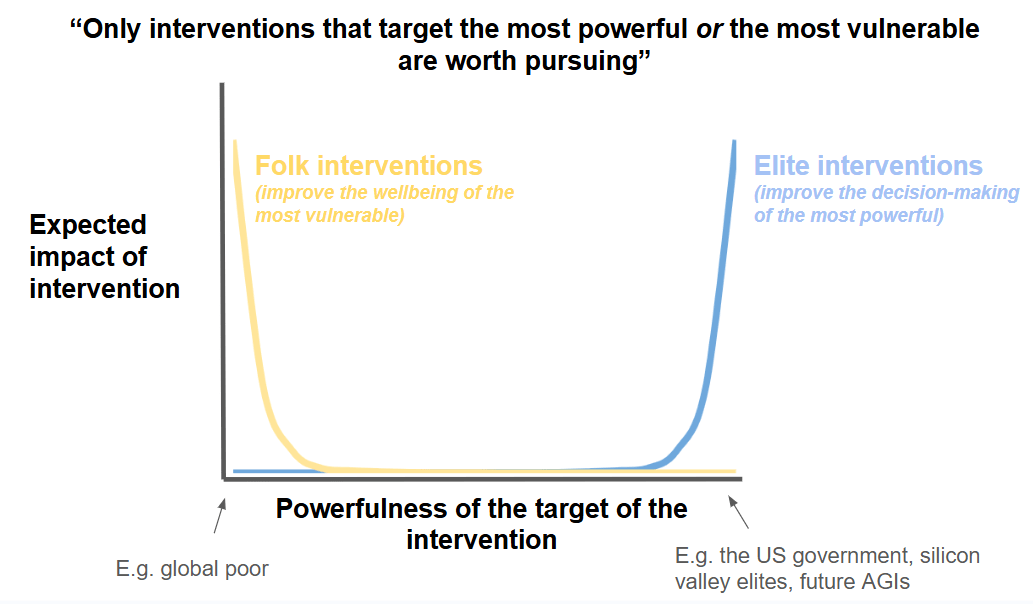

This distinction between "folk interventions" and "elite interventions" feels quite significant in EA spaces.

My instinct that hurt people hurt people, and that elites are often just the visible tip of wider cultural icebergs, makes me want to blur this binary.

I just wanted to comment to say I'm very confused about this question/framing - I tried to figure out why and I think it's something to do with uncertainty about what "objective" even means.

Wondering if anyone has a good exploration of what it means for a thing to be objective?

(My intuition is that I have a "sense of the objective" and that it is pointing at "things I anticipate other people will agree with", or "things I'd feel annoyed/crazy if people contradicted" - this would point to there being a thing that is objective morality, but it is argued from a subjective frame so I'm not sure that's right - so is there an objective definition of objectivity?)

I wanted to get some perspective on my life so I wrote my own obituary (in a few different ways).

They ended up being focussed on my relationship with ambition. The first is below and may feel relatable to some here!

Auto-obituary attempt one:

Thesis title: “The impact of the life of Toby Jolly”

a simulation study on a human connected to the early 21st century’s “Effective Altruism” movement

Submitted by:

Dxil Sind 0239β

for the degree of Doctor of Pre-Post-humanities

at Sopdet University

August 2542

Abstract

Many (>500,000,000) papers have been published on the Effective Altruism (EA) movement, its prominent members and their impact on the development of AI and the singularity during the 21st century’s time of perils. However, this is the first study of the life of Toby Jolly, a relatively obscure figure who was connected to the movement for many years. Through analysing the subject’s personal blog posts, self-referential tweets, and career history, I was able to generate a simulation centred on the life and mind of Toby. This simulation was run 100,000,000 times with a variety of parameters and the results were analysed. In the thesis I make the case that Toby Jolly had, through his work, a non-zero, positively-signed impact on the creation of our glorious post-human Emperium (Praise be to Xraglao the Great). My analysis of the simulation data suggests that his impact came via a combination of his junior operations work and minor policy projects, but also his experimental events and self-deprecating writing.

One unusual way he contributed was by consistently trying to draw attention to how his thoughts and actions were so often the product of his own absurd and misplaced sense of grandiosity; a delusion driven by what he himself would describe as a “desperate and insatiable need to matter”. This work marginally increased the self-awareness and psychological flexibility of the EA community. This flexibility subsequently improved the movement's ability to handle its minor role in the negotiations needed to broker power during the Grand Transition - thereby helping avoid catastrophe.

The outcomes of our simulations suggest that through his life and work Toby decreased the likelihood of a humanity-ending event by 0.0000000000024%. He is therefore responsible for an expected 18,600,000,000,000,000,000 quality adjusted experience years across the light-cone, before the heat-death of the universe (using typical FLOP standardisation). Toby mattered.

Ethics note: as per standard imperial research requirements, we asked the first 100 simulations of Toby if they were happy being simulated. In all cases, he said “Sure, I actually, kind of suspected it…look, I have this whole blog about it”

I wrote up my career review recently! Take a look

As a counter-opinion to the above, I would be fine with the use of GPT-4, or even paying a writer. The goal of most initial applications is to assess some of the skills and experience of the individual. As long as that information is accurate, then any system that turns that into a readable application (human or AI) seems fine, and more efficient seems better.

The information this loses is the way someone would communicate their skills and experience unassisted, but I'm skeptical that this is valuable in most jobs (and suspect it's better to test for these kinds of skills later in the process).

More generally, I'm doubtful of the value of any norms that are very hard to enforce and disadvantage scrupulous people (e.g. "don't use GPT-4" or "only spend x hours on this application").

Yeah - appreciate this is ambiguous - I was essentially asking for examples of interventions that blur this binary. This would include interventions closer to the middle of this graph (insofar as they seem genuinely connected to the more extremes). Flavours I was imagining: