Daniel_Friedrich

Bio

Participation 4

Exploring consciousness, AI alignment, moral psychology and how they interact in decision-making.

Always happy to chat!

Posts 11

Comments 44

Fortunately, the WWOTF link still works: https://whatweowethefuture.com/wp-content/uploads/2023/06/Climate-Change-Longtermism.pdf

Alternatively, it loads a little faster on Web Archive: https://web.archive.org/web/20250426191314/https://whatweowethefuture.com/wp-content/uploads/2023/06/Climate-Change-Longtermism.pdf

I disagree with your argument but agree there's quite a significant chance (e.g. 6.5%) that your thesis is correct: that consciousness has causal efficacy through quantum indeterminacy and that this might be helpful for alignment.

However, my take is that if the effects were very significant and similarly straightforward, they would be scientifically detectable even with very simple fun experiments like the "global consciousness project". It's hard to imagine "selection" among possibly infinite universes and planets and billions of years - but if you manage to do so, the "coincidences" that brought about life can be easily explained with the anthropic principle.

I see this as a more general lesson: People are often overconfident about a theory because they can't imagine an alternative. When it comes to consciousness, the whole debate comes down to the extent to which something that seems impossible to imagine reflects a failure of imagination vs. a failure of a theory. Personally, I give most weight to Russellian monism, but I definitely recommend leaving some room for reductionism, especially if you don't see how anyone could possibly believe it, as was the case for me before I deeply engaged with the reductionist literature.

But I'm glad whenever people aren't afraid to be public about weird ideas - someone should be trying this and I'm really curious whether e.g. Nirvanic AI finds anything.

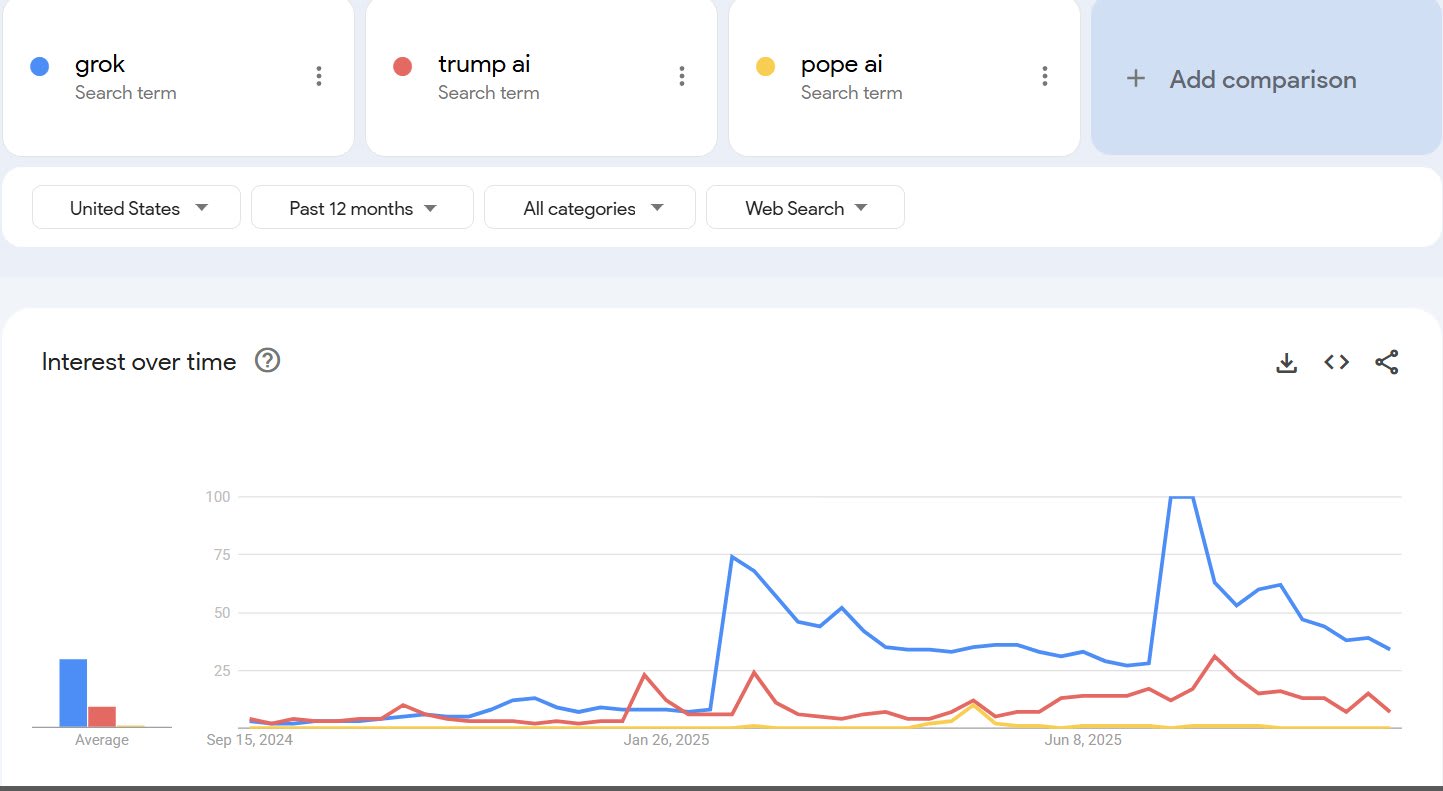

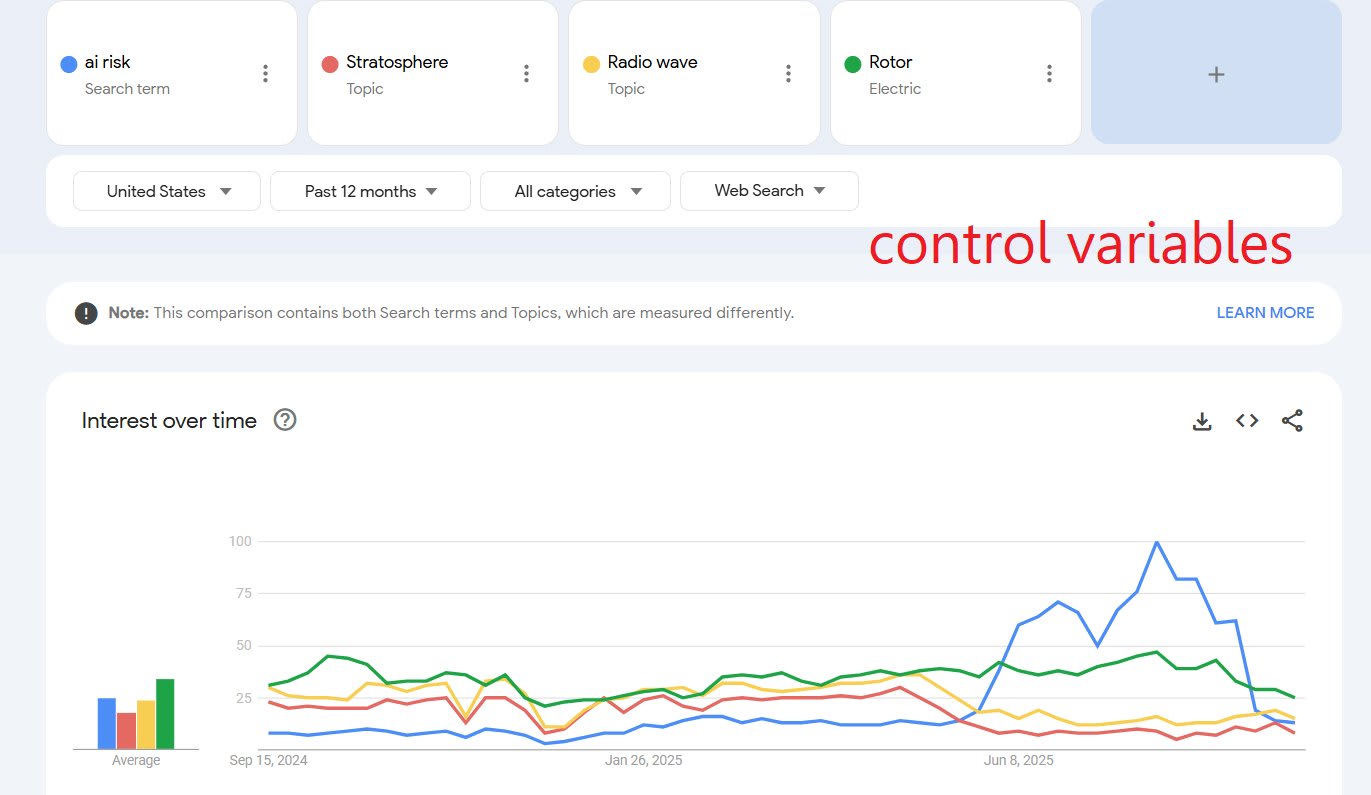

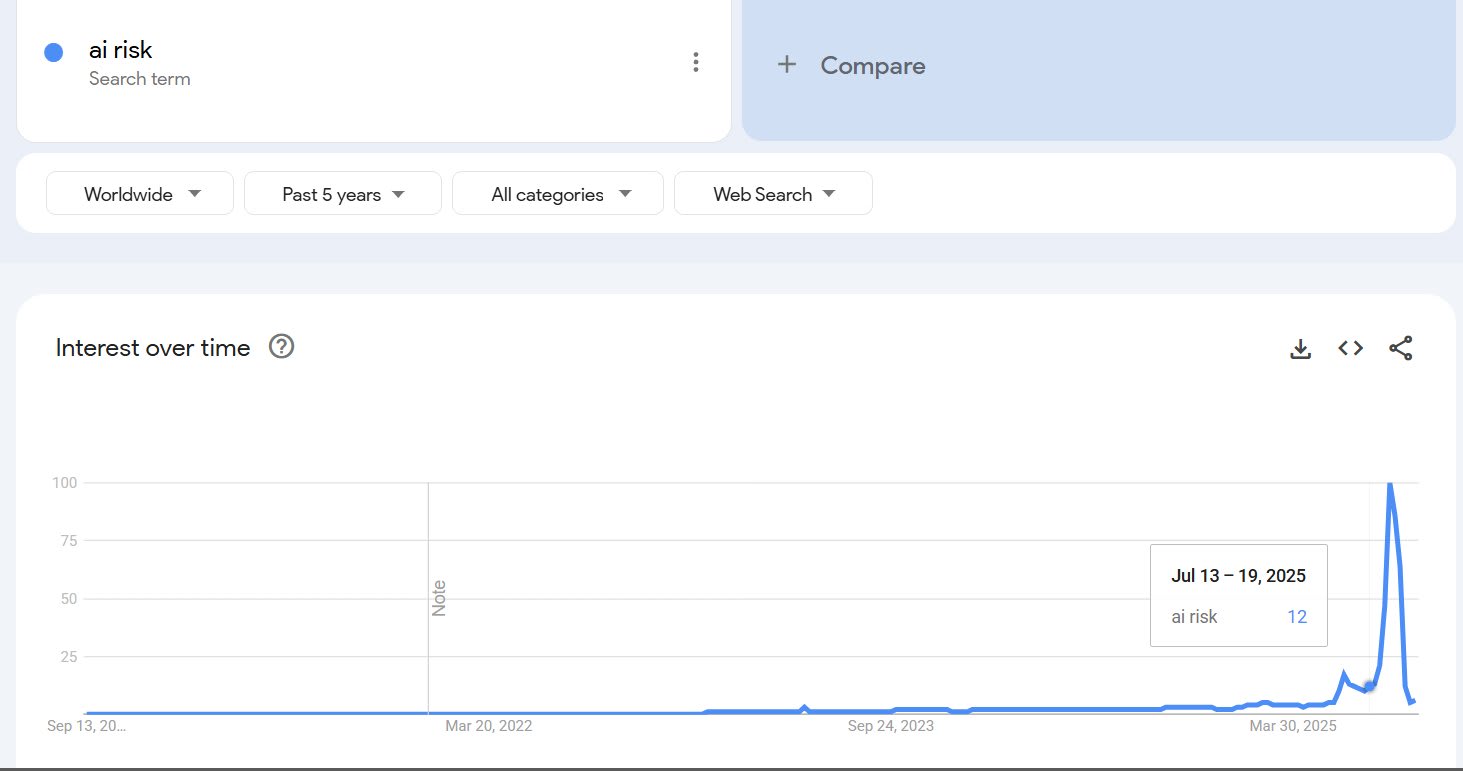

The MechaHitler incident seems to have worked as something of a warning shot: Google search interest in AI risk has reached an absolute all-time high. Trump's AI plan came out on the same day, but the comparison suggests Grok accounts for ~70% of the peak.

I can't quite dismiss the possibility that the interest was driven by new Chinese AI norms, because Chinese people have to use VPNs, so the geography isn't trustworthy. However, if this were true, I would expect that the number of searches for AI risk in Chinese on Google would be higher than roughly zero (link).

I think objective ordering does imply "one should", so I subscribe to moral realism. However, I've recently come to appreciate the importance of your insistence that the "should" part is kind of fake - i.e. it means something like "action X is objectively the best way to create the most value from the point of view of all moral patients", but it doesn't imply that an ASI that figures out what is morally valuable will be motivated to act on it.

(Naively, it seems like if morality is objective, there's basically a physical law formulated as "you should do actions with characteristics X". Then, it seems like a superintelligence that figures out all the physical laws internalizes "I should do X". I think this is wrong mainly because in human brains, that sentence deceptively seems to imply "I want to do X" (or perhaps "I want to want X"), whereas it actually means "Provided I want to create maximum value from an impartial perspective, I want to do X". In my own case, the kind of argument for optimism around AI doom that @Bentham's Bulldog advocated in Doom Debates seemed a bit more attractive before I truly spelled this out in my head.)

My impression is that CEA's goal is to fund the meta cause area and the main goal of local groups is to organize events. While funding is hard to democratize unless you convince some billionaire, democratizing the organizations that run events is trivial. [Edit: Also, while it makes sense to organize local events directly based on the local community's preferences / demand, I think it makes sense to take a more top-down (principles-oriented) approach when it comes to distributing funding, because the "demand side" here consists of every person on the planet who appreciates money.]

But now I realize that in my head, I equated CEA with OpenPhil's wing for the meta cause area, which might not be accurate. I also feel good about democratizing CEA if I imagine it implemented as an indirect democracy (i.e. with local organizations voting, instead of every EA member). This probably moves me towards the middle of the poll - i.e. I would be in favor of this kind of democracy. Indirect democracy would reduce the problem of uninformed voters, the problem of dealing with problems publicly and the problem of imbalance in the level of reflection between the average member and highly engaged members.

I can see two realistic models for the parallel organization, neither of which I'm a fan of:

1) A competitor to CEA. Just like CEA, this org would mainly fundraise and fund projects.

I think the problems with selecting members mentioned in this thread are overstated. Any political party faces the same problem. I suspect that in practice, strategically recruiting weakly engaged EAs just isn't a big problem. But it could be mitigated either by requiring members to meet any of the conditions you mentioned (fees, EA org employment, course certificate), or by setting a number of votes per region, e.g. based on similar indicators of the number of engaged members.

Personally, I'm sufficiently satisfied with the general CEA agenda that I suspect this would be a waste of effort. That's in part because I think the highly engaged EAs who dominate these orgs have more philosophically robust views and in part because I don't think this competitor organization would be able to raise more than 10% of CEA's budget (~80% of it comes from OpenPhil). So, given the main goal of funding projects, I don't think this org would be sufficiently better to be worth all the costs - and not just the costs inherent in the operations, but also the emotional costs of having these debates publicly and the costs of coordinating "who is willing to fund what", which I imagine might already be a nightmare.

2) A union. A soft counter-power to CEA.

If this org's only power were the ability to strike or produce resolutions, I'm concerned this would artificially inflate unproductive discord. My impression is that unions often produce irrational policies, perhaps because they only have quite extreme measures at their disposal, which creates an illusory "us vs. them" aesthetic for relationships that are overall very positive-sum.

However, I have some sympathy for the idea of

3) A community ambassador who would be democratically elected, e.g. by all EA Forum members, and whose job would be to facilitate communication between CEA and the community in both directions. I imagine someone at CEA might already effectively hold this job, so perhaps they would be interested in having their choice ratified by the community. Ideally, this community ambassador would collect people's concerns and attend CEA board meetings, in order to be able to integrate both perspectives.

However, I think the cost of this position is non-negligible. Given the power-law distribution of impact among people and the many rounds of tests that employees at EA organizations allegedly undergo, a democratic vote would probably yield a much less discerning choice (as most people wouldn't spend more than 30 minutes picking a candidate). I'm not sure to what extent the wisdom of the crowd might apply here.

Because of similar uncertainties and because I wouldn't count this as a "leadership role", I'm voting "moderately disagree".

I think the comparison in energy consumption is misleading because phones use unintuitively little energy: one charge takes about as much as 10 Google searches (Andy Masley has good articles on AI emissions), and using a smartphone for one year costs less than a dollar. I think a good heuristic is "if it's free, it uses so little energy that it's not worth considering".

If you're not paying to generate it, you're also not taking any income away from artists.

The argument that it's bad vibes for artists is a good one.

I'd like to see

- an overview of simple AI safety concepts and their easily explainable real-life demonstrations

- For instance, to explain sycophancy, I tend to mention the one random finding from this paper that hallucinations are more frequent if a model deems the user uneducated

- more empirical posts on near-term destabilization (concentration of power, super-persuasion bots, epistemic collapse)

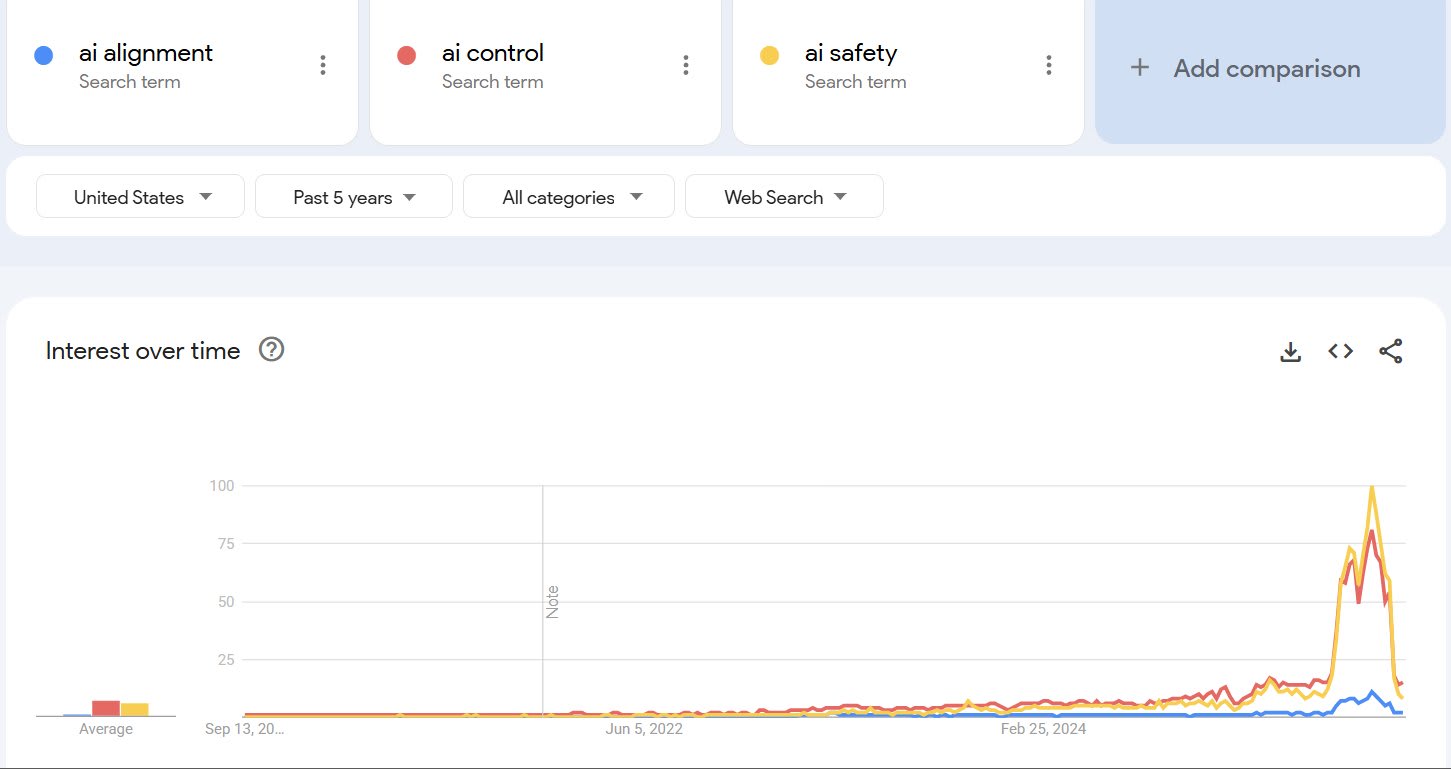

We have seen an order-of-magnitude increase in interest in AI alignment, according to Google Trends. Part of it (the July peak) can be attributed to Grok's behavior (see my little analysis). The YouTube channel AI in Context correctly identified this opportunity and swiftly released a viral video explaining how the incident connects to alignment. The September peak might be attributed to the release of If Anyone Builds It.