Elias Au-Yeung

Thanks for writing this. Some time ago, I also wrote on the possibility of microorganism suffering. Feel free to reach out if you'd like to discuss the topic :) From the intro:

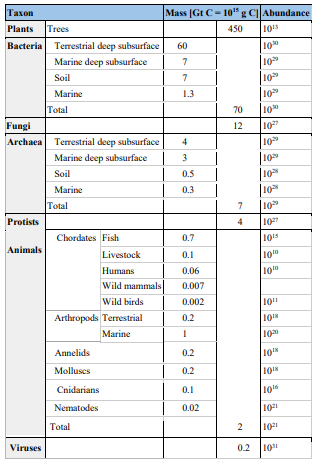

At any given moment, around 10^30 (one thousand billion billion billion) microorganisms exist on Earth.[2] Many have very short lives, resulting in massive numbers of deaths. Rough calculations suggest 10^27 to 10^29 deaths per hour on Earth.[3] Microorganisms display aversive reactions, escape responses, and/or physiological changes against various fitness-threatening phenomena: harmful chemicals, extreme temperatures, starvation, sun damage, mechanical damage, and predators and viruses.

I've updated somewhat from your response and will definitely dwell on those points :)

And glad you plan to read and think about microbes. :) Barring the (very complicated) nested minds issue, the microbe suffering problem is the most convincing reason for me to put some weight on near-term issues (although, as a disclaimer, I'm currently putting most of my efforts into longtermist interventions that improve the quality of the future).

FWIW, I think there are some complicating factors, which make me think some WAW interventions could be among my top 1-3 priorities.

Some factors:

- Maybe our being in short-lived simulations means that the gap between near-term and long-term interventions is smaller than expected.

- Maybe there are nested minds, which might complicate things?

- There may be some overlap between the digital minds issue you pointed out and WAW, e.g., simulated ecosystems which could contain a lot of suffering. It seems that a lot of people might more easily see "more complex" digital minds as sentient and deserving of moral consideration, but digital minds "only" as complex as insects wouldn't be perceived as such (which might be unfortunate if they can indeed suffer).

Also,

A while ago I wrote a post on the possibility of microorganism suffering. It was probably a bit too weird and didn't get too much attention -- but given sufficient uncertainty about philosophy of mind, the scope of the problem is potentially huge.[1] I kind of suspect this could really be one of the biggest near-term issues. To quote the piece, there are roughly "10^27 to 10^29 [microbe] deaths per hour on Earth" (~10 OOMs greater than the number of insects alive at any time, I believe).

The problem with possibilities like these is that they complicate the entire picture.

For instance, if microorganism suffering were the dominant source of suffering in the near term, then the near-term value of farm animal interventions would be dominated by how they change microbe suffering, which makes it a factor to consider when choosing between farm animal interventions.

I think it's less controversial to do some WAW interventions through indirect effects and/or by omission (e.g., changing the distribution of funding on different interventions that change the amount of microbe suffering in the near term). If there's the risk of people creating artificial ecosystems extraterrestrially/simulations in the medium term, then maybe advocacy of WAW would help discourage creating that wild animal suffering. And in addition to that, as Tomasik said, "actions that reduce possible plant/bacteria suffering [in a Tomasik sense of limiting NPP] are the same as those that reduce [wild] animal suffering"[2], which could suggest maintaining prioritization of WAW to potentially do good in this other area as well.

- ^

FYI, I don't consider this a "Pascal's mugging". It seems wrong for me to be very confident that microbes don't suffer, but at the same time think that other humans and non-human animals do, despite huge uncertainties due to being unable to take the perspectives of any other possible mind (problem of other minds).

- ^

To be clear, Tomasik gives less weight than I do to microbes: "In practice, it probably doesn't compete with the moral weight I give to animals". I think he and I would both agree that ultimately it's not a question that can be resolved, and that it's, in some sense, up to us to decide.

Regarding whether to focus on numbers or biomass, I think the following articles could be relevant:

Brian Tomasik's Is Brain Size Morally Relevant? explores arguments for brain-size/complexity weighting and for 'equality weighting' (equal consideration of systems). Despite the title, it's not just brain size that's discussed in the article but sometimes also mental complexity ("Note: In this piece, I unfortunately conflate "brain size" with "mental complexity" in a messy way. Some of the arguments I discuss apply primarily to size, while some apply primarily to complexity.").

The question of whether complexity matters is related to the intensity of experiences. Jason Schukraft's Differences in the Intensity of Valenced Experience across Species explores this and seems to conclude that for less familiar systems (well, animals -- I think only animals are explored in that piece) it's really unclear whether the "intensity range" is larger or smaller. They could potentially suffer more intensely. They could potentially suffer less intensely.

Another article by Brian Tomasik: Fuzzy, Nested Minds Problematize Utilitarian Aggregation raises the question of how to subset/divide physical reality into minds. Like Brian, the approach that's most intuitive for me is to "Sum over all sufficiently individuated "objects"" and count subsystems (e.g. suffering subroutines) too. Although, I think the question is still super confusing. It's also written in the article that "[p]erforming any exact computations seem intractable for now" although I don't know enough math to say.

I think it's ultimately all quite subjective though (which doesn't mean it's not important!). For me, I don't see how we can definitively show that some approach is correct. (Brian Tomasik describes this problem as a "moral question" and says there's "[n]o objective answer", but I feel like his language is slightly confusing. Rather, I think there is an answer regarding suffering/sentience, but it's just that we might not ever have the tools to know that we've reached it. In the absence of those tools, a lot of what we're doing when saying "there's more suffering here" or "there's more suffering there" might be, or might be comparable to, a discussion of morality.) We're also using a bunch of intuitions that were shaped by factors that don't necessarily guide us to truths about suffering/sentience (I explore this in the Factors affecting how we attribute suffering section in my recent microorganism post).

Bear with me, I can only reply piecemeal.

Sure, that's alright :)

If, as seems likely, scientists conclusively show that either brains or neural architecture are the necessary conditions for conscious experience, then that would heavily downgrade the likelihood that some alternative mechanism exists to provide bacteria with consciousness.

One of the things I mention in the post is that whenever we're looking at scientific findings, we're imposing certain standards on what counts as evidence. But it's actually not all that clear how we're supposed to construct these standards in the case of first-person experiences we don't have access to. Brains/neural architectures are categories we invent to put particular instances of "brains" and "neural architectures" in. They're useful in science and medicine, but that doesn't mean referring to those categories with those boundaries automatically tells us everything there is to know about conscious experience/suffering.

What we're really interested in is the category containing systems capable of suffering, and there are a number of different views on what sort of criteria identify elements in this category: some people follow criteria that suggest only other humans are similar enough to be capable of suffering[1], some people follow criteria that suggest mammals are also similar enough, some people follow criteria that suggest insects are also similar enough. These views have us decrease our threshold for acceptable similarity.[2] One next step might be to extend criteria from the cell-to-cell signaling of nervous systems to the intracellular signaling of microorganisms. If we're confident in/accept some of the other criteria, can we really rule out similar adjacent criteria? This seems difficult given how much uncertainty we have about our reasoning regarding consciousness and suffering.[3] We only need to assign some credence to the views that count some things we know of microbes as evidence of suffering (e.g., chemical-reactive movement, cellular stress responses, how microbes react to predators & associated mechanisms) in order to think that microorganism suffering is at least a possibility.

There's a lot of subjective judgment in this. And scientists can't escape it either. The evolution of human intuitions was guided by what's needed for social relationships etc., not fundamental truths about what is conscious/what can suffer. Scientists also impose their standards on what counts as evidence of consciousness/suffering -- but the standards are constructed with those intuitions! I discuss more in the section linked in the footnote.[3]

discover the mechanistic link between biochemistry and consciousness. It seems to me that this missing link is the main factor that leaves room for possible bacterial suffering.

(I actually hold a physicalist (monist) view. I don't think consciousness is something additional to physics. Dualism seems unlikely, mostly given how science hasn't found additional "consciousness stuff" over and above physical reality. My (very rough) approach to all this is that (some) physical processes (e.g. in the brain) can be said to be conscious experiences, under some interpretation/criteria. That said, I don't mention this stuff in the post, in order to be more theory-neutral (hence my describing things as physical 'evidence of suffering'), which is okay since I think the post doesn't really rely on physicalism being true.)

- ^

Similar enough to oneself -- the only system that we're absolutely sure can suffer

- ^

What about more "fundamental" differences? I respond to that in the Counterarguments subsection.

- ^

We already have a robust scientific understanding of the biochemical causes of bacterial behavior. Why would we posit that some form of cognitive processing of suffering is also involved in controlling their actions?

I think even if we have a robust scientific understanding of, e.g., the human brain, we would still think that human suffering exists. I don't think understanding the physical mechanisms behind a particular system means that it can't be associated with a first-person experience.

Endorsing a program of study purely on the basis of "important, if true" seems like it would also lead you to endorse studying things like astrology.

So I'm not looking at the topic only because it's "important, if true". As I explain in the piece, I think there are signs pointing toward "there's at least a small chance" based on reflecting on our concept of what we take to be evidence of suffering, and aspects of microbes that fit possible extended criteria.

I think there's a lot of scientific evidence against astrology. On the other hand, how we should attribute mental experiences to physical systems seems to be an open question because of the problem of (not being) other minds.

Since you're mainly taking the fact that bacteria "look like they might be suffering," it seems like you should also be concerned that nonliving structures are potentially suffering. Wouldn't it be painful to be as hot as a star for up to trillions of years?

I am actually concerned that there's some chance various nonliving structures do suffer. Digital minds are one example. I don't know much about astronomy/astrophysics, but I doubt that stars function in a way that strongly matches, e.g., the damage responses exhibited by animals. This isn't to say there's absolutely zero chance we can attribute negative experiences to such a system, but I think it's much less likely than for microorganisms, which better match the damage responses of animals. It might be valuable for someone to look into whether some astronomical objects match some interpretations of what counts as evidence of suffering, and to what extent. I think there's quite a lot of uncertainty surrounding questions in the philosophy of mind (e.g., some philosophers endorse panpsychism while others might be more skeptical about the sentience of non-human animals).

Higher-variance outcomes seem more likely with a U.S.-led future than with a China-led future.

This might be one reason to think that worst-case outcomes are more likely in a U.S.-led future.