FJehn

Bio

Participation 4

Hi, I’m Florian. I am enthusiastic about working on large-scale problems that require me to learn new skills and extend my knowledge into new fields and subtopics. My main interests are climate change, existential risks, feminism, history, hydrology and food security.

Posts 38

Comments 112

Thanks, appreciated!

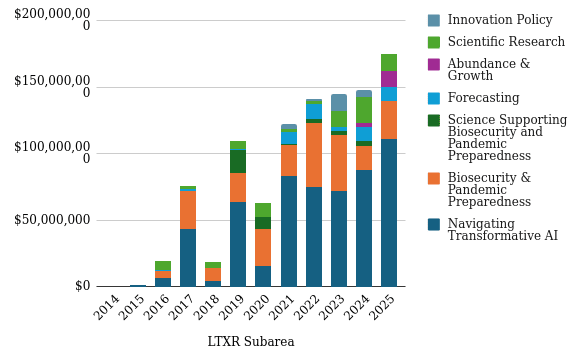

Putting your plot here in the comment, so others don't have to go through the spreadsheet:

What did you group into scientific research?

Curious to see this, because it does not match at all what seems to be happening in the broader GCR space. ALLFED had to downsize massively, CSER has also gotten smaller, GCRI has gotten smaller, and FHI has ceased to exist (though not explicitly due to funding). So how does this square with funding for non-AI work staying constant? Where is this funding going, if it clearly isn't ending up with the best-known GCR orgs?

Thanks for making this! Is there a more detailed breakdown of the longtermist category? I suspect there was also a massive shift within this category in the last few years: most money went to AI, some crumbs went to pandemics, and pretty much nothing went to the rest, whereas before this it was more balanced. I'd be curious to see whether this is true.

I had a similar experience. I recommended the podcast to dozens of people over the years, because it was one of the best for fascinating interviews with great guests on a very wide range of topics. However, since it switched to AI as its main topic, I have recommended it to zero people, and I don't expect this to change if the focus stays this way.

As I understand it, satellites can be disrupted in several ways:

- Their signal gets garbled, but they remain fine

- Their electronics get fried

- The increased drag in the atmosphere leads to them being de-orbited

What ultimately happens depends a lot on the orbit and how hardened the satellite is, but I haven't seen research that tries to assess this in detail (though I also haven't looked very hard for this particular question).

About the airplanes: yeah, this might be an option, though I think the paper that mentioned it said something along the lines of "it is quite hard to predict where along an airplane's path the radiation will increase, and they can receive the radiation quickly, which makes this hard to avoid".

Yeah, I share that worry. And from experience, it is really hard to get funding for nuclear work from both philanthropic and classic academic sources. My last grant proposal on nuclear topics was rejected with the explanation that we already know everything there is to know about nuclear winter, so there is no need to spend money on research there.

I meant specifically stating that you don't really fund global catastrophic risk work on climate change, ecological collapse, near-Earth objects (e.g., asteroids, comets), nuclear weapons, and supervolcanic eruptions. To my knowledge, such work has not been funded for several years now (please correct me if this is wrong). And since you mentioned that the status quo will continue, I don't really see a reason to expect the LTFF to start funding such work in the foreseeable future.

Thanks for wanting to check whether there is a difference between the public grants and the distribution of applications. I'd be curious to hear the results.

Thanks for the clarification. In that case, I think it would be helpful to state on the website that the LTFF won't be funding non-AI/biosecurity GCR work for the foreseeable future. Otherwise you will attract applications you would not fund anyway, resulting in unnecessary effort for both applicants and reviewers.

Good to know. Thanks.