lexande

Posts 1

Comments 43

In the short run it's possible that posting recommendations about whatever causes are currently getting mainstream media attention might attract more donations. But in the long run it's important that donors be able to trust that EA evaluators will make their donation recommendations honestly and transparently, even when that trades off with marketing to new donors. Prioritizing transparent analysis (even when it leads to conclusions that some donors might find off-putting) over advertising & broad donor appeal is a big part of the difference between EA and traditional charities like Oxfam.

Note that the page says

> Our financial year runs from 1st July to 30th June, i.e. FY 2024 is 1st July 2023 to 30th June 2024.

so the "YTD 2024" numbers are for almost eight months, not two, and accordingly it looks like FY 2024 will have similar total revenue to FY 2023 (and substantially less than FY 2021 and FY 2022).

I mostly meant the fact that it's currently restricted to Germany, though also to some extent the focus on interventions that fit into currently-popular anti-AfD narratives over other sorts of governance-improvement or policy-advocacy interventions (without clear justification as to why you believe the former will be more effective).

My objection is not primarily to what Effektiv-Spenden itself published but to the motivation that Sebastian Schienle articulated in the comment I was replying to. As I said, there are potentially good reasons to publish such research; I just think "trying to appeal to people who don't currently care about global effectiveness and hoping to redirect them later" is not one of them.

(I think ideally Effektiv-Spenden would do more to distinguish this from other cause areas; "beta" seems like an understatement. But I wouldn't ordinarily criticize such web design decisions if there weren't people here in the comments explicitly saying they were motivated by manipulative marketing considerations.)

As I noted in my first comment, I think this sort of "bait and switch"-like advertising approach risks undermining the key strengths of EA and should generally be avoided. EA's comparative advantage is in being analytically correct, and so we should tell people what we believe and why, not flatter their prejudices in the hopes that "we can then guide to the place where money goes furthest". I can see other potential benefits to Effektiv-Spenden or other EAs researching the effectiveness of pro-democracy interventions in Germany, but optimizing for that sort of "gateway drug" effect seems likely to be net harmful.

I think it's important that EA analysis not start with its bottom line already written. In some situations the most effective altruistic interventions (with a given set of resources) will have partisan political valence and we need to remain open to those possibilities; they're usually not particularly neglected or tractable but occasional high-leverage opportunities can arise. I'm very skeptical of Effektiv-Spenden's new fund because it arbitrarily limits its possible conclusions to such a narrow space, but limiting one's conclusions to exclude that space would be the same sort of mistake.

The focus on a particular country would make sense in the context of career or voting advice but seems very strange in the context of donations, since money is mostly internationally fungible (and it's unlikely that Germany is currently the place where money goes furthest towards the goal of defending democracy). The limited focus might make strategic sense if you thought of this as something like an advertising campaign trying to capitalize on current media attention and then eventually divert the additional donors to less arbitrarily circumscribed cause areas (as suggested by your third bullet point). But I think that relating to donors as customers to advertise to, rather than as fellow participants in collaborative truth-seeking, risks undermining confidence in Effektiv Spenden and the principles that make EA work.

How would you handle it if your analysis reached the conclusion that the most effective pro-democracy intervention were donating to a particular political party or other not-fully-tax-deductible group? I'm not familiar with the details of German charity law but I would worry that recommending such donations might jeopardize Effektiv Spenden's own tax-deductible status, while excluding such groups (which seem more likely to be relevant here than for other cause areas) from consideration would further undermine the principle of transparently giving donors the advice that most effectively furthers their goals.

If you're in charge of investing decisions for a pension fund or sovereign wealth fund or similar, you likely can't personally derive any benefit from having the fund sell off its bonds and other long-term assets now. You might do this in your personal account but the impact will be small.

For government bonds in particular it also seems relevant that most are, I think, held by entities that are effectively required to hold them for some reason (e.g. bank capital requirements, pension fund regulations) or are otherwise oddly insensitive to their low ROI compared to alternatives. See also the "equity premium puzzle".

Beyond just taking vacation days, if you're a bond trader who believes in a very high chance of xrisk in the next five years it might make sense to quit your job and fund your consumption out of your retirement savings. At which point you aren't a bond trader anymore and your beliefs no longer have much impact on bond prices.

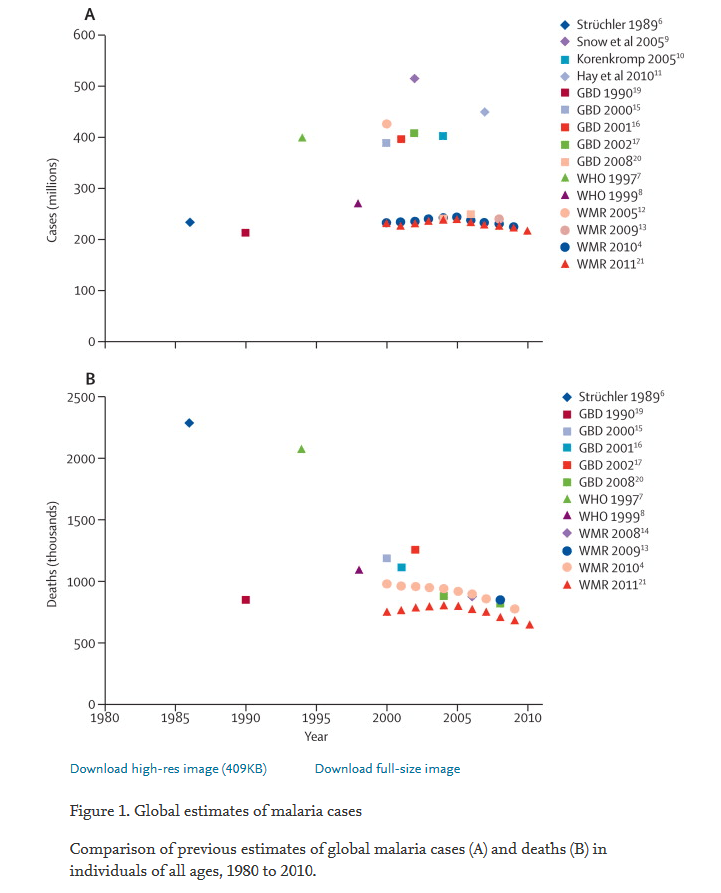

Note that those graphs of malaria cases and malaria deaths by year effectively have pretty wide error bars, with different sources disagreeing by a lot:

(source)

Presumably measurement methodology has improved some since 2010 but the above still suggests that the underlying reality is difficult enough to measure that one should not be too confident in a "malaria deaths have flatlined since 2015" narrative. But of course this supports your overall point regarding how much uncertainty there is about everything in this sort of context.