Michaël Trazzi


I understand how Scott Wiener can be considered an "AI Safety champion". However, the title you chose feels a bit too personality-culty to me.

I think the forum would benefit from more neutral post titles such as "Consider donating to congressional candidate Scott Wiener", or even "Reasons to donate to congressional candidate Scott Wiener".

Hi Alice, thanks for the datapoint. It's useful to know you have been a LessWrong user for a long time.

I agree with your overall point that the people we want to reach would be on platforms that have a higher signal-to-noise ratio.

Here are some reasons for why I think it might still make sense to post short-form (not trying to convince you, I just think these arguments are worth mentioning for anyone reading this):

- Even if there are more people we want to reach who watch long-form vs. short-form (or even who read LessWrong), what actually matters is whether short-form content is neglected, and whether the people who watch short-form would also end up watching long-form anyway. I think there's a case for it being neglected, but I agree that a lot of potentially impactful people who watch TikTok probably also watch Youtube.

- The super-agentic people who have developed substantial "cog sec" and manage to not look at any social media at all would probably only be reachable via LessWrong / arXiv papers, which is an argument that undermines most AI Safety comms, not just short-form. To that I'd say:

- I remember Dwarkesh saying somewhere that 30% of his podcast growth comes from short-form. This hints at short-form bringing in potential long-form viewers / listeners, and those Dwarkesh listeners are people we'd want to reach.

- Youtube pushes aggressively for short-form. And for platforms like Instagram it's even harder to ignore.

- It's possible to not use Instagram at all, and disable short-form recommendations on Youtube, but every time you add a "cog sec" criterion you're filtering out even more people. (A substantial amount of my short-form views come from posting on YT Shorts, and I'm planning to extend to Instagram soon).

- Similarly to what @Cameron Holmes argues below, broad public awareness is also a nice externality, not just getting more AI Safety talent.

- You could imagine reaching people indirectly (think your friend who does watch short-form content talks to you about what they've learned at lunch).

- When I actually look at the data of what kind of viewers watch my short-form content, it's essentially older people (> 24yo, even >34yo) from high-income countries like the US. It's surprisingly not younger people (who you might expect to have shorter attention span / be less agentic).

Thanks! Just want to add some counterpoints and disclaimers to that:

- 1. I want to flag that although I've filmed & edited ~20 short-form clips in the past (e.g. from June 2022 to July 2025) around things like AI Policy and protests, most of the content I've recently been posting has just been clips from other interviews. So I think it would also be unfair to compare my clips and original content (both short-form and long-form), which is why I wrote this post. (I started doing this because I ran out of footage to edit short-form videos as I was trying to publish one TikTok a day, and these clips eventually reached way more people than what I was doing before, so I transitioned to doing that).

- 2. regarding comparing to high-production videos: I don't want to come across as saying we shouldn't compare work of different length or using different budgets. I think Marcus and Austin's attempt is honorable. Also, being able to correctly use a large budget to make a high-production video that reaches as many people as many lower budget videos requires a lot of skill, though once you have that level of skill then the amount of time you spend on a video to make it really good ends up leading to exponential results in views (if you make something that is 10% better, Youtube will push it much more than 10% more).

Glad you're working with some of the people I recommended to you, I'm very proud of that SB-1047 documentary team.

I would add to the list Suzy Shepherd who made Writing Doom. I believe she will relatively soon be starting another film. I wrote more about her work here.

For context, you asked me for data for something you were planning (at the time) to publish day-of. There's no way to get the watchtime easily on TikTok (which is why I had to add things up manually on a computer) and I was not on my laptop, so I couldn't do it when you messaged me. You didn't follow up to clarify that watchtime was actually the key metric in your system and that you actually needed that number.

Good to know that the 50 people were 4 Safety people and 46 people who hang at Mox and Taco Tuesday. I understand you're trying to reach the MIT-graduate working in AI who might somehow transition to AI Safety work at a lab / constellation. I know that Dwarkesh & Nathan are quite popular with that crowd, and I have a lot of respect for what Aric (& co) did, so the data you collected make a lot of sense to me. I think I can start to understand why you gave a lower score to Rational Animations or other stuff like AIRN.

I'm now modeling you as trying to answer something like "how do we cost-effectively feed AI Safety ideas to the kind of people who walk in at Taco Tuesday, who have the potential to be good AI Safety researchers". Given that, I can now understand better how you ended up giving some higher score to Cognitive Revolution and Robert Miles.

- 1) Feel free to use $26k. My main issue was that you didn't ask me for my viewer minutes for TikTok (EDIT: didn't follow up to make sure I gave you the viewer minutes for TikTok) and instead used a number that is off by a factor of 10. Please use a correct number in future analysis. For June 15 - Sep 10, that's 4,150,000 minutes, meaning a VM/$ of 160 instead of 18 (details here).

- A) Your screenshots of Google Sheets say "FLI podcast", but you ran your script on the entire channel. And you say that the budget is $500k. Can you confirm what you're trying to measure here? The entire video work of FLI? Just the podcast? If you're trying to get the entire channel, is the budget really $500k for the entire thing? I'm confused.

- B) If you use accurate numbers for some things and estimates for others, I'd make sure to communicate explicitly about which ones are which. Even then, when you compare estimates and real numbers there's a risk that your estimates are off by a huge factor (as happened with my TikTok numbers), which makes me question the value of the comparisons.

- C) Let me try to be clearer regarding paid advertising:

- If some of the watchtime estimates you got from people are (views * 33% of length), and they pay $X per view (fixed cost of ads on youtube), then the VM/$ will be: [nb_views * (33% length) / total_cost] = [ nb_views * 33% length] / [nb_views * X] = [33% length / X]. Which is why I mean it's basically the cost of ads. (Note: I didn't include the organic views above because I'm assuming they're negligible compared to the inorganic ones. If you want me to give examples of videos where I see mostly inorganic views, I'll send you by DM).

- For the cases where you got the actual watchtime numbers instead of multiplying the length by a constant or using a script (say, someone tells you they have Y amount of hours total on their channel), or the ads lead to real organic views, your reasoning around ads makes sense, though I'd still argue that in terms of impact the engagement is low / pretty disastrous in some cases, and does not translate to things we care about (like people taking action).

- 3. I think the questions "who is your favourite AI safety creator" or "Which AI safety YouTubers did/do you watch" are heavily biased towards Robert Miles, as he is (and has basically been for the past 8 years) the only "AI Safety Youtuber" (like making purely talking head videos about AI Safety, in comparison, RA is a team). So I think based on these questions it's quite likely he'd be mentioned, though I agree 50 people saying his name first is important data that needs to be taken into account.

- That said, I'm trying to wrap my head around how to go from your definition of "quality of audience" to "Robert Miles was chosen by 50 different people to be their favorite youtuber, as the first person mentioned". My interpretation is that you're saying: 1) you've spoken to 50 people who are people who work in AI Safety 2) they all mentioned Rob as the canonical Youtuber, so "therefore" A) Rob has the highest quality audience? (cf. you wrote in OP "This led me to make the “audience quality” category and rate his audience much higher.")

- My model for how this claim could be true is that 1) you asked 50 people who you all thought were "high quality" audience 2) they all mentioned Rob and nobody else (or rarely anybody else), so 3) you inferred "high quality audience => watches Rob" and therefore 4) also inferred "watches Rob => high quality"?

- 4. Regarding weights, also respectfully, I did indeed look at them individually. You can check my analysis for what I think the TikTok individual weights should be here. For Youtube see here. Regarding your points:

- I have posted in my analysis of tiktok a bunch of datapoints that you probably don't have about the fact that my audience is mostly older high-income people from richer countries, which is unusually good for TikTok. Which is why I put 3 instead of your 2.

- "you're just posting clips of other podcasts and such and this just doesn't do a great job of getting a message across" -> the clips that end up making the majority of viewer minutes are actually quite high fidelity since they're quite long (2-4m long) and get the message across more crisply than the average podcast minute. Anyway, once you look at my TikTok analysis you'll see that I ended up dividing everything by 2 so that the max-fidelity TikTok has 0.5 (same as Cognitive Revolution), which means my number is Qf=0.45 at the end (instead of your 0.1), just to be coherent with the rest of your numbers.

- Qm: that's subjective but FWIW I myself only align to 0.75 to my TikTok and not 1 (see analysis)

- "Again, most of the quality factor is being done by audience quality and yes, shorts just have a far lower audience quality." --> again, respectfully, from looking at your tables I think this is false. You rank the fidelity of TikTok as 0.1, which is 5x less than 4 other channels. No other channel except my content (TikTok & YT) has less than 0.3. In comparison, if you forget about Rob's row, the audience quality varies only by 3x between my Qa for TikTok and the rest. So no, the quality factor is not mainly done by audience quality.
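To make the arithmetic in points 1 and C above concrete, here is a minimal Python sketch. Only the 4,150,000 viewer minutes and the $26k budget come from this comment; the function names, the 10-minute video length and the $0.05 price per paid view are hypothetical values chosen purely for illustration. It assumes, as in point C, that watchtime is estimated as views * 33% of length and that organic views are negligible.

```python
import math


def viewer_minutes_per_dollar(viewer_minutes: float, cost_usd: float) -> float:
    """VM/$: total viewer minutes divided by total spend."""
    return viewer_minutes / cost_usd


def ad_driven_vm_per_dollar(n_views: int, length_min: float,
                            cost_per_view_usd: float,
                            watch_fraction: float = 0.33) -> float:
    """VM/$ when watchtime is *estimated* as views * watch_fraction * length
    and every view is bought at a fixed price (organic views ignored)."""
    estimated_minutes = n_views * watch_fraction * length_min
    total_cost = n_views * cost_per_view_usd
    return estimated_minutes / total_cost


# Point 1: TikTok, June 15 - Sep 10 (numbers from the comment).
tiktok_vm_per_dollar = viewer_minutes_per_dollar(4_150_000, 26_000)
print(round(tiktok_vm_per_dollar))  # 160

# Point C: hypothetical 10-minute video at a hypothetical $0.05 per paid view.
small_campaign = ad_driven_vm_per_dollar(1_000, 10.0, 0.05)
large_campaign = ad_driven_vm_per_dollar(1_000_000, 10.0, 0.05)

# The number of views cancels out: VM/$ only depends on length and ad price.
print(math.isclose(small_campaign, large_campaign))  # True
```

Under these assumptions, buying more views does not change the estimated VM/$ at all, which is the sense in which the metric "is basically the cost of ads".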

Agreed about the need to include Suzy Shepherd and Siliconversations.

Before Marcus messaged me I was in the process of filling in another Google Sheet (link) to measure the impact of content creators (which I sent him), which also had three key criteria (production value, usefulness of audience, accuracy).

I think Suzy & Siliconversations are great examples of effectiveness because:

- I think Suzy did her film for really cheap (less than $20k). Probably if you included her time you'd get a larger amount, but in terms of actual $ spent and the impact it got (400k views on longform content) I think it's pretty great, and I think quite educational. In particular, it provides another angle to how to explain things, through some original content. In comparison, a lot of the AI 2027 content has been like amplifying an idea that was already in the world and covered by a bunch of people. Not sure how to compare both things but it's worth noting they're different.

- Siliconversations is I think an even more powerful example, and one of the reasons why I wanted to make that Google Sheet. After talking to folks at ControlAI, his videos about emailing representatives led to many more emails sent to representatives than another ControlAI x Rational Animations collaboration, even though RA is a much bigger channel than Siliconversations (and especially at the time Siliconversations first posted his video). The ratio of CTAs per view is at least 2.5% given the 2000 emails from 80k views (source).

The thing I wanted to measure (which I think is probably quite a bit harder than just estimating things with weights then multiplying by minutes of watchtime) is "what kind of content leads more people to take action like Siliconversations", and I'm not sure how to measure that unless everyone had CTAs that they tracked and we could compare the ratios.
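If creators did track their CTAs, the comparison could be as simple as the sketch below. The function names and the dictionary layout are my own assumptions for illustration; the only real data point is the Siliconversations one (2000 emails from 80k views), and other channels would need to supply their own tracked numbers.

```python
def cta_rate(actions: int, views: int) -> float:
    """Fraction of views that led to a tracked action (e.g. an email sent)."""
    if views <= 0:
        raise ValueError("views must be positive")
    return actions / views


def rank_by_cta_rate(channels: dict[str, tuple[int, int]]) -> list[tuple[str, float]]:
    """Sort channels by CTA rate, highest first.

    `channels` maps a channel name to (tracked_actions, views).
    """
    rates = {name: cta_rate(actions, views)
             for name, (actions, views) in channels.items()}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Only data point available in this thread: 2000 emails from 80k views.
tracked = {"Siliconversations": (2_000, 80_000)}
ranking = rank_by_cta_rate(tracked)
print(f"{ranking[0][0]}: {ranking[0][1]:.1%}")  # Siliconversations: 2.5%
```

This only captures actions that are explicitly tracked, so it would miss the harder-to-measure downstream effects of views.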

The reason I think Siliconversations' video led to so many emails was that he was actually relentless in this video about sending emails, and that was the entire point of the video, instead of talking about AI risk in general and having a link in the comments.

I think this is also why that RA x ControlAI collab got fewer emails, but it also got way more views, which potentially in the future will lead to a bunch of people doing a lot of useful things in the world, though that's hard to measure.

I know that 80k's AI In Context has a full section at the end on "What to do" saying to look at the links in the description. Maybe Chana Messinger has data on how many people clicked or how much traffic was redirected from YT to 80k.

Update: after looking at Marcus' weights, I ended up dividing all the intermediary values of Qf I had by 2, so that it matches with Marcus' weights where Cognitive Revolution = 0.5. Dividing by 2 caps the best tiktok-minute to the average Cognitive Revolution minute. Neel was correct to claim that 0.9 was way too high.

===

My model is that most of the viewer minutes come from people who watch the whole thing, and some decent fraction end up following, which means they'll end up engaging more with AI-Safety-related content in the future as I post more.

Looking at my most viewed TikTok:

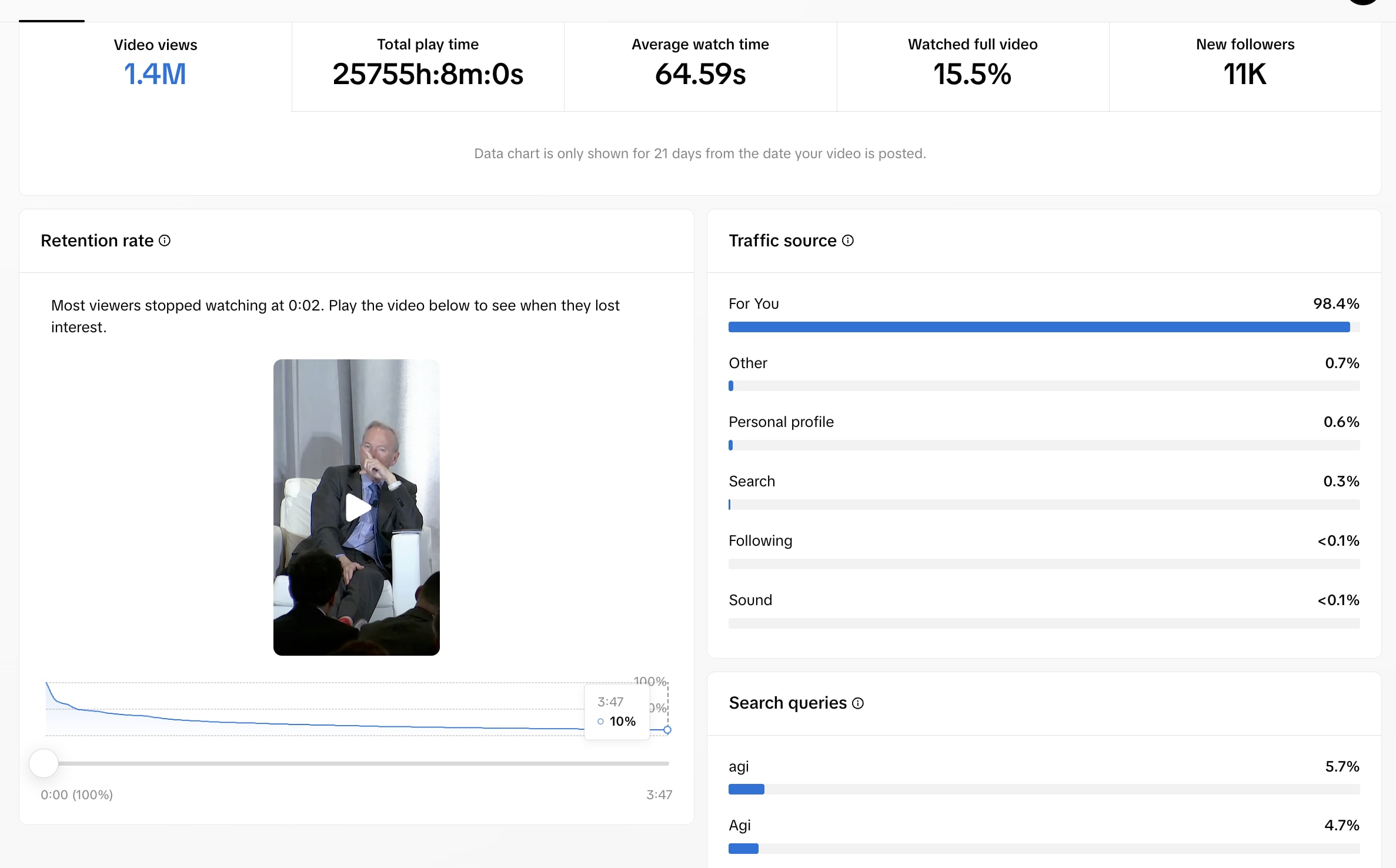

TikTok says 15.5% of viewers (aka 0.155 * 1400000 = 217000) watched the entire thing, and most people who watch the first half end up watching until the end (retention is 18% at half point, and 10% at the end).

And then assuming the 11k who followed came from those 217000 who watched the whole thing, we can say that's 11000/217000 = 5% of the people who finished the video that end up deciding to see more stuff like that in the future.

So yes: if a significant fraction (15.5%) watch the full thing, and 0.155 * 0.05 ≈ 0.8% of the total end up following, I'd say that's "engaging properly".
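For anyone who wants to re-run the funnel numbers, here is a small Python sketch. The three inputs are the figures quoted above for my most viewed TikTok; the variable names are mine, and the key assumption (also stated above) is that all 11k new followers came from people who finished the video.

```python
total_views = 1_400_000      # views on the most viewed TikTok
completion_rate = 0.155      # share of viewers who watched the entire video
new_followers = 11_000       # follows attributed to this video

# Viewers who watched the whole thing.
completed_views = total_views * completion_rate

# Assumption: every new follower is someone who finished the video.
follow_rate_among_completers = new_followers / completed_views
follow_rate_overall = new_followers / total_views

print(round(completed_views))                   # 217000
print(f"{follow_rate_among_completers:.1%}")    # 5.1%
print(f"{follow_rate_overall:.1%}")             # 0.8%
```

If some followers did not finish the video, the follow rate among completers would be lower than this, while the overall rate would stay the same.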

And most importantly, most of the viewer-minutes on TikTok do come from these long videos that are 1-4 minutes long (especially ones that are > 2 minutes long):

- The short / low-fidelity takes that are 10-20s long don't get picked up by the new TikTok algorithm and don't get many views, so they didn't end up in that "TikTok Qa & Qf" sheet of top 10 videos (and the ones that did didn't really contribute to the total minutes, and so to the final Qf).

- To show that the Eric Schmidt example above is not cherry-picked, here is a Google Doc with similar screenshots of stats for the top 10 videos that I use to compute Qf. Of these 10 videos, 6 are more than 1 minute long, and 4 are more than 2 minutes long. The precise distribution is:

- 0m-1m: 4 videos

- 1m-2m: 2 videos

- 2m-3m: 2 videos

- 3m-4m: 2 videos

Happy for others to come up with different numbers / models for this, or play with my model through the "TikTok Qa & Qf" sheet here, using different intermediary numbers.

Update: as I said at the top, I was actually wrong to have initially said Qf=0.9 given the other values. I now claim that Qf should be closer to 0.45. Neel was right to make that comment.

Wouldn't investors fire Dario and replace him with someone who would maximize profits?

Note: My understanding is that, as of November 2024, the Long-Term Benefit Trust controls 3 of 5 board seats, so investors alone cannot fire him. However, a supermajority of voting shareholders could potentially amend the Trust structure first, then replace the board and fire him.