All of Michaël Trazzi's Comments + Replies

I understand how Scott Wiener can be considered an "AI Safety champion". However, the title you chose feels a bit too personality-culty to me.

I think the forum would benefit from more neutral post titles such as "Consider donating to congressional candidate Scott Wiener", or even "Reasons to donate to congressional candidate Scott Wiener".

Hi Alice, thanks for the datapoint. It's useful to know you have been a LessWrong user for a long time.

I agree with your overall point that the people we want to reach would be on platforms that have a higher signal-to-noise ratio.

Here are some reasons why I think it might still make sense to post short-form (not trying to convince you, I just think these arguments are worth mentioning for anyone reading this):

- Even if there are more people we want to reach who watch longform vs. short-form (or even who read LessWrong), what actually matters is whether sh

Thanks! Just want to add some counterpoints and disclaimers to that:

- 1. I want to flag that although I've filmed & edited ~20 short-form clips in the past (e.g. from June 2022 to July 2025) around things like AI Policy and protests, most of the content I've recently been posting has just been clips from other interviews. So I think it would also be unfair to compare my clips and original content (both short-form and longform), which is why I wrote this post. (I started doing this because I ran out of footage to edit shortform videos as I was trying to p...

Glad you're working with some of the people I recommended to you, I'm very proud of that SB-1047 documentary team.

I would add to the list Suzy Shepherd who made Writing Doom. I believe she will relatively soon be starting another film. I wrote more about her work here.

For context, you asked me for data for something you were planning (at the time) to publish day-of. There's no way to get the watchtime easily on TikTok (which is why I had to add things up manually on a computer) and I was not on my laptop, so I couldn't do it when you messaged me. You didn't follow up to clarify that watchtime was actually the key metric in your system and that you actually needed that number.

Good to know that the 50 people were 4 Safety people and 46 people who hang at Mox and Taco Tuesday. I understand you're trying to reach the MIT-gr...

- 1) Feel free to use $26k. My main issue was that you didn't ask me for my viewer minutes for TikTok (EDIT: didn't follow up to make sure I give you the viewer minutes for TikTok) and instead used a number that is off by a factor of 10. Please use a correct number in future analysis. For June 15 - Sep 10, that's 4,150,000 minutes, meaning a VM/$ of 160 instead of 18 (details here).

- A) Your screenshots of google sheets say "FLI podcast", but you ran your script on the entire channel. And you say that the budget is $500k. Can you confirm what you're trying to

Agreed about the need to include Suzy Shepherd and Siliconversations.

Before Marcus messaged me, I was in the process of filling in another Google Sheet (link) to measure the impact of content creators (which I sent him), which also had three key criteria (production value, usefulness of audience, accuracy).

I think Suzy & Siliconversations are great examples of effectiveness because:

- I think Suzy did her film for really cheap (less than $20k). Probably if you included her time you'd get a larger amount, but in terms of actual $ spent and the impact it go

Update: after looking at Marcus' weights, I ended up dividing all the intermediary values of Qf I had by 2, so that it matches with Marcus' weights where Cognitive Revolution = 0.5. Dividing by 2 caps the best tiktok-minute to the average Cognitive Revolution minute. Neel was correct to claim that 0.9 was way too high.

===

My model is that most of the viewer minutes come from people who watch the whole thing, and some decent fraction end up following, which means they'll end up engaging more with AI-Safety-related content in the future as I post more.

Looking a...

This comment is answering "TikTok I expect is pretty awful, so 0.1 might be reasonable there". For my previous estimate on the quality of my Youtube long-form stuff, see this comment.

tl;dr: I now estimate the quality of my TikTok content to be Q = 0.75 * 0.45 * 3 = 1
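To make the arithmetic in the tl;dr explicit, here is a minimal sketch of the product it describes. It assumes Q is simply the product of the three factors, and that the factor of 3 is the audience-quality term (Qa); the tl;dr itself does not label it. The function name is made up for illustration.

```python
def quality_factor(qa: float, qf: float, qm: float) -> float:
    """Multiply audience quality (Qa), fidelity (Qf) and alignment (Qm)."""
    return qa * qf * qm


if __name__ == "__main__":
    # TikTok figures from the tl;dr. Treating 3 as Qa is an assumption.
    tiktok_q = quality_factor(qa=3, qf=0.45, qm=0.75)
    print(f"Q(TikTok) = {tiktok_q:.4f}")
```

The product is slightly above 1, which is where the rounded "Q = 1" comes from.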

The Inside View (TikTok) - Alignment = 0.75 & Fidelity = 0.45

To estimate fidelity of message (Qf) and alignment of message (Qm) in a systematic way, I compiled my 10 best-performing TikToks and rated their individual Qf and Qm (see tab called "TikTok Qa & Qf" here, which contains t...

I struggle to imagine Qf 0.9 being reasonable for anything on TikTok. My understanding of TikTok is that most viewers will be idly scrolling through their feed, watch your thing for a bit as part of this endless stream, then continue, and even if they decide to stop for a while and get interested, they still would take long enough to switch out of the endless scrolling mode to not properly engage with large chunks of the video. Is that a correct model, or do you think that eg most of your viewer minutes come from people who stop and engage properly?

Agreed that the quality of audience is definitely higher for my (niche) AI Safety content on Youtube, and I'd expect Q to be higher for (longform) Youtube than Tiktok.

In particular, I estimate Q(The Inside View Youtube) = 2.7, instead of 0.2, with (Qa, Qf, Qm) = (6, 0.45, 1), though I acknowledge that Qm is (by definition) the most subjective.

To make this easier to read & reply to, I'll post my analysis for Q(The Inside View Tiktok) in another comment, which I'll link to when it's up. EDIT: link for TikTok analysis here.

The Inside View (Youtube) - Qa =

...Thank you both for doing this, I appreciate the effort in trying to get some estimates.

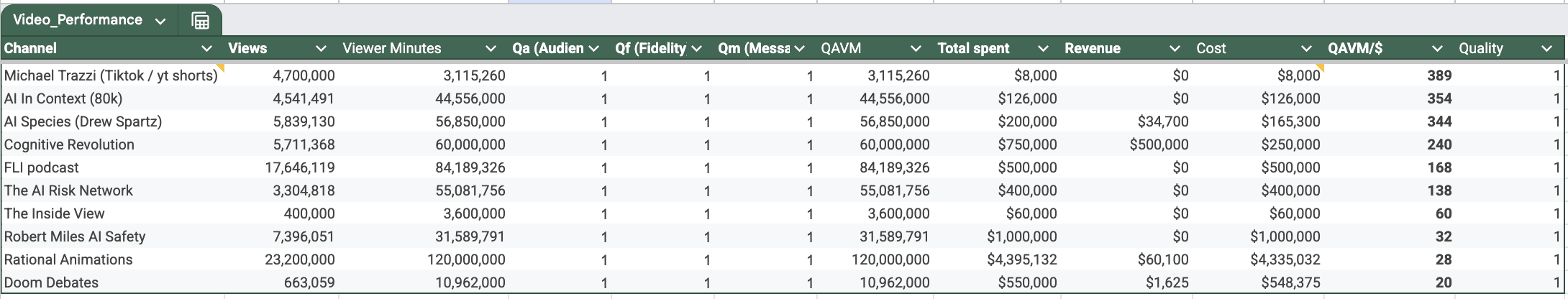

However, I would like to flag that your viewer minute numbers for my short-form content are off by an order of magnitude. And I've done 4 full weeks on the Manifund grant, so it's 4 * $2k = $8k, not $24k.

Plugging these numbers in (google sheets here) I get a QAVM/$ of 389 instead of the 18 you have listed.
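For anyone who wants to redo this kind of calculation, here is a rough sketch of the ratio involved. It assumes QAVM/$ is the quality factor Q times viewer minutes, divided by dollars spent; that definition and the function name are my assumptions here, not taken from the spreadsheet. The example inputs are the TikTok figures quoted elsewhere in this thread (4,150,000 viewer minutes against a $26k cost), with Q left at 1 so the result is plain VM/$.

```python
def viewer_minutes_per_dollar(viewer_minutes: float, cost_usd: float, q: float = 1.0) -> float:
    """Return (quality-adjusted) viewer minutes per dollar spent.

    q is the quality factor; with q = 1.0 this is plain VM/$.
    """
    if cost_usd <= 0:
        raise ValueError("cost_usd must be positive")
    return q * viewer_minutes / cost_usd


if __name__ == "__main__":
    # Grant cost as stated above: 4 weeks at $2k per week.
    grant_cost = 4 * 2_000
    print(f"Grant cost: ${grant_cost}")

    # Figures quoted elsewhere in the thread: 4,150,000 minutes, $26k.
    vm_per_dollar = viewer_minutes_per_dollar(4_150_000, 26_000)
    print(f"VM/$ = {vm_per_dollar:.0f}")
```

Because the cost sits in the denominator, the $8k vs. $24k question changes the result by a factor of 3 on its own, independently of any correction to the viewer minutes.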

Other data corrections:

- You say that the FLI podcast has 17M views and cost $500k. However, 17M is the number of views on the overall FLI channel. If yo

Hi Michael, sorry for coming back a bit late. I was flying out of SF

For costs, I'm going to stand strongly by my number here. In fact, I think it should be $26k. I treated everyone the same and counted the value of their time, at their suggested rate, for the amount of time they were doing their work. This affected everyone, and I think it is a much more accurate way to measure things. This affected AI Species and Doom Debates quite severely as well, more so than you as well as others. I briefly touched on this in the post, but I'll expand here. The goal o

Relatedly, recently in UK Politics x AI Safety:

- ControlAI released a statement signed by 60 UK parliamentarians: https://controlai.com/statement

- Same happened through PauseAI: https://pauseai.info/dear-sir-demis-2025 (see TIME)

On a related note, has someone looked into the cost-effectiveness of funding new podcasts vs. convincing mainstream ones to produce more impactful content, similarly to how OpenPhil funded Kurzgesagt?

For instance, has anyone tried to convince people like Lex Fridman, who has already interviewed MacAskill and Bostrom, to interview more EA-aligned speakers?

My current analysis gives roughly an audience of 1-10M per episode for Lex, and I'd expect that something around $20-100k per episode would be enough of an incentive.

In comparison, when giving $10k to start...

I'm flattered for The Inside View to be included here among so many great podcasts. This is an amazing opportunity and I am excited to see more podcasts emerge, especially video ones.

If anyone is on the edge of starting and would like to hear some of the hard lessons I've learned and other hot takes I have on podcasting or video, feel free to message me at michael.trazzi at gmail or (better) comment here.

Agreed!

As Zach pointed out below, there might be some mistakes left in the precise numbers, so for any quantitative analysis I would suggest reading AI Impacts' write-up: https://aiimpacts.org/what-do-ml-researchers-think-about-ai-in-2022/

Thanks for the quotes and the positive feedback on the interview/series!

Re Gato: we also mention it as a reason why training across multiple domains does not increase performance in narrow domains, so there is also evidence against generality (in the sense of generality being useful). From the transcript:

..."And there’s been some funny work that shows that it can even transfer to some out-of-domain stuff a bit, but there hasn’t been any convincing demonstration that it transfers to anything you want. And in fact, I think that the recent paper… The Gato paper

I think he would agree with "we wouldn't have GPT-3 from an economical perspective". I am not sure whether he would agree with a theoretical impossibility. From the transcript:

"Because a lot of the current models are based on diffusion stuff, not just bigger transformers. If you didn’t have diffusion models [and] you didn’t have transformers, both of which were invented in the last five years, you wouldn’t have GPT-3 or DALL-E. And so I think it’s silly to say that scale was the only thing that was necessary because that’s just clearly not true."

To b...

Thanks for the reminder on the open-minded epistemics ideal of the movement. To clarify, I do spend a lot of time reading posts from people who are concerned about AI Alignment, and talking to multiple "skeptics" made me realize things that I had not properly considered before, learning where AI Alignment arguments might be wrong or simply overconfident.

(FWIW I did not feel any pushback in suggesting that skeptics might be right on the EAF, and, to be clear, that was not my intention. The goal was simply to showcase a methodology to facilitate a constructive dialogue between the Machine Learning and AI Alignment community.)

Thanks for the thoughtful post. (Cross-posting a comment I made on Nick's recent post.)

My understanding is that people were mostly speculating on the EAF about the rejection rate for the FTX future fund's grants and distribution of $ per grantee. What might have caused the propagation of "free-spending" EA stories:

- the selection bias at EAG(X) conferences where there was a high % of grantees.

- the fact that the FTX future fund did not (afaik) release their rejection rate publicly

- other grants made by other orgs happening concurrently (eg. CEA)

This post ...

My understanding is that people were mostly speculating on the EAF about the rejection rate and distribution of $ per grantee. What might have caused the propagation of "free-spending" EA stories:

- the selection bias at EAG(X) conferences where there was a high % of grantees.

- the fact that FTX did not (afaik) release their rejection rate publicly

- other grants made by other orgs happening concurrently (eg. CEA)

I found this sentence in Will's recent post "For example, Future Fund is trying to scale up its giving rapidly, but in the recent open call it reje...

To make that question more precise, we're trying to estimate xrisk_{counterfactual world without those people} - xrisk_{our world}, with xrisk_{our world}~1/6 if we stick to The Precipice's estimate.

Let's assume that the x-risk research community completely vanishes right now (including the past outputs, and all the research it would have created). It's hard to quantify, but I would personally be at least twice as worried about AI risk as I am right now (I am unsure about how much it would affect nuclear/climate change/natural disasters/engineered ...

Wouldn't investors fire Dario and replace him with someone who would maximize profits?

Note: My understanding is that, as of November 2024, the Long-Term Benefit Trust controls 3 of 5 board seats, so investors alone cannot fire him. However, a supermajority of voting shareholders could potentially amend the Trust structure first, then replace the board and fire him.