All of peterhartree's Comments + Replies

I can imagine "co-eff" being sticky.

I agree that "co-give" is nice but it's sufficiently harder to say that I predict it won't catch on.

Notably, in that video, Garry is quite careful and deliberate with his phrasing. It doesn't come across as a case of him doing excited offhand hype. Paul Buchheit nods as he makes the claim.

Thanks for the post. Of your caveats, I'd guess 4(d) is the most important:

Generative AI is very rapidly progressing. It seems plausible that technologies good enough to move the needle on company valuations were only developed, say, six months ago, in which case it would be too early to see any results.

Personally, things have felt very different since o3 (April) and, for coding, the Claude 4 series (May).

Anthropic's run-rate revenue went from $1B in January to $5B in August.

This post misquotes Garry Tan. You wrote (my emphasis):

Y Combinator CEO Garry Tan has publicly stated that recent YC batches are the fastest growing in their history because of generative AI.

But Garry's claim was only about the winter 2025 batch. From the passage you cited:

The winter 2025 batch of YC companies in aggregate grew 10% per week, he said.

“It’s not just the number one or two companies -- the whole batch is growing 10% week on week”

On "Standards": I often link people to Jason Crawford's blog post "Organisational metabolism: Why anything that can be a for profit should be a for profit."

A long overdue thank you for this comment.

I looked into this, and there is in fact some evidence that less expressive voices are easier to understand at high speed. This factor influenced our decision to stick with Ryan for now.

The results of the listener survey were equivocal. Listener preferences varied widely, with Ryan (our existing voice) and Echo coming out tied and slightly on top, but not with statistical significance. [1]

Given that, we plan to stick with Ryan for now. Two considerations that influence this call, independently of the survey result:

- There's a habituation effect, such that switching costs for existing hardcore listeners are significant.

- There's some evidence that more expressive voices are less comprehensible at high listening speeds. Ryan is less expressive.

This is for similar reasons to why I want both of Twitter's "home" and "following" views.

I want "People you follow" to include everything, in chronological order.

If quick takes are included, I guess the "People you follow" content could dominate the items that currently make the "New and upvoted" section.

I wish it was easier to find the best social media discussions of forum posts and quick takes.

E.g. Ozzie flagged some good Facebook discussion of a recent quick take [1], but people rarely do that.

Brainstorm on minimal things you could do:

a. Link to Twitter search results (example). (Ideally you might show the best Twitter discussions in the Forum UI, but I'm not sure if their API terms would permit that.)

b. Encourage authors to link to discussion on other platforms, and have a special place in the UI that makes such links easy to find.

I actually can't

I ~never check the "Quick takes" section even though I bet it has some great stuff.

The reason is that it's too hard to find takes that are on a topic I care about, and/or have an angle that seems interesting.

I think I'd be much more likely to read quick takes if the takes had titles, and/or if I could filter them by topic tags.

Maybe you can LLM-generate default title and tag suggestions for the author when they're about to post?

I wish the notifications icon at the top right had a separate indicator for replies to my posts and my comments.

At the moment those get drowned out by notifications about posts and comments from people I follow (which are so numerous that I've been trained to rarely check the notification inbox, because it's such a rabbit hole...), so I often see comments weeks or months too late.

I'd also like to be able to get email notifications for just replies to my posts and replies to my comments.

Great list, thanks!

Nonlinear Library narrations are superseded by the official EA Forum and LessWrong podcasts:

1. My current process

I check a couple of sources most days, at random times during the afternoon or evening. I usually do this on my phone, during breaks or when I'm otherwise AFK. My phone and laptop are configured to block most of these sources during the morning (LeechBlock and AppBlock).

When I find something I want to engage with at length, I usually put it into my "Reading inbox" note in Obsidian, or into my weekly todo list if it's above the bar.

I check my reading inbox on evenings and weekends, and also during "open" blocks that I sometimes schedule...

Thanks for your feedback.

For now, we think our current voice model (provided by Azure) is the best available option all things considered. There are important considerations in addition to human-like delivery (e.g. cost, speed, reliability, fine-grained control).

I'm quite surprised that an option that's much better overall hasn't emerged before now. My guess is that something will show up later in 2024. When it does, we will migrate.

There are good email newsletters that aren't reliably read.

Readit.bot turns any newsletter into a personal podcast feed.

TYPE III AUDIO works with authors and orgs to make podcast feeds of their newsletters—currently Zvi, CAIS, ChinAI and FLI EU AI Act, but we may do a bunch more soon.

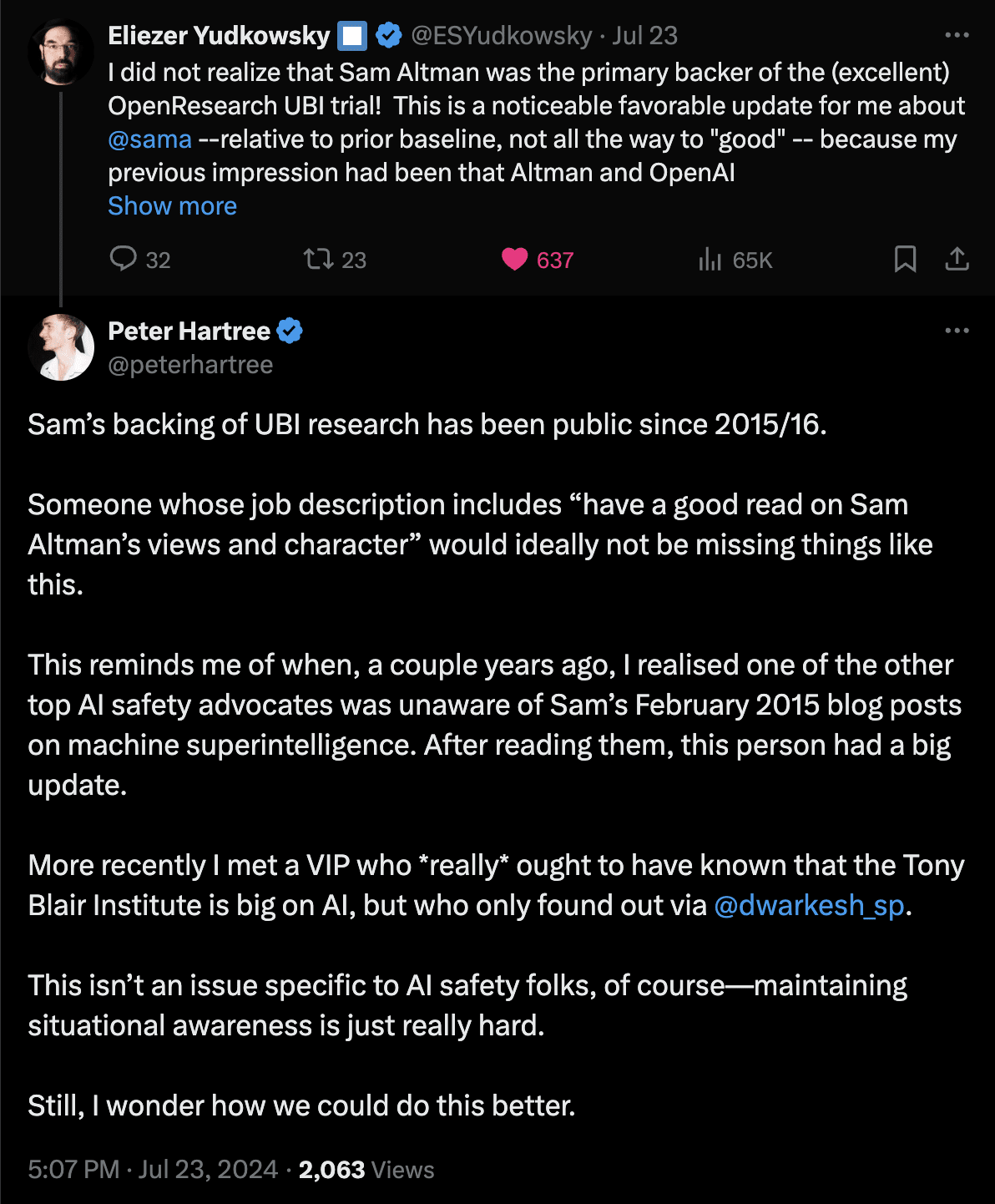

I think that "awareness of important simple facts" is a surprisingly big problem.

Over the years, I've had many experiences of "wow, I would have expected person X to know about important fact Y, but they didn't".

The issue came to mind again last week:

My sense is that many people, including very influential folks, could systematically—and efficiently—improve their awareness of "simple important facts".

There may be quick wins here. For example, there are existing tools that aren't widely used (e.g. Twitter lists; Tweetdeck). There are good email newsletters ...

Bret Taylor and Larry Summers (members of the current OpenAI board) have responded to Helen Toner and Tasha McCauley in The Economist.

The key passages:

...Helen Toner and Tasha McCauley, who left the board of Openai after its decision to reverse course on replacing Sam Altman, the CEO, last November, have offered comments on the regulation of artificial intelligence (AI) and events at OpenAI in a By Invitation piece in The Economist.

We do not accept the claims made by Ms Toner and Ms McCauley regarding events at OpenAI. Upon being asked by the former board (


Thanks for the context!

Obvious flag that this still seems very sketchy. "the easiest way to do that due to our structure was to put it in Sam's name"? Given all the red flags that this drew, both publicly and within the board, it seems hard for me to believe that this was done solely "to make things go quickly and smoothly."

I remember Sam Bankman-Fried used a similar argument around registering Alameda - in that case, I believe it later led to him having a lot more power because of it.

For context:

- OpenAI claims that while Sam owned the OpenAI Startup Fund, there was “no personal investment or financial interest from Sam”.

- In February 2024, OpenAI said: “We wanted to get started quickly and the easiest way to do that due to our structure was to put it in Sam's name. We have always intended for this to be temporary.”

- In April 2024 it was announced that Sam no longer owns the fund.

Sam didn't inform the board that he owned the OpenAI Startup Fund, even though he constantly was claiming to be an independent board member with no financial interest in the company.

Sam has publicly said he has no equity in OpenAI. I've not been able to find public quotes where Sam says he has no financial interest in OpenAI (does anyone have a link?).

From the interview:

When ChatGPT came out, November 2022, the board was not informed in advance about that. We learned about ChatGPT on Twitter.

Several sources have suggested that the ChatGPT release was not expected to be a big deal. Internally, ChatGPT was framed as a “low-key research preview”. From The Atlantic:

...The company pressed forward and launched ChatGPT on November 30. It was such a low-key event that many employees who weren’t directly involved, including those in safety functions, didn’t even realize it had happened. Some of those who were aware

Andrew Mayne points out that “the base model for ChatGPT (GPT 3.5) had been publicly available via the API since March 2022”.

What would be more helpful for me is knowing what else was discussed in board meetings. Even if ChatGPT was not expected to be a big deal, if they were (to take a hyperbolic example) discussing whether to have a coffee machine at the office, I think not mentioning ChatGPT would be striking. On the other hand, if they only met once a year and only discussed, e.g., whether they were financially viable, then not mentioning ChatGPT makes more sense. And maybe even this is not enough - it would also be concerning if some board members wanted more info, ...

You wrote:

[OpenAI do] very little public discussion of concrete/specific large-scale risks of their products and the corresponding risk-mitigation efforts (outside of things like short-term malicious use by bad API actors, where they are doing better work).

This doesn't match my impression.

For example, Altman signed the CAIS AI Safety Statement, which reads:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

The “Preparedness” page—linked from the top navigation...

OpenAI's “Planning for AGI & Beyond” blog post includes the following:

...As our systems get closer to AGI, we are becoming increasingly cautious with the creation and deployment of our models. Our decisions will require much more caution than society usually applies to new technologies, and more caution than many users would like. Some people in the AI field think the risks of AGI (and successor systems) are fictitious; we would be delighted if they turn out to be right, but we are going to operate as if these risks are existential.

At some point, the ba

I have bad feelings about a lot of this.

The "Planning for AGI & Beyond" doc seems to me to be heavily inspired by a few other people at OpenAI at the time, mainly the safety team, and I'm nervous those people have less influence now.

At the bottom, it says:

Thanks to Brian Chesky, Paul Christiano, Jack Clark, Holden Karnofsky, Tasha McCauley, Nate Soares, Kevin Scott, Brad Smith, Helen Toner, Allan Dafoe, and the OpenAI team for reviewing drafts of this.

Since then, Tasha and Helen have been fired off the board, and I'm guessing relations have soured with others listed.

On short stories, two notable examples: Narrative Ark by Richard Ngo and the regular "Tech Tales" section at the end of Jack Clark's Import AI newsletter.

I read the paper, then asked Claude 3 to summarise. I endorse the following summary as accurate:

...The key argument in this paper is that Buddhism is not necessarily committed to pessimistic views about the value of unawakened human lives. Specifically, the author argues against two possible pessimistic Buddhist positions:

- The Pessimistic Assumption - the view that any mental state characterized by dukkha (dissatisfaction, unease, suffering) is on balance bad.

- The Pessimistic Conclusion - the view that over the course of an unawakened life, dukkha will al

Thanks for this.

I take the central claim to be:

Even if an experience contains some element of dukkha, it can still be good overall if its positive features outweigh the negative ones. The mere presence of dukkha does not make an experience bad on balance.

I agree with this, and also agree that it's often overlooked.

Perhaps it’s just the case that the process of moral reflection tends to cause convergence among minds from a range of starting points, via something like social logic plus shared evolutionary underpinnings.

Yes. And there are many cases where evolution has indeed converged on solutions to other problems[1].

[1] Some examples:

(Copy-pasted from Claude 3 Opus. They pass my eyeball fact-check.)

- Wings: Birds, bats, and insects have all independently evolved wings for flight, despite having very different ancestry.

- Eyes: Complex camera-like eyes have evolved inde

My own attraction to a bucket approach (in the sense of (1) above) is motivated by a combination of:

(a) rejecting the demand for commensurability across buckets.

(b) making a bet on plausible deontic constraints, e.g. a duty to prioritise members of the community of which you are a part.

(c) avoiding impractical zig-zagging when best-guess assumptions change.

Insofar as I'm more into philosophical pragmatism than foundationalism, I'm more inclined to see a messy collection of reasons like these as philosophically adequate.

I think there are two things to justify here:

1. The commitment to a GHW bucket, where that commitment involves "we want to allocate roughly X% of our resources to this".

2. The particular interventions we fund within the GHW resource bucket.

I think the justification for (1) is going to look very different to the justification for (2).

I'm not sure which one you're addressing; it sounds more like (2) than (1).

Some examples of the kinds of thing I might share, were there an obvious place to do so:

I hesitate to post things like this, because “short, practical advice” posts aren't something I often see on the Forum.

I'm not sure if this is the kind of thing that's worth encouraging as a top-level post.

In general I would like to read more posts like this from EA Forum users, but perhaps not as part of the front page.

Thanks for this. I'd be keen to see a longer list of the interesting for-profits in this space.

Biobot Analytics (wastewater monitoring) are the only for-profit on the 80,000 Hours job board list.

Hi Jaap—if you'd like to give feedback on narrations, the best option is to email me directly. If you'd like to contact the EA Forum team for other reasons, see the contact page.