Simon Stewart


> Most donors say they want to "help people". If that's true, they should try to distribute their resources to help people as much as possible.

This is not a valid argument. The conclusion doesn't follow from the premise unless you assume that "help people" means "help people as much as possible". But it doesn't.

Notwithstanding, let's say "I want to help people as much as possible." I still need a theory of change: help people in what way?

You could try "help as many people as possible avoid unnecessary death". Then we are in the scenario you describe, but we have already excluded investing in the arts by how we have defined our goal.

> There is only one best charity: the one that helps the most people the greatest amount per dollar.

If everyone gave to the "best charity", you would pass the point of diminishing returns and likely hit the point of negative returns.

Therefore, having everyone seek the "best charity" to give to is not the best approach to capital allocation.

Therefore, some people should not be following this strategy.
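To make the diminishing-returns point concrete, here is a toy model. It is only a sketch under made-up assumptions: each charity's total impact from `x` dollars is assumed to be `a*x - b*x**2`, so marginal impact falls as funding grows and turns negative past `a / (2*b)`. The function names and all coefficients are hypothetical and illustrative, not estimates for any real charity.

```python
# Toy model (hypothetical): total impact of giving x dollars is a*x - b*x**2.
# Marginal impact a - 2*b*x shrinks with funding and goes negative once
# x > a / (2*b). All coefficients below are invented for illustration.

def impact(x: float, a: float, b: float) -> float:
    """Total impact of giving x dollars to a charity with parameters a, b."""
    return a * x - b * x * x

def total_impact(to_best: float, budget: float) -> float:
    """Impact when `to_best` goes to the 'best' charity and the rest to a runner-up."""
    best = impact(to_best, a=10.0, b=0.01)               # higher impact per dollar at first
    runner_up = impact(budget - to_best, a=6.0, b=0.01)  # lower impact per dollar at first
    return best + runner_up

budget = 1000.0

# Everyone gives everything to the "best" charity.
all_in = total_impact(budget, budget)

# Search whole-dollar splits for the allocation with the highest total impact.
best_split = max(range(int(budget) + 1), key=lambda d: total_impact(d, budget))
```

With these invented numbers, putting the whole budget into the "best" charity yields a total impact of 0, because every dollar past 500 has negative marginal impact. Giving 600 to the best charity and 400 to the runner-up yields 3200. The exact figures mean nothing; the shape of the curve is the point.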

> If a high-powered lawyer who makes $1,000 an hour chooses to take an hour off to help clean up litter on the beach, he's wasted the opportunity to work overtime that day, make $1,000, donate to a charity that will hire a hundred poor people for $10/hour to clean up litter, and end up with a hundred times more litter removed.

This assumes it is ethically responsible to perpetuate a system where a person can earn $1000 per hour and others make $10 per hour, and that being a high-powered lawyer is otherwise morally neutral.

I'm not sure I agree with the conclusion, because people with dark triad personalities may be better than average at virtue signalling and demonstrating adherence to norms.

I think there should probably be a focus on principles, standards and rules that a person can easily recall in a chaotic situation (e.g. put on your own mask before helping others), and that these should be designed with limiting downside risk and the risk of ruin in mind.

My intuition is that the rule "disfavour people who show signs of being low integrity" is a bad one, as:

- it relies on the ability to compare a person to an idealised person, rather than behaviour to a rule, and the former is much more difficult to reason about

- it moves the problem elsewhere rather than solving it

- it's likely to reduce diversity and upside potential of the community

- it doesn't mitigate the risk when a bad actor passes the filter

I'd favour starting from the premise that everyone has the potential to act without integrity, and trying to design systems that mitigate this risk.

Some possible worlds:

| | SBF was aligned with EA | SBF wasn't aligned with EA |
|---|---|---|
| SBF knew this | EA community standards permit harmful behaviour. | SBF violated EA community standards. |
| SBF didn't know this | EA community standards are unclear. | EA community standards are unclear. |

Some possible stances in these worlds:

| Possible world | Possible stances | | |
|---|---|---|---|
| EA community standards permit harmful behaviour | 1a. This is intolerable. Adopt a maxim like "first, do no harm" | 1b. This is tolerable. Adopt a maxim like "to make an omelette you'll have to break a few eggs" | 1c. This is desirable. Adopt a maxim like "blood for the blood god, skulls for the skull throne" |
| SBF violated EA community standards | 2a. This is intolerable. Standards adherence should be prioritised above income-generation. | 2b. This is tolerable. Standards violations should be permitted on a risk-adjusted basis. | 2c. This is desirable. Standards violations should be encouraged to foster anti-fragility. |
| EA community standards are unclear | 3a. This is intolerable. Clarity must be improved as a matter of urgency. | 3b. This is tolerable. Improved clarity would be nice but it's not a priority. | 3c. This is desirable. In the midst of chaos there is also opportunity. |

I'm a relative outsider and I don't know which world the community thinks it is in, or which stance it is adopting in that world.

Some hypotheses:

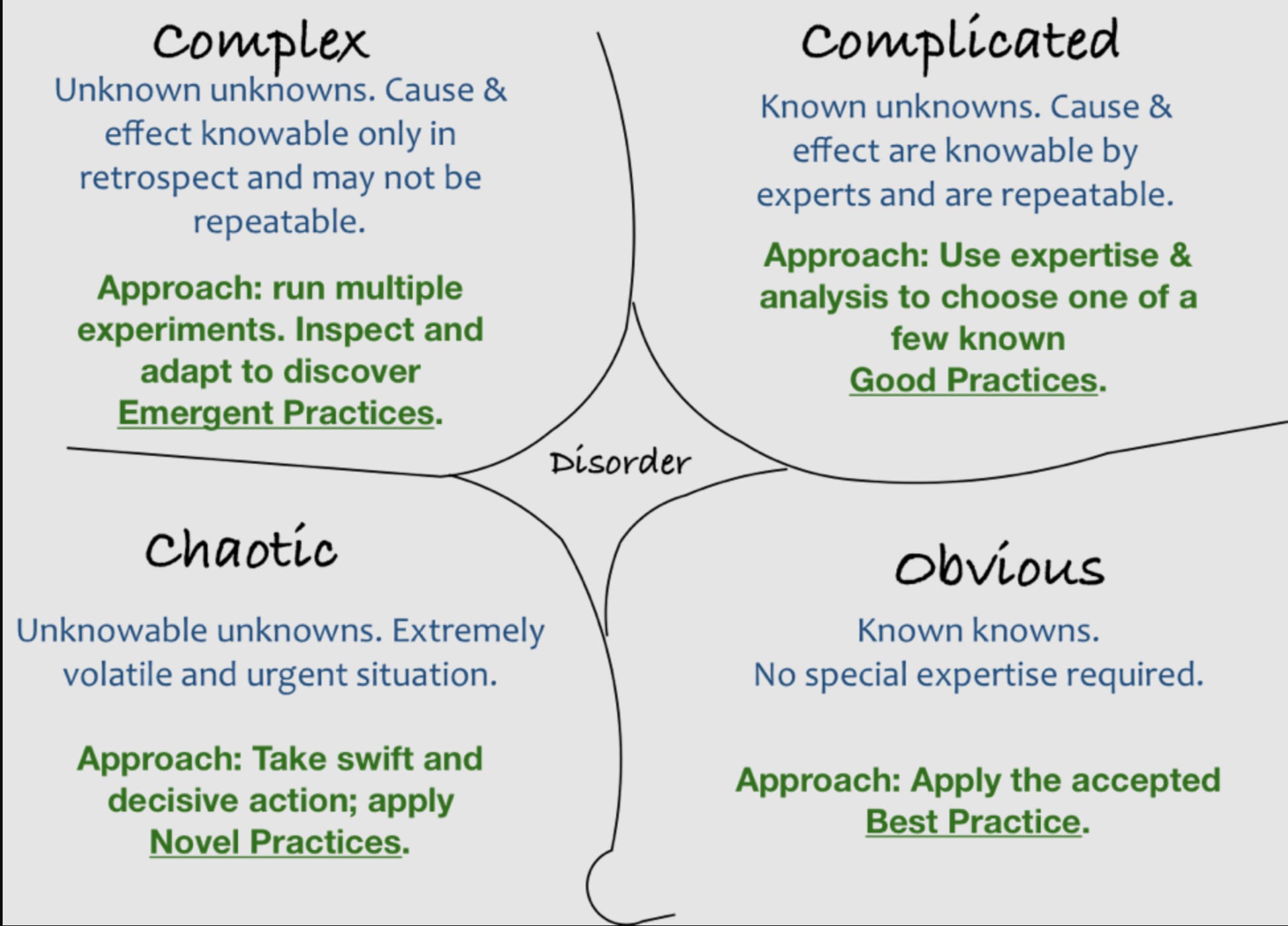

1. When trying to guide altruists, it matters what problem domain they are operating in.

   - In the obvious domain, solutions are known. If you have the aptitude, be a doctor rather than a small-time pimp.

   - In a complicated domain, solutions are found through analysis. The EA community seems analytically minded, so it may have a bias towards characterising complex and chaotic problems as complicated. This is dangerous because the value of iteration and incrementalism is low in the complicated domain but very high in the complex domain.

   - In a complex domain, solutions are found through experimentation. There should be a strong preference for the ability to run more experiments.

   - In a chaotic domain, solutions are found by acting before your environment destroys you. There should be a strong preference for establishing easy-to-follow heuristics that are unlikely to introduce catastrophic risk across a wide range of environments.

2. Consequentialist ethics are inherently future-oriented. The future contains unknown unknowns and unknowable unknowns, so any system of consequentialist ethics is always working in the complex and chaotic domains. Consequentialism proceeds by analytical reasoning (even if some of that reasoning is probabilistic), and analytical reasoning is not applicable to the complex and chaotic domains, so consequentialism is not a useful framework.

3. What actually happens when you think things through from a consequentialist perspective is that you imagine possible futures and identify ways to get there, which is an imaginative process, not an analytical one.

4. Better frameworks would be present-oriented, focusing on needs and how to meet them sustainably. Virtue ethics and deontological ethics are present-oriented ethical frameworks that may have some value. Orienting towards establishing services and infrastructure at the right scale and level of resilience, rather than towards outputs (e.g. lives saved), would be more fruitful over the long term.

KYC/AML, international payments and fraud avoidance are expensive and difficult, so the market for unconditional cash transfer (UCT) platforms is likely a natural duopoly or small oligopoly.

The Red Cross have committed to delivering 50% of their humanitarian assistance with cash or vouchers by 2025.