Un Wobbly Panda

Comments (12)

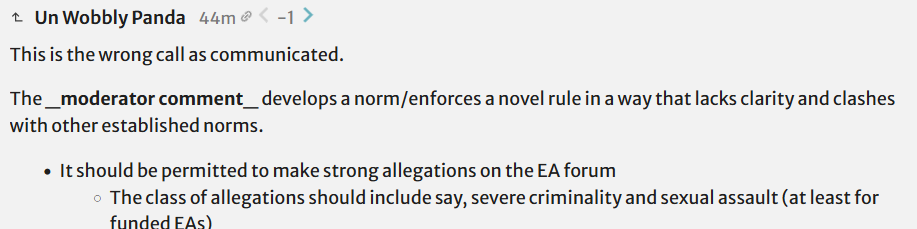

This is the wrong call as communicated.

The _moderator comment_ develops a norm/enforces a novel rule in a way that lacks clarity and clashes with other established norms.

- It should be permitted to make strong allegations on the EA forum

- The class of allegations should include, say, severe criminality and sexual assault (at least for funded EAs)

- EA discourse was supposed to be a strong filter for truth/an environment for effectiveness to rise to the top

- You are reaching into and editing public discourse with little clarity or purpose

- If you want to forbid Mohn's demand, at least explain it better, ideally as a consequence of some broad, clear principle, not merely because they are taking advantage of a mechanic

- Forum banning and other mechanics/design should take into account the external knowledge induced by the mechanics/design

- This includes downstream information implied by moderator decisions like banning.

- You are responsible for what the banning system or the forum mechanics imply and shouldn't rely on reaching into the world and editing public spaces for forum aims

- To some degree, "public spaces" includes the EA forum, because the EA forum is a monopoly and that carries obligations

- Ideally, the harm/issue here should be self-limiting, and the forum should be allowed to express itself

- E.g., if the accused XXXX doesn't care, ignores Mohn, and others view the demand that XXXX present themselves as silly or unreasonable, Mohn's demand has no power

- We should prefer that these norms and their decisions accurately reflect the substance/seriousness/truth of the accusation (and not a moderator's dislike of how someone is using forum mechanics)

Suggestions:

- What you should do is make clear this is your rumination/thought or a future norm to be enforced

- E.g. Oliver Habryka's style in many of his comments, which does not use moderator authority explicitly

- You have plenty of soft power in doing so

- If you think there is a stronger reason to forbid Mohn's accusation, and that moderator power should be used, you should communicate that reason

Also:

- I think that if you believe an innocent person is seriously wronged and the situation is serious, you can just exonerate them: "Note that XXX actually wasn't banned for this. I normally don't say this, but this is a one-time exception because <reason YYYY>"

(I support strong moderation, including broad, even arbitrary-seeming actions. I'm critiquing the comment on the basis of the arguments above, not because the rule isn't in a rule book.)

I think some statements or ideas here might be overly divisive or a little simplistic.

- Ignoring emotional responses and optics in favour of pure open dialogue. Feels very New Atheist.

- The long pieces of independent research that are extremely difficult to independently verify and which often defer to other pieces of difficult-to-verify independent research.

- Heavy use of expected value calculations rather than emphasising the uncertainty and cluelessness around a lot of our numbers.

- The more-karma-more-votes system that encourages an echo chamber.

For counterpoints, if you look at respected communities of, say, medical professionals (surgeons), as well as top athletes, the military, or lawyers, they effectively do all of the things you criticize. All of these communities have complex systems of beliefs, use jargon, have in-groups, and have imperfect levels of intellectual honesty. Decisions and judgements are often made opaquely by senior leaders, to the extent that a formal "expected value calculation" would be transparent by comparison. Despite all this, these groups are respected, trusted and often very effective in their domains.

Similarly, in EA, authority needs to be used in order to make progress. EA can't avoid internal authority, as the history of EA and of other movements shows. Instead, we have to exercise it with intention; that is, there need to be some senior people, who are correct and virtuous, and who are trusted.

The problem is that LessWrong has, in the past, monopolized implicit positions of authority, using a particular style of discourse and rhetoric that allows it to mask what it is doing. As it does this, the fact that it is a distinct entity, actively seeking resources from EA, is mostly ignored.

Getting to the object level on LessWrong: what could be great is a true focus on virtue/“rationality”/self-improvement. In theory, LessWrong and rationality are fantastic, and there should be more of this. The problem is that without true ability, bad implementation and co-option occur. For example, one person, with narcissistic personality disorder, overbearingly dominated discourse on LessWrong and appropriated EA identity elsewhere. Only recently, when it has become egregiously clear that this person has negative value, has the community done much to counter him. This is both a moral mistake and a strong smell that something is wrong. The fact that this person and their (well-telegraphed) issues persisted casts doubt on LessWrong's intellectual aesthetics, as well as on its choice to steer numerous programs to one cause (which it decided on long ago).

On the bigger question, “RE: fixing EA”: some people are working on this, but the squalor and instability of the EA ecosystem are hampering this work and shielding the ecosystem from reform. For one example, someone I know was invested in an FTX Future Fund worldview submission, until the contest was cancelled in November for almost the worst reason possible. In the aftermath, this person needs to go and hand-hold a long list of computer scientists and other leaders. That is, they need to defend the very AI-safety worldview caused by this disaster, after their project was destroyed by the same disaster. While this is going on, there are many side considerations. For example, despite desperately wanting to communicate with and correct EA, they need to worry about any public communication being seized on and used by “Zoe Cremer”-style critiques, which have been enormously empowered. These critiques are misplaced at best, but writing about why “democracy” and “voting” are bad looks ridiculous, given the issues in the paragraph above.

The solution space is small, and the people who think they can solve this are going to have visible actions and personas that look different from those of other people.

Thank you for this useful content and for explaining your beliefs.

I don't follow Timnit closely, but I'm fairly unconvinced by much of what I think you're referring to RE: "Timnit Gebru-style yelling / sneering", and I don't want to give the impression that my uncertainties are strongly influenced by this, or by AI-safety community pushback to those kinds of sneering.

My comment describes a dynamic that is upstream of, and produces, the information environment you are in. This produces your "skepticism" or "uncertainty". To expand on this: without this dynamic, the facts and truth would be clearer, and you would not be uncertain or feel the need to update your beliefs in response to a forum post.

My comment is not implying that you are influenced by "Gebru-style" content directly. It is implying something opposite, or orthogonal. The fact that you felt it necessary to distance yourself from Gebru several times in your comment, essentially because a comment mentioned her name, itself makes this very point.

I don't really know what you are referring to RE: folks who share "the same skepticism" (but some more negative version), but I'd be hesitant to agree that I share these views that you are attributing to me, since I don't know what their views are.

Yes, I affirm that "skepticism" and "uncertainty" are my words. (I think the nature of this "skepticism" is secondary to the main point in my comment, and the fact that you brought this up is symptomatic of the point I made.)

At the same time, I think the rest of your comment suggests my beliefs/representation of you was fair (e.g. sometimes uncharitably seeing 80KH as a recruitment platform for "AI/LT" would be consistent with skepticism).

In some sense, my comment is not a direct reply to you (you are even mentioned in the third person). I'm OK with this, or even find the resulting response desirable, and it may have been hard to achieve in any other way.

Hi Stan,

This is great! Some questions:

- How did you get the data to do this research?

- Do you have access to a sample of Twitter (e.g., the "Twitter firehose"), or know people who do?

- What are your background and interests in further research? Do you have a specific vision or project in mind?

If funded, would you be interested in doing a broader, impartial study of EA and Twitter discourse?

I'm missing some important object-level knowledge, so it's nice to see this spelled out more explicitly by someone with more exposure to the relevant community. Hope you get some engagement!

I know several people from outside EA (Ivy League, Ex-FANG, work in ML, startup, Bay Area) and they share the same "skepticism" (in quotes because it's not the right word, their view is more negative).

I suspect one aspect of the problem is the sort of "Timnit Gebru"-style yelling and also sneering, often from the leftist community that is opposed to EA more broadly (but much of this leftist sneering was nucleated by the Bay Area communities).

This gives proponents of AI safety an easy target, funneling online discourse into a cul-de-sac of tribalism. I suspect this dynamic is deliberately cultivated on both sides, a system ultimately supported by a lot of crypto/tech wealth. This leads to where we are today, where someone like Bruce (not to mention many young people) gets confused.

(Don't have a lot of time, quickly typing and very quickly skimming this post and comments, might have missed things.)

getting officially censored is annoying

The concerns in this comment are true. This can be overcome, but doing so requires a lot of capability in many ways and is currently extremely hard.

"Getting censored" is much more than just annoying and could affect a lot of other people and projects.

There is a lot of context here and it's not easy to explain this. The comments and post, in some ways, show the limits of projects/activity from online discourse and social media.

Getting to one object level issue:

If what happened was that Max Tegmark or FLI gets many dubious grant applications, and this particular application made it a few steps through FLI's processes before it was caught, expo.se's story and the negative response you object to on the EA forum would be bad, destructive and false. If this was what happened, it would absolutely deserve your disapproval and alarm.

I don't think this is true. What we know is:

- An established (though hostile) newspaper gave an account with actual quotes from Tegmark that contradict his apparent actions

- The bespoke funding letter, signed by Tegmark, explicitly promised funding and stated that FLI had "approved a grant", conditional on registration of the charity

- The hiring of the lawyer by Tegmark

When Tegmark edited his comment to add more content, I was surprised by how positive the reception of this edit was, given that it simply disavowed funding extremist groups.

I'm further surprised by the reaction and changing sentiment on the forum in response to this post, which simply presents an exonerating story. This story is directly contradicted by the signed statement in the letter itself.

Contrary to the top-level post, it is false that it is standard practice to hand out signed declarations of financial support, with wording like "approved a grant", while substantial vetting remains. Also, it's extremely unusual for any non-profit to hire a lawyer to explain that a prospective grantee failed vetting in the application process. We also haven't seen any evidence that FLI actually communicated a rejection. Expo.se seems to have a positive record; even accepting the aesthetic here that newspapers or journalists are untrustworthy, it's costly for an outlet to outright lie or misrepresent facts.

There are other issues with Tegmark's and FLI's statements (e.g., deflections about the lack of direct financial benefit to his brother, and not addressing the material support the letter provided for registration, or the reasonable suspicion that this was a ploy to produce the letter).

There is much more that is problematic underpinning this. If I had more time, I would start a long thread explaining how funding and family relationships could interact really badly in EA/longtermism for several reasons, and another about Tegmark's insertions into geopolitical issues, which are clumsy at best.

Another comment said the EA forum reaction contributed to actual harm to Tegmark/FLI by amplifying the false narrative. A look at Twitter, or at how the story has continued and been picked up by Vice, suggests to me that this isn't true. Unfortunately, I think the opposite is true.

I'm mostly annoyed by the downvote because it makes the content invisible; I don't mind losing a lot of "karma" or being proven wrong or disagreed with.

As an aside, I thought you, Richard Mohn, were one of the most thoughtful, diligent people on the forum. I thought your post was great. Maybe it was clunky, but in a way I think I understand and empathize with: the way a busy, technically competent person would produce a forum post. There was a lot of substance to it. You were also impressively magnanimous about the abusive action taken against you.

The vibe is sort of like that of Michael Aird (who also commented), but with a different style.