This is a draft amnesty week post. These ideas are unrefined and I just keep changing my mind on them - so I'm posting it for draft amnesty week because it'll never be posted otherwise! My mind-changing is reflected in the strange logical ordering and contradicting conclusions throughout this post.

TL;DR: Cause areas are more like EA subcommunities, and the loose use of the term can sometimes negatively influence the approach of a community. Space governance shouldn’t be seen as a separate goal or problem on its own to solve. Like EA community building, space governance is a cause area that supports most other causes within EA (it is a meta-cause area). And so space governance can't really be compared well to other causes in its current framing. Reframing space governance as a meta-cause area (similar to EA community building) reveals new strategies for this subcommunity that might be more effective (e.g. network building, field building, and wide-ranging support to the EA community).

Note: I think a lot of this post can apply to AI safety too as AI will affect every other cause area. The difference is that AI is an x-risk in and of itself with clear goals to avoid (i.e. making sure AI doesn't kill us all).

A short history of space governance in EA

Tobias created a post about space governance, saying it's important, tractable, and neglected: https://forum.effectivealtruism.org/posts/QkRq6aRA84vv4xsu9/space-governance-is-important-tractable-and-neglected

Space governance was briefly trendy in EA, with the founding of organisations like Space Futures Initiative and Center for Space Governance, and the publishing of an 80K problem profile on space governance.

Coming across space governance in EA is now rarer, but it still exists. The collapse of FTX contributed to the decline, as FTX funded space governance work, e.g. Space Futures Initiative. From what I can tell, a lot of "space governance people" moved to AI safety or s-risks.

There is still a need for space governance within EA though. It is still important, tractable, and neglected.

What is a cause area and is space governance one?

It seems that we sleepwalk into calling things “cause areas” when this should be done very deliberately.

“Cause area” can sometimes be a misleading term. I didn’t know what I actually meant when I said “cause area” until I questioned whether space governance constituted one. Is a “cause area” a goal? A set of goals? A problem to address? An area that you can specialise in? Or is a cause more like a subcommunity of EA?

Why is s-risk one cause area even though it contains a load of different situations we want to avoid, like AI authoritarianism, creating new biospheres, and creating digital minds, while x-risk is separated into cause areas like AI safety, nuclear war, and engineered pandemics? The term “cause area” is used inconsistently. The definition I’m going to use for this post is: “Cause areas constitute groups that have specialised to collaborate on a cluster of opportunities to do good”. That explains well why s-risk is ONE cause area: all the problems in the s-risk cause area require a similar approach to tackling speculative long-term future scenarios.

Some cause areas are opportunities to do good in the world right now by solving a present problem, including animal welfare, global health, and reducing crackpotism. Other cause areas focus on an opportunity to prevent a future problem, like those associated with AI, engineered pandemics, and alien technology. Cause areas can be defined by their approach to a problem:

- Solving a present problem directly: global health and wellbeing, animal welfare

- Solving a future problem directly: AI safety (maybe AI safety is all 3 though, but it's most often framed this way), engineered pandemics

- Solving problems indirectly: EA community building, improving rationality of decision-makers

Cause areas in the category of solving problems indirectly have been referred to as meta-cause areas. I think that people have put space governance in the second category, "solving a future problem directly", when really it belongs in the third. I think space governance is a meta-cause area because it has the potential to support other cause areas more than it solves a particular problem or set of problems. Here are my notes on the main cause areas that space (governance) contributes to:

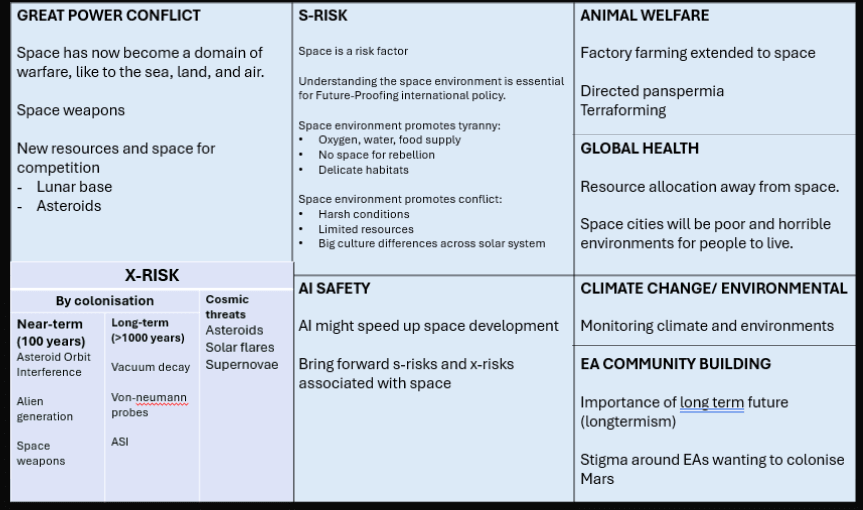

Summary of ways that space contributes to other EA cause areas:

- Great power conflict: space is a domain of warfare. Space weapons should be avoided. Space opens up new avenues for international conflicts e.g. over the moon - see debate between China/Russia and the USA on Artemis Accords and safety zones

- S-risks: This one's obvious. Almost all s-risks are enabled by or made worse by the existence of infinite space.

- Animal welfare: There are long-term bad scenarios for wild animal suffering. Animals taken to space will probably suffer a lot - this problem is neglected but probably not important yet.

- Global Health: Lack of involvement of non-spacefaring nations in space activities will exacerbate global inequality into the future. Earth observation is also trendy: satellites are used to monitor natural disasters, monitor climate change impacts, and support farming.

- x-risks: There are lots of natural x-risks from space i.e. cosmic threats. There are also potential artificial x-risks like space weapons and asteroid orbit alteration.

- Climate change: Monitoring of climate change impacts is done from space. I coauthored a report about space for climate action.

- EA community building: The space community is probably best placed to advocate for longtermism or our "cosmic endowment". I think it's important for EA to have some views around space and human colonisation as they relate to x-risk and longtermism - best not to let Elon control that narrative.

Toby Ord talks about the idea of risk factors, as distinguished from risks. This seems to further complicate the situation. Space is a risk factor for x-risks (asteroid weaponization, conflicts) and s-risks (enables astronomical suffering through its size). Space governance is a solution to problems we want to solve, rather than a problem we want to solve, just as international policy is a solution for great power conflict. Space policy from the perspective of great power conflict is just another area to specialise in if you want to support the great power conflict cause area.

Space governance is more easily compared with international policy than with other cause areas like engineered pandemics or climate change. It's a skill you can develop. International policy can help you address cause areas like engineered pandemics, AI safety, and great power conflict. Space Policy can help you address cause areas like s-risks, great power conflict, and climate change.

I think space governance is defined as a cause area because it requires a different set of skills than those required to address great power conflict, animal welfare, or engineered pandemics. So cause areas shouldn't be seen as a unit of analysis for comparing different causes, as a skillset isn't something that can be analysed by the ITN framework. Orgs like 80,000 Hours don't use "cause areas"; they describe “world’s most pressing problems” and “problem profiles”. “Cause area” appears only once in the 80,000 Hours career guide, which I think is intentional.

But if a cause area is just a particular set of skills, then why isn't Human Resources a cause area? Or graphic design?

A GiveWell definition from back in 2013 elaborates on this idea. But I think this definition ends up allowing anything to be a cause area:

We’d roughly define a “cause” as “a particular set of problems, or opportunities, such that the people and organizations working on them are likely to interact with each other [...] [and require] knowledge of overlapping subjects”.

Maybe it's fine that anything can be a cause area, as long as it's more openly acknowledged or defined that this is NOT a unit of analysis, or a particular problem to solve. It's more like a subcommunity of EA.

If we accept that space governance is a cause area rather than a valuable speciality you can develop like HR, international policy, or graphic design, then the space governance community should take an approach that is probably more similar to the EA community building approach, which has been described as a mix of “Field Building (40%), Network Development (35%), Movement Support (20%), and Promoting the Uptake of Practices by Organizations (5%)”. We can also look at other examples of causes with wide-ranging goals:

- The Civil Rights Movement mainly used Social Movement Support

- The field of Public Health primarily focused on Field Building

- The United Nations emphasized Network Development

- The Fair Trade movement concentrated on Promoting the Uptake of Practices by Organizations

So space governance should focus more on what the UN does: network development. And probably field building too.

Summary

Space governance is better imagined as a “speciality area” rather than a “cause area”, or maybe a meta-cause area. Working on space governance isn’t necessarily about supporting a particular cause; it's a speciality in which you can support many different EA cause areas. It's in the same category as EA community building, so reframing space governance as a meta-cause area (similar to community building) reveals new strategies for this subcommunity that might be more effective (e.g. network building, field building, and wide-ranging support to the EA community).

Well I did say I went further than you!

Agree there are valid space policy considerations (and I could add to that list)[1], but I think lack of tractability is a bigger problem than neglect.[2] Everyone involved in space already knows ASAT weapons are a terrible idea, they're technically banned since 1966, but yes, tests have happened despite that because superpowers gotta superpower. As with many other international relations problems - and space is more important than some of those and less than others - the problem is lack of coordination and enforceability rather than lack of awareness that problems might exist. Similarly Elon's obligation to deorbit Starlink at end of life is linked to SpaceX's FCC licence and parallel ESA regulation exists.[3] If he decides to gut the FCC and disregard it, it won't be from lack of study into congested orbital space or lack of awareness the problem exists.

"Examine environmental effects of deorbiting masses of satellites into the mesosphere and potential implications for future LEO deorbiting policy" would be at the top of my personal list for timeliness and terrestrial impact...

And above all, I am struggling to see the marginal impact being bigger than health, as 80k suggested.

It's also not in SpaceX's interests to jeopardise LEO because they extract more economic value from that space than anyone else...