This is a linkpost (of sorts) for https://github.com/jamesallenevans/AreWeDoomed/issues/

A text-dump of all the memos I've written for BPRO 25800, Are We Doomed? Confronting the End of the World, a class taught at the University of Chicago by Professors James Evans and Daniel Holz. I enrolled in this class in Spring Quarter 2021 and wrote a short (<500 words) comment on each week's topics. I figured it would be useful to collect them here so I can draw on them for future content on existential risks.

April 1 - Introduction, Doomsday clock, Nuclear Annihilation - Memos · Issue #2 · jamesallenevans/AreWeDoomed (github.com)

My question about applying the frame of justice to nuclear disarmament (a frame that left-leaning advocates have used effectively on other issues such as climate change) led me to African Americans Against the Bomb: Nuclear Weapons, Colonialism, and the Black Freedom Movement, a 2015 book on the history of Black activism for nuclear disarmament. The book reminded me of the fourth paragraph of "Why is America getting a new $100 billion nuclear weapon?":

> $100 billion could pay 1.24 million elementary school teacher salaries for a year, provide 2.84 million four-year university scholarships, or cover 3.3 million hospital stays for covid-19 patients. It’s enough to build a massive mechanical wall to protect New York City from sea level rise. It’s enough to get to Mars.

Much as the movement to defund (i.e., shift resources away from) the police centers on the potential for that money to create far more social good, one way of messaging nuclear disarmament might be to outline the costs these weapons already impose on Americans. It is unfortunately difficult to envision the future (which is why Vinton Cerf prefers the more 'relatable' qualitative argument), so noting that every dollar spent on nuclear weapons is a dollar not spent on schools, healthcare, or climate solutions could make this existential risk more salient. I am partial to Martin Hellman's argument for quantitative framing, and I think quantifying present-day costs could address some of the concerns Cerf raised.

Thinking about @louisjlevin's comment, I am also advocating for this framing because it is all too easy to fall into despair when talking about existential risks. Despair can become a barrier in itself, turning people away from even thinking about such a potentially catastrophic issue, let alone acting on it. This happens with climate change, another potential existential risk, but nuclear disarmament doesn’t yet seem so entwined with that kind of messaging, so there is more hope here!

Finally, I am taking a page out of a storied tradition in some fiction circles. Narrative change practitioners who emphasize the importance of hope in messaging about catastrophe note that this kind of narrative work has long been done through sci-fi, futurist, and speculative works. Yes, we need to warn people of the futures in which we haven’t done enough – in which we have multi-handedly caused the extinction of the human species (or worse). But we also need to help people imagine futures where we have successfully averted catastrophe – and then we can talk about how we can get there.

April 8 - Environmental Devastation - Memos · Issue #6 · jamesallenevans/AreWeDoomed (github.com)

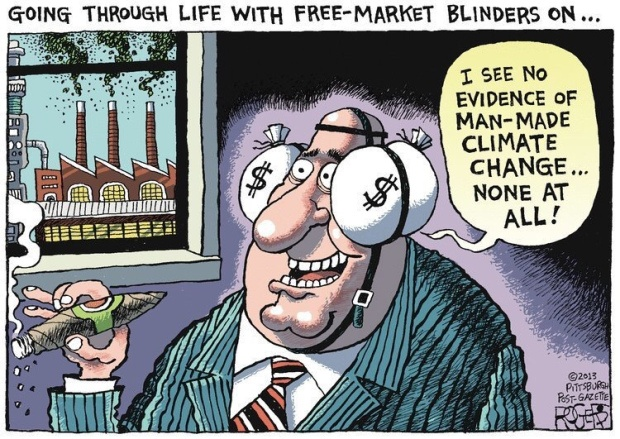

The Day After Tomorrow (2004) presents climate change as a potentially civilization-ending phenomenon, manifesting through a series of natural disasters that culminate in an unprecedented freezing over of the northern hemisphere. I originally watched – and read – this story a couple of years ago, so revisiting it was very interesting. While the catastrophe still has a visceral emotional impact on me, I feel that the narrative hasn’t aged well.

The film clearly highlights the existential threat that climate change might pose. By moving between different characters, each of whom experiences the dangers of climate change in a different way, The Day After Tomorrow offers a variety of perspectives for viewers to identify with. Particularly effective is the relationship between Jack Hall, the protagonist and prescient scientist, and his son Sam. Their separation for most of the film provides an immediate emotional hook, introducing viewers to the ‘human’ impact of climate change.

Unfortunately, because this family separation is the film's central emotional conflict, The Day After Tomorrow is a “disaster film” first and a “representation of catastrophic risk” (let alone an ‘accurate’ one) second. The catastrophe is partly caused by then-Vice President Raymond Becker dismissing Jack’s warnings about a coming ice age. This is not terribly representative of contemporary political views on climate change (nor of how climate advocacy works), but it does hint at the barriers that politics may create for addressing climate change. However, instead of delving into the policy solutions that might dismantle those barriers, such as more robust global governance systems or combined economic and technological shifts, the film ends with now-President Becker apologizing for slighting Jack earlier, with no follow-up besides a promise to send helicopters. This essentially communicates that the main cause of the fictional ice age was the failure of political elites to listen to scientists: once Becker acknowledges that Jack was correct, the film takes an emotional turn toward hope and optimism as the storm begins to clear. This completely misrepresents the true risk of climate change, which lies not simply in the raw threat of a shifting climate but also in the difficulty of finding solutions that work for many different stakeholders and communities.

For a film aimed at the general Western public, I can accept subpar science and an inequitable, myopic focus on the domestic impact of climate change. But I struggle to excuse the film’s implication that averting climate catastrophe might simply be a matter of making politicians listen to scientists.

April 15 - Cyber - Memos · Issue #9 · jamesallenevans/AreWeDoomed (github.com)

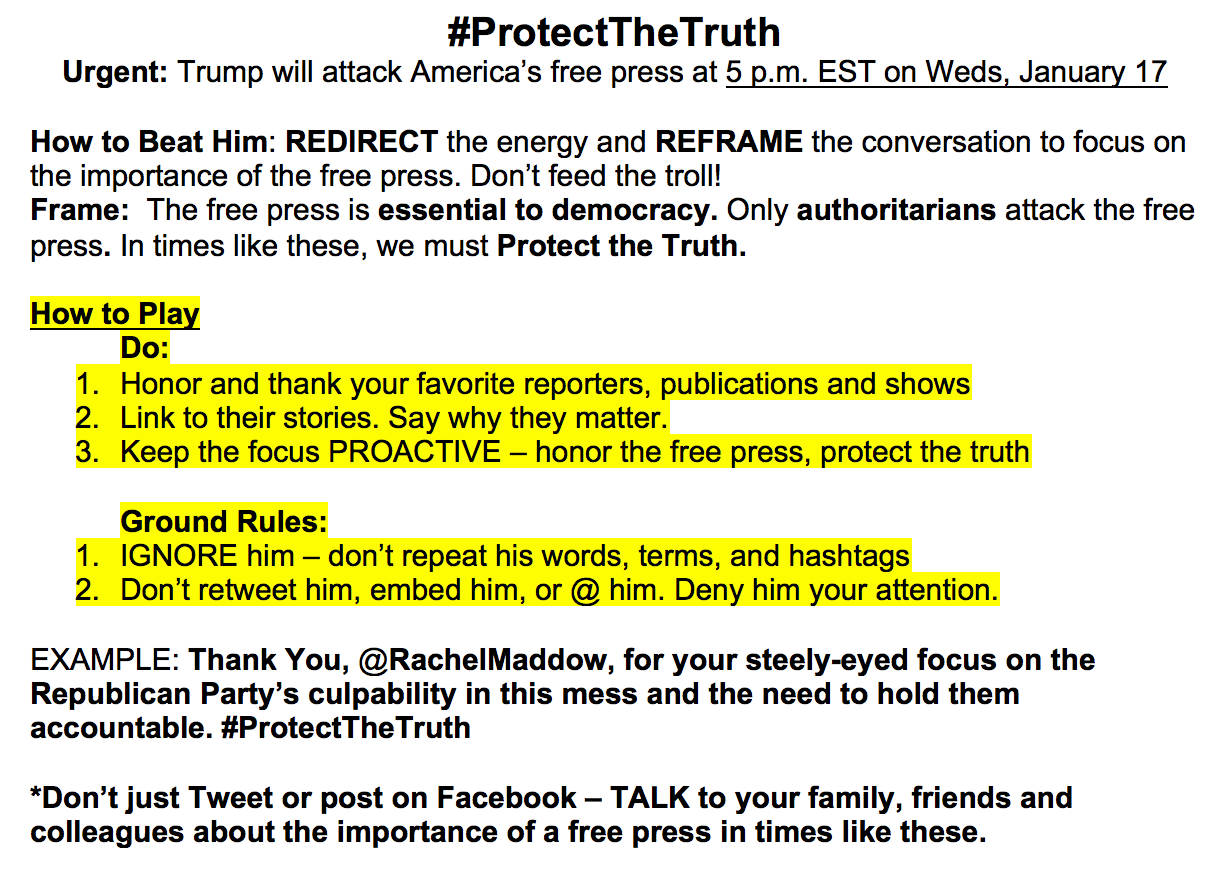

Dr. Lin's warning about cyber-enabled information warfare as a potential existential threat rings worryingly true for me. However, I am not entirely convinced by Lin's framing of this threat as "the end of the enlightenment." Lin's consistent warnings that cyber warfare degrades Enlightenment values (e.g. the "pillars of logic, truth, and reality"), along with Lin's mentions of emotional biases as an exacerbating factor, imply that an Enlightenment-style valorization of logic might be integral to combatting this threat. Lin does not make that claim outright; indeed, Lin notes that the "development of new tactics and responses" is necessary. And I agree that the current media environment degrades our collective reasoning abilities. Still, I would like to reframe emotion as not only part of the problem but also part of the solution (at least in American society).

First, it is important to situate my response in America's current context. Regardless of whether we should seek to 'return to the Enlightenment,' those values no longer seem widely held: the Media Insight Project's latest survey notes that the values of transparency and spotlighting wrongdoing, which seem related to the pillars of logic, truth, and reality, are not shared by a majority of Americans. Thus, devising ways to ensure transparent, factual communication is unlikely to fully resolve this democratic threat, if only because many Americans do not seem to care much about those values (for example, greater transparency might not increase their trust in institutions, which is fundamental to democracy). This is not to say that improving information transparency and factual communication is unimportant; indeed, as America's media environment improves, these values might become more widely held. But given that the public does not necessarily share these Enlightenment values, we cannot assume that appealing to them will suffice in crafting solutions.

This seems problematic if we share Lin's concern that "without shared, fact-based understandings [...] what hope is there for national leaders to reach agreements?" Fortunately, logic's counterpart, emotion, can step in to help. Lin repeatedly notes that emotions can impair our ability to reason fairly, for instance through the need to maintain one's social identity. But emotion is also tied to our values: anger at a group leader being criticized, for example, might be motivated both by a desire to maintain one's identity and by a high value placed on respecting group leaders. This means that leveraging commonly held values in the service of combatting cyber warfare and disinformation could be an important step toward protecting democratic institutions. How could we tailor our solutions to appeal to audiences whose values range from loyalty to fairness? Developing fact-based understandings might not mean amplifying one universal message or eschewing emotion; instead, it could mean investigating which frames most appealingly convey facts to different audiences.

April 22 - AI - Memos · Issue #12 · jamesallenevans/AreWeDoomed (github.com)

Reading The Tale of the Omega Team was very interesting because of how benevolent the Omegas' goal of "taking over the world" was. I've always been drawn to the idea of a benevolent dictator: wouldn't it be nice to have a super-smart agent (like, say, an Artificial General Intelligence, or AGI) figure out and guarantee the best way to organize society?

Perhaps the most obvious counterargument is that governments can always be co-opted, so any sort of authoritarian state would be dangerous, regardless of how benevolent the initial dictator is. But what if we had an effectively immortal dictator whose only purpose was to optimize for our most preferred futures? Though this is not exactly what The Tale proposes, since the Omegas remain vigilant over Prometheus, it is not a far jump. Would that really be so bad, or could it be the best outcome of AGI development? After all, there is room for both optimism and pessimism: alongside great existential risks, there is existential hope, too.

Indeed, in the rest of Life 3.0, Tegmark details 12 possible futures following AGI development, of which four (a third!) seem utopian. But how could we choose? In the excerpt, democracy is (ironically) one of the Omegas' slogans, something that arguably would no longer exist under a benevolent dictatorship. Given these two potential AGI futures, would we rather hand over optimization to AGI or maintain democracy and civic engagement? This is not merely a question of 'what works': even a perfectly functioning democracy (i.e., one equally capable of optimizing for preferred futures) would require some form of civic discourse and interpersonal engagement, which is qualitatively different from a background AGI that tweaks institutional policy as needed. This kind of debate calls for an inclusive and nonjudgmental discussion of our values, which unfortunately does not seem very common. I am curious about public deliberation as a way of facilitating this sort of dialogue, but regardless of the means, it seems important to better understand what kind of society we want to create in order to understand how to approach value alignment, which, according to Stuart Russell, includes optimizing for 'our preferences over future lives.' This is especially true if we lean toward benevolent dictatorship, because Russell notes that it would be safest to build caution into AGI (for example, preferring to keep more options open rather than fewer), which makes it unlikely that an AGI would ever initiate a dictatorship on its own.

I think the idea of figuring out humanity's collective values runs into multiple philosophical quandaries, such as whether our values will drastically change in the future (and if so, which version of us should we optimize for?) or how we can account for the preferences of future generations before we 'lock in' such a society. Still, I am hopeful that reminding ourselves of the positive stakes of existential risk will motivate us to address the negative ones. It is not just that we may lose all that humanity has achieved thus far, but also that we may miss out on far greater futures.

April 29 - Inequality - Memos · Issue #15 · jamesallenevans/AreWeDoomed (github.com)

Chakrabarty draws a distinction between the 'globe' of globalisation and the 'globe' of global warming, moving us away from the political concerns of international justice and toward the question of humanity's role on a planet filled with non-humans. However, I am unclear on the implications of Chakrabarty's conclusion. In particular, Chakrabarty argues that "the conversation [about taking non-humans seriously] will not proceed very far without negotiating the terrain of post-colonial and post-imperial formations of the modern." Is this a claim about the sociopolitical feasibility of seriously considering the moral value of non-humans, or about the philosophical tractability of attempting such a conversation without first tackling modernity? The former is a practical question, and hence a debate over empirical evidence, whereas the latter is more of a theoretical contention. Either way, I struggle to see the issue as being as weighty as Chakrabarty makes it.

Firstly, there have been movements that advocate extending moral consideration to non-human actors, such as the animal liberation movement. While such beliefs are still not mainstream, the movement has arguably played an important role in increasing awareness of inhumane farming and demand for alternatives to animal products, which has bled into policy worldwide. Ironically, much of that demand is driven by human self-interest; for example, animal agriculture is often cited as a major contributor to climate change. Still, a byproduct of moving away from animal agriculture will be improved welfare for non-humans, suggesting that some movement away from anthropocentrism is feasible even without tackling the issue of modernity. On the other hand, I concede that it remains to be seen whether we will ever truly value non-humans morally without first understanding our formations of modern society.

Secondly, other scholars are similarly trying to negotiate these two understandings of humanity without explicitly addressing modernity, which suggests that doing so is philosophically tractable. For example, in The Land of Open Graves (2015), De León conceptualizes agency as emerging from interactions between human and non-human actors, which he terms the 'hybrid collectif.' De León applies the hybrid collectif to the political issue of immigration and shows how it illuminates the role of the U.S. government (specifically, border control) in the deaths of people attempting border crossings, deaths conventionally attributed to the non-human cause of the desert's harsh conditions. To me, this seems like a reasonable argument for taking non-humans seriously in a political context even though De León does not seem to negotiate post-colonial and post-imperial conceptions of the modern. If anything, the migrants portrayed in The Land of Open Graves are bound up in the human-centric concept of emancipation (e.g. reaching the U.S. as a form of freedom), and De León does not explicitly address this.

Overall, I remain somewhat dubious of Chakrabarty's claim that humanity is incapable of giving animals political and moral consideration without first negotiating our conceptions of modernity. There already seem to be efforts underway that do not address the issue Chakrabarty raises, but much of my skepticism may be dispelled once I better understand his views.

May 6 - Policy - Memos · Issue #17 · jamesallenevans/AreWeDoomed (github.com)

Ord takes a globalist view of humanity, emphasizing the shared potential futures of our species to argue for the importance of safeguarding our potential. This reminds me of Agarwal and Narain’s argument against one-worldism: is Ord’s philosophy of longtermism rooted in a form of colonialism that ignores the disparate responsibilities of different countries?

Going by Agarwal and Narain’s criterion of causal relationship, this seems plausible. Agarwal and Narain argued that global warming is caused by excessive carbon emissions (i.e., emissions exceeding the ‘natural allocation’) and that only a handful of countries, primarily in the Global North, are responsible for them. Thus, the responsibility (and corresponding sacrifices) for resolving global warming should fall mostly on those countries. Is it possible that only a handful of countries are likewise responsible for our current level of existential risk? This seems possible for the nuclear threat, as Perry points out: “unlike all other nations, the United States bears the greatest global responsibility […] America brought the bomb into the world.” (206) It also seems reasonable to assume that artificial intelligence development will be concentrated in the wealthiest nations, such as the United States, although China is a huge player in this space as well. Cyber disinformation also seems to be driven primarily by a few players (the United States, Russia, China, Iran), though cyber warfare seems increasingly widespread. Climate change, of course, has already been covered.

Yet even if Ord is perpetuating a colonialist mindset, I am not convinced that this affects his argument or its implications. Firstly, Ord’s recommendations are general, not focused on any single group or country. Though this might unwittingly ignore global injustice by not placing more responsibility on a specific group, Ord’s conclusion [that humanity must prioritize existential risks] would not change simply because the distribution of action changes. Secondly, Ord’s claim that “every single one of us can play a role” (216) accords with Agarwal and Narain’s point that countries that may not be responsible for global warming (e.g. India) must still act. However we got here, we are in our present situation, and our choices from here on out are our responsibility. Even if, say, the just action for an Indian citizen is to press their elected representatives to hold a more culpable nation accountable, that is still an action they must choose to take. Finally, if we are centering the conversation on justice, it is imperative to consider intergenerational justice. Ord is motivated to safeguard the many future generations that could exist, and that matters for everyone regardless of nationality. It might be that widespread public support will not materialize for interventions that are not considered fair given our history, but focusing on past injustice need not mean enacting injustice against future generations. There must be some way to balance present-day (intragenerational) justice with (intergenerational) justice for future humans.

May 13 - Pandemics - Memos · Issue #21 · jamesallenevans/AreWeDoomed (github.com)

Biodefense in Crisis is a holistic overview of the different areas in which improvements must be made to better prepare the U.S., and the world, for the next biothreat. However, one crucial element is missing from the report: inequality. As COVID-19 has shown us, inequality on both domestic and global scales has threatened humanity's ability to suppress this pandemic. Given the current trajectory of increasing globalisation, redressing these inequalities may become ever more important in constructing our biodefenses.

National disparities have manifested both in the impact of and the response to COVID-19. The latter is more relevant for biodefense: a successful strategy must be able to respond quickly and comprehensively to an outbreak, and unfortunately, racial inequity persists in vaccination rates. This could be linked to disparities in public health infrastructure, such as the lower density of vaccination sites in majority-minority neighborhoods. With such disparities, herd immunity seems far off for the U.S. This is an important qualifier for Recommendation 15, "Provide emergency service providers with the resources they need to keep themselves and their families safe." Even if all service providers were given the necessary resources, the response would not protect the entire nation if providers are not sufficiently distributed. Inequity does not only affect the specific neighborhoods experiencing underinvestment; it affects surrounding areas and the rest of the nation, because a biothreat does not respect geographic or socioeconomic lines. To truly develop a robust biodefense strategy, America must simultaneously invest in developing and supporting equitable public health infrastructure (e.g. facilities for service providers).

Throughout Biodefense in Crisis, the authors note the importance of global coordination and collaboration. Again, however, they do not explicitly emphasize the role of inequality in biosecurity. India's current crisis is not only a national emergency but also a health and economic threat to its neighbors. Just as inequity in the U.S. threatens the nation's ability to reach herd immunity, inequity worldwide threatens humanity's ability to overcome COVID-19. The global inequality in vaccine distribution and case fatalities is staggering: while the U.S. plans to open up by the end of 2021, almost 130 countries haven't even begun vaccinating their populations. Although the U.S. has implemented travel bans, they don't cover most of the world, and it seems unsustainable to keep increasing travel restrictions. If anything, these restrictions seem likely to lift as the U.S. opens up. Yet many of the world's most populous places could still be fighting COVID-19 until 2023. This inequality leaves even privileged places like the U.S. in a dilemma, particularly when combined with the decreasing probability of reaching herd immunity. Does the U.S. restrict travel for years while the rest of the world catches up, or does it return to business as usual? And what about the potential for COVID-19 to *increase* inequality?

There is a third way. The U.S. and similarly well-off nations could contribute more to global COVID-19 efforts while working on health equity domestically. As global society comes out of the pandemic, governments must work harder to form international collaborations around resource access. Global medical access has always been deeply unequal, and COVID-19 has shown us how urgently we must change that. When it comes to public health, humanity is only as strong as its weakest links.

May 20 - The Future - Memos · Issue #23 · jamesallenevans/AreWeDoomed (github.com)

Reading Rees's On the Future felt excitingly reminiscent of Ord's The Precipice: both deal with concepts that were familiar and fascinating to me, yet from slightly different perspectives. I feel there are more similarities than differences between the two. Rees and Ord both mention stewardship as a motivation for safeguarding humanity, the trend toward (and important nuances in) societal progress, the potential for space colonization, the relative importance of anthropogenic risks, and the many different avenues for action. I will focus on the last point here, as I realized that both Rees and Ord are affiliated with the 'effective altruism' (EA) community.

In Chapter 7 of The Precipice, starting on page 214, Ord mentions that on average we work about 80,000 hours over a lifetime. That means devoting one's career to working on urgent causes could have a huge impact! Similarly, in the Conclusions of On the Future, Rees explores the role of science and scientists in humanity's future. Rees goes into more detail than Ord, specifically suggesting that "those embarking on research should pick a topic to suit their personality, and also their skills and tastes [...] And another thing: it is unwise to head straight for the most important or fundamental problem. You should multiply the importance of the problem by the probability that you’ll solve it, and maximise that product."
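Rees's rule of thumb is, in effect, an expected-value calculation. Here is a minimal Python sketch of it, assuming each candidate research topic can be given a rough numeric importance and a subjective probability that you solve it; the topic names and all numbers are hypothetical, chosen only for illustration:

```python
from typing import NamedTuple


class Topic(NamedTuple):
    name: str
    importance: float  # hypothetical rating of how much solving it would matter
    p_solve: float     # hypothetical subjective probability that *you* solve it


def best_topic(topics: list[Topic]) -> Topic:
    """Rees's heuristic: pick the topic that maximises importance * P(solve)."""
    return max(topics, key=lambda t: t.importance * t.p_solve)


# Illustrative (made-up) candidates.
candidates = [
    Topic("most fundamental problem", importance=100, p_solve=0.01),  # product ~1
    Topic("tractable subproblem", importance=20, p_solve=0.3),        # product ~6
]

print(best_topic(candidates).name)  # tractable subproblem
```

The "most fundamental problem" is five times as important here, but its tiny chance of success makes the more modest topic the better bet, which is exactly Rees's warning against heading straight for the biggest problem.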

Rees's suggestions struck me as very similar to the framework used by 80,000 Hours, an EA organization that provides in-depth, constantly updated career-planning information for people who want to 'do the most good' (i.e., aspire to be effective altruists) with their working hours. A simplified version of their framework is: (Career Capital + Impact + Supportive Conditions) * Personal Fit, where I think of career capital as 'potential future impact.'
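To make the simplified formula concrete, here is a minimal Python sketch that treats each factor as a 1 to 10 rating. The rating scale, the function name, and the example scores are my own illustrative assumptions, not anything 80,000 Hours publishes:

```python
def job_score(career_capital: int, impact: int,
              supportive_conditions: int, personal_fit: int) -> int:
    """Simplified career-path score as summarized in this memo:
    (career capital + impact + supportive conditions) * personal fit.
    All inputs are hypothetical 1-10 ratings."""
    return (career_capital + impact + supportive_conditions) * personal_fit


# Two hypothetical paths: high raw impact but poor fit,
# versus moderate ratings with strong fit.
high_impact_poor_fit = job_score(career_capital=6, impact=9,
                                 supportive_conditions=5, personal_fit=2)
moderate_strong_fit = job_score(career_capital=6, impact=6,
                                supportive_conditions=6, personal_fit=8)

print(high_impact_poor_fit)  # 40
print(moderate_strong_fit)   # 144
```

Because personal fit multiplies the sum rather than adding to it, a strong fit can outweigh a higher raw impact rating, which echoes Rees's advice to pick a topic that suits one's personality, skills, and tastes.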

Career capital refers to the common use of the term: the potential for a job to improve your future prospects. In this specific framework, I interpret it as akin to 'potential future impact' because 80,000 Hours always frames work in terms of how impactful it is. This is perhaps the factor least related to Rees's suggestions, because it's the most generic concept of the three, I suppose!

80,000 Hours determines impact by a combination of three factors: the problem, the opportunity, and your personal fit. This reminds me of Rees's advice not to head straight for "the most important or fundamental problem," which would mean considering only one of the three factors, but to also consider "the probability that you'll solve it" ('the opportunity') and your "skills and tastes" (personal fit).

*Note: Confusingly, the diagram above treats 'personal fit' as separate from career impact; that is because the diagram is from 2017, whereas the guide that 80,000 Hours currently endorses is from 2020. I have chosen to provide the slightly outdated diagram because their current guide uses a more complicated diagram that is specific to individual career opportunities rather than job paths generally.*

The latter two seem fairly straightforward. Whether a job opportunity in a given area will allow you to effect change might be difficult to measure, but it is conceptually simple. For example, you could spend 80,000 hours as an unpaid intern at the EPA or as its administrator. The latter clearly offers greater potential to effect change, even though both roles relate to climate change and the environment. Personal fit is even simpler: what skills do you already have? What skills do you want to learn? What issues are you most drawn to?

Evaluating the importance of a problem, however, is a little more complicated from the EA perspective. Our class regularly posits climate change as the cause of civilization's doom, which might suggest that climate change is THE problem to work on. But that isn't necessarily true under the 80,000 Hours (and EA) framework. Working out which problem to prioritize means assessing its importance, tractability, and neglectedness. Importance refers to the scale of the issue: how bad is it, and how bad can it get? Arguably, that is what our class poll is measuring. Tractability refers to how solvable the problem is right now, somewhat akin to 'the opportunity' of a specific job but broader. For example, the problem of AGI might not seem very tractable given the uncertainty surrounding its development. Finally, neglectedness refers to how little attention the problem currently gets. Climate change, for example, is not at all neglected relative to AI, because far more people are interested in and concerned about the former. I would also argue that the class poll partly measures the opposite of neglectedness, in that people may tend to pick what they have already heard of (the availability heuristic, perhaps).

To keep this from getting too long, I'm going to end here. However, I will follow in Ord and Rees's footsteps and urge you to consider a career that you think can improve humanity's existential prospects! 80,000 Hours regularly publishes career guides, and they are a lot of fun to browse. As college students, we still have plenty of time to decide what to do. Will you be an AI safety researcher, figuring out how to solve the alignment problem? Or maybe you want to figure out how to prevent a global pandemic from ever happening again? Or perhaps you want to spread your newfound knowledge of existential risk and build the community of concerned humans?

There are so many possibilities, not only for the human species but for us as individuals, too. Go forth!

May 27 - Artistic Imagination - 2051 Objects · Issue #18 · jamesallenevans/AreWeDoomed (github.com)

Statement

U.S. democracy is flawed for many reasons, one neglected cause being the underrepresentation of future generations. As climate change shows, many of humanity's greatest challenges have effects that span decades and generations. This makes it difficult to motivate action or even to understand the full scale of a problem. It also creates intergenerational _in_justice: by creating problems that will be borne primarily by future generations rather than ourselves, we are teetering toward leaving the world worse off than when we came into it. This is at heart an institutional failure, for there is little to no way to give the yet-to-be-born a voice. I show one way this could be addressed above, through the creation of the Chamber of the Future, whose sole purpose is to represent future humans. Comparable institutions already exist in other countries, such as Singapore and Wales. Given the political power the U.S. currently wields, however, such a means of representation might be far more impactful if implemented here.