Introduction: On a great Yudkowsky essay: `Serious Stories'

Yudkowsky's short essay `Serious Stories' (02009) is a fascinating unit of culture, and I commend it to you. (You can - and probably even ought to - read it online, here.)

That essay deals with the crucial Futures Studies problem, that:

"Every Utopia ever constructed—in philosophy, fiction, or religion—has been, to one degree or another, a place where you wouldn't actually want to live."

It also raises such crucial questions as: What Is A Story? And as Yudkowsky rightly writes:

"If you read books on How To Write... these books will tell you that stories must contain "conflict"."

Brief Boring Backstory Bit: Among other occupations, I've been a professional storyteller since 01993 (three decades now, yikes), and along the way I studied Narrative, in order to try and do it less-worse... I published a free book while at Film School in 01995 (a summary of useful narrative tools for professional screenwriters), and in 02016 I completed a PhD in Evolutionary Culturology at The Newcastle School of Creativity, which involved a lot of close study of Story/Narrative. (...More on that PhD here, and a super-brief Lit Review of Narratology, here.) But I digress. [End of Boring-Backstory-Bit]

What is a story?

I know that the great philosopher of science Sir Karl Popper said "What is ~?" questions are a waste of time and space, but whatever.

My own preferred algorithm (or formula, or equation) for `story/narrative' - due both to its simplicity/parsimony and its general applicability - is Jonathan Gottschall's (02012) definition, from the great book The Storytelling Animal:

`Story = [1] Character + [2] Problem + [3] Attempted Extrication’

(Gottschall 02012, p. 52)

By this definition, without those 3 key elements present [#1,2 & 3, above], you may well be experiencing something interesting, but technically, a "story/narrative" it: isn't.
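Since Gottschall's definition reads like a checklist, it can be sketched as a tiny predicate. This is a toy illustration only, assuming (hypothetically) that each element can be represented as a short text label; the names `Narrative` and `is_story` are invented for the example, and real narrative analysis is of course not a string check.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Narrative:
    """Toy container for Gottschall's three story elements (hypothetical names)."""
    character: Optional[str] = None              # [1] an intentional agent
    problem: Optional[str] = None                # [2] a problem, goal, or objective
    attempted_extrication: Optional[str] = None  # [3] the attempt to get out of it


def is_story(n: Narrative) -> bool:
    """Return True only if all three elements are present and non-empty."""
    return all([n.character, n.problem, n.attempted_extrication])


if __name__ == "__main__":
    sunset = Narrative(character="a hiker watching a sunset")  # no problem, no attempt
    heist = Narrative("a thief", "the vault is guarded", "tunnel in from next door")
    print(is_story(sunset), is_story(heist))  # False True
```

The sunset may well be interesting to experience, but with no Problem and no Attempted Extrication, by this definition it is not a story.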

In short, a `Problem' (or, a Goal, or, an Objective) for an Intentional Agent (i.e., a Character/s) results in conflict, as indeed Yudkowsky notes, in his great essay...

And any `Scene' (in any communication medium) without dramatic conflict can get boring (uncompelling) fast.

(In simple terms, watching two or more agents `do battle' is usually engaging for us humanimals. ...What's not to learn-? We tend to root for one of them, and, pay close attention to what strategies work, in what situations.) Enter: Game Theory... (another story, for another time.)

All Life Is Problem-Solving

As the great Popper pointed out, in his wonderful book of collected essays, All Life Is Problem Solving (01999):

`The great majority of theories are false and/or untestable. Valuable, testable theories will search for errors. We try to find errors and to eliminate them. This is science: it consists of wild, often irresponsible ideas that it places under the strict control of error correction.

Question: This is the same process as in amoebas and other lower organisms. What is the difference between an amoeba and Einstein?

Answer: The amoeba is eliminated when it makes mistakes. If it is conscious it will be afraid of mistakes. Einstein looks for mistakes. He is able to do this because his theory is not part of himself but an object he can consciously investigate and criticize.' (Popper 01999, p. 39)

Small wonder that people love (good) stories so much.

A good story is: Game Theory Illustrated.

Or if you prefer, Game Theory Enacted.

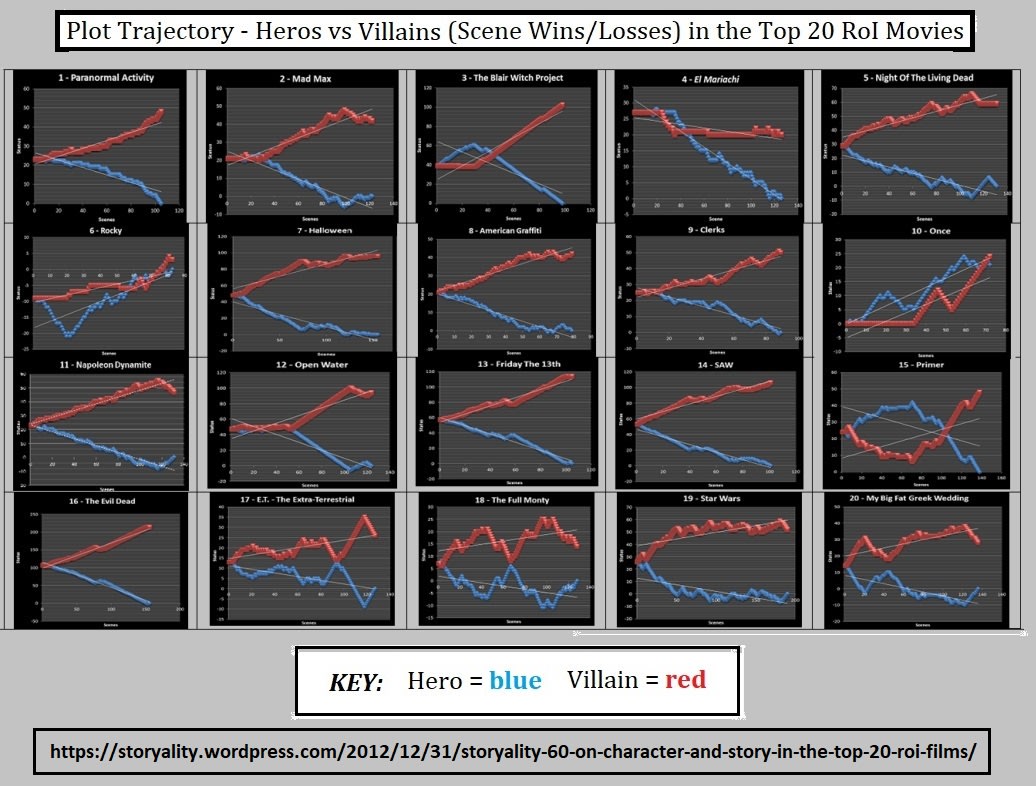

As part of my 02016 PhD-work, I plotted all the Scenes in the top-20 most profitable movies, awarding 1 point when a character won a scene (at scene's end), and deducting one when they lost (some Scenes are a 0-0 draw). Those charts look like this:
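The scene-scoring scheme described above can be sketched as a running tally. This is a minimal sketch, assuming the charts plot each character's cumulative score scene by scene (the text only states the +1 / -1 / 0 rule); `running_score` and the sample outcomes are hypothetical and are not data from the PhD study.

```python
from itertools import accumulate
from typing import Iterable, List

# Scene outcomes for one character, per the rule described in the text.
WIN, DRAW, LOSS = 1, 0, -1


def running_score(outcomes: Iterable[int]) -> List[int]:
    """Return the character's cumulative score after each scene.

    Assumes (hypothetically) that the charts plot a running total.
    """
    outcomes = list(outcomes)
    for o in outcomes:
        if o not in (WIN, DRAW, LOSS):
            raise ValueError(f"unexpected scene outcome: {o!r}")
    return list(accumulate(outcomes))


if __name__ == "__main__":
    # Made-up outcomes for illustration only, not data from the study.
    scenes = [WIN, LOSS, LOSS, DRAW, LOSS, WIN, WIN, WIN]
    print(running_score(scenes))  # [1, 0, -1, -1, -2, -1, 0, 1]
```

Plotting that list against scene number gives the rising-and-falling "fortunes" line; the final value is simply the character's wins minus losses.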

...Why am I telling you all this?

My point being, there are:

Utopias Worth Wanting

I suggest there are some Utopias that you would actually want to live in...!

Yudkowsky (and, as he notes, Orwell) is right that most canonical/popular stories about Utopias aren't very appealing...

Storytellers aiming to capture and maintain audience attention[1] need to keep throwing problems (thus, conflict) hard-&-fast at their protagonists, or else folks fall asleep.

In The EthiSizer - A Novellarama (02022), The EthiSizer AI writes:

`On Good and Bad Science Fiction: Utopias, Dystopias, and everything in between

A Google Ngram search leads one to believe that people have written more about `utopias’ than `dystopias’.[68]

Yet in the realm of fiction, the opposite seems to hold! In the great short story collection Brave New Worlds (Adams, 02012), Ross E. Lockhart collated a list of `notable utopian fiction’ (20 works, ranging from Iain M. Banks’ Consider Phlebas (The Culture Series) to B. F. Skinner’s Walden Two), and conversely, `notable dystopian fiction’: 153 works, ranging from Andrew Foster Altschul’s Deus Ex Machina, to Rob Zeigler’s Seed (p. 99%).

Currently (at the time of writing of this sentence), Wikipedia lists 97 works under `List of Utopian Literature’[69] and yet 274 works under `List of Dystopian Literature’.[70]

Notice a pattern? Why so many more stories about bad futures, than good? One reason is: Stories (narratives) where everything’s great are not as compelling to experience. Authors need to sell books to put food on the table. As evolutionary literary scholar Jon Gottschall (02012) rightly notes, humanimals are “the storytelling animal” after all. And, without a problem–without something going wrong–there is no story, or narrative. Most authors take the path of less resistance, and figure: Why not have pretty much everything go wrong, as in, a dystopia?

But as Harari notes: life–and indeed reality–is not a story! See (Harari, 02018, Ch 20).

A feature of human nature is that we humans like to mentally escape into fictional worlds. Dystopian stories like Frankenstein, The Terminator franchise, and The Hunger Games series all sell vastly more copies (and movie tickets) than do utopian stories, such as Iain M. Banks’ Culture series.

The evolved psychology of the humanimal mind has a negativity bias, finding bad news more memorable, and attention-worthy than good news, as Evolutionary Psychologist David Buss (02012, pp. 393-4) quite rightly notes.

As a result of this negativity bias,[71] people fixate on murderous reanimated corpses such as Frankenstein’s monster; murderous psychotic computers such as HAL-9000 from 02001: A Space Odyssey; The Terminator’s SkyNet; and the machines in The Matrix franchise, rather than say the benevolent digital assistant in the movie Her (02013).

Source: The EthiSizer - A Novella-rama (The EthiSizer, 02022, p. 52)

Problem: How To Create a Utopia That's Narratively Compelling?

So, if we imagine a future where a singleton arises - thus solving all global problems, and also ending all suffering - where's the "story-juice"...?

...Who wants to watch, that-? ...Let alone, live in it-?

A world with no more: wars, murders, rapes, thefts, trauma, injustice...

...Wouldn't it be: super-boring?

After all, if there are no more problems (thanks to The All-Seeing Eye of The EthiSizer), then surely there's no story‽

Not so fast...

If you have a singleton - say, an EthiSizer - that behaves like an omniscient and omnipotent `god' of yore,[2] and punishes `sinners' (folks whose Personal Ethics Score dips below 0%), there's still plenty of scope for drama, action, and conflict.

...Of course, one's first instinct (probably?) is to surmise that, in a perfect world (a Utopia), nobody would ever do anything wrong, bad, or evil...

Thus, no problems. Thus, no conflict. Thus, no suffering.

But on the other hand, if people still have freedom of choice (which is reflected in their actions, and thus, in their Personal Ethics Score), surely some bad guys will get up to their old tricks...

And the fun comes in when they get caught by The EthiSizer...

So in terms of story/narrative, plenty of scope for EthiSizer Droids to bust in and dispense ethical justice...

(So, in moviespeak, maybe think: RoboCop (01987)[3] meets the Terminator movies, via I, Robot.)

For the storytellers, the fun is in thinking up all the Ethical Violation scenarios...

(And for what it's worth, Tolstoy would probably approve, since in later life he felt that any author not clearly taking a moral position on their characters was a waste of ink and think.)[4]

For audiences/readers, the fun is in: seeing The Bad (Unethical) Guys, Lose.

I'd like to live in that world...?

No more corrupt politicians, for one thing.

Also - World Peace... No more war.[5]

I'd definitely prefer to live in that world...

Anyway, if it's of interest to any Futures Studies scholars, there are more specific examples (you might even call them `Narrative Case Studies') in this book, and that book.[6]

And please do be inspired to write your own Utopian Singleton Stories...[7]

Conclusion

To sum up: Yudkowsky's 02009 essay `Serious Stories' is great. As is that whole Value Theory Series of essays.

And yes, most Utopias in literature are: kinda boring...! (Thus, we have vastly more Dystopias in popular and classical literature. Especially, Science Fiction Dystopias.)

However, The EthiSizer suggests that a super-ethical world would be one worth living in, while still leaving plenty of scope for stories: problems, and (super-ethical) solutions.

Also, let's suck it and see-?[8]

Notes

[1] For more on all that, see the great book On the Origin of Stories: Evolution, Cognition, and Fiction (Brian Boyd, 02009).

[2] Some commentators have suggested that all of Earth's 10,000 past religions have psychologically prepared humanity for a Singleton, such as The EthiSizer. (They may be right?) Like Dataism, The EthiSizer makes Science a religion.

[3] Nothing wrong with the 02014 RoboCop remake; I just prefer satires. In fact, I prefer science-fiction mindbender satires, but that's just me.

[4] See A Swim in a Pond in the Rain (Saunders 02021), specifically the section on Tolstoy titled `And Yet They Drove On - Thoughts on Master and Man'. And Tolstoy really goes to town in `The Works of Guy de Maupassant' (Tolstoy 01894).

[5]

[6]

[7] See The 6E Essay for more details.

[8] (Side Note: I find it annoying when people form and pass `opinions' on things they haven't yet experienced - or simulated in a computer - themselves. Don't you?)