This is a linkpost from ClearerThinking.org. We've included some excerpts of the article below, but you can see the full post here.

A libertarian, a socialist, an environmentalist, and a pro-development YIMBY watch an apartment complex being built. The libertarian is pleased - "the hand of the market at work!" - whereas the socialist worries that the building is a harbinger of gentrification; the YIMBY sees progress, but the environmentalist is concerned about the building’s carbon footprint. They’re all seeing the same thing, but they understand it differently, because they inhabit different worldviews.

We can think of worldviews as snow globes. We each occupy our own snow globe and, when we’re inside it, it can seem like the whole world. If it’s snowing in our snow globe, we think it’s snowing everywhere; if our snow globe is made of green glass, everything looks green to us. We might not even realize that there’s anything beyond our snow globe! But if we can step outside, we see that our view from inside was only part of a much larger picture. If you can step outside your snow globe - and visit other people’s - you’ll be able to see a more accurate representation of the world and communicate better with others. For every snow globe you master, you’ll gain a powerful new lens through which to see the world - and you’ll see that no single snow globe has all the answers.

Image generated using the AI DALL·E 2

What makes a worldview?

Worldviews are a type of story we learn about how the world works and about what things matter and why. In this post, we put forward a theory of worldviews that will help you understand how different worldviews work. We call this "Snow Globe Theory." Every worldview includes many beliefs, but after reflecting on a wide variety of worldviews, we believe that almost every one has four central components. There are other common elements that some worldviews have but others don’t - for example, a strong culture, or a theory about trustworthy sources of knowledge – but this article will focus on these four central elements:

What is good?

Where do good and bad come from?

Who deserves the good?

How can you do good or be good?

You can understand a worldview quite well if you know what thoughtful people with that worldview would all answer in response to these four questions. While each individual member of a group will have somewhat different answers to the questions above, it is the portions of their answers that most members of that group share with each other that compose the group's worldview. Our article explains these four components in more detail, along with other factors that can contribute to worldviews.

Why it's important to understand how people think through the theory of worldviews

The truth lies outside any one worldview

Most of us are taught a worldview growing up, or we pick one up in college or from the media that we consume. It can be tempting to stay in that snow globe forever. However, being stuck in just one worldview limits our ability to see the world as it truly is. Worldviews tend to be simplistic, which has some advantages - it’s easier to understand the world and relate to other people if you share simple stories about good and bad, right and wrong. But because worldviews are simple, and the world is complex, any one worldview necessarily misses a lot of nuance. Just about every worldview has some truths that it is good at seeing accurately, and some truths that it is systematically blind to. But you don't have to be stuck in just one worldview. The more worldviews you understand, the more accurately you'll see the world.

Occupying many snow globes lets you better understand the world and communicate more effectively

You might think, "Ok, but I know that my worldview is correct - otherwise, it wouldn’t be my worldview! Why should I waste my time understanding people whose beliefs are flawed or toxic?" For instance, if you’re strongly committed to one of the progressive worldviews, you might not see the value in understanding the point of view of one of the conservative worldviews, and vice versa. However, it’s useful to know how other people think even if you believe that their worldview is deeply wrong. Governments, corporations, non-profits, religions, political parties, and other powerful groups are often guided by a particular worldview. If you don’t understand that worldview, then you’ll be unable to predict what these groups will do. You will also struggle to communicate with them in a way that they care about, or persuade them to do things differently.

When people engage with others who have a different worldview, they frequently make the mistake of relying too much on the stories and assumptions of their own worldview. But this is unlikely to work well, because the person they are talking to does not share these assumptions. To be really convincing to someone, you have to be able to see things from their perspective.

To give a topical example: in abortion debates, pro-choice progressives often misunderstand the worldview of pro-life conservatives. We’re publishing this article only a few days after Roe v. Wade was overturned; since then, abortion has been banned or restricted in several U.S. states.

We originally drafted this section before the overturning. We know that some of our readers will be feeling immense grief or distress at this ruling, and that abortion is an exceptionally fraught and emotionally charged issue at the best of times. No matter how much you believe that the overturning of Roe v. Wade was harmful and wrong, and even if your only goal is to win a political victory, we believe it’s still going to be useful to understand what pro-lifers really think: that way, you’ll be better placed to predict what they’ll do, or persuade them of your own point of view.

When talking about abortion, progressives sometimes say things like this tweet by @leilacohan, which has been retweeted more than one hundred thousand times:

"If it was about babies, we’d have excellent and free universal maternal care. You wouldn’t be charged a cent to give birth, no matter how complicated your delivery was. If it was about babies, we’d have months and months of parental leave, for everyone.

If it was about babies, we’d have free lactation consultants, free diapers, free formula. If it was about babies, we’d have free and excellent childcare from newborns on. If it was about babies, we’d have universal preschool and pre-k and guaranteed after school placements."

From a progressive worldview, this tweet is powerful and persuasive; but it’s unlikely to be persuasive to U.S.-based Christian conservative pro-lifers - the group that it’s apparently discussing - because it misunderstands their worldview. The argument is that if conservatives cared about babies, they would support babies and children through funding and social programs. The subtext is that since conservatives don’t support those things, they are being dishonest about what they care about, and just pretending that they care about not letting fetuses die. But the idea that we should try to make good things happen through government intervention is itself a progressive belief. Conservatives tend to think that individual responsibility is more important, and to be skeptical that large government-run social programs produce good outcomes.

While modern Christians have a variety of views on abortion (depending on factors such as their denomination), and the Bible doesn't address the topic directly, within the first few hundred years of Christianity there were Christian scholars arguing that life begins at conception (rather than birth). In the U.S., many Christian conservatives who oppose abortion believe that fetuses should have the same rights as born babies: we polled 49 people in the U.S. who say that they’re "very happy" that Roe v. Wade has been overturned. Of these, 90% said that they think abortion is wrong because it’s murder (i.e., similar to killing an adult human).

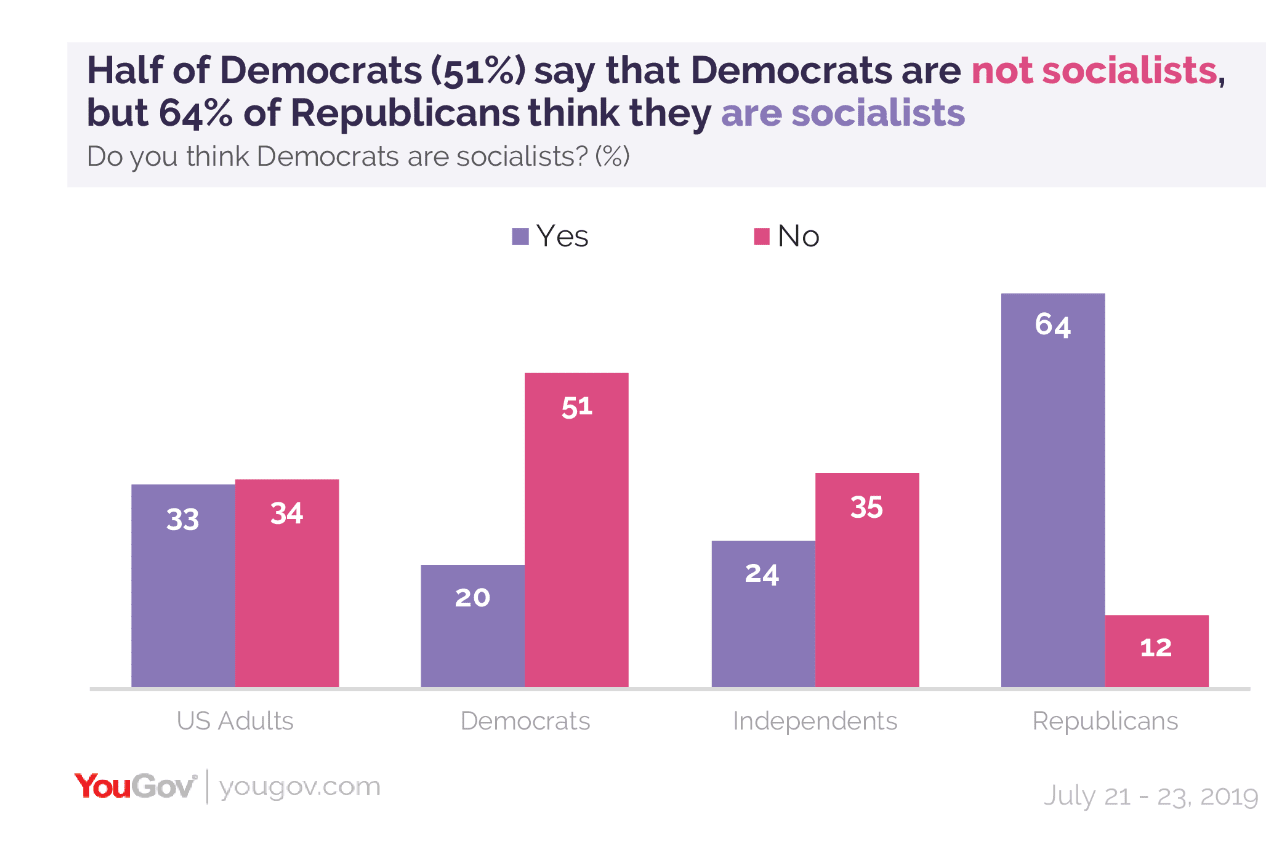

From within a U.S. Christian conservative worldview, there is no tension between (1) thinking that abortion is murder and (2) not supporting free diapers and childcare. If you momentarily step inside the snow globe of this worldview, it becomes clear that you can oppose what you see as murder without believing that the government should fund large programs aimed at improving people’s quality of life.

Another common misunderstanding (from the other side of the political spectrum) arises when conservatives label anyone left-of-center as a "socialist" and assume that liberals oppose capitalism (see the chart below which demonstrates the misunderstanding). This misreads the average liberal’s worldview: in the U.S., most self-identified liberals (for example, Democratic voters) aren’t "socialists" in the sense of "people who want to abolish capitalism and private ownership." Most support capitalism, but they differ from conservatives in that they support an expanded social safety net and favor moderate wealth redistribution through progressive tax policies.

This is just an excerpt from our article on ClearerThinking.org. You can use this link if you'd like to read the entire piece.

I think this article is quite badly mistaken, both in its account of what particular groups believe and its understanding of worldviews as such. It's also an excellent illustration of what happens when you fail to notice the walls of your own "snowglobe" - in this case, contemporary Anglophone politics (such as it is) as seen from the inside.

This is a domain where the most visible fault lines between worldviews really are about The Right and The Good - to a certain degree because politics has always been about The Right and The Good, but also because we happen to live in a culture - a worldview - that excludes most other substantive disagreements (from "are any normative claims even truth-apt, let alone true?" to "to what extent is political persuasion possible?" to "are real economies close to equilibrium?") from the political sphere.

On the rare occasions when other issues make their way into public discourse (or when you wait for things to get heated elsewhere), you see just as much baffled incomprehension.

Worldviews are not principally about moral beliefs because they're not principally about beliefs. They're principally about concepts - the ones you use habitually, the ones you recognize, the ones you actively reject, the ones you don't even know you're missing - and the relations between them. The object-level disagreements are, for the most part, further downstream.

An example from the linked post: the "American Communists" table (which I'm going to round off to "Marxists") is more wrong than right. Relevant excerpt:

The missing insight here, I think, is that unlike most of the other entries (but like the "Silicon Valley Techno-Optimists", with whom they share far more than most members of either group would care to admit), Marxists see themselves as carrying out a historical process, not advocating for a set of values.

The view of history, accordingly, should come first. Tanner Greer's account here is a good one.

Intrinsic values? Human ones, no further comment. Where does bad come from? Moloch. Where does good come from? Also Moloch. What should you do to [kill Moloch]? Feed it until it chokes on its own waste.

This is not a worldview that fits in a value-system shaped hole.

The piece that you quote says:

“The problem is not greedy capitalists, but capitalism”

Our piece says:

“Where does bad come from? Capitalism and class systems”

The piece you quote says:

“The only solution possible is for an outside force to intervene and reshape the terms of the game. Socialist revolution will be that force. With no stake in the current order, the propertyless masses will wipe the slate clean.”

Our piece says:

“View of history: Capitalism will lead to a series of ever-worsening crises. The proletariat will eventually seize the major means of production and the institutions of state power.”

So I’m confused, because while you frame what you’re quoting as a counterargument to what we say, it lines up well with it.

By simplifying it all down to Moloch you’re losing a lot of detail.

If you think that American communists don’t have an unusually strong intrinsic value of equality, then I think you’re mistaken (of course, I could be wrong). As far as I can tell, though, you didn’t provide any evidence against that.

We also didn’t say that communists “see themselves as advocating for a set of values.” We said they tend to have an intrinsic value, which is not the same thing.

If you think worldviews aren’t to a substantial extent about beliefs, then I suspect we just mean different things by “worldviews”. For instance, I would not call a bunch of the examples you gave “worldviews.”

My claim is that it's not an intrinsic value - it's the result of "instrumental convergence" between adherents of many different value systems, grounded in their shared conception of our present circumstances. If our circumstances were different, the conclusions would be too.

If, for instance, the United States had a robust social-democratic welfare state, most communists would be much less concerned with equality than they are now.

If it were instead some sort of agrarian yeoman-republic (certainly impossible now, but conceivable if early American history had gone very differently), then plausibly communists could instead take a mildly anti-egalitarian tack - inefficient small farmers frustrating the development of the productive forces and so on.

What you would never get, regardless of prevailing conditions, are communists who are also market fundamentalists.

Really what I think is that beliefs can't, in general, be cleanly separated from attitudes and concepts and mere habits of thought and so on - at the end of the day human cognition is what it is. But to the extent that there are distinguished object- and meta-levels, I think meta-level variation is the strongest driver of object-level variation between at least moderately educated people.

Those were intended as characteristic beliefs that would pick out clearly recognizable clusters, not entire worldviews themselves. "Love Thy Neighbor" as a substitute for "Christianity" - except that I don't have good names for the worldviews in question.

I've likely overestimated how salient the concrete-instrumentalist-(problem solver) vs. abstract-(scientific realist)-(theory builder) divide is for most people, but I think the two are clearly at least as different and understand each other at least as poorly as mainstream liberals and conservatives in the US.