All of defun 🔸's Comments + Replies

"Dwarkesh's fundraiser to fight factory farming has now raised over $1M!" - https://x.com/Lewis_Bollard/status/1954962845994819719

Bill Gates: "My new deadline: 20 years to give away virtually all my wealth" - https://www.gatesnotes.com/home/home-page-topic/reader/n20-years-to-give-away-virtually-all-my-wealth

I think the pledge hits a sweet spot. It's not legally binding, so it's not really a lifelong decision, but being a public commitment helps push people to stick to their altruistic values.

https://www.givingwhatwecan.org/faq/is-a-giving-pledge-legally-binding

Holden Karnofsky has joined Anthropic (LinkedIn profile). I haven't been able to find more information.

Anthropic's Twitter account was hacked. It's "just" a social media account, but it raises some concerns.

Update: the post has just been deleted. They keep the updates on their status page: https://status.anthropic.com/

Great initiative! 🙌🙌

I've been hoping for something like this to exist. https://forum.effectivealtruism.org/posts/CK7pGbkzdojkFumX9/meta-charity-focused-on-earning-to-give

What do EtGers need?

I've been donating 20% of my income for a couple of years, and I'm planning to increase it to 30–40%. I'd love to meet like-minded people: ambitious EAs who are EtG.

Thank you so much for the context 💛

My raw thoughts (apologies for the low quality):

- I think the target audience should be high earners who are already donating >=10% of their income. (Getting people from 0% to 10% would be out of scope for the charity; I think GWWC is already doing a great job there.)

- The two main goals:

- Motivate people to increase their donations (from 10% to 20% is probably much easier than from 0% to 10%)

- Help people significantly increase their earnings through networking, coaching, financial advice, tax optimization, etc.

- One of the most v

This made my day 💛

Excited to see if it can match or even surpass the most successful EA podcast of all time.

...By becoming a nonprofit entrepreneur you will build robust career capital and reach an impact equivalent to $338,000-414,000 donated* to the best charities in the world every year (e.g., GiveWell top charities)! If you do exceptionally well, we estimate that your impact can grow to $1M in annual counterfactual donations. This makes nonprofit entrepreneurship one of the most impactful jobs you could take!

*This calculation represents our most accurate estimate as of June 2023. It is based on assessing the average impact of charities we have launched and cons

John Schulman (OpenAI co-founder) has left OpenAI to work on AI alignment at Anthropic.

Thanks for the post!

“Something must be done. This is something. Therefore this must be done.”

1. Have you seen grants that you are confident are not a good use of EA's money?

2. If so, do you think that if the grantmakers had asked for your input, you would have changed their minds about making the grant?

3. Do you think Open Philanthropy (and other grantmakers) should have external grant reviewers?

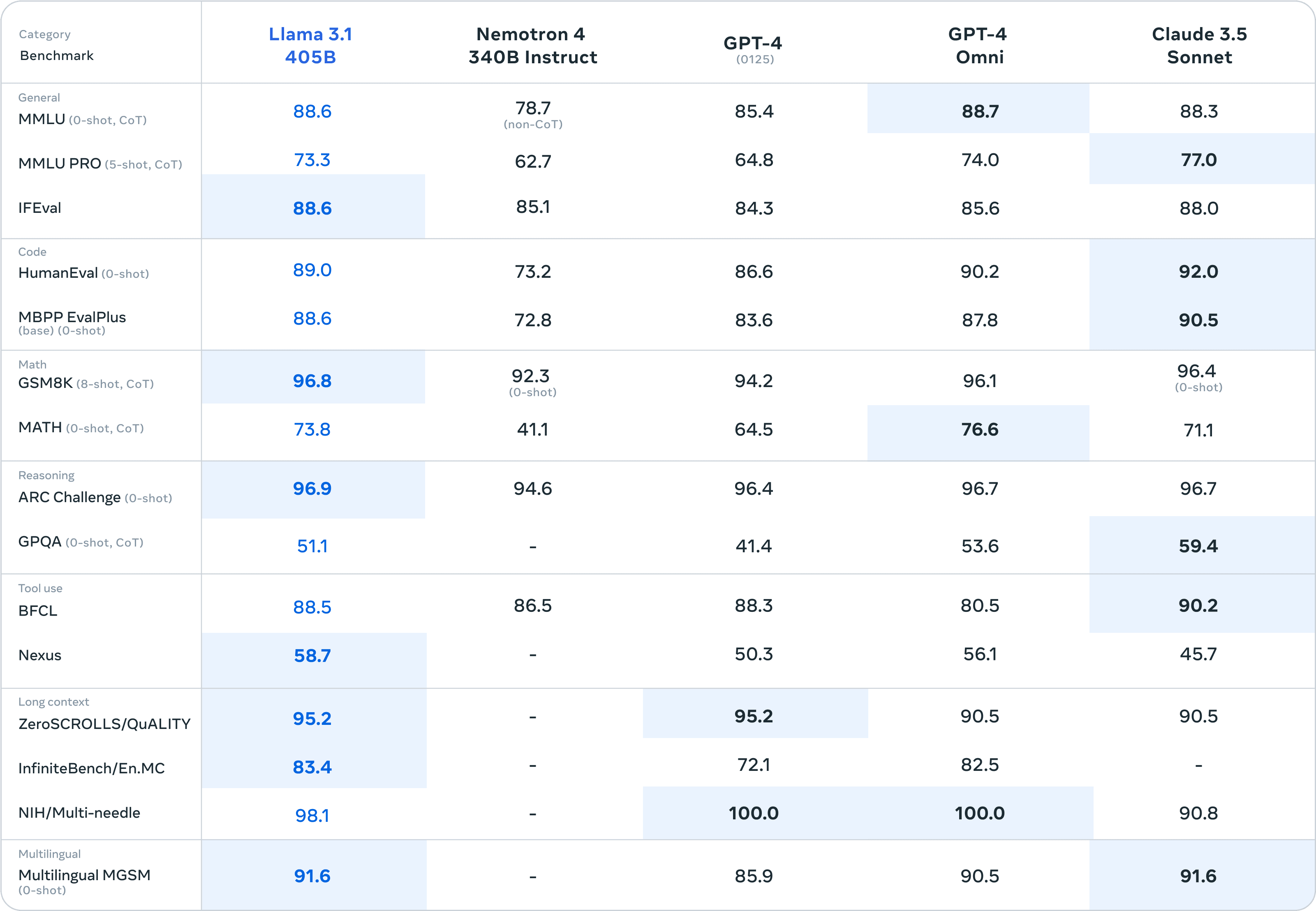

Meta has just released Llama 3.1 405B. It's open-source and in many benchmarks it beats GPT-4o and Claude 3.5 Sonnet:

Zuck's letter "Open Source AI Is the Path Forward".

Thanks for the comment @aogara <3. I agree this paper seems very good from an academic point of view.

My main question: how does this research help in preventing existential risks from AI?

Other questions:

- What are the practical implications of this paper?

- What insights does this model provide regarding text-based task automation using LLMs?

- Looking into one of the main computer vision tasks: self-driving cars. What insights does their model provide? (Tesla is probably ~3 years away from self-driving cars and this won't require any hardware update, so

Thanks a lot for giving more context. I really appreciate it.

These were not “AI Safety” grants

These grants come from Open Philanthropy's focus area "Potential Risks from Advanced AI". I think it's fair to say they are "AI Safety" grants.

Importantly, the awarded grants were to be disbursed over several years for an academic institution, so much of the work which was funded may not have started or been published. Critiquing old or unrelated papers doesn't accurately reflect the grant's impact.

Fair point. I agree old papers might not accurately reflect the gr...

Sorry, I should have attached this in my previous message.

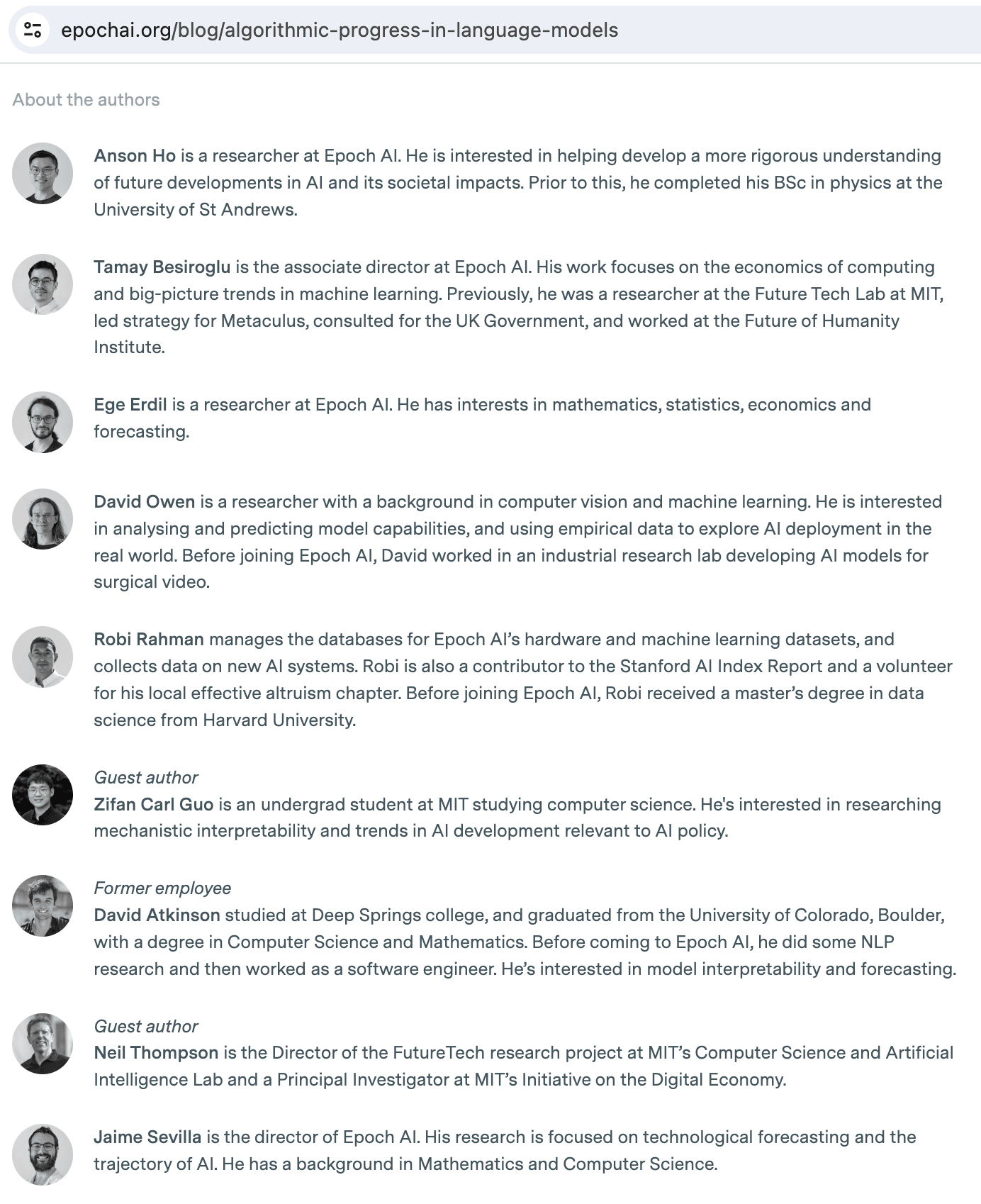

where does it say that he is a guest author?

Here.

This paper is from Epoch. Thompson is a "Guest author".

I think this paper and this article are interesting but I'd like to know why you think they are "pretty awesome from an x-risk perspective".

Epoch AI has received much less funding from Open Philanthropy ($9.1M), yet they are producing world-class work that is widely read, used, and shared.

Agree. OP's hits-based giving approach might justify the 2020 grant, but not the 2022 and 2023 grants.

Thanks for your thorough comment, Owen.

And do the amounts ($1M and $0.5M) seem reasonable to you?

As a point of reference, Epoch AI is hiring a "Project Lead, Mathematics Reasoning Benchmark". This person will receive ~$100k for a 6-month contract.

The difference in subjective well-being is not as high as we might intuitively think.

(anecdotally: my grandparents were born in poverty and they say they had happy childhoods)

The average resident of a low-income country rated their satisfaction as 4.3 using a subjective 1-10 scale, while the average was 6.7 among residents of G8 countries

Doing a naive calculation: 6.7 / 4.3 = 1.56 (+56%).

The difference in the cost of saving a life between a rich and a poor country is 10x-1000x.

It would probably be good to take this into account, but I don't think it would change the outcomes that much.
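
A rough sketch of the naive calculation above, in case it helps. The satisfaction scores and the 10x-1000x range come from the comment; the adjustment step (weighting each life saved by average life satisfaction) is my own simplifying assumption, not an established method.

```python
# Naive comparison of the two gaps mentioned above (illustrative only).
satisfaction_g8 = 6.7          # average life satisfaction, G8 countries (from the quote)
satisfaction_low_income = 4.3  # average life satisfaction, low-income countries (from the quote)

wellbeing_ratio = satisfaction_g8 / satisfaction_low_income

# Assumption: weight each life saved by average life satisfaction,
# which shrinks the 10x-1000x cost gap by the well-being ratio.
cost_gap_low, cost_gap_high = 10, 1000
adjusted_low = cost_gap_low / wellbeing_ratio
adjusted_high = cost_gap_high / wellbeing_ratio

print(f"well-being ratio: {wellbeing_ratio:.2f}")
print(f"adjusted cost gap: {adjusted_low:.1f}x to {adjusted_high:.0f}x")
```

Under this assumption the cost gap shrinks somewhat but stays within the same order of magnitude at both ends of the range.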

What is missing in terms of a GPU?

Something unknown.

I think given a big enough GPU, yes, it seems plausible to me. Our minds are memory stores that perform calculations.

Do you think it's plausible that a GPU rendering graphics is conscious? Or do you think that a GPU can only be conscious when it runs a model that mimics human behavior?

I think bacteria are unlikely to be conscious due to a lack of processing power.

Potential counterargument: microbial intelligence.

That's true for many CEOs (like Elon Musk) but Sam Altman did not over-hype any of the big OpenAI launches (ChatGPT, GPT-3.5, GPT-4, GPT-4o, DALL-E, etc.).

It's possible that he's doing it for the first time now, but I think it's unlikely.

But let's ignore Sam's claims. Why do you think LLM progress is slowing down?

LLM progress is slowing down

I'm hearing this claim everywhere. I'm curious to know why you think so, given that OpenAI hasn't released GPT-5.

Sam said multiple times that GPT-5 is going to be much better than GPT-4. It could be just hype but this would hurt his reputation as soon as GPT-5 is released.

In any case, we'll probably know soon.

Con: Not as exciting as doing something in AI or AI safety

There's a lot of software engineering work around AI. https://x.com/gdb/status/1729893902814192096

Thanks for the post!

What do you think about Open Philanthropy's grants in AI Alignment? (e.g. https://www.openphilanthropy.org/grants/funding-for-ai-alignment-projects-working-with-deep-learning-systems/). Do you think the EV is positive?

And what do you think about 80,000 Hours recommending that people join big AI labs?

It seems like they haven't accepted any non-profit since 2022, around the time Garry Tan became YC's CEO. Garry has been very vocal against EA (especially AI Safety) on Twitter.