Dzoldzaya


I couldn't track down comparative data on whose bednets seem to be the most responsible. Presumably it depends more on who distributes the most nets in areas close to major fisheries than on the percentage of nets used appropriately.

I also don't know whether the marginal bednet will increase this kind of fishing much (there might already be a glut of bednets).

But these are all important questions that I don't think GiveWell or AMF have ever taken seriously.

Agreed. Being able to identify effective interventions that support or protect democracy in certain contexts doesn't necessarily seem like a bad idea.

The challenge with the AfD is that they seem to be the victims of behaviour that could be considered antidemocratic: lawmakers are considering banning the party, and the state has put the party under surveillance. This would be unconstitutional in many countries. I think there could be legitimate arguments that "protecting democracy" could sometimes involve defending groups like the AfD, as well as defending democracy from them.

I'd prefer a politically neutral pro-democracy organisation to have the courage to defend a party like the AfD when their democratic freedoms are under threat, while also ensuring that they are stopped from damaging the democratic system. But because this could be very messy and carry significant PR risks, I think EA-aligned groups just shouldn't be taking that risk either way.

Thanks for this. I'm trying to get at least a couple of numbers in the right ballpark to help people calibrate. Epistemic status: uncertain, a couple of hours of research. Takeaway: probably billions of fish killed.

For the higher end of the estimate of total fish killed by mosquito net fishing, the appendix of the paper you link to gives an example of a single large-scale fishery in Madagascar where 68.7 million fish were killed in 2018-2019 by 75 families.

Unless I'm misreading, 80-90% of the total number of fish there were caught by mosquito net trawlers (only 27.7% by weight, but these fish are much smaller on average, so their share by count is higher).

This other paper, from Mozambique, provides another example: two fishers catch around 35 kg of (mostly tiny, < 20 mm, so 1-10 g?) fish a day, so I guess around 10,000 fish a day? That's over 2 million a year (so a similar ballpark to Madagascar).
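A rough Python sketch of the mass-to-count conversion above. The 1-10 g weight per fish is the guess in the previous paragraph; the number of fishing days per year (`FISHING_DAYS`) is a hypothetical parameter added here for illustration, not a figure from the paper.

```python
# Rough conversion from daily catch mass to a count of fish (Mozambique example).
# Assumption: fish under 20 mm weigh somewhere between 1 g and 10 g each.
DAILY_CATCH_KG = 35

# Hypothetical: days per year the fishers actually fish (not from the paper).
FISHING_DAYS = 200


def fish_count(catch_kg: float, grams_per_fish: float) -> float:
    """Number of fish in a catch of the given mass, at a fixed weight per fish."""
    return catch_kg * 1000 / grams_per_fish


low_per_day = fish_count(DAILY_CATCH_KG, 10)   # heavier fish -> fewer of them
high_per_day = fish_count(DAILY_CATCH_KG, 1)   # lighter fish -> more of them
guess_per_day = 10_000                         # rough mid-range guess from the text

print(f"Per day: {low_per_day:,.0f} to {high_per_day:,.0f} fish")
print(f"Per year at {guess_per_day:,}/day: {guess_per_day * FISHING_DAYS:,} fish")
```

At 10,000 fish a day, anything above roughly 200 fishing days a year gives the "over 2 million a year" figure.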

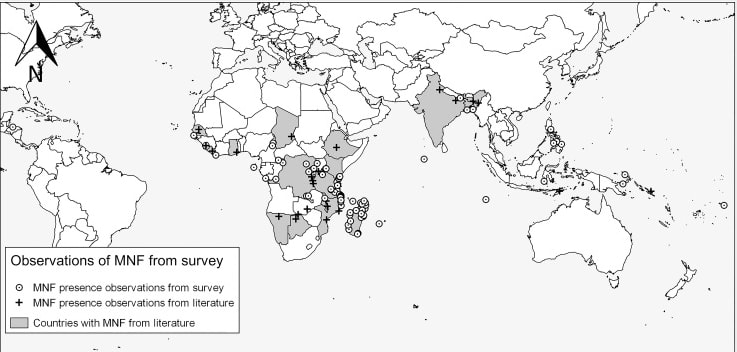

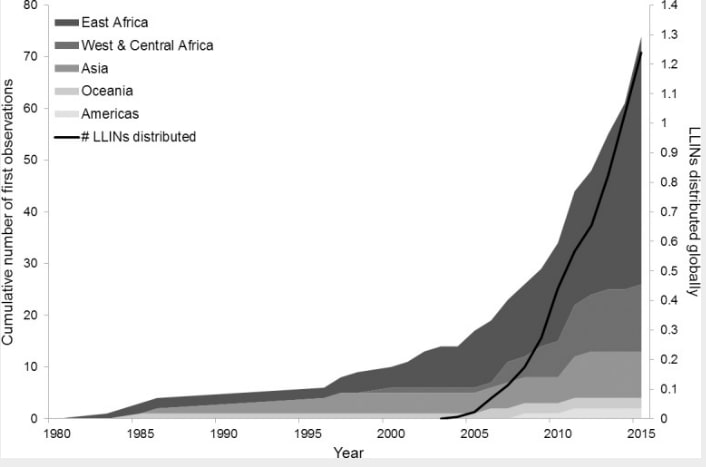

These are the locations at which mosquito net fishing had been observed in this study (data mostly from 2015), with a rapidly rising trajectory.

I can't find better data on the scale of fishing at these locations, so I have no idea whether there are closer to 50 or 10,000 locations where mosquito nets are used for fishing on a similar scale to those in these articles. But 25 million fish × 50 locations would be 1.25 billion fish.
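The scaling step is simple multiplication, sketched here in Python. The 25 million fish per location is the working figure from the paragraph above, and 50 and 10,000 are the bounds of the uncertainty about how many comparable locations exist, not observed data. As a cross-check, it also computes the 80-90% mosquito-net share of the 68.7 million fish from the Madagascar example (2018-2019).

```python
# Back-of-envelope scaling of per-location catches to a global total.
FISH_PER_LOCATION = 25_000_000  # working per-location figure from the text

# Madagascar cross-check: 80-90% of 68.7 million fish caught with mosquito nets.
MADAGASCAR_TOTAL = 68_700_000
madagascar_low = MADAGASCAR_TOTAL * 80 // 100   # integer maths avoids float noise
madagascar_high = MADAGASCAR_TOTAL * 90 // 100

# Lower and upper guesses for the number of comparable fishing locations.
totals = {n: FISH_PER_LOCATION * n for n in (50, 10_000)}

print(f"Madagascar, mosquito-net share: {madagascar_low:,} to {madagascar_high:,} fish")
for locations, total in totals.items():
    print(f"{locations:>6,} locations -> {total:,} fish per year")
```

The 25 million per-location figure sits below the roughly 55-62 million mosquito-net catch of the single Madagascar fishery, which is why 1.25 billion reads as a low-end number.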

I think it's safe to assume that at least 1 billion fish, possibly orders of magnitude higher, are killed yearly through mosquito net fishing, most of whom wouldn't be killed if it weren't for the distribution of mosquito nets (due to the small mesh sizes).

Of course, to make a reasonable welfare calculation, you shouldn't just look at the number of animals killed and how painful their deaths must have been. You also have to consider how long these fish would have lived for counterfactually, the quality of their lives and suffering involved in their counterfactual deaths, as well as broader ecosystem effects etc.

But @Dylan Matthews's claim that "There is little research on what fishing with these nets actually does to fish or people — but also little reason to think the magnitudes of these effects are remotely near the number of lives saved by nets" strikes me as indefensible.

Causing billions of fish to experience a painful death is not just a rounding error, and it may well be worth reevaluating whether bednets have had a positive net impact or not.

I agree with your general case, and I'm interested in the role that genetics can play in improving educational and socio-economic outcomes across the world. In the case of a world where biological intelligence remains relevant (not my default scenario, but plausible), this will become an increasingly interesting question.

However, I'm unconvinced that an EA should want to invest in any of the suggested donation interventions at the minute - they seem to be examples where existing research and market incentives would probably be sufficient. I'm not sure that more charitable support would have a strong counterfactual impact at the margins. (Note: I know very little about funding for genetics research - it seems expensive and already quite well-funded, but please correct me if I'm wrong here).

In terms of whether we should promote or talk about it more, I think EA has limited "controversy points" that should be used sparingly for high-impact cause areas or interventions. I don't feel that improving NIQ through genetic interventions scores well on the "EV vs. controversy" trade-off. There are other genetic enhancement interventions (e.g. reducing extreme suffering in humans or farmed animals) that seem to give you more EV for less controversy.

Also, if we do make this case, I think that mentioning Lynn/ Vanhanen is probably unwise, and that you could make the case equally well without the more controversial figures/ references.

Finally, I'd like to see a plausible pathway or theory of change for a more explicitly EA-framed case for genetic enhancement. For example, we expect this technology to develop anyway, but people with an EA framing could:

1. Promote the use of embryo screening to avert strongly negative cognitive outcomes

2. If this technology is proven to be cost-effective in rich countries, remove barriers to rolling it out in countries where it could have a greater counterfactual impact

At risk of compounding the hypocrisy here, criticizing a comment for being abrasive and arrogant while also saying: "Your ideas are neither insightful nor thoughtful, just Google/ ChatGpt it" might be showing a lack of self-awareness...

But agreed that this post is probably not the best place for an argument on the feasibility of a pause, especially as the post mentions that David M will make the case for how a pause would work as part of the debate. If your concerns aren't addressed there, Gerald, that post will probably be a better place to discuss.

My argument questions the ideas of lives saved, DALYs and QALYs as metrics - just like using lives saved as a metric, QALYs generally implicitly assume that death is worse than a very bad life, no matter the levels of mental suffering, pain, and physical debilitation.

I'm probably criticising GiveWell's methods as much as the post: their methodology assumes that the value of saving lives/averting deaths is positive.

I generally agree more with HLI's 'WELLBY' approach, as long as negative WELLBYs are taken seriously.

As I said in my final paragraph, I do see global health interventions as probably being net positive, despite potentially saving more net negative lives, so my argument definitely wasn't to "defund GiveWell". It was more that "saving lives" is a bad metric and a bad thing to feel good about.

My cherry picking of negative phenomena was in response to the cherry picking of the original post. I think boring/ useless school (I didn't quote anything but... most African rural schools are boring and useless...), unpleasant labour, hunger/ stunting and poor mental health are very relevant variables, as they define a lot of the waking hours of the poorest people in the world.

FGM and child marriage are probably less representative of general welfare - I was responding to the "first kiss" idea in the post.

I chose Burkina Faso at random. For central African countries I might have stressed sexual violence, which seems to be lower in Burkina Faso.

Thanks for responding.

I accept your point about life satisfaction and happiness measures not being equivalent. But if GiveWell recipients think that their life is significantly closer to the worst possible life than the best possible life, this still makes my point pretty well. It isn't obvious how to judge the net welfare of someone who is, say, 3/10 for life satisfaction and 'rather happy'. I haven't seen good studies on GiveWell recipients' happiness or moment-to-moment well-being (using ESM etc.), or other ways of measuring what we care about, but I would appreciate better info on that.

My (implicit) estimates for child marriage, stunting and mental illness should be adjusted for the fact that average GiveWell charity recipients in Burkina Faso have worse lives than the average citizen, but I acknowledge my language was imprecise. Stunting might plausibly cross the 50% threshold in that category, but might be under. The median marriage age for Burkinabe girls is 17, and is probably lower in the GiveWell pop. Some orgs define child marriage as <18.

Mental illness thresholds seem to vary a lot, but this https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0164790 article is a good example of how bad mental health is for 'ultra-poor' kids in Burkina Faso. My thinking would be that 20-30% of the kids in this study have lives clearly on the net-negative side, which I think would be unlikely to be outweighed by the more neutral/ positive lives. Don't know exactly how this would match with a typical GiveWell population.

"To answer your comment "you have to work out whether you think this life you've saved is more likely or not to be net positive. " - We have worked it out, and the answer YES, a resounding yes"

I consider this just obviously false. I just don't believe that you/ global health people have disproven negative-leaning utilitarian or suffering-focused ethical stances. You might have come to a tentative conclusion based on a specific ethical framework, limited evidence and personal intuitions (as I have).

I'd say that there's probably a fairly fundamental uncertainty about whether any lives are net positive. There's definitely not a consensus within the EA community or elsewhere. It depends on things like the suffering/happiness asymmetry and the extent to which you think pain and pleasure are logarithmic (https://qri.org/blog/log-scales).

Most of us will acknowledge that at least some lives are net negative, some extremely so, and that these lives are far more likely to be saved by GiveWell charities. I suspect any attempt to model exactly where to draw the line will be very sensitive to subtle differences in assumptions, but my current model leans towards the average GiveWell life being net negative in the medium term, for the reasons I've mentioned.

In terms of language, I think "great care and dignity" are suitable for most contexts, but I think that it's important that the EA forum is a safe space for blunt language on this topic.

I'm increasingly convinced that EA needs to distance ourselves from this framing of "saving lives=good". And we need to avoid the satisfying illusion that giving to global health charities is saving a life "just like our own". (Particularly disagreeing with @NickLaing's comments here). If you've decided to save the lives of the ultra-poor, you should be able to bite the bullet and admit you're doing that.

We all like the idea of saving a kid who's "playing with their friends in the schoolyard, maybe spending time with their grandma, or maybe just kicking a football, alone", "celebrating her birthday" and having her "first kiss".

But you don't need to be a negative utilitarian to recognise that the kid whose life you've saved probably isn't having a great life, mostly for the same reasons you donated to that charity: it's shitty being a poor person in the poorest countries in the world.

Let's say you saved a life in Burkina Faso:

- If you saved a girl, she'll probably be a victim of FGM, and get married as a child to an older man - that "first kiss" you mention might be as a 15-year-old girl with her 30-year-old husband

- If they don't go to school, they'll do hard and dangerous work in agriculture, fishing or worse as children.

- If they do go to school, they'll probably spend their days in extreme boredom, getting left behind, and end their school years functionally illiterate and innumerate

- They're likely to spend a lot of their childhood hungry - they will get ill often, with malaria, diarrhea, or other communicable diseases

- They will be likely to grow up stunted or wasted, and with diminished cognitive abilities

- All of the above tend not to be great for mental health, so they're fairly likely to become depressed, anxious, or suffer from more serious mental issues

- When you ask them how happy they are on a life satisfaction/ happiness scale, they'll give you around 4/10

Based on this reality, and your estimates about how the world is likely to improve in the coming few decades, you have to work out whether you think this life you've saved is more likely or not to be net positive.

I'm not saying that we shouldn't give more money to global health charities; they improve lives and stop people getting horrible diseases. All else equal, fewer communicable diseases are better. But I strongly disagree with the framing of this piece.

No, there is no way to be confident.

I think humanity is intellectually on a trajectory towards greater concern for non-human animals. But this is not a reliable argument. Trajectories can reverse or stall, and most of the world is likely to remain, at best, indifferent to and complicit in the increasing suffering of farmed animals for decades to come. We could easily "lock in" our (fairly horrific) modern norms.

But I think we should probably still lean towards preventing human extinction.

The main reason for this is the pursuit of convergent goals.

It's just way harder to integrate pro-extinction actions into the other things that we care about and are trying to do as a movement.

We care about making people and animals healthier and happier, avoiding mass suffering events / pandemics / global conflict, improving global institutions, and pursuing moral progress. There are many actions that can improve these metrics - reducing pandemic risk, making AI safer, supporting global development, preventing great power conflict - which also tend to reduce extinction risk. But there are very few things we can do that improve these metrics while increasing x-risk.

Even if extinction itself were positive expected value, trying to make humans go extinct is a bit all-or-nothing, and you probably won't ever be presented with a choice where x-risk is the only variable at play. Most of the things you can do that increase human x-risk at the margins also probably increase the chance of other bad things happening. This means that there are very few actions you could take with a view to increasing x-risk that are positive expected value.

I know this is hardly a rousing argument to inspire you in your career in biorisk, but I think it should at least help you guard against taking a stronger pro-extinction view.