All of Eevee🔹's Comments + Replies

Anecdotally, it seems like many employers have become more selective about qualifications, particularly in tech where the market got really competitive in 2024 - junior engineers were suddenly competing with laid-off senior engineers and FAANG bros.

Also, per their FAQ, Capital One has a policy not to select candidates who don't meet the basic qualifications for a role. One Reddit thread says this is also true for government contractors. Obviously this may vary among employers - is there any empirical evidence on how often candidates get hired without meeti...

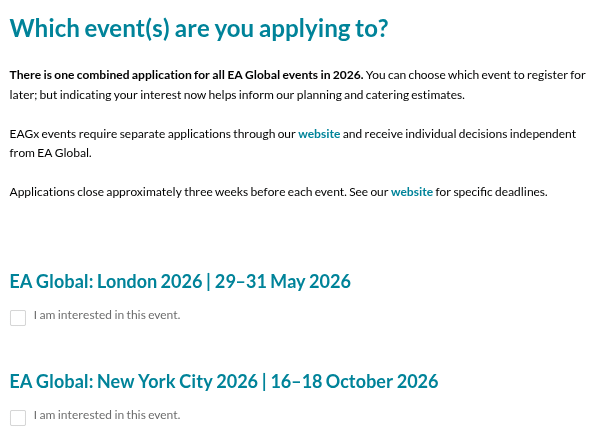

Have applications for EAG San Francisco already closed? The website states that the application deadline is February 1, but the application form only includes checkboxes for London and NYC.

This is great!

(Not sure if you already know this, but posting it in case it's helpful to anyone) Not-so-pro tip: your distributor only pays your sound recording royalties; streaming services send the mechanical and/or performance royalties for the underlying musical composition to a CMO (such as PRS for Music in the UK) which pays you separately.

On Spotify, composition royalties are about 1/5 of the share of revenue that Spotify pays to the owners of sound recordings, so realistically, it's probably just a few extra cents from streaming alone. But I feel l...

How did Sam "win"? The press coverage of him since the FTX collapse has been overwhelmingly negative. The EA movement has been caught up in that as collateral damage, but some works have criticized Sam for "twisting" EA, like the podcast Spellcaster.

...why you just assume it will be a positive representation of EAs?

No, I'm not making any assumptions about the movies being released.

You could create a charity fundraiser on GoFundMe or Every.org and match the donations yourself.

If you want to automate the donation matching, I'd suggest batching the donations and only charging the fundraiser creator for the total amount every 14 days or when the fundraiser ends, rather than creating a matching transaction for every donation. That would cut down on payment processing fees.
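To make the batching idea above concrete, here is a minimal sketch. It assumes donations arrive as simple (amount, date) records; the names `Donation` and `matching_charges` are hypothetical, not part of any real fundraising platform's API. It groups donations into 14-day windows and produces one matching charge per window, with the final window cut short when the fundraiser ends.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from decimal import Decimal

BATCH_DAYS = 14  # settlement interval suggested in the comment above


@dataclass
class Donation:
    """Hypothetical record of one incoming donation."""
    amount: Decimal
    received: date


def matching_charges(donations, start, end, batch_days=BATCH_DAYS):
    """Return (charge_date, total) pairs, one per batch window.

    The fundraiser creator is charged once per window for the sum of
    all donations received in it, rather than once per donation.
    """
    charges = []
    window_start = start
    while window_start <= end:
        # The last window is shortened so it closes when the fundraiser ends.
        window_end = min(window_start + timedelta(days=batch_days - 1), end)
        total = sum(
            (d.amount for d in donations
             if window_start <= d.received <= window_end),
            Decimal("0"),
        )
        if total > 0:  # skip windows with nothing to match
            charges.append((window_end, total))
        window_start = window_end + timedelta(days=1)
    return charges


donations = [
    Donation(Decimal("10"), date(2025, 1, 1)),
    Donation(Decimal("15"), date(2025, 1, 5)),
    Donation(Decimal("5"), date(2025, 1, 20)),
]
charges = matching_charges(donations, date(2025, 1, 1), date(2025, 1, 31))
```

In this example three donations produce two matching charges instead of three. Whether that actually lowers fees depends on the processor charging a fixed amount per transaction, which is an assumption here.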

You could also experiment with authorization holds on the user's credit card - they basically hold a certain amount of money hostage on the credit card to make sure tha...

Not necessarily. It's more that funding gaps of c3's can be more easily filled by big foundations (which are also c3's), whereas donations from c3's to c4's are restricted. That makes it more valuable, ceteris paribus, for individuals to fill c4 funding gaps.

Also, the "50% bonus" only applies if you itemize deductions; many people use the standard deduction, including some people who earn more than six figures.

Thank you for this candid and informative post. I agree that we need to allocate more resources to advocacy (but hopefully not at the expense of research!).

I also wanted to signal boost your advice to 501(c)(3)'s in your previous post on orphaned policies, in case it's relevant to anyone reading this thread:

...Admittedly, most research work is funded by 501(c)(3) donations that cannot pay for more than a small amount of direct political advocacy. However, there are ways to word the conclusion of a research paper that provide clear guidance without crossing th

If you would like to receive email updates about any future research endeavours that continue the mission of the Global Priorities Institute, you can also sign up here.

I signed up for the mailing list! Is there any other way to support research similar to GPI's work—for instance, would it make sense to donate to Forethought Foundation?

What if there were an effective giving org that partnered with movie studios to pledge a portion of their revenue to thematically relevant high-impact charities? e.g. "5% of gross box office revenue from Chicken Run Super 3D! will be donated to Legal Impact for Chickens"

Seeing the unexpected success of KPop Demon Hunters (released in June 2025) made me think of this idea again. Imagine how cool it would be if next year's summer blockbusters used their reach for a good cause! This initiative would also diversify the EA funding base away from finance and tech.

Disclosure: Possible conflict of interest here. I donated close to $900 to SPI in November 2024 based on information they shared with me privately about their work and confirmation of their activities from a mutual contact. This was a large portion of my giving last year, which may bias me towards wanting to believe they will have impact.

I appreciate that you folks did a review of SPI, but why publish this linkpost without a description?

Also, is there really no information you can share about SPI's work so far? This doesn't match my impression of the work they were up to. I'm happy to follow up with them about their progress and share what I find out here, provided that they don't object.

This is a pretty grounded take. The only thing that bugs me is this passage, in the introduction:

...The national media spotlight focused on sweatshops in 1996 after Charles Kernaghan, of the National Labor Committee, accused Kathy Lee Gifford of exploiting children in Honduran sweatshops. He flew a 15 year old worker, Wendy Diaz, to the United States to meet Kathy Lee. Kathy Lee exploded into tears and apologized on the air, promising to pay higher wages.

Should Kathy Lee have cried? Her Honduran workers earned 31 cents per hour. At 10 hours per day, which is

Thanks for sharing! This is probably an abuse of notation, but I clicked the check mark reaction as a note-to-self that I completed the survey, even though it typically means "agree".

Should we send this to our non-EA friends too?

Does anyone know what's going on with Apart Research's funding situation? I participated in one of their AI safety hackathons and it propelled me into the world of AIS research, so I'm sad to hear that they might be forced to shut down or downsize. They're trying to raise nearly a million dollars in the next month.

You can donate to AMF via PayPal Giving Fund on this page and to Giving What We Can here. Currently, 100% of the donation amount goes to charity, as PayPal covers all payment processing fees on donations through this portal. I haven't tried making a small donation recently, but it looks like there is no minimum amount you can donate, and users regularly make $1 donations through the Give at Checkout feature. (Caveat: These links may only work in certain countries.)

I've included a screenshot below of the user interface for when you donate, though I didn't c...

I've been thinking a lot about how mass layoffs in tech affect the EA community. I got laid off early last year, and after job searching for 7 months and pivoting to trying to start a tech startup, I'm on a career break trying to recover from burnout and depression.

Many EAs are tech professionals, and I imagine that a lot of us have been impacted by layoffs and/or the decreasing number of job openings that are actually attainable for our skill level. The EA movement depends on a broad base of high earners to sustain high-impact orgs through relatively smal...

Great work!

Please note, if you copied substantial portions of the "Intro to effective altruism" article, you should include a link to the CC-BY 4.0 license in your PDF, as it is required by the license terms. This helps inform users that the content you used is free to use. Thanks for helping build the digital commons!

Larks, I’ve noticed that your comments on this post focus on criticizing the specific rules in the CC donor policy document that I shared as an example for other EA organizations to consider. You rightly point out that some of these rules might be impractical for EA orgs to follow. However, I feel frustrated because I think your responses have missed the point of my original post, which was to highlight the value of having a written donor screening policy, and to offer the CC document as a resource that other orgs can legally copy and adapt to their own ne...

I just posted the CC policy as an example of a donor screening policy, and by posting it I don't necessarily endorse its exact contents. As you helpfully pointed out, the terms of this policy could be overbroad and burdensome, and would have to be adapted to the different context in which EA orgs operate.

"a $10k donation from a Palantir employee would simply be totally prohibited"

First, many EAs donate a lot more money ($1k-10k/year) than the average non-EA donor, so $10k would likely be on the high end for a gift to CC but typical for an EA org. So I think...

Our impact is not just what we create ourselves, but how we influence others. In part due to our progress, there’s vibrant competition in the space, from commercial products similar to ChatGPT to open source LLMs, and vigorous innovation on safety.

Color me cynical. OpenAI cites its own, Anthropic's, and DeepMind's approaches to AI safety. However, Meta has also expressed an ambition to build AGI but doesn't seem to prioritize safety in the same way.

Thanks for all your hard work on the audio narrations and making EA Forum content accessible!

Question: Do you intend to license the audio under a Creative Commons license? Because EA Forum text published since 2022 is licensed under CC-BY 4.0, all that's legally required is the attribution info provided by the source material and a link to the license; derived works don't have to be licensed under CC-BY themselves. However, to the extent that AI-generated narrations can be protected by copyright at all (e.g. in the UK and Hong Kong), it seems appropriate to use CC-BY, or maybe CC-BY-SA to require that modifications be released under the same terms.

EA Forum posts since 2022 are licensed under CC-BY, so they are free to use. If you want to translate any portions of the 80k page that aren't part of this forum post, you should ask them.

Thanks. I have translated two sections to Chinese here: https://dku-plant-futures.github.io/zh/post/factory-farming-as-a-pressing-global-problem/

OP/GV/Dustin do not like the rationalism brand because it attracts right-coded folks

Open Phil does not want to fund anything that is even slightly right of center in any policy work

I think this is specifically about the rat community attracting people with racist views like "human biodiversity" (which you alluded to re: "our speaker choices at Manifest") and not about being right-wing or right-leaning generally. As a counterexample, OP made three grants to the Niskanen Center to fund their immigration policy work. I would characterize Niskanen as centrist ...

Epistemic status: preliminary take, likely not considering many factors.

I'm starting to think that economic development and animal welfare go hand in hand. Since the end of the COVID pandemic, the plant-based meat industry has declined in large part because consumers' disposable incomes declined (at least in developed countries). It's good that GFI and others are trying to achieve price parity with conventional meat. However, finding ways to increase disposable incomes (or equivalently, reduce the cost of living) will likely accelerate the adoption of meat substitutes, even if price parity isn't reached.

the plant-based meat industry has declined in large part because consumers' disposable incomes declined (at least in developed countries)

Do you have a source for this? Median real disposable income is growing in the US, as is meat consumption (https://www.vox.com/future-perfect/386374/grocery-store-meat-purchasing). People are buying more and more meat as they get richer, even in developed countries.

In addition, I used to lead the EA Public Interest Tech Slack community, which was subsequently merged into the EA Software Engineers community (the Discord for which still exists btw). All of these communities eventually got merged into the #role-software-engineers channel of the EA Anywhere Slack.

I think there was too much fragmentation among slightly different EA affinity groups aimed at tech professionals - there was also EA Tech Network for folks working at tech companies, which I believe was merged into High Impact Professionals.

I'm not sure why the ...

Lingering thoughts on the talk "How to Handle Worldview Uncertainty" by Hayley Clatterbuck (Rethink Priorities):

The talk proposed several ways that altruists with conflicting values can bargain in mutually beneficial ways, like loans, wagers, and trades, and suggested that the EA community should try to implement these more in practice and design institutions and mechanisms that incentivize them.

I think the EA Donation Election is an example of a community-wide mechanism for brokering trades between multiple anonymous donors. To illustrate this, consider a...

Not sure who to alert to this, but: when filling out the EA Organization Survey, I noticed that one of the fields asks for a date in DD/MM/YYYY format. As an American this tripped me up and I accidentally tried to enter a date in MM/DD/YYYY format because I am more used to seeing it.

I suggest using the ISO 8601 (YYYY-MM-DD) format on forms that are used internationally to prevent confusion, or spelling out the month (e.g. "1 December 2023" or "December 1, 2023").
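As a small illustration of the ambiguity, the sketch below uses Python's standard `datetime` module to parse the same string under both conventions and emit ISO 8601. The helper name `to_iso` is made up for this example.

```python
from datetime import date, datetime


def to_iso(text: str, fmt: str) -> str:
    """Parse `text` using the given strptime format and return YYYY-MM-DD."""
    return datetime.strptime(text, fmt).date().isoformat()


ambiguous = "01/12/2023"

# The same input yields two different dates depending on the convention.
as_dmy = to_iso(ambiguous, "%d/%m/%Y")  # read as DD/MM/YYYY
as_mdy = to_iso(ambiguous, "%m/%d/%Y")  # read as MM/DD/YYYY

# An ISO 8601 string has only one reading and parses back unambiguously.
round_trip = date.fromisoformat(as_dmy)
```

Here `as_dmy` is `2023-12-01` and `as_mdy` is `2023-01-12`, which is exactly the kind of silent mix-up a form can produce when it accepts either reading.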

Wait, I just found this in YouTube's algorithm. One of the funnier comments about Peter: