All of Lizka's Comments + Replies

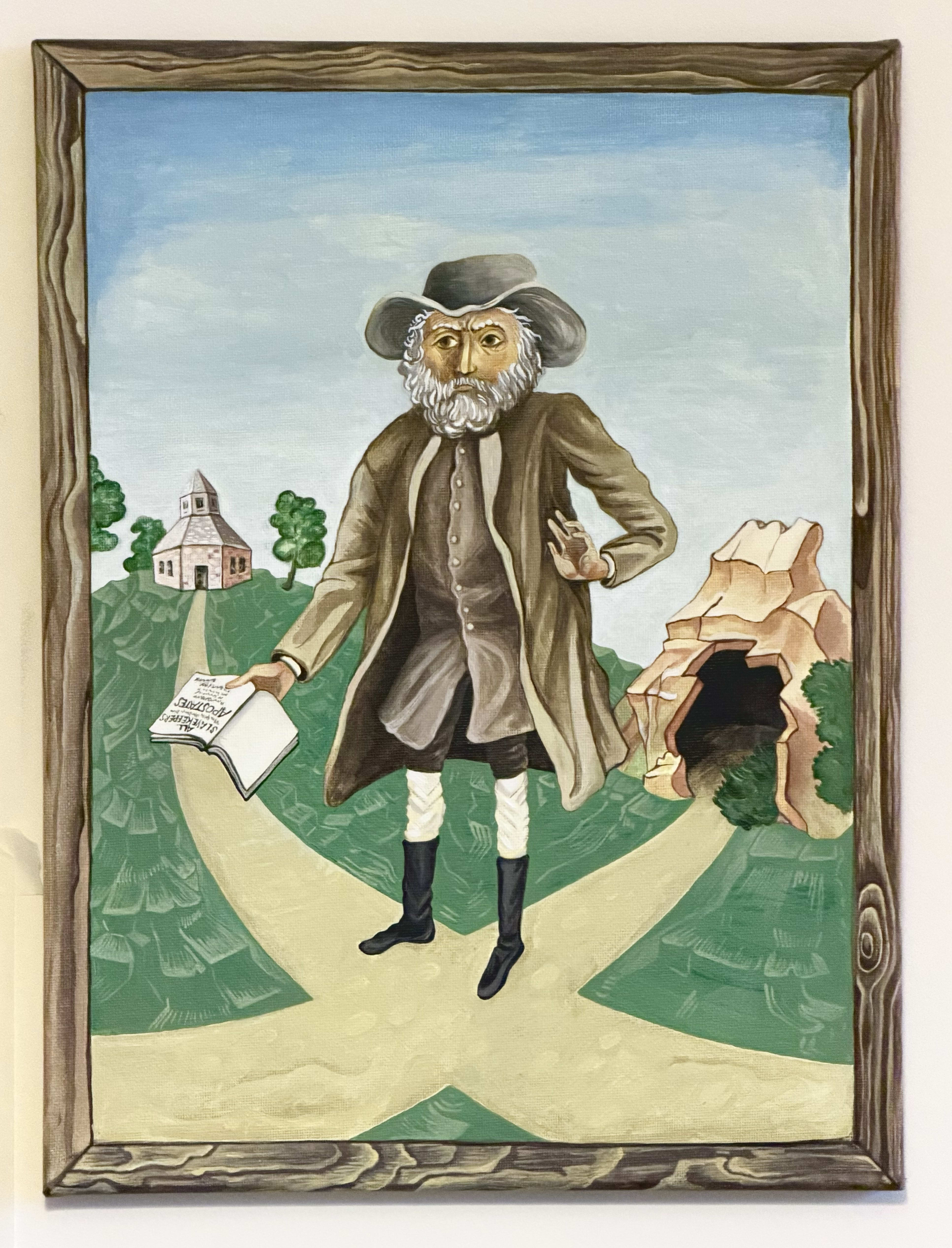

I didn't end up writing a reflection in the comments as I'd meant to when I posted this, but I did end up making two small paintings inspired by Benjamin Lay & his work. I've now shared them here.

I think of today (February 8) as "Benjamin Lay Day", for what it's worth. (Funny timing :) .)

Another one I'd personally add might be November 4 for Joseph Rotblat. And just in case you haven't seen / just for reference, there are some related resources on the Forum, e.g. here https://forum.effectivealtruism.org/topics/events-on-the-ea-forum, and here https://forum.effectivealtruism.org/posts/QFfWmPPEKXrh6gZa3/the-ea-holiday-calendar .

In fact I think the Forum team may also still maintain a list/calendar of possible days to celebrate somewhere. ...

Benjamin Lay — "Quaker Comet", early (radical) abolitionist, general "moral weirdo" — died on this day 267 years ago.

I shared a post about him a little while back, and still think of February 8 as "Benjamin Lay Day".

...

Around the same time I also made two paintings inspired by his life/work, which I figured I'd share now. One is an icon-style-inspired image based on a portrait of him[1]:

The second is based on a print depicting the floor plan of an infamous slave ship (Brooks). The print was used by abolitionists (mainly(?) the Society for Effec...

When thinking about the impacts of AI, I’ve found it useful to distinguish between different reasons for why automation in some area might be slow. In brief:

- raw performance issues

- trust bottlenecks

- intrinsic premiums for “the human factor”

- adoption lag

- motivated/active protectionism towards humans

I’m posting this mainly because I’ve wanted to link to this a few times now when discussing questions like "how should we update on the shape of AI diffusion based on...?". Not sure how helpful it will be on its own!

In a bit more detail:

(1) Raw performance issue...

Yeah, I guess I don't want to say that it'd be better if the team had people who are (already) strongly attached to various specific perspectives (like the "AI as a normal technology" worldview --- maybe especially that one?[1]). And I agree that having shared foundations is useful / constantly relitigating foundational issues would be frustrating. I also really do think the points I listed under "who I think would be a good fit" — willingness to try on and ditch conceptual models, high openness without losing track of taste, & flexibility — matter, an...

Quick sketch of what I mean (and again I think others at Forethought may disagree with me):

- I think most of the work that gets done at Forethought builds primarily on top of conceptual models that are at least in significant part ~deferring to a fairly narrow cluster of AI worldviews/paradigms (maybe roughly in the direction of what Joe Carlsmith/Buck/Ryan have written about)

- (To be clear, I think this probably doesn't cover everyone, and even when it does, there's also work that does this more/less, and some explicit poking at these worldviews, etc.)

- So

Ah, @Gregory Lewis🔸 says some of the above better. Quoting his comment:

...

- [...]

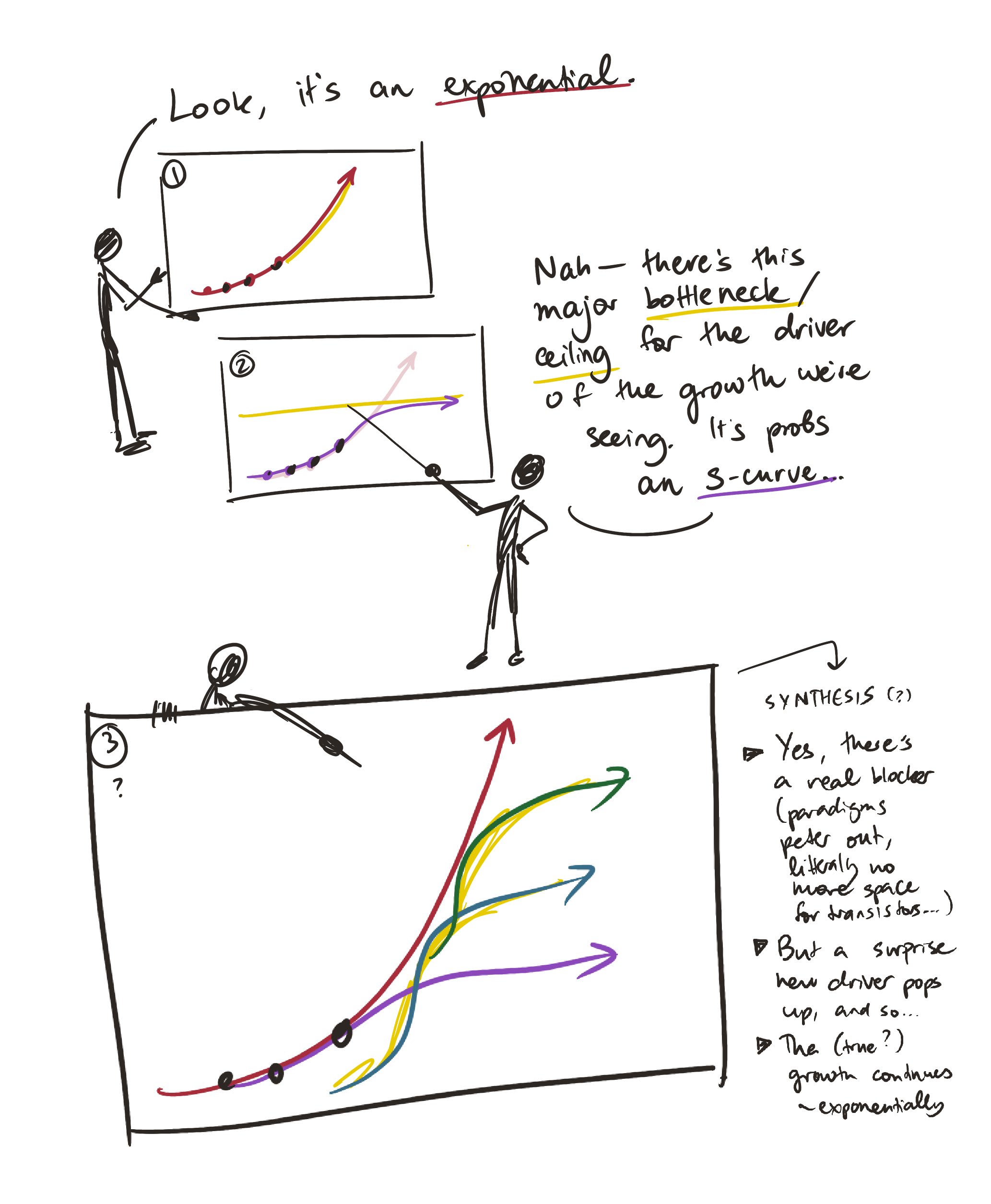

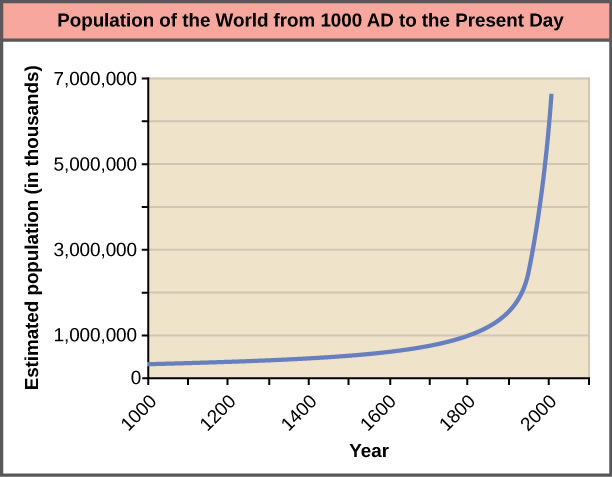

- So ~everything is ultimately an S-curve. Yet although 'this trend will start capping out somewhere' is a very safe bet, 'calling the inflection point' before you've passed it is known to be extremely hard. Sigmoid curves in their early days are essentially indistinguishable from exponential ones, and the extra parameter which ~guarantees they can better (over?)fit the points on the graph than a simple exponential give very unstable estimates of the putative ceiling the
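A rough numerical sketch of the point about early sigmoids, using made-up numbers that do not come from the quoted comment: two logistic curves whose ceilings differ by a factor of 10 (the hypothetical `LOW` and `HIGH` parameter sets below) can be nearly indistinguishable over the early part of the curve, provided the midpoint shifts to compensate. This is only an illustration under those assumptions, not a claim about any particular real-world trend.

```python
import math


def logistic(t, ceiling, rate, midpoint):
    """Value of a logistic (S-shaped) curve at time t."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


# Illustrative parameters (assumptions, chosen only for this sketch).
RATE = 0.5
LOW = (100.0, RATE, 20.0)
# Ten times the ceiling, with the midpoint pushed later so that the
# early, exponential-looking phase lines up with the LOW curve.
HIGH = (1000.0, RATE, 20.0 + math.log(10.0) / RATE)


def max_relative_gap(times):
    """Largest relative difference between the two curves over `times`."""
    return max(abs(logistic(t, *HIGH) / logistic(t, *LOW) - 1.0) for t in times)


early_gap = max_relative_gap(range(0, 11))
late_ratio = logistic(40, *HIGH) / logistic(40, *LOW)

print(f"max relative gap for t in 0..10: {early_gap:.4f}")
print(f"ratio of the two curves at t = 40: {late_ratio:.2f}")
```

In this toy setup, the early observations pin down the growth rate but say very little about the ceiling, which is one way to see why fitted ceilings can be unstable.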

I tried to clarify things a bit in this reply to titotal: https://forum.effectivealtruism.org/posts/iJSYZJJrLMigJsBeK/lizka-s-shortform?commentId=uewYatQz4dxJPXPiv

In particular, I'm not trying to make a strong claim about exponentials specifically, or that things will line up perfectly, etc.

(Fwiw, though, it does seem possible that if we zoom out, recent/near-term population growth slow-downs might be functionally a ~blip if humanity or something like it leaves the Earth. Although at some point you'd still hit physical limits.)

Oh, apologies: I'm not actually trying to claim that things will be <<exactly.. exponential>>. We should expect some amount of ~variation in progress/growth (these are rough models, we shouldn't be too confident about how things will go, etc.), what's actually going on is (probably a lot) more complicated than a simple/neat progression of new s-curves, etc.

The thing I'm trying to say is more like:

- When we've observed some datapoints about a thing we care about, and they seem to fit some overall curve (e.g. exponential growth) reasonably we

Replying quickly, speaking only for myself[1]:

- I agree that the boundary between Community & non-Community posts is (and has always been) fuzzy

- You can see some guidance I wrote in early 2023: Community vs. other[2] (a "test-yourself" quiz is also linked in the doc)

- Note: I am no longer on the Online Team/an active moderator and don't actually know what the official guidance is today.[3]

- I also agree that this fuzziness is not a trivial issue, & it continues to bug me

- when I go to the Frontpage, I relatively frequently see posts that I think should

I sometimes see people say stuff like:

Those forecasts were misguided. If they ended up with good answers, that's accidental; the trends they extrapolated from have hit limits... (Skeptics get Bayes points.)

But IMO it's not a fluke that the "that curve is going up, who knows why" POV has done well.

A sketch of what I think happens:

There’s a general dynamic here that goes something like:

- Some people point to a curve going up (and maybe note some underlying driver)

- Others point out that the drivers have inherent constraints (this is an s-curve, not an ex

I'm skeptical of an "exponentials generally continue" prior which is supposed to apply super-generally. For example, here's a graph of world population since 1000 AD; it's an exponential, but actually there are good mechanistic reasons to think it won't continue along this trajectory. Do you think it's very likely to?

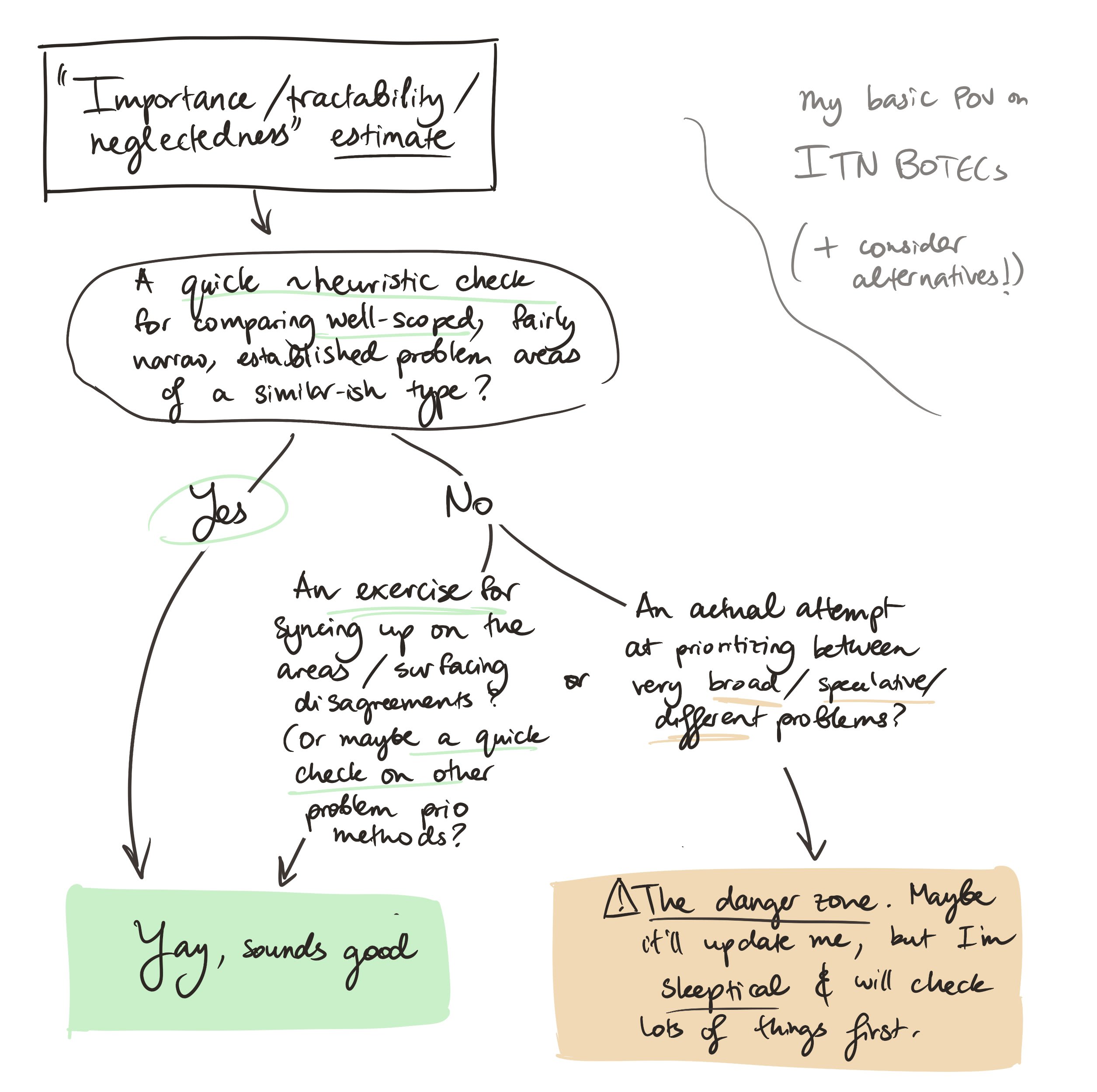

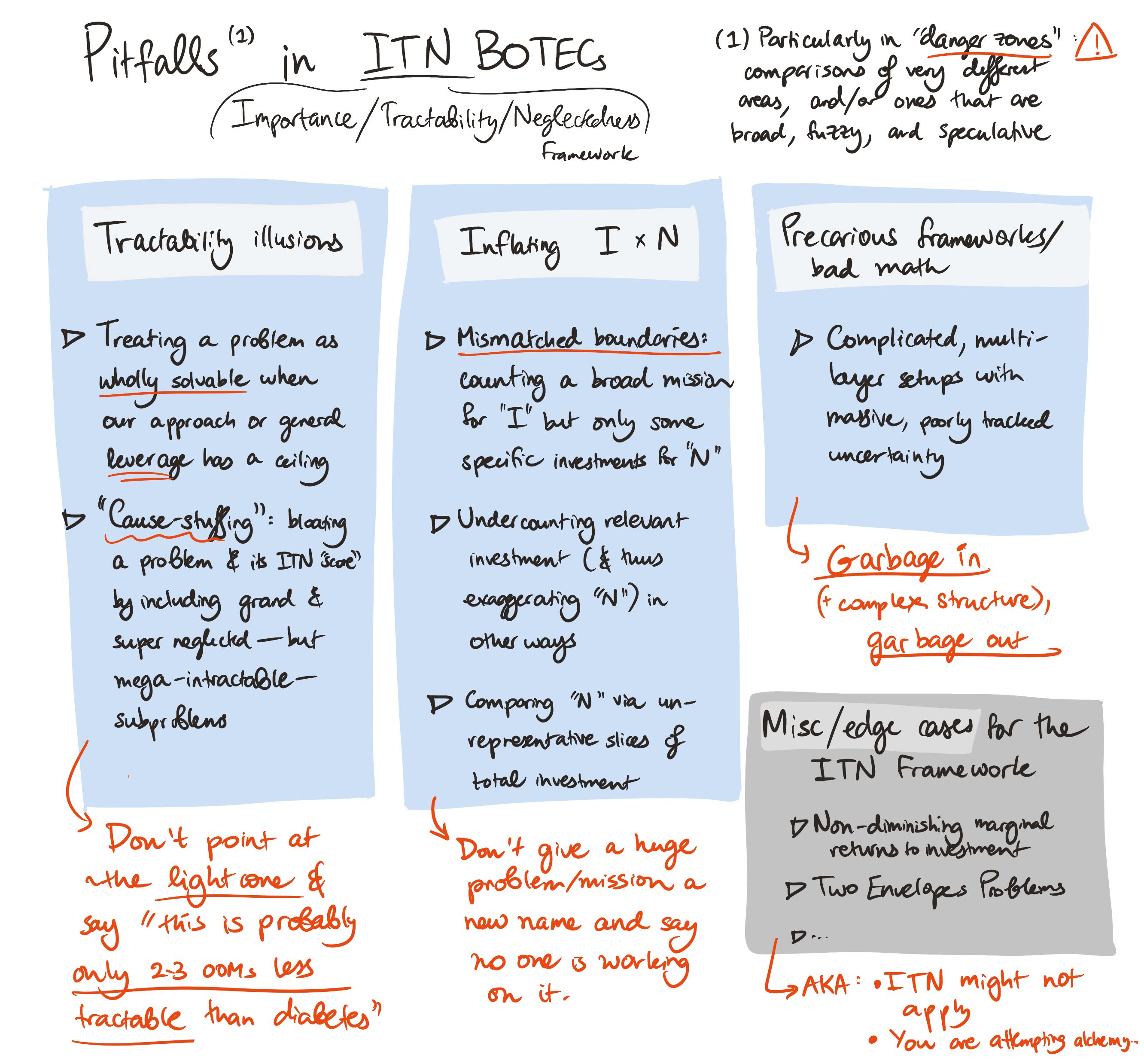

Yeah, this sort of thing is partly why I tend to feel better about BOTECs like (writing very quickly, tbc!):

...What could we actually accomplish if we (e.g.) doubled (the total stock/ flow of) investment in ~technical AIS work (specifically the stuff focused on catastrophic risks, in this general worldview)? (you could broaden if you wanted to, obviously)

Well, let's see:

- That might look like:

- adding maybe ~400(??) FTEs similar (in ~aggregate) to the folks working here now, distributed roughly in proportion to current efforts / profiles — plus the funding

Thank you! I used Procreate for these (on an iPad).[1]

(I also love Excalidraw for quick diagrams, have used & liked Whimsical before, and now also semi-grudgingly appreciate Canva.)

Relatedly, I wrote a quick summary of the post in a Twitter thread a few days ago and added two extra sketches there. Posting here too in case anyone finds them useful:

- ^

(And a meme generator for the memes.)

Yeah actually I think @Habryka [Deactivated] discusses these kinds of dynamics here: https://www.lesswrong.com/posts/4NFDwQRhHBB2Ad4ZY/the-filan-cabinet-podcast-with-oliver-habryka-transcript

Excerpt (bold mine, Habryka speaking):

...One of the core things that I was always thinking about with LessWrong, and that was my kind of primary analysis of what went wrong with previous LessWrong revivals, was [kind of] an iterated, [the term] “prisoner's dilemma” is overused, but a bit of an iterated prisoner's dilemma or something where, like, people needed to ha

Re not necessarily "optimizing" for the Forum, I guess my frame is:

The Online Team is the current custodian of an important shared resource (the Forum). If the team can't actually commit to fulfilling its "Forum custodian" duties, e.g. because the priorities of CEA might change, then it should probably start trying to (responsibly) hand that role off to another person/group.

(TBC this doesn't mean that Online should be putting all of its efforts into the Forum, just like a parent has no duty to spend all their energy caring for their child. And it's n...

I think going for Option 2 ("A bulletin board") or 3 ("Shut down") would be a pretty serious mistake, fwiw. (I have fewer/weaker opinions on 1, although I suspect I'm more pessimistic about it than ~most others.)

...

An internal memo I wrote in early 2023 (during my time on the Online Team) seems relevant, so I've made a public copy: Vision / models / principles for the Forum and the Forum+ team[1]

I probably no longer believe some of what I wrote there, but still endorse the broad points/models, which lead me to think, among other things:

- That people would proba

I just want to say that I'm really, really glad that I got the chance to work with JP, and I think JP's work has contributed a bunch to the Forum.

Below I'm sharing some quick misc notes on why that is.

Meta: sharing a public comment like this on a friend's post is pretty weird for me! But JP's work has mostly stayed behind the scenes and has thus gotten significantly less acknowledgement than it deserves, IMO, so I did feel the urge to post this kind of thing publicly. As a compromise between those feelings, y'all are getting the comment along ...

I think this is a good question, and it's something I sort of wanted to look into and then didn't get to! (If you're interested, I might be able to connect you with someone/some folks who might know more, though.)

Quick general takes on what private companies might be able to do to make their tools more useful on this front (please note that I'm pretty out of my depth here, so take this with a decent amount of salt -- and also this isn't meant to be prioritized or exhaustive):

- Some of the vetting/authorization processes (e.g. FedRAMP) are burdensome, a

the main question is how high a priority this is, and I am somewhat skeptical it is on the ITN pareto frontier. E.g. I would assume plenty of people care about government efficiency and state capacity generally, and a lot of these interventions are generally about making USG more capable rather than too targeted towards longtermist priorities.

Agree that "how high-priority should this be" is a key question, and I'm definitely not sure it's on the ITN pareto frontier! (Nice phrase, btw.)

Quick notes on some things that raise the importance for me, thoug...

...I imagine there might be some very clever strategies to get a lot of the benefits of AI without many of the normal costs of integration.

For example:

- The federal government makes heavy use of private contractors. These contractors are faster to adopt innovations like AI.

- There are clearly some subsets of the government that matter far more than others. And there are some that are much easier to improve than others.

- If AI strategy/intelligence is cheap enough, most of the critical work can be paid for by donors. For example, we have a situation where there's a

Thanks for this comment! I don’t view it as “overly critical.”

Quickly responding (just my POV, not Forethought’s!) to some of what you brought up ---

(This ended up very long, sorry! TLDR: I agree with some of what you wrote, disagree with some of the other stuff / think maybe we're talking past each other. No need to respond to everything here!)

A. Motivation behind writing the piece / target audience / vibe / etc.

Re:

...…it might help me if you explained more about the motivation [behind writing the article] [...] the article reads like you decided the co

Quick (visual) note on something that seems like a confusion in the current conversation:

Others have noted similar things (eg, and Will’s earlier take on total vs human extinction). You might disagree with the model (curious if so!), but I’m a bit worried that one way or another people are talking past each other (at least from skimming the discussion).

(Commenting via phone, sorry for typos or similar!)

What actually changes about what you’d work on if you concluded that improving the future is more important on the current margin than trying to reduce the chance of (total) extinction (or vice versa)?

Curious for takes from anyone!

I wrote a Twitter thread that summarizes this piece and has a lot of extra images (I probably went overboard, tbh.)

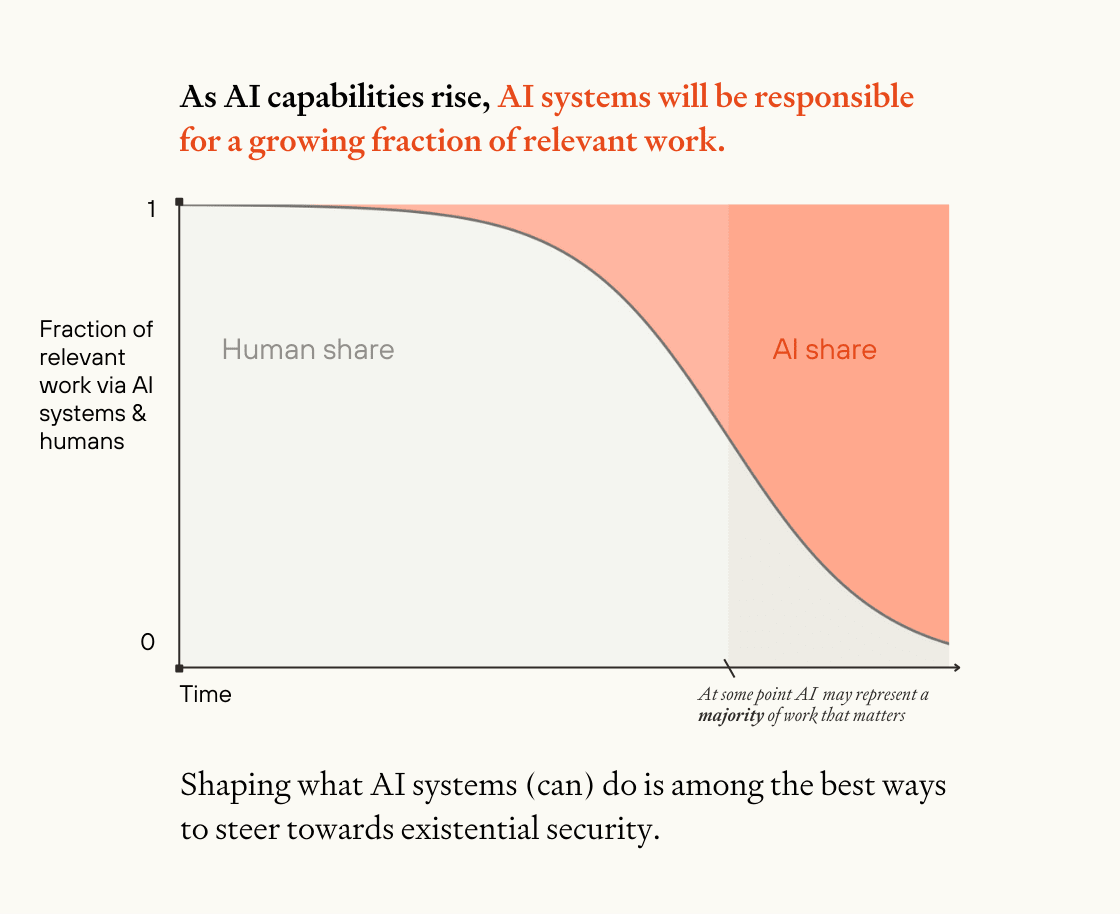

I kinda wish I'd included the following image in the piece itself, so I figured I'd share it here:

Follow-up:

Quick list of some ways benchmarks might be (accidentally) misleading[1]

- Poor "construct validity"[2] (& systems that are optimized for the metric)

- The connection between what the benchmark is measuring and what it's trying to measure (or what people think it's measuring) is broken. In particular:

- Missing critical steps

- When benchmarks are trying to evaluate progress on some broad capability (like "engineering" or "math ability" or "planning"), they're often testing specific performance on meaningfully simpler tasks. Performance on those t

TLDR: Notes on confusions about what we should do about digital minds, even if our assessments of their moral relevance are correct[1]

I often feel quite lost when I try to think about how we can “get digital minds right.” It feels like there’s a variety of major pitfalls involved, whether or not we’re right about the moral relevance of some digital minds.

Digital-minds-related pitfalls in different situations:

| Reality ➡️ / Our perception ⬇️ | These digital minds are (non-trivially) morally relevant[2] | These digital minds are not morally relevant |
| --- | --- | --- |
| We see thes | | |

Thanks for saying this!

I’m trying to get back into the habit of posting more content, and aiming for a quick take makes it easier for me to get over perfectionism or other hurdles (or avoid spending more time on this kind of thing than I endorse). But I’ll take this as a nudge to consider sharing things as posts more often. :)

When I try to think about how much better the world could be, it helps me to sometimes pay attention to the less obvious ways that my life is (much) better than it would have been, had I been born in basically any other time (even if I was born among the elite!).

So I wanted to make a quick list of some “inconspicuous miracles” of my world. This isn’t meant to be remotely exhaustive, and is just what I thought of as I was writing this up. The order is arbitrary.

1. Washing machines

It’s amazing that I can just put dirty clothing (or dishes, etc.) into a ...

Notes on some of my AI-related confusions[1]

It’s hard for me to get a sense for stuff like “how quickly are we moving towards the kind of AI that I’m really worried about?” I think this stems partly from (1) a conflation of different types of “crazy powerful AI”, and (2) the way that benchmarks and other measures of “AI progress” de-couple from actual progress towards the relevant things. Trying to represent these things graphically helps me orient/think.

First, it seems useful to distinguish the breadth or generality of state-of-the-art AI ...

I don't think the situation is actually properly resolved by the recent waivers (as @Garrison points out below). See e.g. this thread and the linked ProPublica article. From the thread:

There is mass confusion and fear, both in and outside government. The aid organizations say they either don’t know how to get a waiver exempting them from Trump’s order or have no idea if/when theirs might get approved.

And from the article:

...Despite an announcement earlier this week ostensibly allowing lifesaving operations to continue, those earlier orders have not been resci

As a datapoint: despite (already) agreeing to a large extent with this post,[1] IIRC I answered the question assuming that I do trust the premise.

Despite my agreement, I do think there are certain kinds of situations in which we can reasonably use small probabilities. (Related post: Most* small probabilities aren't pascalian, and maybe also related.)

More generally: I remember appreciating some discussion on the kinds of thought experiments that are useful, when, etc. I can't find it quickly, but possible starting points could be this LW po...

Before looking at what you wrote, I was most skeptical of the existence of (plausibly) cost-effective interventions on this front. In particular, I had a vague background view that some interventions work but are extremely costly (financially, politically, etc.), and that other interventions either haven't been tried or don't seem promising. I was probably expecting your post to be an argument that we/most people undervalue the importance of peace (and therefore costly interventions actually look better than they might) or an argument that there are some n...

Addendum to the post: an exercise in giving myself advice

The ideas below aren't new or very surprising, but I found it useful to sit down and write out some lessons for myself. Consider doing something similar; if you're up for sharing what you write as a comment on this post, I'd be interested and grateful.

(1) Figure out my reasons for working on (major) projects and outline situations in which I would want myself to leave, ideally before getting started on the projects

I plan on trying to do this for any project that gives me any (ethical) doubts, a...

A note on how I think about criticism

(This was initially meant as part of this post,[1] but while editing I thought it didn't make a lot of sense there, so I pulled it out.)

I came to CEA with a very pro-criticism attitude. My experience there reinforced those views in some ways,[2] but it also left me more attuned to the costs of criticism (or of some pro-criticism attitudes). (For instance, I used to see engaging with all criticism as virtuous, and have changed my mind on that.) My overall takes now aren’t very crisp or easily summarizable,...

A note on mistakes and how we relate to them

(This was initially meant as part of this post[1], but I thought it didn't make a lot of sense there, so I pulled it out.)

“Slow-rolling mistakes” are usually much more important to identify than “point-in-time blunders,”[2] but the latter tend to be more obvious.

When we think about “mistakes”, we usually imagine replying-all when we meant to reply only to the sender, using the wrong input in an analysis, including broken hyperlinks in a piece of media, missing a deadline, etc. I tend to feel pretty horrible ...

I'm going to butt in with some quick comments, mostly because:

- I think it's pretty important to make sure the report isn't causing serious misunderstandings

- and because I think it can be quite stressful for people to respond to (potentially incorrect) criticisms of their projects, or to content that seems to misrepresent their project(s), and I think it can help if someone else helps disentangle/clarify things a bit. (To be clear, I haven't run this past Linch and don't know if he's actually finding this stressful or the like. And I don't want to disc

I'd suggest using a different term or explicitly outlining how you use "expert" (ideally both in the post and in the report, where you first use the term) since I'm guessing that many readers will expect that if someone is called "expert" in this context, they're probably "experts in EA meta funding" specifically — e.g. someone who's been involved in the meta EA funding space for a long time, or someone with deep knowledge of grantmaking approaches at multiple organizations. (As an intuition pump and personal datapoint, I wouldn't expect "experts" in the c...

I know Grace has seen this already, but in case others reading this thread are interested: I've shared some thoughts on not taking the pledge (yet) here.[1]

Adding to the post: part of the value of pledges like this comes from their role as a commitment mechanism to prevent yourself from drifting away from values and behaviors that you endorse. I'm not currently worried about drifting in this way, partly because I work for CEA and have lots of social connections to extremely altruistic people. If I started working somewhere that isn't explicitly EA-oriented...

Not sure if this already exists somewhere (would love recommendations!), but I'd be really excited to see a clear and carefully linked/referenced overview or summary of what various agriculture/farming ~lobby groups do to influence laws and public opinion, and how they do it (with a focus on anything related to animal welfare concerns). This seems relevant.

Just chiming in with a quick note: I collected some tips on what could make criticism more productive in this post: "Productive criticism: what could help?"

I'll also add a suggestion from Aaron: If you like a post, tell the author! (And if you're not sure about commenting with something you think isn't substantive, you can message the author a quick note of appreciation or even just heart-react on the post.) I know that I get a lot out of appreciative comments/messages related to my posts (and I want to do more of this myself).

I'm basically always interested in potential lessons for EA/EA-related projects from various social movements/fields/projects.

Note that you can find existing research that hasn't been discussed (much) on the Forum and link-post it (I bet there's a lot of useful stuff out there), maybe with some notes on your takeaways.

Example movements/fields/topics:

- Environmentalism — I've heard people bring up the environmentalist/climate movement a bunch in informal discussions as an example for various hypotheses, including "movements splinter/develop highly

I'd love to see two types of posts that were already requested in the last version of this thread:

- From Aaron: "More journalistic articles about EA projects. [...] Telling an interesting story about the work of a person/organization, while mixing in the origin story, interesting details about the people involved, photos, etc."

- From Ben: "More accessible summaries of technical work." (I might share some ideas for technical work I'd love to see summarized later.)

I really like this post and am curating it (I might be biased in my assessment, but I endorse it and Toby can't curate his own post).

A personal note: the opportunity framing has never quite resonated with me (neither has the "joy in righteousness" framing), but I don't think I can articulate what does motivate me. Some of my motivations end up routing through something ~social. For instance, one (quite imperfect, I think!) approach I take[1] is to imagine some people (sometimes fictional or historical) I respect and feel a strong urge to be the ...

Thanks for sharing this! I'm going to use this thread as a chance to flag some other recent updates (no particular order or selection criteria — just what I've recently thought was notable or recently mentioned to people):

- California proposes sweeping safety measure for AI — State Sen. Scott Wiener wants to require companies to run safety tests before deploying AI models. (link goes to "Politico Pro"; I only see the top half)

- Here's also Senator Scott Wiener's Twitter thread on the topic (note the endorsements)

- See also the California effect

- Trump: AI ‘m

I don't actually think you need to retract your comment: most of the teams they used did have (at least some) biological expertise, and it's really unclear how much info the addition of the crimson cells adds. (You could add a note saying that they did try to evaluate this with the addition of two crimson cells? In any case, up to you.)

(I will also say that I don't actually know anything about what we should expect about the expertise that we might see on terrorist cells planning biological attacks — i.e. I don't know which of these is actually appropriate.)

I'm also worried about an "epistemics" transformation going poorly, and agree that how it goes isn't just a question of getting the right ~"application shape" — something like differential access/adoption[1] matters here, too.

@Owen Cotton-Barratt, @Oliver Sourbut, @rosehadshar and I have been thinking a bit about these kinds of questions, but not as much as I'd like (there's just not enough time). So I'd love to see more serious work on things like "what might it look like for our society to end up with much better/worse epistemic infrastructure (and how m...