matthew.vandermerwe

Posts 12

Comments 64

Topic contributions 5

There's a whole chapter in superintelligence on human intelligence enhancement via selective breeding

This is false and should be corrected. There is a section (not a whole chapter) on biological enhancement, within which there is a single paragraph on selective breeding:

A third path to greater-than-current-human intelligence is to enhance the functioning of biological brains. In principle, this could be achieved without technology, through selective breeding. Any attempt to initiate a classical large-scale eugenics program, however, would confront major political and moral hurdles. Moreover, unless the selection were extremely strong, many generations would be required to produce substantial results. Long before such an initiative would bear fruit, advances in biotechnology will allow much more direct control of human genetics and neurobiology, rendering otiose any human breeding program. We will therefore focus on methods that hold the potential to deliver results faster, on the timescale of a few generations or less.

[Update from Pablo & Matthew]

As we reached the one-year mark of Future Matters, we thought it a good moment to pause and reflect on the project. While the newsletter has been a rewarding undertaking, we’ve decided to stop publication in order to dedicate our time to new projects. Overall, we feel that launching Future Matters was a worthwhile experiment, which met (but did not surpass) our expectations. Below we provide some statistics and reflections.

Statistics

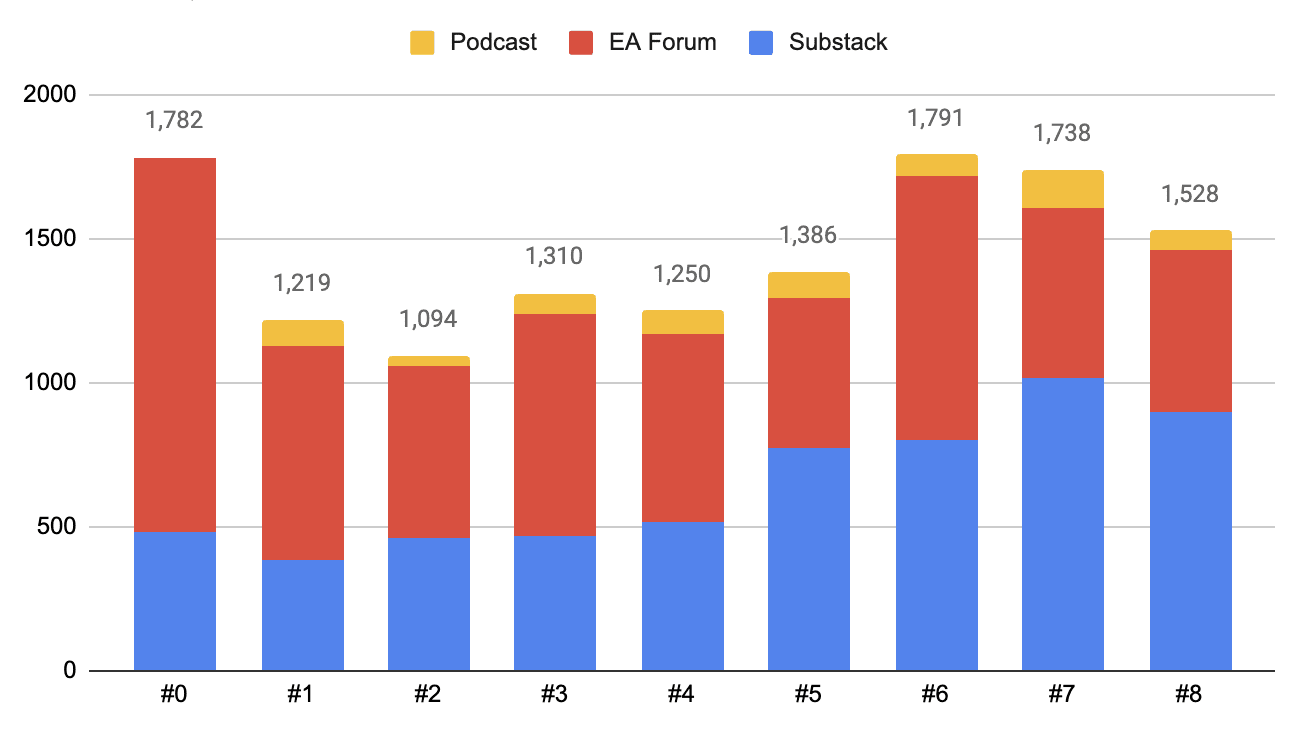

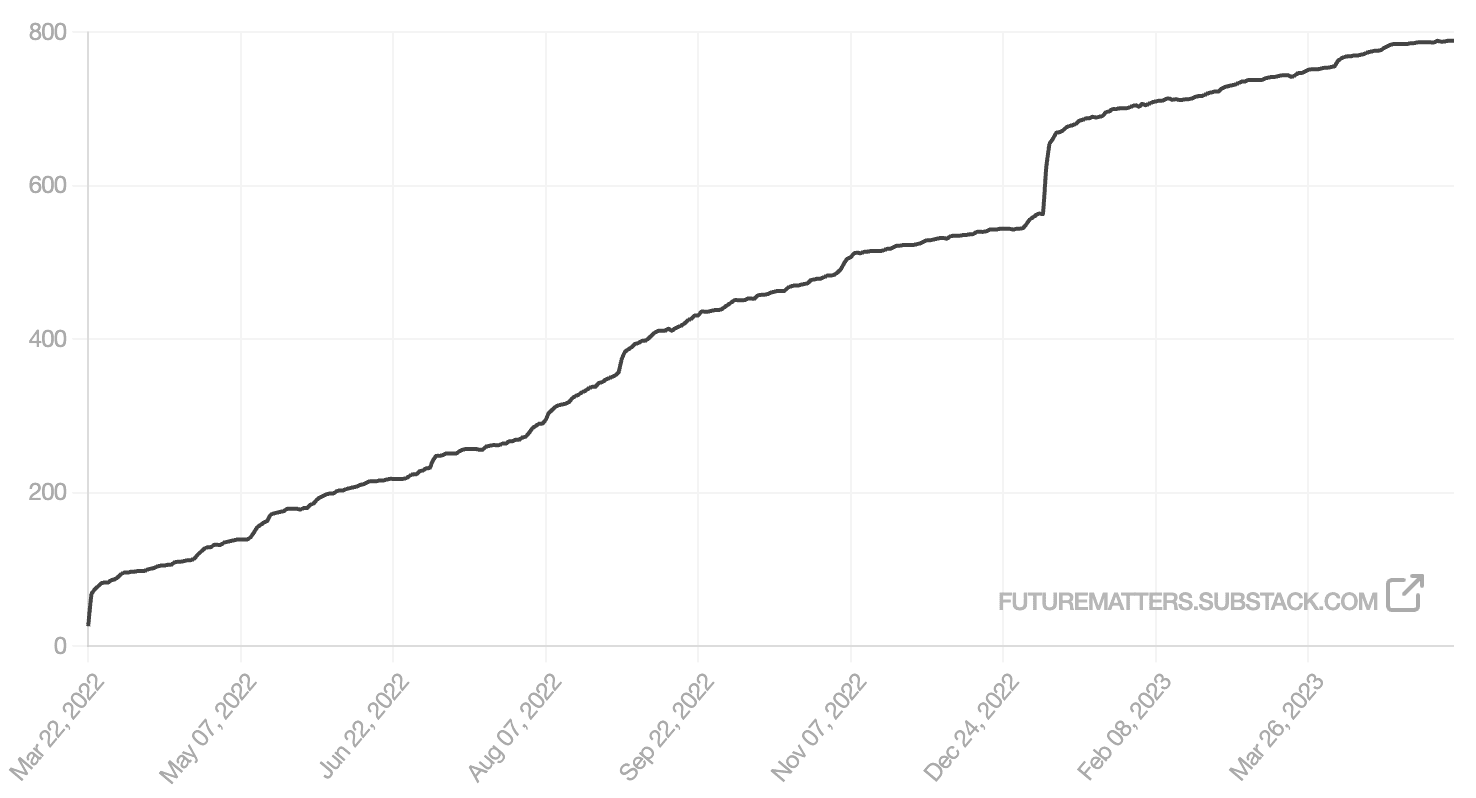

Aggregated across platforms, we had between 1,000 and 1,800 impressions per issue. Over time, an increasingly large share came from Substack, reflecting our growth in subscribers on that platform and the absence of an equivalent subscription service on the EA Forum.

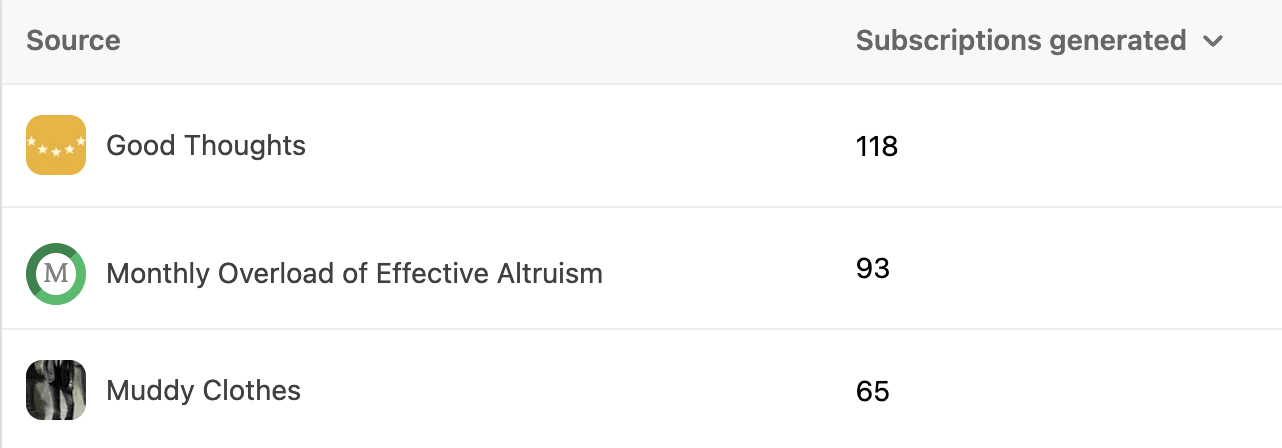

A substantial fraction of our subscriptions came via other EA newsletters:

Reflections

Time investment. Writing the newsletter took considerably more time than we had anticipated. Much of that time involved two activities: (1) actively scanning Twitter lists, EA News, email alerts and other sources for suitable content and (2) reading and summarizing this material. The publication process itself was also pretty time-consuming, but we were able to fully delegate it to a very efficient and reliable assistant. Overall, we each spent at least 2–3 days working on each issue.

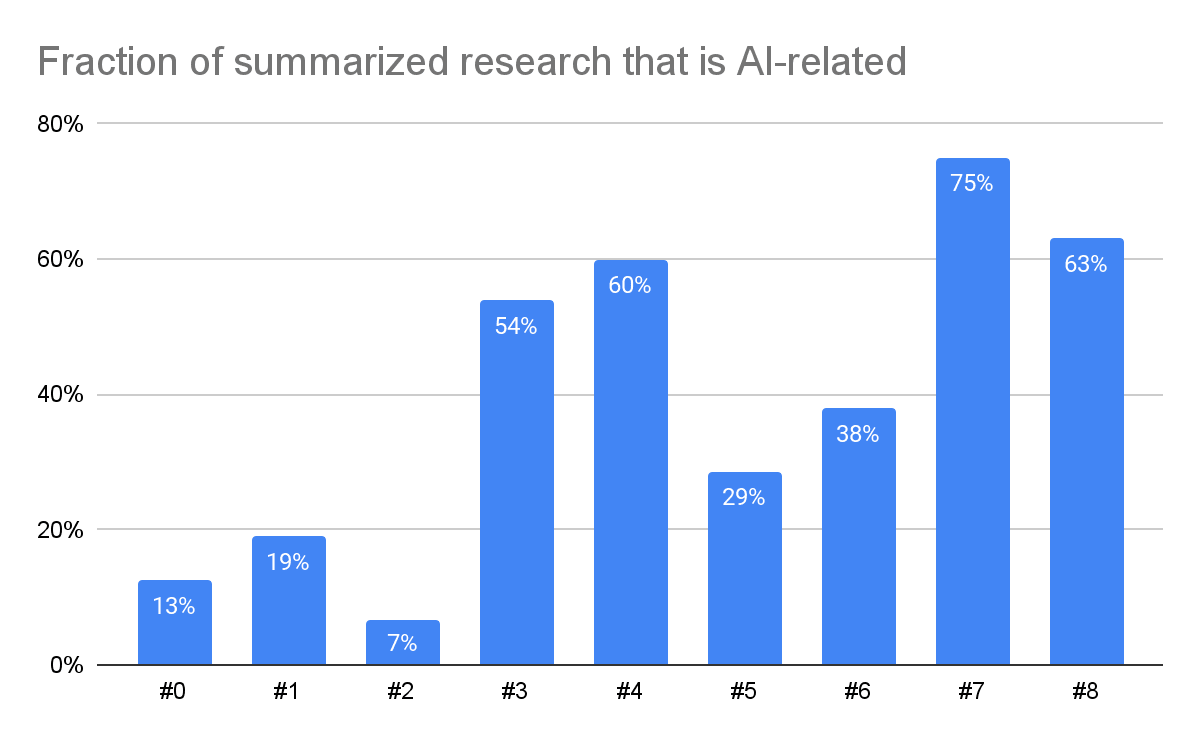

AI stuff. Over the course of the year, AI-related content began to dwarf other topics, to the point where Future Matters became mostly AI-focused.


We feel like this shift in priorities was warranted — the recent pace of AI progress has been staggering, as has been the recklessness of certain AI labs. All the more surprising has been the receptiveness of the public and media to taking AI risk concerns seriously (e.g. the momentum behind measures to slow down AI progress).

In this context, it appears to us that the value of a newsletter focused on longtermism and existential risk generally is lower than it was when we started it, relative to a newsletter with a sole focus on AI risk. But we don’t think we’re the best people to run such a newsletter. There are already a number of good active AI newsletters out there, which have their own focuses:

- ImportAI (Jack Clark)

- AI Safety Newsletter (CAIS/Dan Hendrycks)

- ChinAI (Jeffrey Ding)

- Navigating AI Risks (Campos et al)

The recent progress in AI has made us more reluctant to continue investing time in this project for a separate reason. Much of the work Future Matters demands, as noted earlier, involves collecting and summarizing content. But these are tasks that GPT-4 can already do tolerably well, and which we expect could be mostly delegated within the next few months.

Listeners are likely to interpret, from your focus on character, and given your position as a leading EA speaking on the most prominent platform in EA - the opening talk at EAG - that this is all effective altruists should think about.

Really? I don't think I've ever encountered someone interpreting the topic of an EAG opening talk as being "all EAs should think about".

At EAG London 2022, they distributed hundreds of flyers and stickers depicting Sam on a bean bag with the text "what would SBF do?".

These were not an official EAG thing — they were printed by an individual attendee.

To my knowledge, never before were flyers depicting individual EAs at EAG distributed. (Also, such behavior seems generally unusual to me, like, imagine going to a conference and seeing hundreds of flyers and stickers all depicting one guy. Doesn't that seem a tad culty?)

Yeah it was super weird.

This break-even analysis would be more appropriate if the £15m had been ~burned, rather than invested in an asset which can be sold.

If I buy a house for £100k cash and it saves me £10k/year in rent (net costs), then after 10 years I've broken even in the sense of [cash out]=[cash in], but I also now have an asset worth £100k (+10y price change), so I'm doing much better than 'even'.

Agreed. And from the perspective of the EA portfolio, the investment has some diversification benefits. YTD Oxford property prices are up +8%, whereas the rest of the EA portfolio (Meta/Asana/crypto) has dropped >50%.

Kudos! I see the blog is still hosted at ineffectivealtruismblog.com, though. Fortunately both reflectivealtruism.com and reflectivealtruismblog.com are currently available.