MHR🔸

Bio

I work as an engineer, donate 10% of my income, and occasionally enjoy doing independent research. I'm most interested in farmed animal welfare and the nitty-gritty details of global health and development work. In 2022, I was a co-winner of the GiveWell Change Our Mind Contest.

Posts 7

Comments 194

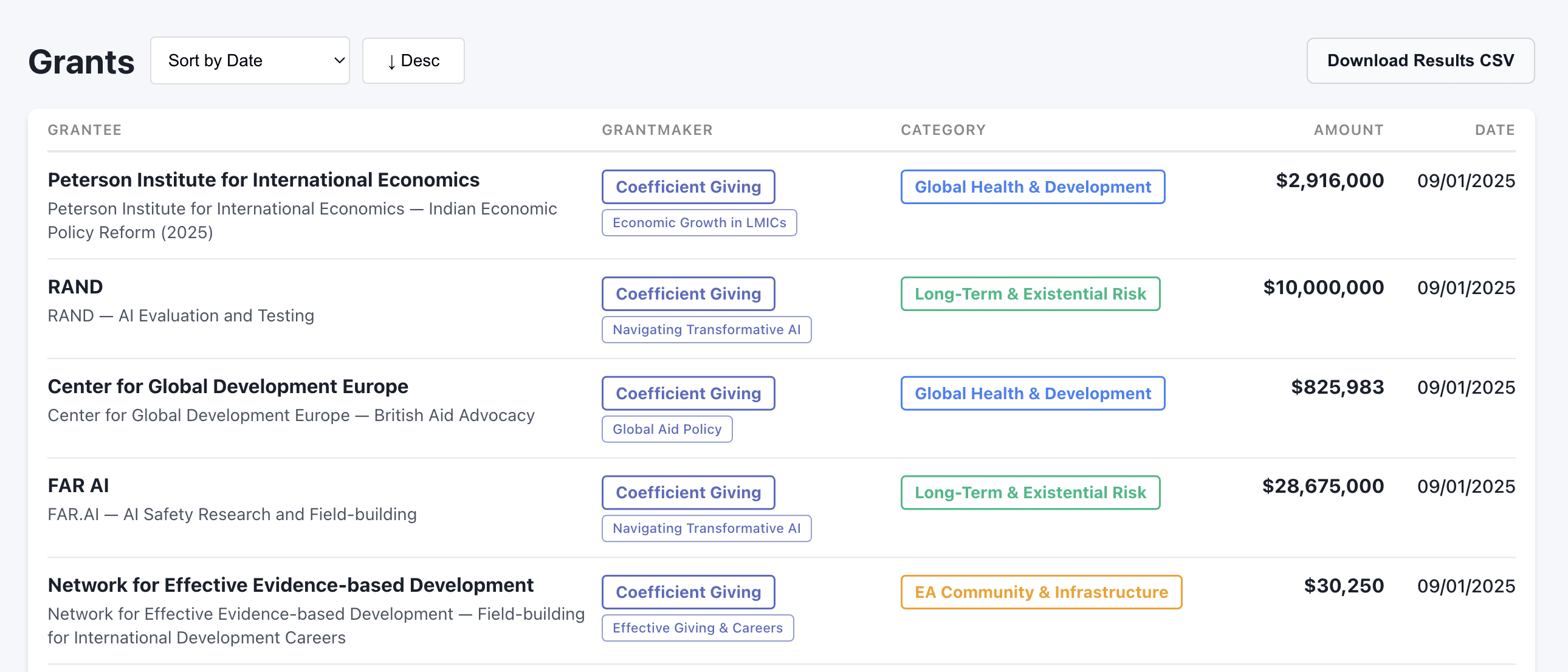

This is really cool! One possible issue: If I filter to Coefficient Giving and then sort by date, I see no grants since September:

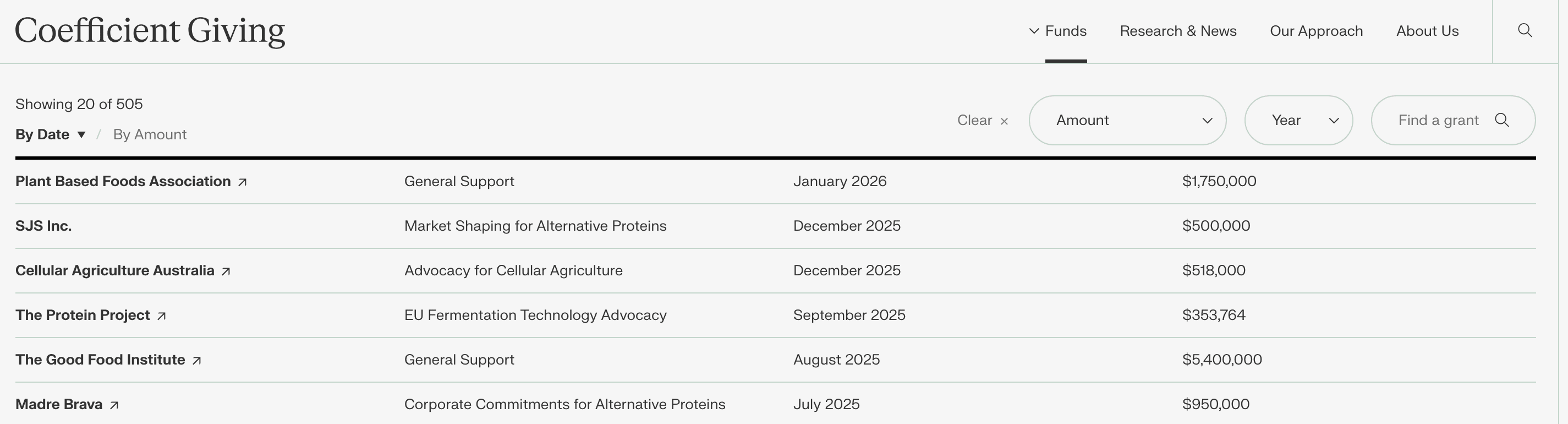

But if I go to an example fund from CG, such as their Farm Animal Welfare Fund, I see more recent grants:

You mentioned survey research by Rethink Priorities a couple times in the post. However, the survey found that "the Donation message [a pure pitch to donate] was rated as more compelling than the Diet distancing message [a pitch to donate that specifically called out that one doesn't need to go vegan to help animals]." The difference in effect sizes was small, but I'm skeptical that the survey really supports the theory of change you were going for here.

RP used to have an AI Governance and Strategy team, and if I understand correctly, that team spun out into IAPS. Can you elaborate on why that team was spun out, and why you think it would now be a good fit to restart that team within RP?

Looking into this a bit more, from this thread it seems like OP's grants database may currently be missing as much as half of their 2025 GCR spending.

This is really awesome work. It's great to have someone put this together!

Hopefully the drop in @GiveWell's grants is just a timing or reporting issue and not nearly as large as it seems. Maybe they'll be able to clarify further!

If you wanted to extend this and cover more EA grants, I know Farmkind has a public database of grants from their platform that would be great to add. It would also be awesome if this could capture high-impact donations from Founders Pledge, but I'm not sure they provide granular enough data to track by year and cause area. Maybe talking to @Matt_Lerner could offer some insight?

This year's recommendations have a pretty wide range of methods: institutional meat reduction, policy advocacy, corporate campaigning, producer outreach/support, and academic field building. Was having a wide range of approaches represented among the recommended charities something you were intentionally aiming to have, or just happenstance from the evaluation results?

Thanks so much for all the research and effort that went into this! This is a really exciting group of organizations.

I was, however, curious about one aspect of the numeric cost-effectiveness estimates. It's great to see these as part of ACE's process, and I definitely learned a lot from them! But I was surprised to see how narrow the estimates were for the two Shrimp Welfare Project programs, given how radically uncertain I think basically everyone is about some of the key parameters influencing the results. Am I right in understanding that this disconnect is largely coming from ACE using AIM's suffering-adjusted day estimates per animal impacted, and those estimates not including uncertainty ranges? If so, would ACE consider trying to add uncertainty estimates on those numbers in future years?

Listed cost-effectiveness estimates:

AWO:

- ECC: 4–126 SADs/$

- Cage-free: 8–67 SADs/$

SWP:

- HSI: 43–53 SADs/$

- SSFI: 464–840 SADs/$

SVB:

- IMR: 6–14 SADs/$

THL:

- Cage-Free: 17–351 SADs/$

- BCC: 2–89 SADs/$

WAI: Unknown SADs/$
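One rough way to make the "how narrow" comparison concrete is to divide each range's upper bound by its lower bound. The sketch below does that for the figures listed above. The ratio as a measure of spread and the names `estimates` and `spread` are assumptions chosen for illustration, not part of ACE's or AIM's methodology. WAI is left out because its estimate is listed as unknown.

```python
# Ranges copied from the list above, in SADs per dollar: (low, high).
# Keys are (organization, program) as abbreviated in the list.
estimates = {
    ("AWO", "ECC"): (4, 126),
    ("AWO", "Cage-free"): (8, 67),
    ("SWP", "HSI"): (43, 53),
    ("SWP", "SSFI"): (464, 840),
    ("SVB", "IMR"): (6, 14),
    ("THL", "Cage-Free"): (17, 351),
    ("THL", "BCC"): (2, 89),
}


def spread(low, high):
    """Illustrative width measure (an assumption): upper bound / lower bound."""
    return high / low


spreads = {key: spread(*bounds) for key, bounds in estimates.items()}

# Print the programs from narrowest to widest range.
for (org, program), ratio in sorted(spreads.items(), key=lambda item: item[1]):
    print(f"{org} {program}: {ratio:.1f}x")
```

On this measure, the two SWP ranges are the narrowest of the group (roughly 1.2x and 1.8x between bounds), while THL's BCC range spans about 44.5x.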

Nice post and visualization! You might be interested in a different but related thought experiment from Richard Chappell.

I second Vasco's desire for the dashboard to be maintained again!