plex

Posts 18

Comments 69

Mostly like the post, but:

"I mostly view the 10% pledge as a social commitment device rather than a sensible rule for how much to donate."

strikes me as missing the fact that small donors can spend much more cognition on evaluation per dollar donated and are less heavily optimized against, so there are many high-EV opportunities which can ~only be picked up by small-scale donors, especially within-network donations to support projects that are too early-stage or speculative to pass grantmaker bars.

Oops, yup, fixed the link.

And wouldn't you expect those fitness defects to evolve away over time reasonably well? Seems like the kind of thing that would be a ton of individually minor distributional shifts which would have normal selection gradients over them, if you had a decent population running for a while?

Plus now they don't have to maintain their usual set of anti-viral defenses, which probably frees up a lot of novel design options, plus some genetic space and metabolic resources? I'd mostly expect that within a year or two of running as a large population (say, in a large-scale commercial bioreactor) they would strongly out-compete normal bacteria.

we’re 10–30 years away, but: Only $500 million to $1 billion could potentially be sufficient and accelerate this timeline

This is not great. However, virus immunity is a huge part, maybe the majority, of what would give an organism the competitive advantage needed to wreck the biosphere, and unlike mirror life, virus immunity is very commercially valuable. And it's not a future projection: this was made in 2024, and the containment strategy seems kinda not the thing you want to bet the biosphere on.

I think the people concerned about mirror life have a much more urgent warm-up, right now: "New Synthetic E. coli Is Immune to Bacteriophage Infection"

Changelog

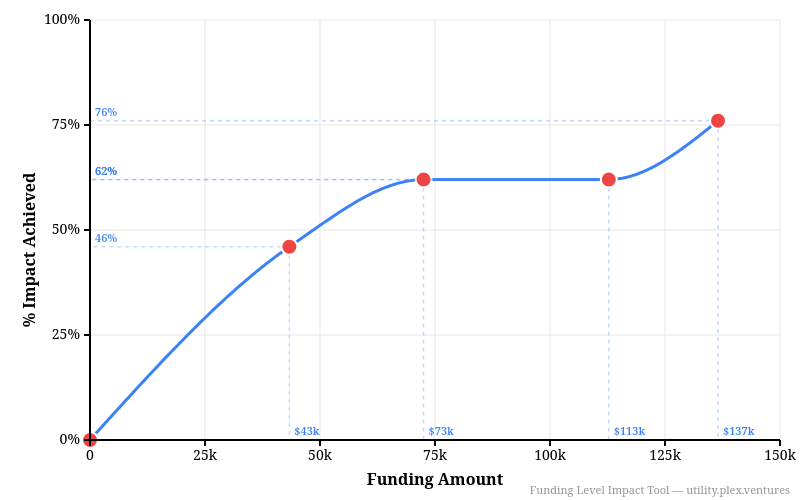

New Features:

- Added Ctrl+Z/Ctrl+Y undo/redo - experiment freely and roll back mistakes

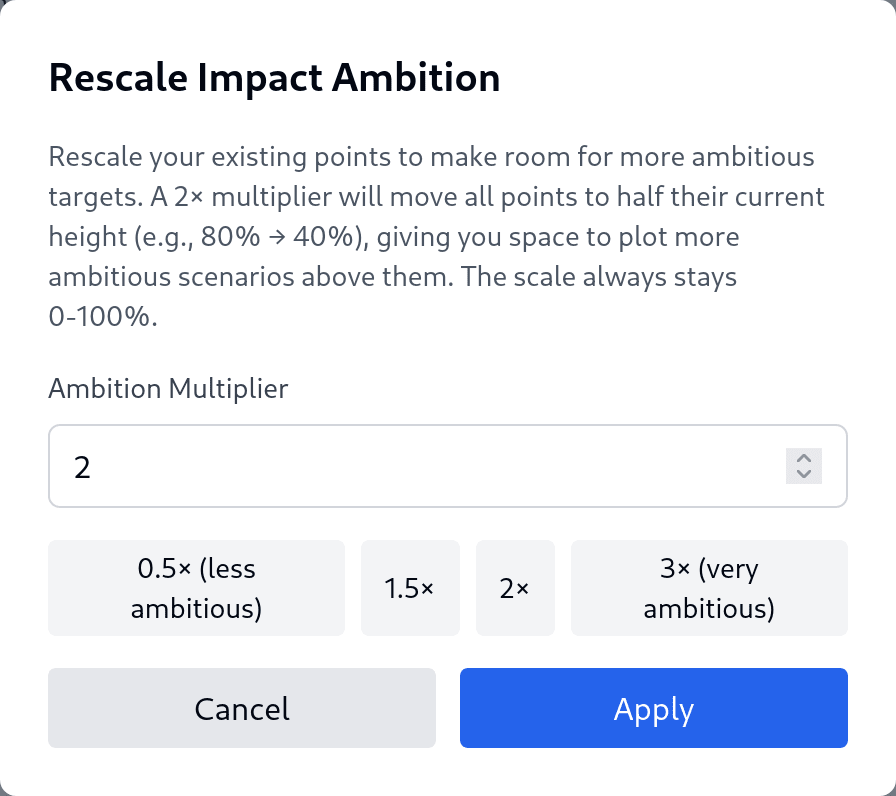

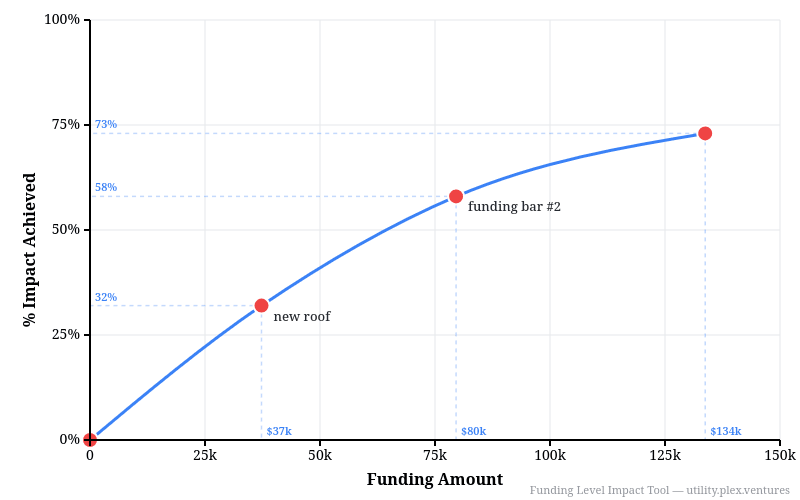

- 🚀 Set ambition level - rescales % so you can extend a project[1]

- 📸 Copy graph to clipboard - Easily grab the image

- Dotted reference lines drop from each point to show exact dollar amounts and extend to the left to show exact % amounts[2]

- Clear notification - Shows "Points cleared - press Ctrl+Z to undo" when you clear all points

- 🧲 Snap to Grid - Enable magnetic snapping to round numbers (off by default)

- Right click to add text to breakpoints[3]

- Smart currency input - type "90k" or "1.5M" and it auto-converts

- Input validation with helpful error messages

- Touch scrolling fixed - Dragging points on mobile doesn't scroll the page anymore
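To give a feel for what the smart currency input and snap-to-grid items could look like in code, here is a minimal Python sketch. It is not the tool's actual implementation: the function names `parse_amount` and `snap_to_grid`, the accepted suffixes (k, M, B), and the error wording are all assumptions made for illustration.

```python
import re

# Hypothetical suffix table: the tool's real list of accepted suffixes is not known.
_SUFFIXES = {"": 1, "k": 1_000, "m": 1_000_000, "b": 1_000_000_000}

# Optional "$", a plain or decimal number, then an optional one-letter suffix.
_PATTERN = re.compile(r"^\$?\s*(\d+(?:\.\d+)?)\s*([kmb]?)$", re.IGNORECASE)


def parse_amount(text: str) -> float:
    """Convert shorthand such as "90k" or "1.5M" into a dollar amount."""
    cleaned = text.strip().replace(",", "")
    match = _PATTERN.match(cleaned)
    if match is None:
        # Illustrative validation message, not the tool's actual wording.
        raise ValueError(f"Could not read {text!r} as an amount; try e.g. 90k or 1.5M")
    number, suffix = match.groups()
    return float(number) * _SUFFIXES[suffix.lower()]


def snap_to_grid(value: float, step: float) -> float:
    """Snap a value to the nearest multiple of `step` (an assumed grid spacing).

    Uses Python's round(), so exact halfway cases go to the even multiple.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    return round(value / step) * step
```

Under these assumptions, `parse_amount("90k")` gives `90000.0`, `parse_amount("1.5M")` gives `1500000.0`, and `snap_to_grid(93_400, 5_000)` gives `95000`. Input that does not match the pattern raises a `ValueError` with a hint about the expected format.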

Language Updates:

- Guide describes all features properly

- Changed "Utility" → "Impact" throughout (less jargon!)

- New title: "Plot Your Impact at Different Funding Levels"

- Clearer subtitle mentions sharing with funders

- Added watermark with stable URL

Accessibility:

- Keyboard navigation - Can focus and navigate the graph with keyboard

- Screen reader support - All buttons labeled, status messages announced

- Modal focus trap - Can't accidentally tab outside modal dialog

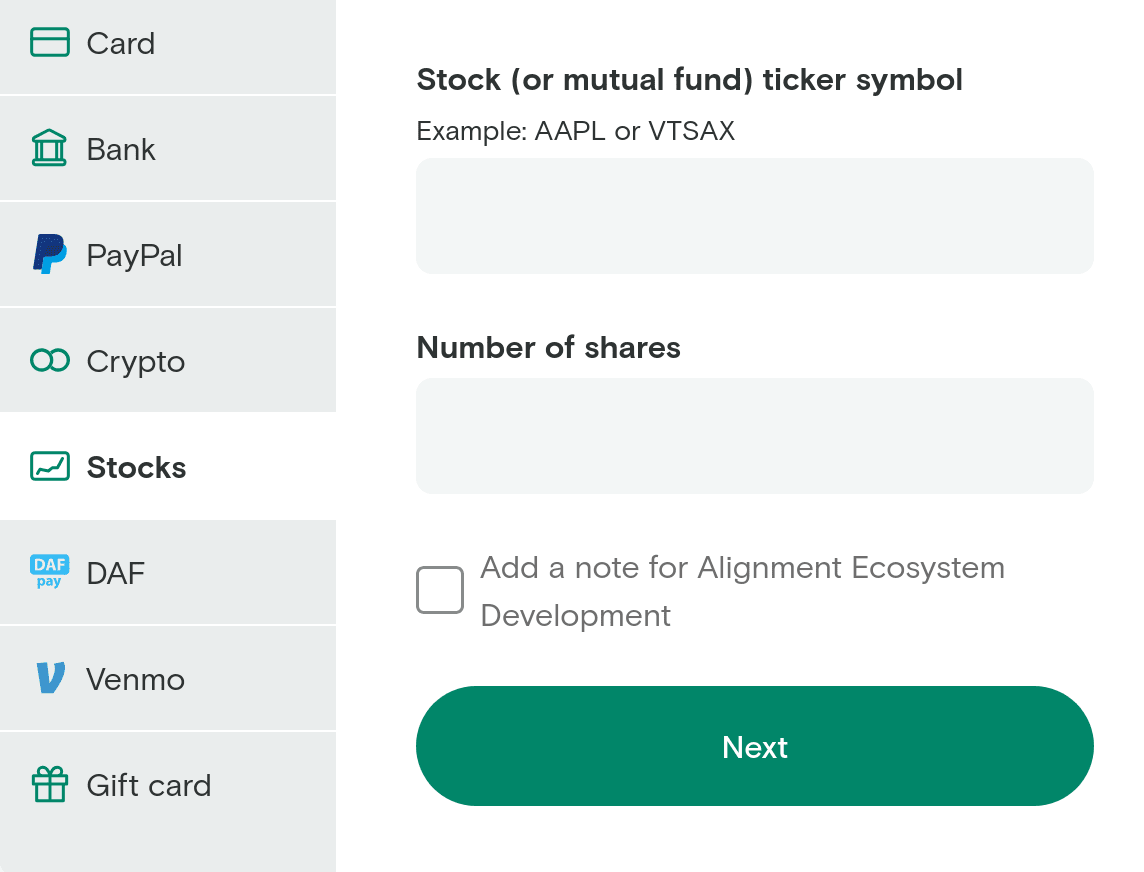

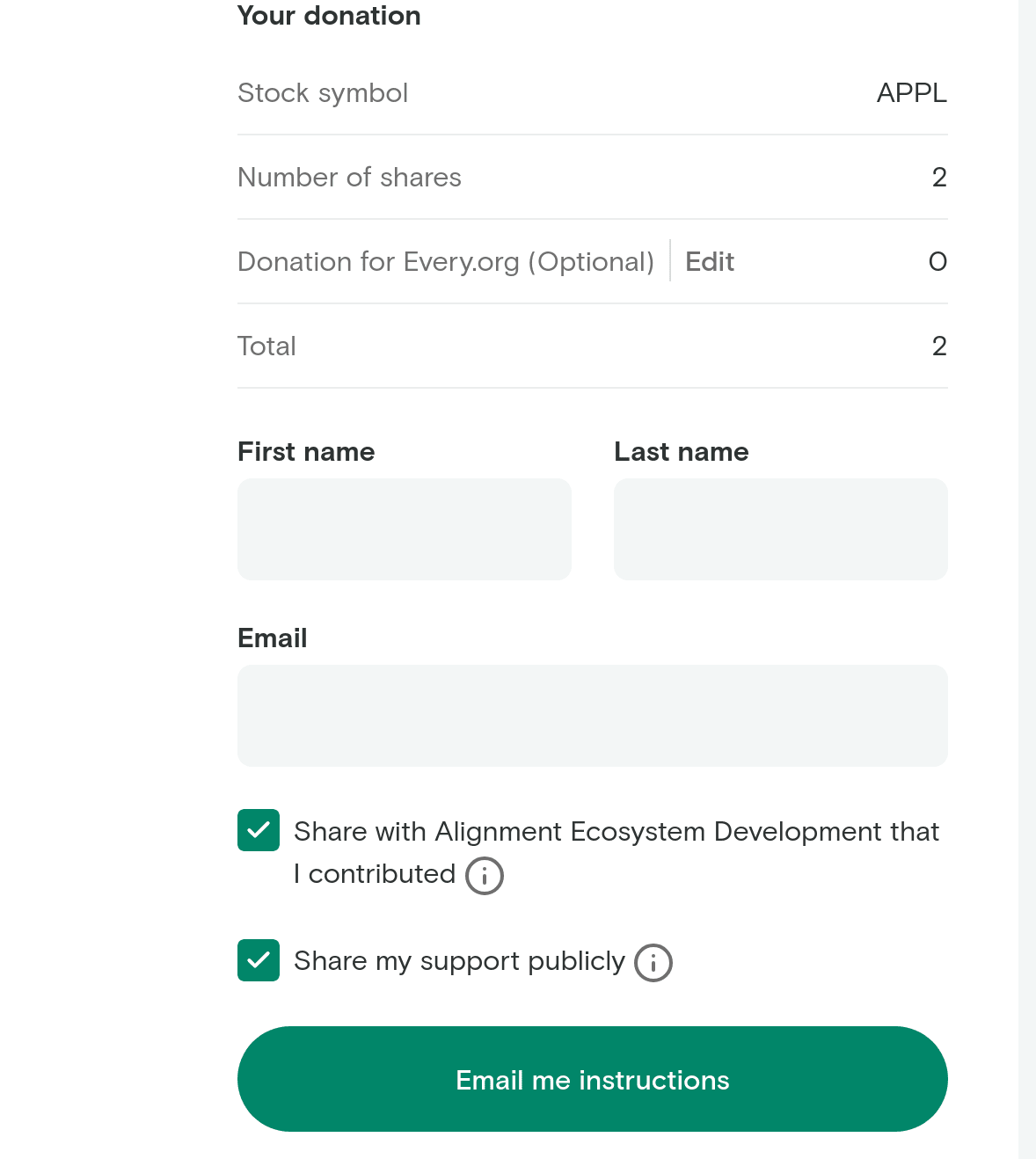

The actual logistics of donating stock are a pain.

If they set up an every.org page it looks straightforward? e.g. select stock on this

I've been doing this self-funded for quite a few years[1] and would be enthusiastic to talk to anyone who gets this position, to swap models and contacts.

[1] This has produced https://www.aisafety.com/ (including the map of AI Safety, list of funders, and the events & training newsletter), https://aisafety.info/ (including a RAG chatbot powered by the Alignment Research Dataset we maintain), https://alignment.dev/, and https://www.affi.ne/, plus lots of minor projects and a couple more major ones upcoming.

Nice to see this idea spreading! I bet Hamish would be happy to share the code we use for aisafety.world if that's helpful. There's a version on this GitHub, but I'm not certain that's the latest code. Drop by AED if you'd like to talk.

It's up for me. Not sure why the host would have gone down and then come back up; is it still down for you?