All of Eli Rose🔸's Comments + Replies

Anecdotally, the authors of this post have now persuaded nearly 10% of the students at their university to take a GWWC pledge (trial or full), while the pledge rate at most universities is well under 1%. After getting to know these authors, I believe this incredible success is due to their kindness, charisma, passion and integrity - not because the audience at their university is fundamentally different than at other universities.

Wow, that boggles my mind, especially as someone who attended a similar school for undergrad. Anywhere we can read ab...

I like the core point and think it's very important — though I don't really vibe with statements about calibration being actively dangerous.

I think EA culture can make it seem like being calibrated is the most important thing ever. But on the topic of "will my ambitious projects succeed?" it seems very difficult to be calibrated, and the effort is fairly cursed overall; it may be unhelpful to try super hard at this vs. just executing.

For example, I'm guessing that Norman Borlaug didn't feed a billion people primarily by being extremely well-calib...

Yeah, it's totally a contextual call how to make this point in any given conversation; it can be easy to get bogged down with irrelevant context.

I do think it's true that utilitarian thought tends to push one towards centralization and central planning, despite the bad track record here. It's worth engaging with thoughtful critiques of EA vibes on this front.

Salaries are the most basic way our economy does allocation, and one possible "EA government utopia" scenario is one where the government corrects market inefficiencies such that salaries perfectly tr...

I like the main point you're making.

However, I think "the government's version of 80,000 Hours" is a very command-economy vision. Command economies have a terrible track record, and if there were such a thing as an "EA world government" (which I would have many questions about regardless) I would strongly think it shouldn't try to plan and direct everyone's individual careers, and should instead leverage market forces like ~all successful large economies.

Thanks for writing this!!

To me, this risk seems equal to or greater than AI takeover risk. Historically the EA & AIS communities focused more on misalignment, but I'm not sure if that choice has held up.

Come 2027, I'd love for it to be the case that an order of magnitude more people are usefully working on this risk. I think it will be rough going for the first 50 people in this area; I expect there's a bunch more clarificatory and scoping work to do; this is virgin territory. We need some pioneers.

People with plans in this area should feel free to apply f...

I'm quite excited about EAs making videos about EA principles and their applications, and I think this is an impactful thing for people to explore. It seems quite possible to do in a way that doesn't compromise on idea fidelity; I think sincerity counts for quite a lot. In many cases I think videos and other content can be lighthearted / fun / unserious and still transmit the ideas well.

I think the vast majority of people making decisions about public policy or who to vote for either aren't ethically impartial, or they're "spotlighting", as you put it. I expect the kind of bracketing I'd endorse upon reflection to look pretty different from such decision-making.

But suppose I want to know which of two candidates to vote for, and I'd like to incorporate impartial ethics into that decision. What do I do then?

That said, maybe you're thinking of this point I mentioned to you on a call

Hmm, I don't recall this; another Eli perhaps? : )

(vibesy post)

People often want to be part of something bigger than themselves. At least for a lot of people, this is pre-theoretic. Personally, I've felt this since I was little: to spend my whole life satisfying the particular desires of the particular person I happened to be born into the body of seemed pointless and uninteresting.

I knew I wanted "something bigger" even when I was young (e.g. 13 years old). Around this age my dream was to be a novelist. This isn't a kind of desire people would generally call "altruistic," nor would my younger self have c...

...Like if you're contemplating running a fellowship program for AI interested people, and you have animals in your moral circle, you're going to have to build this botec that includes the probability an X% of the people you bring into the fellowship are not going to care about animals and likely, if they get a policy role, to pass policies that are really bad for them...

...I sort of suspect that only a handful of people are trying to do this, and I get why! I made a reasonably straightforward botec for calculating the benefits to birds of bird-safe glass, th

I just remembered Matthew Barnett's 2022 post My Current Thoughts on the risks from SETI which is a serious investigation into how to mitigate this exact scenario.

Enjoyed this post.

Maybe I'll speak from an AI safety perspective. The usual argument among EAs working on AI safety is:

- the future is large and plausibly contains much goodness

- today, we can plausibly do things to steer (in expectation) towards achieving this goodness and away from catastrophically losing it

- the invention of powerful AI is a super important leverage point for such steering

This is also the main argument motivating me — though I retain meaningful meta-uncertainty and am also interested in more commonsense motivations for AI safety work.

A lot of...

But lots of the interventions in 2. seem to also be helpful for getting things to go better for current farmed and wild animals, e.g. because they are aimed [at] avoiding a takeover of society by forces which don't care at all about morals

Presumably misaligned AIs are much less likely than humans to want to keep factory farming around, no? (I'd agree the case of wild animals is more complicated, if you're very uncertain or clueless whether their lives are good or bad.)

No one is dying of not reading Proust, but many people are leading hollower and shallower lives because the arts are so inaccessible.

Tangential to your main point, and preaching to the choir, but... why are "the arts" "inaccessible"? The Internet is a huge revolution in the democratization of art relative to most of human history, TV dramas are now much more complex and interesting than they have been in the past, A24 is pumping out tons of weird/interesting movies, and way more people are making interesting music and distributing it than before.

I think (and t...

Vince Gilligan (the Breaking Bad guy) has a new show Pluribus which is many things, but also illustrates an important principle, that being (not a spoiler I think since it happens in the first 10 minutes)...

If you are SETI and you get an extraterrestrial signal which seems to code for a DNA sequence...

DO NOT SYNTHESIZE THE DNA AND THEN INFECT A BUNCH OF RATS WITH IT JUST TO FIND OUT WHAT HAPPENS.

Just don't. Not a complicated decision. All you have to do is go from "I am going to synthesize the space sequence" to "nope" and look at that, x-risk averted. You're a hero. Incredible work.

One note: I think it would be easy for this post to be read as "EA should be all about AGI" or "EA is only for people who are focused on AGI."

I don't think that is or should be true. I think EA should be for people who care deeply about doing good, and who embrace the principles as a way of getting there. The empirics should be up for discussion.

Appreciated this a lot, agree with much of it.

I think EAs and aspiring EAs should try their hardest to incorporate every available piece of evidence about the world when deciding what to do and where to focus their efforts. For better or worse, this includes evidence about AI progress.

The list of important things to do under the "taking AI seriously" umbrella is very large, and the landscape is underexplored, so there will likely be more things for the list in due time. So EAs who are already working "in AI safety" shouldn't feel like their cause prioritiza...

Thanks for writing this, Arden! I strong upvoted.

I do my work at Open Phil — funding both AIS and EA capacity-building — because I'm motivated by EA. I started working on this in 2020, a time when there were way fewer concrete proposals for what to do about averting catastrophic AI risks & way fewer active workstreams. It felt like EA was necessary just to get people thinking about these issues. Now the catastrophic AI risks field is much larger and somewhat more developed, as you point out. And so much the better for the world!

But it seems so far ...

I enjoyed this, in particular:

the inner critic is actually a kind of ego trip

which resonates for me.

I personally experience my inner critic as something which often prevents me from "seeing the world clearly," including seeing good things I've done clearly, and seeing my action space clearly. It's odd that this is true, because you'd think the point of criticism is to check optimism and help us see things more clearly. And I find this to be very true of other people's criticism, and of some mental modes of critiquing my own plans.

But the distinct flavor of...

And placing some weight on the prediction that the curve will simply continue[1] seems like a useful heuristic / counterbalance (and has performed well).

"and has performed well" seems like a good crux to zoom in on; for which reference class of empirical trends is this true, and how true is it?

It's hard to disagree with "place some weight"; imo it always makes sense to have some prior that past trends will continue. The question is how much weight to place on this heuristic vs. more gears-level reasoning.

For a random example, observers in 2009 might h...

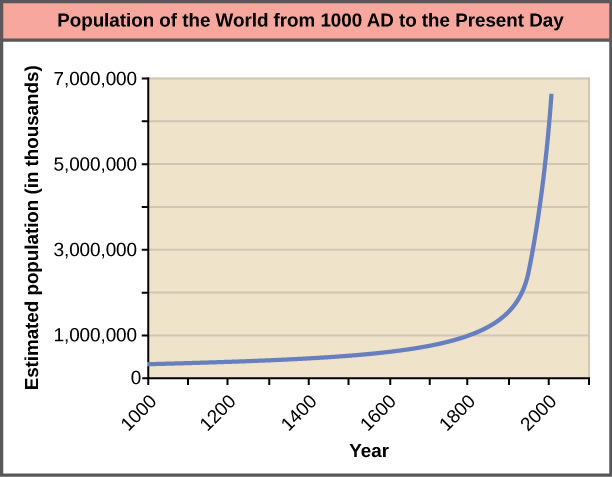

I'm skeptical of an "exponentials generally continue" prior which is supposed to apply super-generally. For example, here's a graph of world population since 1000 AD; it's an exponential, but actually there are good mechanistic reasons to think it won't continue along this trajectory. Do you think it's very likely to?

I think there's really something to this, as a critique of both EA intellectual culture & practice. Deep in our culture is a type of conservatism and a sense that if something is worth doing, one ought to be able to legibly "win a debate" against all critiques of it. I worry this chokes off innovative approaches, and misses out on the virtues of hits-based giving.

However, there are really a wide variety of activities that EAs get up to, and I think this post could be improved by deeper engagement with the many EA activities that don't fit the bednet mo...

I'm not an axiological realist, but it seems really helpful to have a term for that position. Upvoted.

Broadly, and off-topic-ally, I'm confused why moral philosophers don't always distinguish between axiology (valuations of states of the world) and morality (how one ought to behave). People seem to frequently talk past each other for lack of this distinction. For example, they object to valuing a really large number of moral patients (an axiological claim) on the grounds that doing so would be too demanding (a moral claim). I first learned these terms from https://slatestarcodex.com/2017/08/28/contra-askell-on-moral-offsets/ which I recommend.

However, some of the public stances he has taken make it difficult for grantmakers to associate themselves with him. Even if OP were otherwise very excited to fund AISC, it would be political suicide for them to do so. They can’t even get away with funding university clubs.

(I lead the GCR Capacity Building team at Open Phil and have evaluated AI Safety Camp for funding in the past.)

AISC leadership's involvement in Stop AI protests was not a factor in our no-fund decision (which was made before the post you link to).

For AI safety talent programs, I think it...

I edited this post on January 21, 2025, to reflect that we are continuing to fund stipends for graduate student organizers for non-EA groups, while ceasing to fund stipends for undergraduate student organizers. I think that paying grad students for their time is less unconventional than paying undergraduates, and also that their opportunity cost is higher on average. Ignoring this distinction was an oversight in the original post.

Hey! I lead the GCRCB team at Open Philanthropy, which as part of our portfolio funds "meta EA" stuff (e.g. CEA).

I like the high-level idea here (haven't thought through the details).

We're happy to receive proposals like this for media communicating EA ideas and practices. Feel free to apply here, or if you have a more early-stage idea, feel free to DM me on here with a short description — no need for polish — and I'll get back to you with a quick take about whether it's something we might be interested in. : )

(meta musing) The conjunction of the negations of a bunch of statements seems a bit doomed to get a lot of disagreement karma, sadly. Esp. if the statements being negated are "common beliefs" of people like the ones on this forum.

I agreed with some of these and disagreed with others, so I felt unable to agreevote. But I strongly appreciated the post overall so I strong-upvoted.

- Similar to those for our other roles, plus experience running a university group as an obvious one. I also think that extroversion and proactive communication are somewhat more important for these roles than for others.

- Going to punt on this one as I'm not quite sure what is meant by "systems."

- This is too big to summarize here, unfortunately.

- Check out "what kinds of qualities are you looking for in a hire" here. My sense is we index less on previous experience than many other organizations (though it's still important). Experience juggling many tasks, prioritizing, and syncing up with stakeholders jumps to mind. I have a hypothesis that consulting experience would be helpful for this role, but that's a bit conjectural.

- This is a bit TBD — happy to chat more further down the pipeline with any interested candidates.

- We look for this in work tests and in previous experience.

- The CB team continuously evaluates the track record of grants we've made when they're up for renewal, and this feeds into our sense of how good programs are overall. We also spend a lot of time keeping up with what's happening in CB and in x-risk generally, and this feeds into our picture of how well CB projects are working.

- Check out "what kinds of qualities are you looking for in a hire" here.

- Same answer as 2.

Empirically, in hiring rounds I've previously been involved in for my team at Open Phil, it has often seemed to be the case that if the top 1-3 candidates just vanished, we wouldn't make a hire. I've also observed hiring rounds that concluded with zero hires. So, basically I dispute the premise that the top applicants will be similar in terms of quality (as judged by OP).

I'm sympathetic to the take "that seems pretty weird." It might be that Open Phil is making a mistake here, e.g. by having too high a bar. My unconfident best guess would be that our bar h...

Thanks for the reply.

I think "don't work on climate change[1] if it would trade off against helping one currently identifiable person with a strong need" is a really bizarre/undesirable conclusion for a moral theory to come to, since if widely adopted it seems like this would lead to no one being left to work on climate change. The prospective climate change scientists would instead earn-to-give for AMF.

[1] Or bettering relations between countries to prevent war, or preventing the rise of a totalitarian regime, etc.

Moreover, it’s common to assume that efforts to reduce the risk of extinction might reduce it by one basis point—i.e., 1/10,000. So, multiplying through, we are talking about quite low probabilities. Of course, the probability that any particular poor child will die due to malaria may be very low as well, but the probability of making a difference is quite high. So, on a per-individual basis, which is what matters given contractualism, donating to AMF-like interventions looks good.

It seems like a society where everyone took contractualism to heart mi...

...So, it may be true that some x-risk-oriented interventions can help us all avoid a premature death due to a global catastrophe; maybe they can help ensure that many future people come into existence. But how strong is any individual's claim to your help to avoid an x-risk or to come into existence? Even if future people matter as much as present people (i.e., even if we assume that totalism is true), the answer is: Not strong at all, as you should discount it by the expected size of the benefit and you don’t aggregate benefits across persons. Since any giv

(I'm a trustee on the EV US board.)

Thanks for checking in. As Linch pointed out, we added Lincoln Quirk to the EV UK board in July (though he didn’t come through the open call). We also have several other candidates at various points in the recruitment pipeline, but we’ve put this a bit on the back burner, both because we wanted to resolve some strategic questions before adding people to the board and because we've had lower capacity than we expected.

Having said that, we were grateful for all the applications and nominations which we received in that in...

It is popular to hate on Swapcard, and yet Swapcard seems like the best available solution despite its flaws. Claude Code and other AI coding assistants are very good nowadays, and conceivably someone could just Claude Code a better Swapcard that maintained feature parity without the flaws.

Overall I'm guessing this would be too hard right now, but we do live in an age of mysteries and wonders. It gets easier every month. One reason for optimism is that the Swapcard team is probably not focused on the somewhat odd use case of EAGs in general (...