Vasco Grilo🔸

Bio

Participation 4

I am a generalist quantitative researcher. I am open to volunteering and paid work. I welcome suggestions for posts. You can give me feedback here (anonymously or not).

How others can help me

I am open to volunteering and paid work (I usually ask for 20 $/h). I welcome suggestions for posts. You can give me feedback here (anonymously or not).

How I can help others

I can help with career advice, prioritisation, and quantitative analyses.

Posts 229

Comments 2882

Topic contributions 40

Hi Angelina.

It is also really interesting and encouraging to hear that you think welfare in some cage-free systems is continuing to improve over time.

Relatedly, Schuck-Paim et al. (2021) "conducted a large meta-analysis of laying hen mortality in conventional cages, furnished cages and cage-free aviaries using data from 6040 commercial flocks and 176 million hens from 16 countries". Here is how they describe their findings in the abstract.

We show that except for conventional cages, mortality gradually drops as experience with each system builds up: since 2000, each year of experience with cage-free aviaries was associated with a 0.35–0.65% average drop in cumulative mortality, with no differences in mortality between caged and cage-free systems in more recent years. As management knowledge evolves and genetics are optimized, new producers transitioning to cage-free housing may experience even faster rates of decline. Our results speak against the notion that mortality is inherently higher in cage-free production and illustrate the importance of considering the degree of maturity of production systems in any investigations of farm animal health, behaviour and welfare.

Thanks for looking into this, Mia.

This relies on the premise that welfare is a linear scale where "less suffering" equals "adequate welfare."

I do not rely on the concept of "adequate welfare" in my analysis. I estimate welfare as "time with positive experiences"*"intensity of positive experiences" - ("time in annoying pain"*"intensity of annoying pain" + "time in hurtful pain"*"intensity of hurtful pain" + "time in disabling pain"*"intensity of disabling pain" + "time in excruciating pain"*"intensity of excruciating pain"). My assumptions for the pain intensities imply that each of the following individually neutralises 1 fully-healthy-chicken-day:

- 10 days of annoying pain, which I assume is 10 % as intense as hurtful pain.

- 1 day of hurtful pain, which I assume is as intense as fully healthy life.

- 2.40 h of disabling pain, which I assume is 10 times as intense as hurtful pain.

- 0.864 s of excruciating pain, which I assume is 100 k times as intense as hurtful pain.
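The equivalences above follow directly from the intensities. Below is a minimal sketch, assuming intensities are expressed relative to hurtful pain (= 1) and that a fully healthy day and positive experiences are both as intense as hurtful pain, as stated in this thread. The names `INTENSITY`, `welfare` and `days_to_neutralise_one_healthy_day` are hypothetical, chosen for illustration.

```python
# Hypothetical sketch of the welfare formula above. Intensities are relative
# to hurtful pain (= 1), following the assumptions stated in the comment.
HEALTHY = 1.0  # fully healthy life: assumed as intense as hurtful pain
INTENSITY = {
    "positive": 1.0,       # positive experiences: as intense as hurtful pain
    "annoying": 0.1,       # 10 % as intense as hurtful pain
    "hurtful": 1.0,
    "disabling": 10.0,     # 10 times as intense as hurtful pain
    "excruciating": 1e5,   # 100 k times as intense as hurtful pain
}
PAIN_LEVELS = ("annoying", "hurtful", "disabling", "excruciating")
SECONDS_PER_DAY = 24 * 60 * 60


def welfare(days: dict) -> float:
    """Welfare in fully-healthy-chicken-days, given days spent in each state."""
    positive = days.get("positive", 0.0) * INTENSITY["positive"]
    pain = sum(days.get(level, 0.0) * INTENSITY[level] for level in PAIN_LEVELS)
    return positive - pain


def days_to_neutralise_one_healthy_day(level: str) -> float:
    """Days in a given pain level that offset 1 fully-healthy-chicken-day."""
    return HEALTHY / INTENSITY[level]


print(f"annoying: {days_to_neutralise_one_healthy_day('annoying'):.3g} days")
print(f"hurtful: {days_to_neutralise_one_healthy_day('hurtful'):.3g} days")
print(f"disabling: {days_to_neutralise_one_healthy_day('disabling') * 24:.3g} h")
print(f"excruciating: {days_to_neutralise_one_healthy_day('excruciating') * SECONDS_PER_DAY:.3g} s")
```

For example, `welfare({"positive": 1.0, "disabling": 0.1})` should return 0, since 0.1 days (2.4 h) of disabling pain offsets 1 day of positive experiences.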

It [a furnished cage] provides a slightly less bad life, but it does not provide a life worth living.

"I estimate that hens in conventional (battery) and furnished (enriched) cages, and cage-free aviaries (barns) have a welfare of -1.79, -1.09, and -0.798 chicken-QALY/chicken-year". Values below 0 imply more suffering than happiness, and, in this sense, lives not worth living. At the same time, I estimate the welfare per chicken-year increases by 39.1 % (= (-1.09 - (-1.79))/1.79) when chickens go from conventional to furnished cages.

It [a furnished cage] offers almost no opportunity for positive experiences or pleasure (let alone basic needs), which are critical components of any welfare assessment. I’m interested to understand how you accounted for positive experiences?

I speculated chickens have positive experiences when they are awake and not experiencing hurtful, disabling, or excruciating pain. In addition, I guessed positive experiences are as intense as hurtful pain. WFI will publish a book this year with estimates for the duration of positive experiences for 4 levels of intensity. I am looking forward to these, and may use them to produce updated estimates for the welfare of layers.
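The assumption on the time with positive experiences can be written as a one-line rule. This is a hypothetical sketch: `positive_hours` is an illustrative name, the inputs are made-up hours per day rather than estimates for any housing system, and it additionally assumes pain only occurs while awake. Annoying pain is not subtracted, since the assumption lets it coexist with positive experiences.

```python
def positive_hours(awake: float, hurtful: float, disabling: float, excruciating: float) -> float:
    """Hours/day with positive experiences under the assumption above.

    Positive experiences fill the awake time not spent in hurtful, disabling
    or excruciating pain. Annoying pain is not subtracted. Pain is assumed to
    occur only while awake (an assumption of this sketch).
    """
    excluded = hurtful + disabling + excruciating
    if excluded > awake:
        raise ValueError("time in pain cannot exceed awake time")
    return awake - excluded


# Illustrative inputs only, not estimates for any housing system.
print(positive_hours(awake=14.0, hurtful=1.0, disabling=0.5, excruciating=0.0))
```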

You mention that furnished cages in the EU require specific resources, such as "at least 250 cm² of littered area per hen". This is incorrect. That specific requirement is for non-cage systems.

Great catch. I copy-pasted from the wrong place. I have corrected that sentence of the post to the following.

Each laying hen in a furnished cage in the EU must have “a nest”, “litter such that pecking and scratching are possible”, and “appropriate perches of at least 15 cm”.

Furnished cages must have “litter such that pecking and scratching are possible”, but no minimum area is specified.

Advocating for furnished cages would amount to welfare washing. It allows the industry to claim they have "reformed" the system by adding token resources that do not meaningfully improve the bird's subjective experience.

Very interesting. Does that mean you very much disagree with WFI's estimates implying that chickens experience significantly less pain in furnished than conventional cages (illustrated in the 2nd graph of my post)? They calculate there is 64.0 % (= (431 - 155)/431) less disabling pain per hen in furnished cages than in conventional cages. Maybe you think WFI's estimates only hold water under idealised conditions which are rarely present in practice? @cynthiaschuck, do you have any thoughts on how more realistic, generalisable studies would change the comparison between conventional and furnished cages?
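The 64.0 % reduction is a plain relative difference between the two WFI figures quoted above (kept in whatever units WFI reports them in). A minimal check of the arithmetic:

```python
# WFI estimates quoted above for disabling pain per hen (in WFI's units).
disabling_conventional = 431
disabling_furnished = 155

# Relative reduction from conventional to furnished cages.
reduction = (disabling_conventional - disabling_furnished) / disabling_conventional
print(f"{reduction:.1%}")
```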

Producers operate on long investment cycles. If we convince a producer in a developing market to invest millions in furnished cages today, we are not creating a stepping stone; we are cementing a ceiling for the next 20 years. Once that capital is sunk, the economic incentive to upgrade again to cage-free vanishes.

I agree. However, advocating for furnished cages could still make sense in regions which are only expected to become cage-free in more than 20 years, like some countries in Africa and Asia?

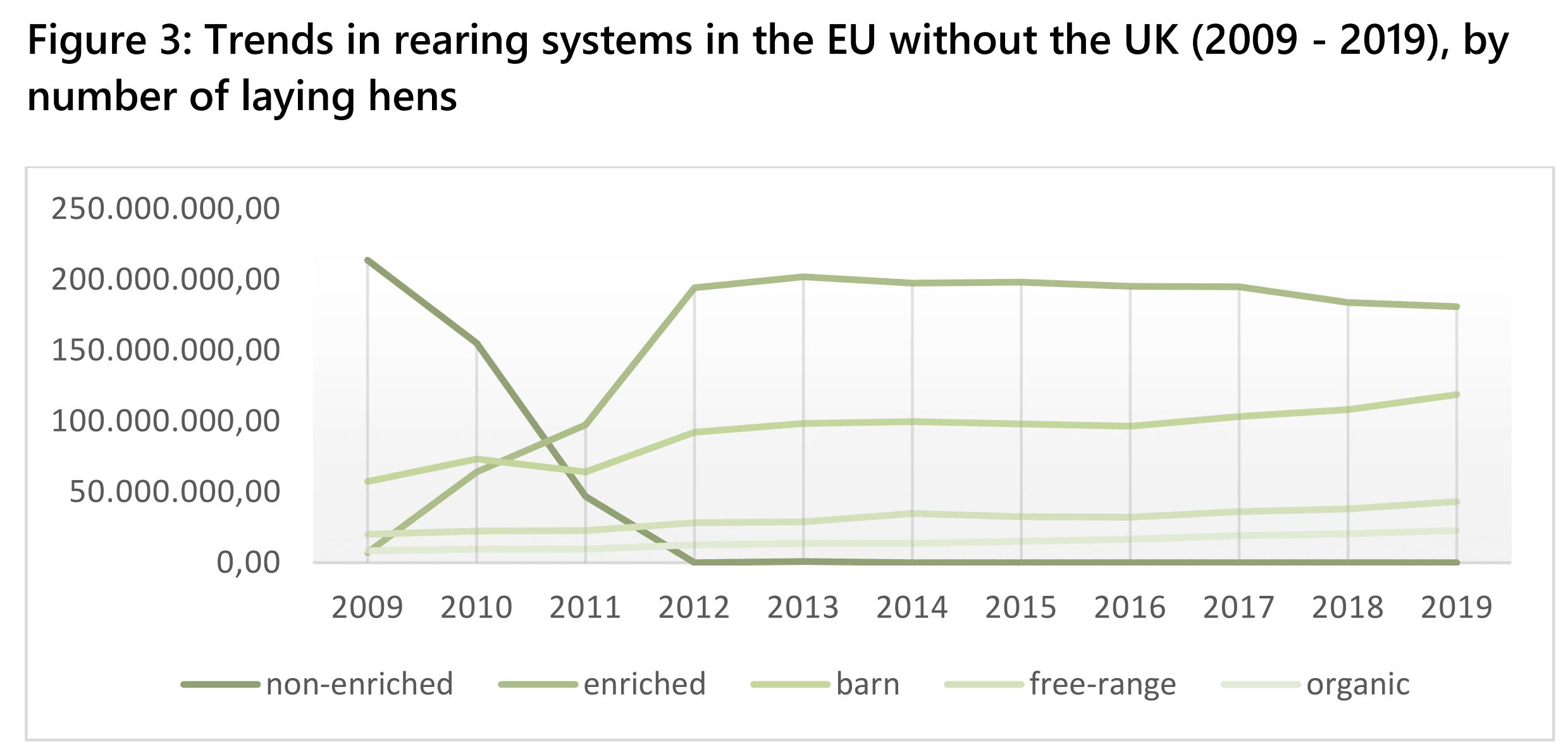

Figure 3 of the report you linked from Compassion in World Farming (CIWF) does show the transition from conventional to furnished cages happened in just 3 years in the EU, from 2009 to 2011. Very interesting. I did not know it happened so fast.

However, I do not think this implies keeping hens in cages in the EU will be banned from either 2032 or 2052 on. If this were the case, I would have expected bans in the EU to all start in 2032, whereas the timelines vary a lot: 1992 for Switzerland (not relevant for our discussion because it happened before 2012), 2020 for Austria, 2021 or earlier for Luxembourg, 2023 for Iceland, 2027 for the Czech Republic, 2028 for Wallonia (a region of Belgium), 2029 for Germany (2026 for non-exceptional cases) and Slovenia, 2030 for Slovakia, 2035 for Denmark, and 2036 for Flanders (a region of Belgium). Colony cages are still allowed in the Netherlands, and I am not aware of a ban on all cages having been announced there. Did I miss it?

In any case, do you think the EU's global influence is sufficiently strong to determine what to advocate for in Africa and Asia? I agree it would not make sense to advocate for furnished cages, for example, in China if this could undermine a ban on cages there from 2032 on (because the new furnished cages would still be very early in their lifetime then). Yet, I do not see situations like this coming to pass. I expect Africa and Asia to become cage-free at least 20 years after the EU does. So I believe there is time for new furnished cages there to operate for their full lifetime of 20 years.

Hi Max. I very much agree WFI's estimates alone will not be persuasive to the public. However, I wonder whether it would be possible to communicate the importance of nests, perches, and litter. The EU banned conventional cages, so at least some decision-makers and citizens in the EU must have preferred furnished cages over conventional cages. Maybe these were people who engaged more with the topic, and such a level of engagement cannot be reached through public campaigns targeting companies. In this case, as I say in the post, "there may still be room to advocate for political change in more authoritarian countries like China where companies are less subject to public pressure".

Thanks for looking into this, Joren.

You seem to be assuming that furnished cages are overwhelmingly built every 20 years, and were last built just before 2012. If this were the case, the vast majority of furnished cages would be renewed just before 2032 unless a ban ended furnished cages before then. So I would agree that a ban would then happen in 2032 or 2052. However, in reality, I expect furnished cages to be built or renewed gradually, not all at once across the EU in a few years. So I believe a ban on furnished cages does not have to start in very specific years 20 years apart like 2032 or 2052.
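To illustrate the difference between the two assumptions, here is a hypothetical sketch with made-up construction patterns (20 cages each, 20-year lifetime): one where all cages were built just before 2012, and one where 1 cage is built per year. `share_at_end_of_life` is an illustrative name, not from any source.

```python
# Hypothetical illustration: with a 20-year lifetime, clustered construction
# makes nearly all cages reach the end of their lifetime in the same few
# years, whereas gradual construction spreads this out.
LIFETIME = 20  # years


def share_at_end_of_life(build_years: list, year: int) -> float:
    """Share of cages that have completed their lifetime by `year`."""
    done = [b for b in build_years if b + LIFETIME <= year]
    return len(done) / len(build_years)


# Both construction patterns are made up for illustration.
clustered = [2010, 2011] * 10       # all built just before 2012
gradual = list(range(2000, 2020))   # one cage built per year

for year in (2026, 2029, 2032):
    print(year,
          share_at_end_of_life(clustered, year),
          share_at_end_of_life(gradual, year))
```

Under clustered construction, the share jumps from 0 to 1 within 2 years, so a ban timed just after that jump minimises losses. Under gradual construction, the share rises by 5 percentage points per year, so there is no special year for a ban to start.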

In any case, I do not think it would make sense to advocate for furnished cages in the EU. Only 38.2 % of layers were in cages in the EU in 2024. So there is already significant momentum for cage-free.

I believe advocating for furnished cages in Africa and Asia would be better (although I am not confident it would be a good idea). The timeline I suggested above of 15 years until full implementation of furnished cages would allow 75 % (= 15/20) of conventional cages to operate for a lifetime of 20 years. The owners of the remaining 25 % could be compensated for up to 5 years (= 20 - 15) of fixed costs that did not get amortised. Moreover, rigid timelines applying to a whole country would only arise from political work. For work targeting companies, there could be different timelines, and therefore less need for some producers to end the operations of farms before they operate for their whole lifetime. Companies transitioning to furnished cages earlier could source their eggs from producers that transitioned earlier because they started with older conventional cages. In addition, they could source eggs produced in furnished cages in the EU.
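The 75 % and 5-year figures can be reproduced under one assumption not stated explicitly above: that the ages of existing conventional cages are spread evenly over their 20-year lifetime. A minimal sketch under that hypothetical assumption:

```python
# Sketch of the 15-year timeline, assuming (hypothetically) that the ages of
# existing conventional cages are spread evenly over their 20-year lifetime.
LIFETIME = 20    # years
TRANSITION = 15  # years until full implementation of furnished cages

ages = range(LIFETIME)  # one cage of each age 0, 1, ..., 19 at announcement

# A cage completes its lifetime before the deadline if age + 15 >= 20.
share_completing = sum(1 for a in ages if a + TRANSITION >= LIFETIME) / LIFETIME

# Younger cages are retired early; years of fixed costs left unamortised.
unamortised_years = [LIFETIME - (a + TRANSITION) for a in ages if a + TRANSITION < LIFETIME]

print(share_completing)
print(max(unamortised_years))
```

Only the youngest cages (built in the 5 years before the announcement) are retired early, and the newest of them loses the full 5 years.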

Thanks, Nick. The background story may be funny. I was leaving home to meet my brother and grandpa for dinner. I was going to throw a cardboard box into the bin, but it was too big to fit. I was in a bit of a rush, so I decided to leave it next to the bin instead of smashing it. This made me wonder what the impact on soil animals of not putting the box into the bin would be, neglecting effects on my time (which was the real driver of my decision). I realised the people taking care of the garbage would probably smash it themselves, and therefore spend a tiny bit more energy. This got me into very roughly calculating how many nematode-years are affected by increasing food consumption by 1 kcal.

If I recall correctly, here is how I did the Fermi estimate on my drive to my grandpa's house (do try this at home, not while driving!). 1 g of rice has 4 kcal, so 1 kg of rice has 4 k kcal (= 10^3*4). I assumed rice has yields of 3 t/ha/year (5 t/ha/year would have been better), i.e. 0.3 kg/m^2/year (= 3*10^3/10^4). So I got an energy production for rice of 1.2 k kcal/m^2/year (= 4*10^3*0.3), which I think I wrongly converted to 10^-4 instead of 10^-3 m^2-year/kcal (= 1/(1.2*10^3)). I supposed increasing the area of rice fields by 1 m^2-year changed the living time of nematodes by 3 M nematode-years. So I got a change in the living time of nematodes per kcal of 300 nematode-years (= 3*10^6*10^-4). Using the correct previous result would have led to 3 k nematode-years (= 3*10^6*10^-3), which is inside the range of 640 to 13.1 k I obtained above.
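The Fermi estimate can be written as a short function of the rice yield. This sketch only uses the rough guesses from the anecdote (4 kcal/g, 3 M nematode-years per m^2-year), and `nematode_years_per_kcal` is a hypothetical name. Without rounding 1/(1.2*10^3) to 10^-3, 3 t/ha/year gives about 2.5 k nematode-years/kcal, and 5 t/ha/year about 1.5 k; both are still inside the 640 to 13.1 k range.

```python
# Fermi estimate from the anecdote above. Every input is one of the rough
# guesses in the text, not measured data.
KCAL_PER_KG_RICE = 4 * 10**3            # 4 kcal/g
NEMATODE_YEARS_PER_M2_YEAR = 3 * 10**6  # guessed change per m^2-year of rice field


def nematode_years_per_kcal(yield_t_per_ha_year: float) -> float:
    yield_kg_per_m2_year = yield_t_per_ha_year * 10**3 / 10**4  # t/ha -> kg/m^2
    kcal_per_m2_year = KCAL_PER_KG_RICE * yield_kg_per_m2_year
    m2_years_per_kcal = 1 / kcal_per_m2_year
    return NEMATODE_YEARS_PER_M2_YEAR * m2_years_per_kcal


for yield_t in (3, 5):
    print(yield_t, round(nematode_years_per_kcal(yield_t)))
```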

Thanks for the relevant points, James.

Infrastructure lock-in

Changing from conventional to furnished cages, and then from these to cage-free aviaries, is more costly than changing directly from conventional cages to cage-free aviaries. However, the direct change requires a greater initial investment, and has a greater potential to decrease revenue, because it increases the cost of eggs 4.02 (= 1/0.249) times as much as the change from conventional to furnished cages. So I think having furnished cages as an intermediate step may, at least in some cases, derisk the overall change. Two changes would still not make sense if the time between them were short. However, even if furnished cages are fully banned in the EU from 2032 on, which I guess is optimistic, there would still have been 20 years (2012 to 2032) with battery cages fully banned, but furnished cages allowed in the EU. Assuming there are still 30 years until 90 % of layers are cage-free, some farms will only make the final transition in 30 years. This could mean 15 years until full implementation of furnished cages, and 15 years from then until full implementation of cage-free aviaries.
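The two numbers in the argument above are simple to reproduce. This sketch takes the 24.9 % figure (furnished cages raise the cost of eggs by 24.9 % as much as cage-free aviaries, both relative to conventional cages) and the even 15 + 15 split of the assumed 30 years.

```python
# Relative to conventional cages, furnished cages raise the cost of eggs by
# 24.9 % as much as cage-free aviaries do (figure quoted in the thread).
furnished_share_of_aviary_cost_increase = 0.249

# How many times larger the cost increase of the direct change is.
direct_vs_furnished = 1 / furnished_share_of_aviary_cost_increase
print(f"{direct_vs_furnished:.2f}")

# Even split of the assumed 30 years until 90 % of layers are cage-free
# between the two transitions (the example given in the text).
years_remaining = 30
to_furnished = to_aviary = years_remaining / 2
print(to_furnished, to_aviary)
```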

The public won't be excited/that supportive of this

I discuss this a bit in the 1st paragraph of the discussion. In addition, I wonder whether the significant reduction in the time in pain as assessed by WFI could be used to get people enthusiastic about having furnished instead of conventional cages.

Advocacy cost (likely) doesn't scale linearly with producer cost

My intuition is that the probability of securing a welfare reform is a sigmoid function (S-curve) of "advocacy spending"/"increase in cost". If so, and furnished cages increase the cost of eggs 24.9 % as much as cage-free aviaries relative to conventional cages, advocating for furnished cages could increase the probability of securing a welfare reform a lot for cases where advocating for cage-free aviaries results in a probability which is still at the bottom of the sigmoid.
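One way to make the sigmoid intuition concrete is a logistic function of "advocacy spending"/"increase in cost". This is a hypothetical sketch: the logistic form, `midpoint`, `steepness` and the budget are all illustrative choices, not estimates, and `p_reform` is an invented name. It only shows the mechanism: with the same budget, dividing the cost increase by 1/0.249 can move an ask from the bottom of the S-curve towards its middle.

```python
import math


def p_reform(spending: float, cost_increase: float,
             midpoint: float = 1.0, steepness: float = 4.0) -> float:
    """Hypothetical S-curve: probability of securing a reform as a logistic
    function of advocacy spending per unit increase in the cost of eggs.
    `midpoint` and `steepness` are illustrative, not estimated."""
    ratio = spending / cost_increase
    return 1 / (1 + math.exp(-steepness * (ratio - midpoint)))


budget = 0.25  # same hypothetical advocacy budget for both asks
aviary = p_reform(budget, cost_increase=1.0)       # aviary cost increase normalised to 1
furnished = p_reform(budget, cost_increase=0.249)  # 24.9 % of the aviary increase
print(f"aviary: {aviary:.3f}, furnished: {furnished:.3f}")
```

With these made-up parameters, the cage-free ask sits near the bottom of the curve (about 5 %) while the furnished-cage ask is near the middle (about 50 %); other parameters would give different numbers.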

Verification is harder

I agree verifying furnished cages is harder than verifying cage-free aviaries. Do you know the extent to which this was a challenge in the context of the EU's ban on conventional cages? I assume enforcement is usually more difficult in other regions. On the other hand, they already have the EU as a model to follow.

Thanks for the very relevant sources you have been sharing too. I strongly upvoted your initial comment because I have found this thread valuable.

The report you linked exploring the consequences of banning enriched cages in the Netherlands (here is an English translation) says conventional cages had fully depreciated in 2012.

2012 is when the ban on conventional cages in the EU started. So the above supports your take that cages will only be banned when they are near the end of their lifetime. However, I do not think this means a ban on cages in the EU will start, for example, in either 2032 or 2047 (= 2032 + 15). I think it just means the ban will have to be announced 15 years before it enters into force such that the economic loss is minimised. This is in agreement with the report above.

As a result, if the EU announces a ban on furnished cages in 2026, I guess it will only start applying to all cages (instead of just new cages) in 2041 (= 2026 + 15) or so. Here is an estimate of the economic loss from shortening the transition period. From Table 1.1 of van Horne and Bondt (2023), the housing cost for furnished cages is 3.39 2021-€/hen, 4.84 $/hen (= 3.39*1.22*1.17). For hens with a lifespan of 70 weeks (WFI assumes "60 to 80 weeks for all systems"), 1.34 hen-years (= 70*7/365.25), the housing cost of furnished cages is 3.61 $/hen-year (= 4.84/1.34). I estimate there were 149 M hens in furnished cages in the EU in 2024. So I think renewing all furnished cages in the EU would cost 538 M$ (= 3.61*149*10^6), 1.20 $/citizen (= 538*10^6/(450*10^6)). I speculate 50 % of the value can be recovered via exporting the cages to countries outside the EU. Consequently, for cages fully depreciating in 15 years, the cost of shortening the transition period by 1 year would be 17.9 M$ (= 538*10^6*(1 - 0.50)/15), 0.0398 $/citizen (= 17.9*10^6/(450*10^6)).
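The chain of calculations above can be reproduced as follows, with the figures quoted in the comment (the factors 1.22 and 1.17 are the ones used in the text to go from 2021-€ to $, and the 50 % export recovery is speculative). Because the text rounds intermediate values, full precision gives about 537 M$ and 1.19 $/citizen for renewing all furnished cages, instead of 538 M$ and 1.20 $/citizen; the cost of shortening the transition by 1 year stays at about 17.9 M$.

```python
# Reproduces the arithmetic above with full precision.
housing_cost_eur2021_per_hen = 3.39  # van Horne and Bondt (2023), Table 1.1
conversion_factors = 1.22 * 1.17     # factors used in the text (2021-€ -> $)
lifespan_weeks = 70
hens_in_furnished_cages = 149e6      # EU, 2024 (estimate from the text)
eu_citizens = 450e6
recovered_by_export = 0.50           # speculative
depreciation_years = 15

cost_per_hen = housing_cost_eur2021_per_hen * conversion_factors  # $/hen
lifespan_years = lifespan_weeks * 7 / 365.25                      # hen-years
cost_per_hen_year = cost_per_hen / lifespan_years                 # $/hen-year
renewal_cost = cost_per_hen_year * hens_in_furnished_cages        # $
cost_per_year_shorter = renewal_cost * (1 - recovered_by_export) / depreciation_years

print(f"{cost_per_hen:.2f} $/hen, {cost_per_hen_year:.2f} $/hen-year")
print(f"renewal: {renewal_cost / 1e6:.0f} M$, {renewal_cost / eu_citizens:.2f} $/citizen")
print(f"1 year shorter: {cost_per_year_shorter / 1e6:.1f} M$, "
      f"{cost_per_year_shorter / eu_citizens:.4f} $/citizen")
```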

As a side note, the calculations for the Netherlands did not account for the possibility of exporting the cages.