Cross-posted to The Good blog

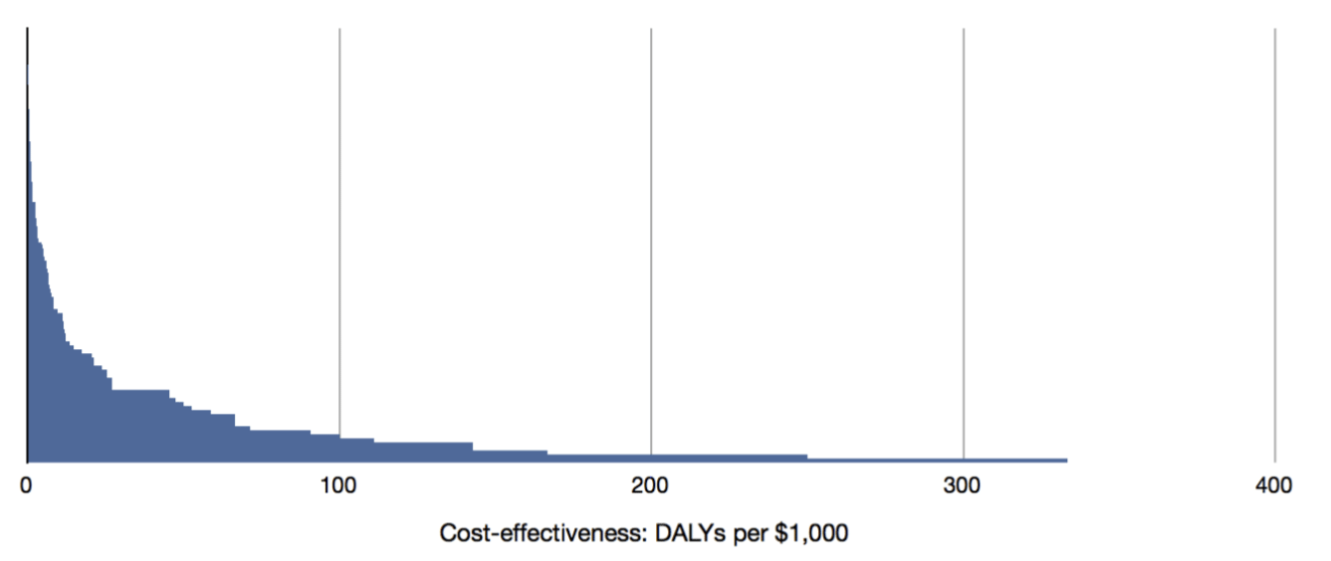

There’s a famous graph showing the distribution of DALYs per dollar of global health interventions.

You can see that the modal intervention has no effect, the vast majority aren’t very good and some are amazing. Because of this heavy-tailed distribution of interventions, it’s worthwhile spending a relatively large fraction of your resources on finding the best interventions, rather than picking an intervention by some other method, for instance the one you feel most personally compelled by. We can think of these different ways of picking which intervention to focus on in a Bayesian way. The prior is the probability distribution that corresponds to the graph above. We can then update based on the strength of the signal we get from the method we use to pick an intervention. When we use gut feel, maybe this isn’t totally useless (although maybe it is), and the probability distribution of DALYs per dollar of the intervention we’ve picked is shifted right a bit. If we have 600 well-designed randomised controlled trials (RCTs) on a variety of scales in a variety of contexts, we’ve received a very strong signal about the intervention we’ve picked, and the probability mass is going to be clustered around the value that the RCTs have estimated.
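A rough simulation can make the point about signal strength concrete. This is a minimal sketch under assumptions that are not from the post: each candidate intervention's true cost-effectiveness is lognormal (a stand-in for the heavy-tailed graph), and each way of choosing gives a noisy reading of its log-value, with the noise level standing in for how informative the method is. The function name `mean_value_of_pick` and all parameter values are hypothetical.

```python
import math
import random


def mean_value_of_pick(noise_sd, n_candidates=20, trials=2000,
                       prior_sd=1.5, seed=0):
    """Average TRUE value of the intervention picked using a noisy signal.

    Illustrative assumptions: log-value ~ Normal(0, prior_sd), and the
    observed signal is log-value plus Normal(0, noise_sd) noise.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        log_values = [rng.gauss(0.0, prior_sd) for _ in range(n_candidates)]
        # Noisy estimate of each candidate's (log) cost-effectiveness.
        signals = [lv + rng.gauss(0.0, noise_sd) for lv in log_values]
        # Pick whichever candidate LOOKS best, then record its true value.
        best = max(range(n_candidates), key=lambda i: signals[i])
        total += math.exp(log_values[best])
    return total / trials


weak = mean_value_of_pick(noise_sd=10.0)   # "gut feel": almost pure noise
strong = mean_value_of_pick(noise_sd=0.1)  # "many RCTs": a very precise signal
print(f"weak signal: {weak:.1f}, strong signal: {strong:.1f}")
```

With these made-up parameters the precise signal should pick interventions worth several times more on average than the near-pure-noise signal; the exact numbers depend entirely on the assumed prior and noise.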

The longtermist claim is that because humans could in theory live for hundreds of millions or billions of years, and we have the potential to get the risk of extinction very nearly to 0, the biggest effects of our actions are almost all in how they affect the far future. Therefore, if we can find a way to predictably improve the far future, this is likely to be the best thing we can do, certainly from a utilitarian perspective. Longtermists often advocate reducing extinction risk as the best way to predictably improve the far future. Two things stand in favour of extinction risk reduction as a cause area. Firstly, there seem to be very tractable things we can do to reduce the risk of extinction. It seems very clear, for instance, that dramatically improved vaccine production would reduce the risk of extinction from a novel pathogen. Secondly, once we’ve avoided extinction, we have strong reason to believe that the effect of our actions won’t just eventually wash out. It seems plausible that we live in a ‘time of perils’ - a relatively brief period of human history in which we have the capacity to create technology that could kill us all, but before we’ve reached the stars and built a civilisation large enough that no single threat can kill us all. If this is true, then actions we take to avoid the threat of extinction now will echo into eternity as humanity lives on for billions of years. This contrasts with trajectory changes - changes in the value of the civilisation humanity creates. We can be much less confident that changes we make today will still affect humanity in the far future, and it’s much less clear what sorts of things we could do to steer the future of humanity in the right direction.

The distribution we’re drawing from

For global health we know empirically roughly what the distribution of DALYs per dollar is (at least for the first-order and some second-order effects of our interventions). More specifically, we know there’s very little probability mass below 0 - it’s very unlikely that the first-order effects of a global health intervention do more harm than good. However, we have no such guarantee for interventions aimed at improving the long-term future. For all we know, the median intervention could substantially reduce the expected value of the long-term future. When choosing between cause areas, the more negative the mean value of an intervention in a cause area, the more evidence is needed to choose that cause area. Our belief about the expected value of our intervention is a weighted average of the value given by our prior and the value given by our evidence, weighted by the strength of our prior and the strength of our evidence respectively. As we gather more evidence, our estimate is weighted more towards the number given by our evidence rather than the prior. Therefore, the further to the left of the number line our initial estimate is, the more evidence we need that our intervention is good in expectation.
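The weighted-average rule described above corresponds to the standard normal-normal Bayesian update, in which prior and evidence are each weighted by their precision (one over their variance). A minimal sketch, assuming normally distributed prior and evidence and using hypothetical function names, shows that the evidence needed to pull the posterior mean above 0 grows in proportion to how far below 0 the prior mean starts:

```python
def posterior_mean(prior_mean, prior_precision, evidence_mean, evidence_precision):
    """Precision-weighted average of prior and evidence (normal-normal update)."""
    total = prior_precision + evidence_precision
    return (prior_precision * prior_mean + evidence_precision * evidence_mean) / total


def evidence_needed(prior_mean, prior_precision, evidence_mean):
    """Evidence precision at which the posterior mean reaches 0.

    Assumes the evidence points to a positive value (evidence_mean > 0).
    """
    if evidence_mean <= 0:
        raise ValueError("evidence_mean must be positive")
    if prior_mean >= 0:
        return 0.0
    # Solve prior_precision * prior_mean + e * evidence_mean = 0 for e.
    return -prior_precision * prior_mean / evidence_mean


for prior in (-0.5, -1.0, -2.0):
    # Needed evidence precision: 0.5, 1.0, 2.0 respectively.
    print(prior, evidence_needed(prior, prior_precision=1.0, evidence_mean=1.0))
```

Here "strength" is read as precision; other ways of formalising the strength of a prior or of evidence would give different numbers but the same qualitative pattern.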

The claim that an existential risk intervention makes is that the expected value of the future conditional on humanity avoiding extinction via a given risk is higher - potentially much higher - than the expected value of the future had we not taken that intervention. The key assumption is that the distribution of the value of the future conditional on avoiding a given existential risk has a positive mean.

My claim is that this assumption is not currently well justified, and that, properly considered, it should make us much more radically uncertain about the value of working on pandemic risk from a longtermist perspective, such that x-risk interventions focused on pandemic prevention and climate change no longer look like they very predictably make the future better.

There are 3 key reasons why I think this.

- Avoiding existential risks from these sources doesn’t update me very much about the expected value of the future

- There are good reasons to think that the expected value of the future could be negative

- It’s very unclear to me that we can reduce existential risk to a very low level once we’ve avoided these risks and others like them

Why that distribution needn’t have a positive mean

Firstly, I think the update on how good the average person's life will be, based on avoiding existential risk from these sources, should be quite small, although somewhat positive. Unlike, say, Anthropic producing a provably aligned AGI, creating really good PPE and banning gain-of-function research doesn’t seem to have any real direct positive effect on the expected value of the far future. It does, I think, have a small effect on the expected value, in that it should update us towards thinking that humanity will be able to get around coordination problems in the future and will be wise enough to create a good society. However, if most of this is driven by a relatively small group of actors working mostly independently, then this update should be quite small. The update should be stronger if we successfully combat climate change, given that this is a much larger society-wide effort. Whether this positive update, based on changing beliefs about humanity's capacity for wisdom and public goods provision, is warranted will depend on the decision theory you subscribe to, but that is beyond the scope of this post.
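The size of this update can be sketched with Bayes' rule in odds form: evidence only moves our beliefs to the extent that it is more likely under one hypothesis than another (its likelihood ratio). The hypothesis, the prior and the likelihood ratios below are purely illustrative assumptions, chosen to mirror the contrast between a few independent actors succeeding (weakly diagnostic, ratio near 1) and a society-wide effort succeeding (more diagnostic):

```python
def update(prior_prob, likelihood_ratio):
    """Posterior probability from Bayes' rule in odds form."""
    prior_odds = prior_prob / (1.0 - prior_prob)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


# Hypothetical hypothesis H: "humanity will handle future coordination problems well".
# The prior and both likelihood ratios are illustrative assumptions, not estimates.
prior = 0.5
after_small_group = update(prior, 1.1)    # success driven by a few independent actors
after_society_wide = update(prior, 2.0)   # success requiring a society-wide effort
print(f"{after_small_group:.3f} {after_society_wide:.3f}")  # 0.524 0.667
```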

Secondly, I think there are good reasons to think that the expected value of the future won’t be positive conditional on avoiding existential risks from bioweapons, climate change and nuclear war. I don’t, for instance, think avoiding them gives us much stronger reasons to think that we’ll align AI or that digital minds will be treated well.

I think the possibility of digital minds is the strongest reason to think that there’s a large amount of probability mass in the negative half of the number line in the distribution over the value of the future. Both factory farming and chattel slavery have unfortunate parallels to digital minds. Like animals in factory farms and chattel slaves, digital minds can be used for production, so there is an incentive not to care about their welfare, and we should expect moneyed interests to fight hard against considerations of their wellbeing. Furthermore, as they are a factor of production, the incentive is to minimise the costs they impose, and it seems reasonable to think a priori that this decreases their wellbeing.

Finally, the world conditional on getting aligned AI could easily be terrible insofar as it increases the power of small numbers of people, which has historically gone poorly. This argument generalises to any technology we invent that greatly reduces the cost of political repression.

I’ve focused on arguments related to digital minds and powerful AI systems because these worlds seem likely to be the largest, and so their expected sign is the most important. I think they’re plausibly large enough to dominate all other possible worlds. The amount of consciousness we should expect depends on how much matter and energy can be converted into consciousness, which seems likely to be dominated by the degree to which we can use the vast resources outside our solar system, and those worlds seem most likely in cases where we have digital minds and/or very powerful AI systems.
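The claim that the largest worlds dominate can be illustrated with a probability-weighted sum in which each world's contribution scales with its size. The worlds and every number below are hypothetical, chosen only to show that a low-probability but vastly larger world can set the sign of the total:

```python
# Hypothetical worlds: (probability, size in arbitrary units of matter and
# energy turned into minds, average value per unit). Purely illustrative.
WORLDS = {
    "Earth-bound civilisation": (0.9, 1.0, 1.0),
    "space-faring with digital minds": (0.1, 1e6, -0.01),
}


def contributions(worlds):
    """Each world's contribution to expected value: probability * size * value."""
    return {name: p * size * value for name, (p, size, value) in worlds.items()}


def expected_value(worlds):
    """Sum of all worlds' contributions."""
    return sum(contributions(worlds).values())


ev = expected_value(WORLDS)
print(contributions(WORLDS))
print(f"expected value: {ev:.1f}")  # negative, although the likelier world is good
```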

I think there are two main counterarguments put forward to support the claim that we have strong reason to think the expected value of the future is strongly positive, and therefore that we should support existential risk reduction.

- Life for humans has got dramatically better over the last 200 years - we should expect that trend to continue

- Humans are all trying to make life better for themselves

A very plausible argument is that the trend of humanity getting better across a broad range of measures over the last 200 years should continue. Perhaps we should expect that the value of the future at each point in time is a distribution centred on the trend line, with the variance of that distribution increasing over time. I think the strongest counterargument to this is that the technological advances enabled by our progress have also let us do horrific things - chattel slavery on a mass scale and factory farming both seem much more likely in worlds with substantial increases in societal and technological complexity. Furthermore, these horrors are structural. They are externalities - neither slaves nor animals are represented in the dual decision-making mechanisms of states and markets, and in optimising systems, parameters that are not optimised for are taken to extreme values.
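The trend-extrapolation model above can be written down as a normal distribution whose mean follows a linear trend and whose spread widens with time. This sketch assumes that form and uses made-up parameters; it computes the probability that the value at time t is below 0 under two hypothetical assumptions about how fast the spread grows:

```python
import math


def normal_cdf(z):
    """Standard normal CDF via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def prob_negative(t, v0=1.0, drift=0.5, sigma=1.0, spread="sqrt"):
    """P(value at time t < 0) if value ~ Normal(v0 + drift*t, sd(t)), for t > 0.

    spread="sqrt":   sd = sigma*sqrt(t), spread grows like a random walk
    spread="linear": sd = sigma*t, spread grows linearly, like the trend itself
    Both growth rules and all default parameters are illustrative assumptions.
    """
    mean = v0 + drift * t
    if spread == "sqrt":
        sd = sigma * math.sqrt(t)
    elif spread == "linear":
        sd = sigma * t
    else:
        raise ValueError("spread must be 'sqrt' or 'linear'")
    return normal_cdf(-mean / sd)


for t in (1, 10, 100):
    print(t, prob_negative(t, spread="sqrt"), prob_negative(t, spread="linear"))
```

Under the random-walk spread the chance of a negative value shrinks over time because the trend outpaces the spread; under linear spread it rises towards a constant, so this kind of extrapolation is sensitive to how quickly uncertainty is assumed to grow.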

A second argument is that humans are all trying to make life better for themselves, and given that we’ve been successful at this in the past, this gives us reason to think we’ll be successful in future. This can be thought of as a statement of the first fundamental theorem of welfare economics. This theorem says that the equilibrium reached by a competitive market, a system in which each agent is only trying to maximise their own utility, is Pareto optimal: an outcome in which no agent can be made better off without making at least one other agent worse off. This lens gives two potential reasons why longtermist interventions might have low expected value without other evidence. This theorem doesn’t hold when there are externalities, and I think we have reason to think that there will be major externalities in the future.
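The externality point can be made concrete with a toy market. In this sketch (a hypothetical linear-quadratic example, not from the post), a producer picks an output level to maximise their own profit, while each unit also harms a party with no say in the decision, standing in for slaves, farmed animals or digital minds. The private optimum ignores that harm, so it overshoots the welfare-maximising level, and total welfare can even turn negative:

```python
def private_optimum(price, cost_slope=1.0):
    """Output maximising profit = price*q - cost_slope*q**2/2, i.e. q = price/cost_slope."""
    return price / cost_slope


def social_optimum(price, harm_per_unit, cost_slope=1.0):
    """Output maximising profit minus harm_per_unit*q borne by an unrepresented party."""
    return max(0.0, (price - harm_per_unit) / cost_slope)


def total_welfare(q, price, harm_per_unit, cost_slope=1.0):
    """Producer's profit plus the (negative) welfare of the unrepresented party."""
    return price * q - cost_slope * q ** 2 / 2 - harm_per_unit * q


PRICE = 10.0  # hypothetical market price
for harm in (4.0, 12.0):
    q_private = private_optimum(PRICE)
    q_social = social_optimum(PRICE, harm)
    print(harm, total_welfare(q_private, PRICE, harm), total_welfare(q_social, PRICE, harm))
```

With `harm=4.0` the market outcome gives total welfare 10.0 against 18.0 at the social optimum; with `harm=12.0` the market outcome is -70.0, worse than producing nothing.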

Radical uncertainty about the far future

The possibility of aliens nearby (on cosmic scales), the Fermi paradox and the simulation hypothesis add some radical uncertainty to the future. The most important worlds are the ones in which humanity has reached the stars, both because these will have the most value in them at any given point and because getting to the point where existential risk is extremely low seems to require humanity to diversify beyond Earth. In these worlds, it seems reasonable that human interaction with aliens becomes relevant. I think there are two reasons to think that this pushes the expected value of the future down. Firstly, it implies that humans would be using matter and energy that would otherwise be used by another civilisation. I think we should be extremely uncertain about whether these civilisations are likely to use that matter and energy better than a human civilisation in that position, although we should probably expect the two to be at least a little correlated, given that both the human and the alien civilisation have passed through at least 3 great filters. This should push our estimate of the expected value of x-risk reduction towards 0 from both the left and the right (i.e. positive estimates should be decreased and negative estimates increased).

Secondly, there are the interactions between the two civilisations. It seems plausible that these create great value through trade or great disvalue through conflict. I have no position on how these considerations play out, other than that they should increase the variance of the expected value of the future, that it seems very hard to decrease that variance, and that in my mind this again pushes the expected value towards 0 from both directions on the number line.
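The first of these reasons, that a human civilisation may displace an alien one, can be sketched with a simple replaceability model. The assumptions here are illustrative rather than the author's: the value per unit of resources of the human civilisation and of the alien civilisation it displaces are jointly normal with mean 0, equal variance and correlation `rho`, so the expected alien value given the human value is `rho` times the human value; with probability `p_alien_use` the aliens would otherwise have used the resources, and otherwise the resources go unused.

```python
def counterfactual_value(v_human, p_alien_use, rho):
    """Expected counterfactual value of humanity using some resources.

    Illustrative assumptions: human and alien value per unit of resources are
    jointly normal with mean 0, equal variance and correlation rho, so
    E[V_alien | V_human] = rho * V_human. With probability p_alien_use the
    aliens would otherwise have used the resources; otherwise they go unused.
    """
    expected_alien_value = p_alien_use * rho * v_human
    return v_human - expected_alien_value


for v in (10.0, -10.0):
    print(v, counterfactual_value(v, p_alien_use=0.5, rho=0.6))
```

Because the factor `1 - p_alien_use * rho` multiplies positive and negative values alike, it shrinks estimates of either sign towards 0.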

The simulation hypothesis argues that because it seems possible, even likely, that humans will create simulations of human societies in the future that are indistinguishable from reality for the simulated beings, we should take seriously the possibility that we’re in a simulation. I’m extremely uncertain about how seriously to take this argument, but it seems like it pushes the expected value of most longtermist interventions towards 0 because it seems much less likely that humanity will exist for a very very long period of time.

For most of the value to lie millions or billions of years in the future, the discount rate has to be extremely low. For approximately 37% (roughly 1/e) of the expected value to lie more than x years in the future, the annual extinction rate has to be about 1/x. Therefore, the sorts of arguments that say the possibility of human extinction this century puts trillions upon trillions upon trillions of future lives at stake have to argue that the annual extinction rate will be extremely low.
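The arithmetic behind the 37% figure can be checked with a short sketch. It assumes a constant annual extinction probability and the same value in every year humanity survives, so the share of expected value arriving after a given number of years is a geometric tail, (1 - r)^x; 37% is approximately 1/e. The function names are hypothetical.

```python
import math


def share_beyond(annual_rate, years):
    """Share of total expected value arriving after `years`.

    Assumes a constant annual extinction probability and constant value per
    year survived: sum_{t>=years} (1-r)^t / sum_{t>=0} (1-r)^t = (1-r)^years.
    """
    return (1.0 - annual_rate) ** years


def required_rate(years, share=math.exp(-1)):
    """Annual extinction rate at which `share` of expected value lies beyond `years`."""
    # Solve (1 - r)**years == share  =>  r = 1 - share**(1/years),
    # using expm1 for accuracy when the rate is tiny.
    return -math.expm1(math.log(share) / years)


for years in (1_000, 1_000_000, 1_000_000_000):
    print(years, required_rate(years))
```

Under these assumptions, having roughly 37% of the expected value lie a million years or more in the future requires an annual extinction rate of about one in a million.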

Implications and paths forward

I think there are a few possible paths you could take if you buy this critique. One path forward is simply more research on the sources of radical uncertainty I’ve pointed to here, and into what sort of society we should expect to see in the far future. I’m relatively hopeful that more research into the former sort of question will yield useful new information. These feel like very conceptual questions on which great progress could be made with a few key insights. On the other hand, questions about how we expect human societies to behave are fundamentally social science questions for which we have no experimental or quasi-experimental data, which makes me quite sceptical that we’ll be able to make substantial progress on them.

A second path is to reject x-risk-focused longtermism and replace it either with trajectory-focused longtermism or with working on other important near-term problems, like global poverty. The idea behind the former is that we can focus on increasing the average quality of life, and maybe on preventing the worst outcomes. In effect, we’d be focusing on increasing the average quality of life per unit of matter and energy that Earth-originating life has access to, rather than trying to increase the amount of matter and energy we have access to. This would get around worries that we’re taking matter and energy away from alien civilisations whose societies are just as good as our own, in which case we would have no counterfactual impact. Concretely, this might look like protecting liberal democracies, or making sure that, conditional on getting very powerful AI systems, control of them is widely distributed without the unilateral ability to use them also being widely distributed.

Another path rests on the argument that these risks would be so catastrophic that, even just thinking about people alive today, you get better bang for your buck from working on preventing climate change or a pandemic. That it would be very bad if we all died in a pandemic, and that we should try to stop that, seems very reasonable.

A final perspective is that we should work on x-risk from AI rather than other things because, if we can ensure the benefits are shared rather than ushering in a totalitarian nightmare state, we have good reason to think that human life could be amazing, at the very least by ending material scarcity, and we really could get existential risk down to extremely low levels.

I’m currently very unsure which of these paths is best to go down. Hopefully this blog post will find someone cleverer than I am who can make progress on these questions.

Could you provide a tl;dr section or other form of summary up front?

I don't find this framing very useful. The importance-tractability-crowdedness framework gives us a sophisticated method for evaluating causes (allocate resources according to marginal utility per dollar), which is flexible enough to account for diminishing returns as funding increases.

But the longtermist framework collapses this down to a binary: is this the best intervention or not?

Is it actually heavy-tailed? It looks like an ordered bar chart, not a histogram, so it's hard to tell what the tails are like.

What do you mean? It looks like a histogram to me. Oh, never mind, I see what you mean: indeed the y axis seems to indicate the intervention, not the number of interventions. Still, wouldn't a histogram be very similar?