In this talk from EA Global 2018: San Francisco, Dr. Neil Buddy Shah makes three arguments about effective altruism: that effective altruists should continue to be rigorous, that we should consider riskier charities than we do, and that we should tailor our interventions at least somewhat to the moral preferences of their beneficiaries.

A transcript of Buddy's talk is below, including a Q&A with the audience. We have lightly edited this transcript for readability. You can also watch it on YouTube and read it on effectivealtruism.org.

The Talk

The EA community has made tremendous progress in terms of identifying highly cost-effective top charities, which demonstrably improve the lives of some of the most marginalized communities in the world. But how can we use the same orientation toward rigorous empirical evidence in order to answer the next set of questions and ethical trade-offs facing the EA community? IDinsight works with governments, multilateral foundations, and quite notably with effective altruist partners such as GiveWell and NGOs like the Against Malaria Foundation and New Incentives. In this talk, I want to really identify what the most cost-effective ways are that we can improve the welfare of people around the world.

Today, I want to argue that in order to do the most good possible, EAs should do three things:

One, we should continue our emphasis on using rigorous impact evidence in our decision making.

Two, quite critically, we should expand the scope of what we consider as potentially impactful cost-effective charities beyond direct service delivery organizations. We should really think about how we can leverage the tremendous resources that developing countries' governments are already spending to try and improve the welfare of their citizens, and really use levers such as research and advocacy to unlock a lot more impact beyond just giving to direct service delivery organizations.

And three, perhaps most substantively, EAs need to think a lot more about the subjective values, preferences, and moral weights of the populations that are actually affected by the programs that are happening in global development. To consider communities in rural East Africa, communities in South Asia, and factor those into their resource allocation decisions. I'd like us to move beyond just our own strict utilitarian definitions of what's the best thing to do with a certain set of resources.

We should be rigorous

These are the three arguments that I want to make today. To start at the most basic and fundamental level, I think a pretty uncontroversial claim here is that we need to continue to think about rigorous impact evidence in determining how we spend our resources. As a simple example of money spent incorrectly, there was the Millennium Villages Project led by Professor Jeffrey Sachs from Columbia University, which basically tried to lift individuals in Sub-Saharan Africa out of a poverty trap by providing a whole host of goods and services spanning education, agriculture, and health. And they went around showing a graph like this, saying, "Look, over a four-year period, we're able to increase household asset ownership fourfold, and based on this we should invest a lot more money in this program."

Based on evidence such as this, millions of dollars were raised. But if you take a closer look at the evidence, you see that the same trend appeared in the rest of the Millennium Villages region, in all of rural Kenya, and in Kenya as a whole. Clearly, something was happening in Sub-Saharan Africa over this period that was enabling people's lives to get better and that had nothing to do with the Millennium Villages Project. I think this is a cautionary tale: we need to think about evidence in a rigorous way in order to make the right decisions, and if you just look at the easiest, most available data you can often make badly incorrect decisions. And if you do that, you can end up wasting millions of dollars that otherwise could've gone to improving lives. I think that's a foundational assumption within the EA community, which is important for the rest of the discussion.
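Editor's illustration: the comparison described above can be sketched as a simple difference-in-differences calculation, which subtracts the change seen in a comparison area from the change seen in the project area. This is a minimal sketch, not the analysis used in the talk. The function name and every number below are hypothetical and chosen only to show how a large raw gain can shrink once the background trend is removed.

```python
def difference_in_differences(treated_before: float, treated_after: float,
                              comparison_before: float, comparison_after: float) -> float:
    """Change in the treated group minus change in the comparison group."""
    treated_change = treated_after - treated_before
    comparison_change = comparison_after - comparison_before
    return treated_change - comparison_change


# Hypothetical asset-ownership rates (percent of households), NOT real data.
project_before, project_after = 10.0, 40.0        # project villages: fourfold increase
regional_before, regional_after = 10.0, 38.0      # surrounding region: similar trend

naive_gain = project_after - project_before
adjusted_gain = difference_in_differences(
    project_before, project_after, regional_before, regional_after
)

print(f"Naive before/after gain: {naive_gain} percentage points")
print(f"Gain net of regional trend: {adjusted_gain} percentage points")
```

With these made-up inputs, nearly all of the raw gain is also present in the comparison area, which is the pattern the speaker describes.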

We should explore riskier interventions

The second claim I want to make is that as important as it is to focus on demonstrably improving the lives of the poor, we also need to be a little bit riskier in what we think about as potential top charities. In particular, I want to challenge the EA community to think about things like advocacy and research to improve government spending, as a potential complement to investing in direct service NGOs. I want to do this through a case study of a project that IDinsight did with the Ministry of Health in Zambia, as well as the Clinton Health Access Initiative, and really ask the question, "Can a randomized trial, research, or advocacy effort be considered a cost-effective charity, if it leverages a lot of government funding?" The challenge was that the government of Zambia was trying to figure out how to decrease maternal and infant mortality, which was very high, especially in rural Zambia.

They had a lot of ideas, but one of the key decisions they wanted to make concerned the many mothers who were delivering at home in unsafe conditions. The government wanted to incentivize them to travel to government health facilities, which presumably had better care, in order to deliver their babies. The government was considering a number of different interventions, one of which was a behavioral nudge: a small incentive for mothers. Under the chosen incentive, mothers who travelled to the government facility would receive something called a Momma Kit, which is just a $4 kit of a bunch of things that a new mother might want for her child, to incentivize them to deliver in the facility rather than at home. But there were big questions around whether this would be impactful, how cost-effective it would be, and whether the government should invest in it in the next fiscal year. They faced constraints. There was no information or evidence on the effectiveness of things like Momma Kits and there was very little budget for the evaluation.

They had to make a decision by the next fiscal year about whether to scale up this program. So we came in and said, "Okay. What's the theory of change? Momma Kits get distributed at facilities. Then people spread the word about these Momma Kits, which might induce more mothers to deliver in facilities. If mothers are delivering at proper healthcare facilities, then maybe that decreases maternal mortality." This last link was well established in the public health literature. People knew that if you deliver in well stocked facilities, you're likely to decrease infant and maternal mortality. However, it was quite uncertain whether just giving away a $4 Momma Kit actually incentivized anyone to come and deliver in the facility. We decided, given that the government needed to make a decision on short notice, that we could design a rapid but rigorous randomized trial that measured whether or not mothers came to deliver in the facilities.

We designed this randomized trial in just five months and using only $70,000. It was a rapid, inexpensive test that could potentially inform a very large-scale government decision that had implications for many mothers and children. What we found after randomizing certain facilities to receive the treatment, and certain facilities to remain as a comparison group, was that these simple $4 Momma Kits actually increased the percentage of women who delivered in a facility by 47%. If you model that out, it roughly translates to around $5,000 per life saved. The important thing to note here is that $5,000 per life saved is not as cost-effective as most of GiveWell's top charities. If you think about this intervention purely through the traditional effective altruist lens of deciding which interventions should get money, you would say that this doesn't really meet the bar. It's pretty good, but there are other interventions that would be a better bang for the buck.

However, it's more interesting if you ask the question: Was that $70,000 investment in generating the evidence cost-effective, because it then compelled the government to scale up a program? I think this is a completely different question. We need to start thinking about intermediated causes like this. There are clear benefits when such a small amount of money, whether it's for research or advocacy, has the potential to influence a much larger quantity of government spending. However, from the point of view of an effective altruist who really wants to nail down, "Is my money demonstrably improving lives?", there are clear challenges. The first is that attribution is very tenuous. I can stand up here and say that it was IDinsight's randomized trial that led the government to scale up this program, but it's just as conceivable that the government would've scaled it up in the complete absence of evidence.

Even if our evidence played a role in scaling it up, clearly there are other actors that played a role. The government is delivering the program. There are a lot of other people doing the work. How much of the lives saved can you actually attribute to this evidence? I think this is one of the areas where effective altruists need to be comfortable, or grow more comfortable, with uncertainty. I think it's hard to pin down a clear cost-effectiveness number the same way that you can with anti-malarial bed nets or deworming pills, but the leverage that you get from investing in these kinds of things could potentially be outsized compared with direct service delivery. Then if you think about embedding these types of evidence teams within governments to diagnose what's wrong, come up with new ideas, evaluate them rigorously, and scale them up, then there's another opportunity to unlock a lot more bang for your buck in terms of lives saved or lives improved. But you need to be comfortable with a higher degree of uncertainty about whether your money is actually, directly going to improving lives, and what other factors are at play.
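Editor's illustration: one hedged way to make the attribution problem concrete is to discount the lives saved by a scaled-up program twice, once for the chance that the government would have scaled up anyway and once for the share of credit owed to other actors. The sketch below is an assumption-laden toy model, not IDinsight's or GiveWell's method. Only the $70,000 trial cost comes from the talk. The function name, the number of lives saved, and both discount factors are hypothetical, and the model treats government spending as costless to the donor.

```python
def donor_cost_per_attributed_life(research_cost: float,
                                   lives_saved_by_scale_up: float,
                                   p_decision_changed: float,
                                   attribution_share: float) -> float:
    """Research dollars per life saved that can be credited to the research funder.

    p_decision_changed: probability the scale-up would NOT have happened without the evidence.
    attribution_share: fraction of the credit assigned to the evidence rather than to
                       the government and other implementers.
    """
    attributed_lives = lives_saved_by_scale_up * p_decision_changed * attribution_share
    if attributed_lives <= 0:
        return float("inf")  # no credited impact, so no finite cost per life
    return research_cost / attributed_lives


TRIAL_COST_USD = 70_000        # figure quoted in the talk
HYPOTHETICAL_LIVES = 1_000     # assumption for illustration only

optimistic = donor_cost_per_attributed_life(TRIAL_COST_USD, HYPOTHETICAL_LIVES, 0.5, 0.25)
no_influence = donor_cost_per_attributed_life(TRIAL_COST_USD, HYPOTHETICAL_LIVES, 0.0, 0.25)

print(f"Evidence changed the decision half the time: ${optimistic:,.0f} per attributed life")
print(f"Government would have scaled up anyway: {no_influence}")
```

The result swings from a very low cost per life to infinity depending on inputs that cannot be measured directly, which is the uncertainty the speaker asks effective altruists to tolerate.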

We should consider the values of beneficiaries

Now my third claim, and potentially the most controversial, is that effective altruists need to step outside of their own subjective values, moral weights, and preferences when determining how to do the most good, and instead weight more heavily the preferences, moral weights, and subjective values of populations living in rural Kenya, rural India, and elsewhere in the world, even if those aren't utilitarian world views. I just want to start out by saying very clearly that effective altruists are at the forefront of using evidence and reason to determine how to do the most good. Organizations like GiveWell exemplify this by using randomized controlled trials and validating those with in-field measurement. A wide range of folks, including The Economist, say that one of the biggest intellectual achievements of the EA movement has been improved charity evaluation.

However, as evidence- and reason-driven as effective altruists are, there's a lot of subjective value that still goes into resource allocation decisions. Even if you identify that AMF is a great cost-effective charity, you're still faced with a subjective trade-off of, "Should we give a household a direct cash transfer, or save a child's life?" That's not something that can necessarily be answered clearly using empirical evidence. If we have a fixed set of resources, should we save younger children versus older adults? Then further afield, if we have a fixed set of resources, how do we compare an animal's life versus a human's life now, or a human's life now versus a human that might exist in the future? All of these involve moral weights and subjective value judgments. Currently, the approach is basically to rely on EAs' own subjective value inputs.

Here's a graph from GiveWell that shows how different GiveWell staff members would value saving the life of a child under five, relative to doubling the consumption of one individual for one year. This is clearly a subjective judgment and there's a wide range. The median is that people value saving the life of a child about 50 times as much as doubling the consumption of one individual for one year. In other words, you'd have to double consumption 50 times in order for it to be equally good as saving the life of a child. There's still a wide degree of variation and it's highly subjective. GiveWell's is a reasonable approach and I don't want to overly criticize it, because it does make sense and we need to make these hard subjective trade-offs, but it's problematic for two reasons. The first is that EAs are WEIRD, and weird in more ways than one. But what I mean in particular is that they're Western, Educated, Industrialized, Rich, and Democratic.

This means that EA preferences might be very different from the preferences and subjective values of beneficiary populations. The second is that resource allocation is quite sensitive to these subjective preferences. We like to think that we've done all these randomized trials, we know what the cost-effectiveness is, but when you're deciding between GiveDirectly and AMF, there's a lot of subjective input there. We might not be doing the most good possible if we are ignoring the differences between the preferences and subjective values of EAs and those of beneficiary populations. First, EAs are WEIRD. I want to establish this fact. Joseph Henrich and his coauthors have reviewed a number of experimental psychology and behavioral science experiments that show that WEIRD subjects are particularly unusual compared with the rest of the human species, across many domains of psychology including visual perception, fairness, cooperation, and perhaps most importantly for us, moral reasoning.

People from industrialized societies consistently occupy the extreme end of the human distribution when it comes to moral decision making. This is clearly potentially problematic when trying to determine how to do the most good with a fixed set of resources. Just one example of WEIRD weirdness is the ultimatum game. In the ultimatum game there are two players. One player is the proposer, who's given a sum of money by an experimental psychologist and then has to decide how much of that money to offer to the receiver, the second player. The second player can accept that proposal or reject it outright. If the receiver accepts it, then the money is split as proposed, and if the receiver rejects it, then no one gets anything. This is a classic behavioral science experiment used to study a society's notions of fairness and economic equity.
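Editor's illustration: the rules of the ultimatum game described above can be written as a short payoff function. This is a minimal sketch of the game's mechanics only. The function names and the "minimum acceptable share" responder rule are hypothetical devices for illustration and are not taken from Henrich et al. or from the talk.

```python
from typing import Tuple


def ultimatum_payoffs(total: float, offer: float, accepted: bool) -> Tuple[float, float]:
    """Return (proposer_payoff, receiver_payoff) for one round of the ultimatum game."""
    if not 0 <= offer <= total:
        raise ValueError("offer must be between 0 and the total stake")
    if accepted:
        return (total - offer, offer)   # money is split as proposed
    return (0, 0)                        # rejection: nobody gets anything


def accepts(offer: float, total: float, min_acceptable_share: float) -> bool:
    """Hypothetical responder rule: accept only offers at or above a fairness threshold."""
    return offer >= min_acceptable_share * total


# The same low offer is accepted by a lenient responder and rejected by a strict one.
stake, offer = 100, 30
lenient = ultimatum_payoffs(stake, offer, accepts(offer, stake, 0.2))
strict = ultimatum_payoffs(stake, offer, accepts(offer, stake, 0.4))
print("Lenient responder:", lenient)
print("Strict responder:", strict)
```

Differences between societies show up in the offers proposers make and in the thresholds at which receivers reject, which is what makes the game a probe of fairness norms.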

What Henrich et al. found is that the U.S. is a clear outlier compared to a whole host of other societies. Since Accra, the capital of Ghana, is quite similar, you might say that there's no difference between low income and rich countries. But I think that's more a product of the fact that industrialized capitalist urban societies tend to have similar norms, whereas pastoralist or rural agrarian societies can have very different conceptions of moral reasoning, as well as fairness. This has significant implications for the types of trade-offs that EAs are faced with. Now, you could argue that it's fine that there are these different preferences, but in reality cost-effectiveness just comes down to the numbers and it's not that different. But when we look at the data we find that cost-effectiveness models, such as those by GiveWell, are highly sensitive to subjective inputs.

It's not just about the randomized trial and doing the math. A lot of it comes down to how we trade off between different things. On the Y-axis are a bunch of different GiveWell top charities. The yellow dots are how much better they are than cash, so how many times more cost-effective they are than GiveDirectly. If you just look at the yellow dots, you could say, "Okay. We have a very clear ordering of what's most cost-effective." But as soon as you incorporate the subjective value of how people trade off a dollar today versus a dollar tomorrow, or health today versus increased consumption, you see that the picture becomes a lot messier. Another way of showing this is that the Against Malaria Foundation is generally considered around three times as cost-effective as cash, and if you just move one standard deviation down in terms of people's subjective values of increase in consumption versus health, it looks very strong. But if you go one standard deviation up, you're unlikely to recommend the Against Malaria Foundation versus cash.
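Editor's illustration: the sensitivity described above can be shown with a toy model in which everything is converted into "consumption doublings" using a single moral weight. This is a deliberately simplified sketch and not GiveWell's cost-effectiveness model. The weight of 50 echoes the median quoted earlier in the talk. The function name and both cost figures are hypothetical.

```python
def health_vs_cash_ratio(moral_weight: float,
                         cost_per_life_saved: float,
                         cost_per_consumption_doubling: float) -> float:
    """How many times more valuable a dollar to the health charity is than a dollar of cash.

    moral_weight: number of one-person, one-year consumption doublings judged
                  equal in value to saving one life.
    Algebraically this is (moral_weight / cost_per_life_saved) divided by
    (1 / cost_per_consumption_doubling), rearranged into one expression.
    """
    return moral_weight * cost_per_consumption_doubling / cost_per_life_saved


# Hypothetical costs, for illustration only.
COST_PER_LIFE_USD = 4_000
COST_PER_DOUBLING_USD = 200

ratios = {
    weight: health_vs_cash_ratio(weight, COST_PER_LIFE_USD, COST_PER_DOUBLING_USD)
    for weight in (15, 50, 100)
}

for weight, ratio in ratios.items():
    verdict = "health charity ahead" if ratio > 1 else "cash ahead"
    print(f"moral weight {weight:>3}: {ratio:.2f}x cash ({verdict})")
```

With the empirical inputs held fixed, changing only the moral weight is enough to flip which option looks better in this toy setup.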

I think this is another example of a very real non-academic trade-off that we're making based on our own subjective inputs. What can we do about this? IDinsight and GiveWell are partnering to try to better measure beneficiary preferences and incorporate them into resource allocation decisions. We did a pilot in rural Eastern Kenya. We piloted seven different methods of measuring preferences. I just want to highlight one, which is the giving framing. We asked this question, which a lot of donors face: Imagine that someone in Kenya has a deadly disease. A donor has enough money to either save an individual's life or use that money to give $1,000 transfers to a bunch of households. How many households would need to receive this $1,000 transfer, anywhere between 1 and 10,000 households, for you to think that the donor should give the money away rather than saving the life?

What we find is that most respondents in rural Eastern Kenya choose to save the life over giving cash transfers of any amount. Whether it's saving the life of a 1-year-old, 12-year-old, or 30-year-old, over 60% of respondents say that you should always use the medicine, even if it means spending $10 million that could otherwise have given 10,000 households a $1,000 transfer each. Now this is very different from what EAs would do. On average, EAs trade off 15 to 30 households getting a $1,000 cash transfer against saving the life of a child. This raises the question of why rural Kenyans are so different from what we find in high income country experiments and among GiveWell staff. You could just say it's methodological challenges. People might not understand the value of $1,000, they might have social desirability bias, or they might want to seem magnanimous to someone who's dying.
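Editor's illustration: the arithmetic behind the giving framing can be spelled out in a few lines. The $1,000 transfer size, the 10,000-household ceiling, and the 15 to 30 household range are the figures quoted in the talk. The function name and the use of `None` to represent an "always save the life" answer are hypothetical conventions for this sketch.

```python
from typing import Optional

TRANSFER_USD = 1_000       # size of each household transfer in the survey question
MAX_HOUSEHOLDS = 10_000    # largest number of households offered to respondents


def implied_value_of_life(households_threshold: Optional[int]) -> Optional[int]:
    """Dollar value of transfers a respondent treats as equal to saving one life.

    None means the respondent chose to save the life at every amount offered,
    so the survey only shows their value exceeds the maximum on offer.
    """
    if households_threshold is None:
        return None
    return households_threshold * TRANSFER_USD


max_offered = MAX_HOUSEHOLDS * TRANSFER_USD
ea_low, ea_high = implied_value_of_life(15), implied_value_of_life(30)

print(f"Largest transfer total offered: ${max_offered:,}")
print(f"Range implied by the EA trade-off quoted in the talk: ${ea_low:,} to ${ea_high:,}")
print("Always-save respondent:", implied_value_of_life(None))
```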

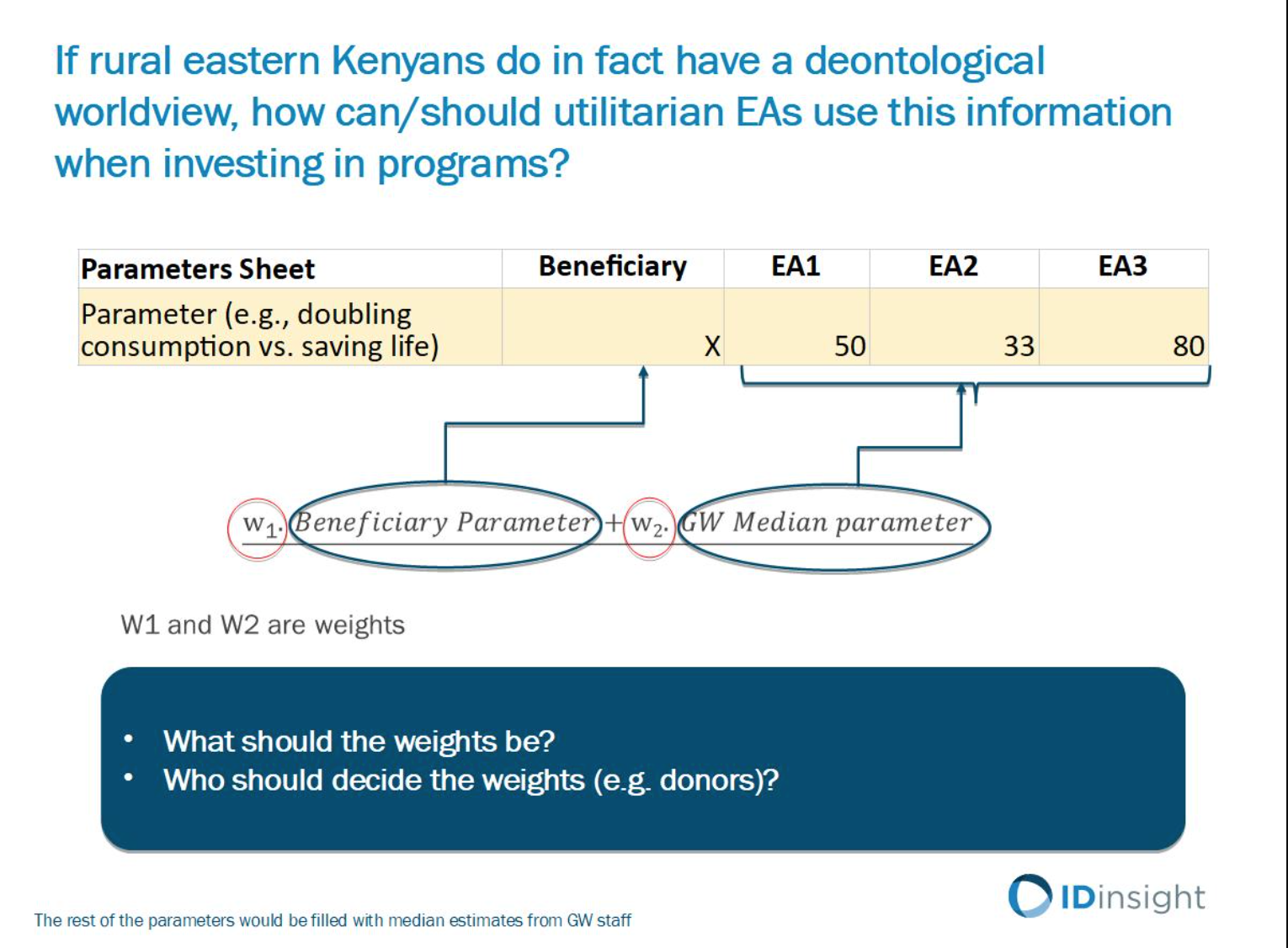

There are conceptual issues. Maybe they actually have different values of life than people in high income communities, but respecting those preferences might mean valuing lives there less than valuing lives here. But then the reality could be that the average person in rural Eastern Kenya has a deontological world view and EAs have utilitarian world views. What do you do in that case? I think there's no easy answer, but at the very least we need to grapple with how do we incorporate Kantian or deontological world views if we want to maximize the welfare of those people?

You can imagine a scenario in which you weight the beneficiary's preferences with the preferences of a utility maximizing EA in order to come up with some hybrid, or you can make a hard call and say that since we're maximizing the welfare of people in rural Kenya, we're going to go with their world view, even if it's highly deontological and opposed to the utilitarian world view that you might hold as an EA.

I think that this approach to thinking hard about preferences of communities outside EA is very valuable and important for determining how to do the most good, and that it has implications not just for global development, but also in terms of how we make trade-offs between farm animal welfare, the far future, and now. Our current thinking here is very nascent. A lot of these numbers are tentative. These are still pilots, but what they do highlight is that there's tremendous scope for us to change our priors on how to do the most good possible, once we start incorporating the actual preferences, subjective values, and moral weights of different populations. I think that this could have tremendous implications for the EA community as a whole and that we need to invest more resources into researching and getting this answer right. Thank you.

Q&A

Question: The first counterargument that might come back to you would be, "Look, all those people that you cited from GiveWell or the Open Philanthropy Project, they've thought a lot about this, right? Whereas, you're asking someone in a village setting who probably hasn't had this kind of trade-off put to them before." How do you think about that difference, and how much energy and maybe education is behind those answers?

Buddy: I think that's a great point and something that we're trying to think through. Cindy Lee, the IDinsight economist leading the study, has been brainstorming other ways to address that. One idea is actually to do a mini-bootcamp, or course in moral reasoning, for people living in rural Eastern Kenya to say, "Okay. These are different ways you can think about these trade-offs" and then have them play the games. I do agree that if someone hasn't thought a lot about these kinds of trade-offs, they might come to conclusions that otherwise they wouldn't. I think there's a lot of work to be done in investigating how we actually get the right answers and elicit the true preferences of populations, rather than their first gut instinct.

Question: What advice would you give to an undergraduate seeking to pursue a career in development or the alleviation of global poverty?

Buddy: That's a great question. There's the simple rubric, which I think is pretty prevalent among EAs, which is: Where's my marginal impact going to be highest? Think about what you are uniquely willing or able to do, and hopefully the intersection of those. I think there's no right answer to that. It intersects so much with your particular skillsets, but I think it's generally good to try to find under-invested problems and then plug yourself in and do the things people aren't willing to do. One idea that comes to mind is that, in rural India, for instance, there are state governments that make decisions for 50 to 100 million people and that are often under-staffed on technical expertise. That's one area: go to a state that's not sexy, it's not Delhi, it's not Bombay, and really try to help bureaucrats make better decisions when they're under-staffed.

Question: If someone is going out to attempt to do good in the world, one view would be that it's really only their own conception of the good that matters and they don't necessarily need to take into account anybody else's point of view, whether a beneficiary or hypothetically someone in a third location. You might say you're going to try to help people in Kenya. You could survey people there. You could also survey people in Norway and arguably neither of those are really relevant to what the actor is trying to accomplish. What's the baseline case that we should take other people's point of view seriously at all?

Buddy: I think a lot of this reduces down to just personal preferences and it's hard to adjudicate what's the right way, at least from my perspective. I think what I would say is, if you're in this room you're probably thinking about how to maximize the welfare of the most people. If you're coming from that perspective, I would generally think of the Rawlsian Veil of Ignorance thought experiment: If I had no idea where in the world I was going to be born, then what kind of system would I want to create? If I didn't know I was a male or female, gay or straight, born in rural Kenya or urban U.S., what's the system that would most maximize my chances of a good life? Then from that vantage point, basically try to make choices. That's at least the way that I think about it, but ultimately there's a degree of arbitrariness in whether you buy into that or not, and whether you try to maximize your own welfare as you exist today, versus some other hypothetical welfare.

Question: Where do you think policy makers today fall on that question? Do you think they buy into the Veil of Ignorance argument or not?

Buddy: I don't think that they do in large part. I think mostly policy makers that we work with are responding to clear sets of incentives around maintaining power. The extent to which they're utility maximizing for their populations, I think, depends a lot on the robustness of the civil society and the government and how truly democratic the society is, and they're maximizing power within those constraints. I think there's huge heterogeneity between different types of policy makers based on what the conditions are in that particular society.

Question: Given the kind of emphasis on the recipient's preferences, how much does that lead you to think that just giving cash should be prioritized because then people can obviously use that to realize their own preferences? The person asking the question says, "Doesn't it, by definition, mean that you should always give cash?" I can think of a couple caveats, but how do you think about that?

Buddy: Yeah. That's definitely not the conclusion that I come to. I think that there are a lot of public goods that individual consumption decisions tend to under-invest in. Public health is a great example of that. If you invest in spraying for mosquitoes, that's not something that an individual household can necessarily use a cash transfer to do, because it's only when enough households in a village are covered that you might reach a threshold effect that actually dramatically decreases the risk of malaria for the entire village, or the entire community. The big payoffs and gains in utility come from investing in public goods that no single individual could provide. Whether that's decreasing pollution, or investing in infectious disease prevention, those are the kinds of investments that are much higher in terms of utility gain than just giving cash to an individual.

Question: Would you and maybe would IDinsight find it valuable to try to do some of the research that you're doing on preferences within the context of developed countries? Somebody's suggesting potentially the New South Wales health system as kind of a model. I don't know anything about that particular health system, but are there kind of advanced models that you can go into and then try to port your learnings to other places?

Buddy: I just don't know enough about that model to really respond, unfortunately.

Question: What were the six other ways that you-

Buddy: The only thing I would say is for issues like how you trade off utility gains today for humans versus utility gains in the far future, I think that obviously normative moral reasoning has a lead role to play, as it did in social justice causes like abolitionism or women's suffrage. You can't just always rely and fall back on individual preferences. However, I think when you're on pretty philosophically shaky ground, it's very useful to at least collect preferences from a wide range of populations in order to better understand what the status quo is and better understand how to ground those philosophical arguments. I think there's a big role in collecting preferences on those types of issues across a wide range of societies today. Even if they're not determinative, at least they're informative to the kind of moral reasoning that leads to those kinds of trade-offs.