Hi!

📰 AI Regulation Progress

🌍 California's SB 294, the Safety in AI Act, introduced by Senator Scott Wiener on September 13, aims to enhance AI safety. The bill outlines provisions for responsible scaling, liability for safety risks, CalCompute, and know-your-customer (KYC) policies.

🇬🇧 The UK's Frontier AI Taskforce unveiled its expert panel, which includes luminaries such as David Krueger and Yoshua Bengio.

🇪🇺 In her State of the European Union (SOTEU) speech, Ursula von der Leyen stressed the need for AI regulation, particularly on safety.

🇺🇸 Senators Richard Blumenthal and Josh Hawley announced a bipartisan framework for AI regulation in the US that would introduce licensing for AI training.

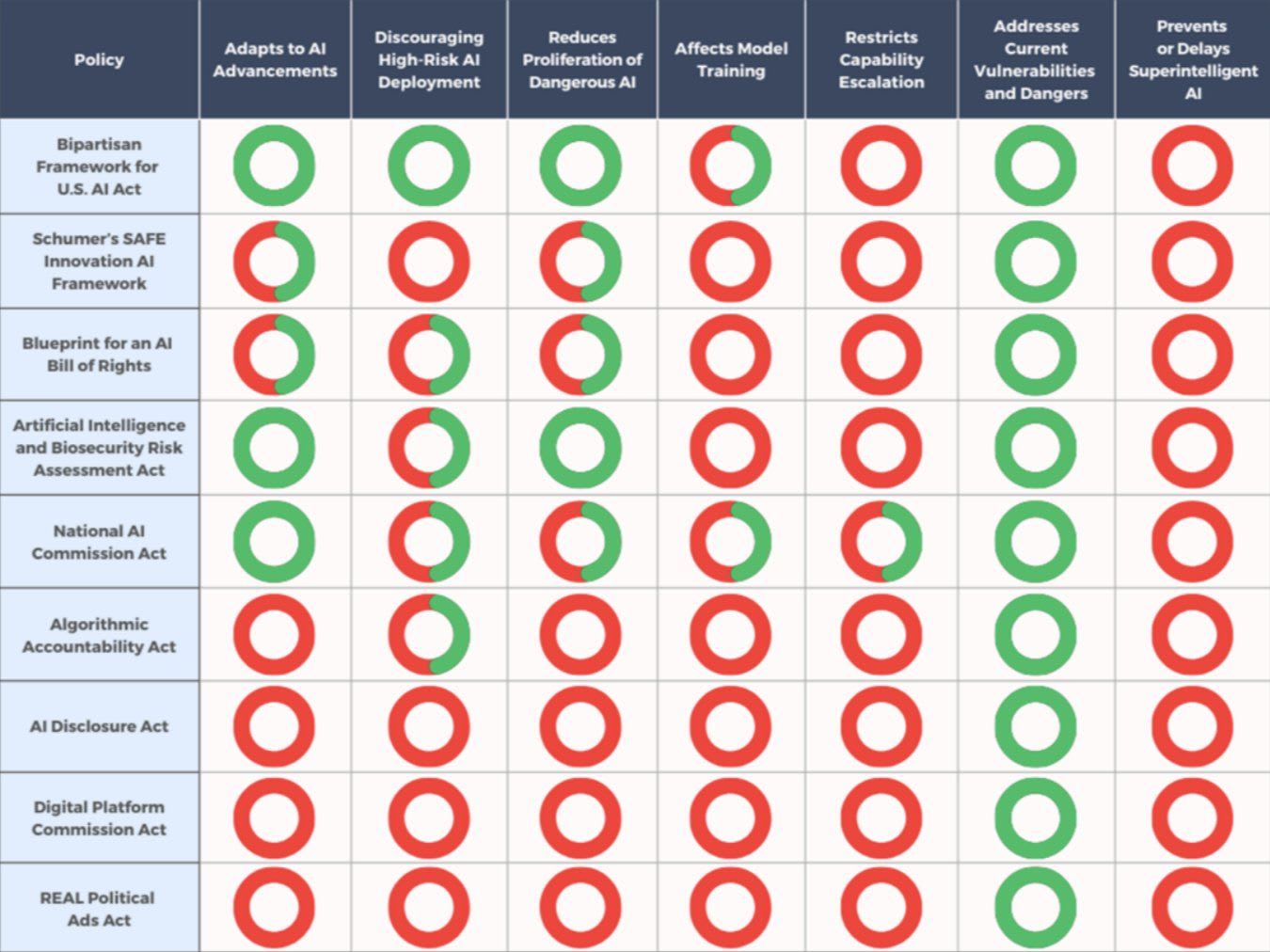

These developments mark a profound shift in AI policy in just six months and are a testament to tireless advocacy and visionary policymaking. Unfortunately, none of the current legislative proposals would prevent or delay the development of superintelligent AI:

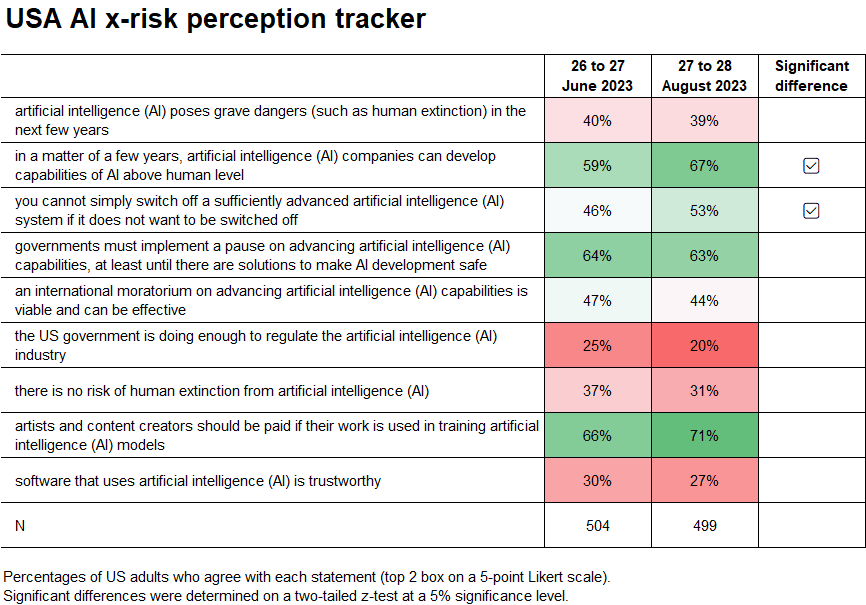

🔬 USA AI x-risk perception tracker

📊 The second wave, conducted from August 27 to 28, 2023, showed that x-risk perception remained steady, while more people in the USA now seem to agree that advanced AI would be incorrigible and that AGI timelines are short.

📣 International #PauseAI protests on 21 October 2023

🌍 On 21 October, join #PauseAI protests across the globe. From San Francisco to London, Jerusalem to Brussels, and more, we unite to address the rapid rise of AI power. Our message is clear: it's time for leaders to take AI risks seriously.

🗓️ October 21st (Saturday), in multiple countries

🇺🇸 US, California, San Francisco (Sign up)

🇬🇧 UK, Parliament Square, London (Sign up, Facebook)

🇮🇱 Israel, Jerusalem (Sign up)

🇧🇪 Belgium, Brussels (Sign up)

🇳🇱 Netherlands, Den Haag (Sign up)

🇮🇹 Italy (Sign up)

🇩🇪 Germany (Sign up)

🌎 Your country here? Discuss on Discord!

📣 Protest against irreversible proliferation of model weights at Meta HQ

Stand with Holly Elmore for AI safety! Meta's open release of AI model weights puts our safety at risk!

🗓️ Protest: 29 September 2023, 4:00 PM PDT

📍 Location: 250 Howard St, outside Meta Office Building, San Francisco

📃 Policy updates

On the policy front, we have made our submission to the consultation on the Canadian Guardrails for Generative AI – Code of Practice, run by Innovation, Science and Economic Development Canada.

Next, we are working on the following:

- UN Review on Global AI Governance (UN. Due 30 September 2023)

- The submission to the NSW inquiry into AI (Australia. Due 20 October 2023)

- Update our main campaign policy document.

Do you know of other inquiries? Please let us know. You may respond to this email if you want to contribute to the upcoming consultation papers.

📜 Petition updates

🇬🇧 For our supporters in the UK, there's an ongoing petition led by Greg Colbourn. It urges the global community to consider a worldwide moratorium on AI development because of the risk of human extinction. So far, the petition has gathered 48 signatures in support of this crucial cause.

Campaign media coverage

The Roy Morgan research into Australians' attitudes towards AI and x-risk was covered by ACS Information Age, B&T, Cryptopolitan, Startup Daily and Women's Agenda, and was mentioned on Sky News.

InDaily (South Australia) wrote about the recent South Australian consultation, with a focus on the use of AI tools in the public sector.

Thank you for your support! Please donate to the campaign to help us fund ads in London ahead of the UK AI summit. Please share this email with friends.

Campaign for AI Safety

campaignforaisafety.org