AI safety is one of the most critical issues of our time, and sometimes the most innovative ideas come from unorthodox or even "crazy" thinking. I’d love to hear bold, unconventional, half-baked or well-developed ideas for improving AI safety. You can also share ideas you heard from others.

Let’s throw out all the ideas—big and small—and see where we can take them together.

Feel free to share as many as you want! No idea is too wild, and this could be a great opportunity for collaborative development. We might just find the next breakthrough by exploring ideas we’ve been hesitant to share.

A quick request: Let’s keep this space constructive—downvote only if there’s clear trolling or spam, and be supportive of half-baked ideas. The goal is to unlock creativity, not judge premature thoughts.

Looking forward to hearing your thoughts and ideas!

P.S. Your answer could help people with career choices, cause prioritization, building the effective altruism community, policy, and forecasting.

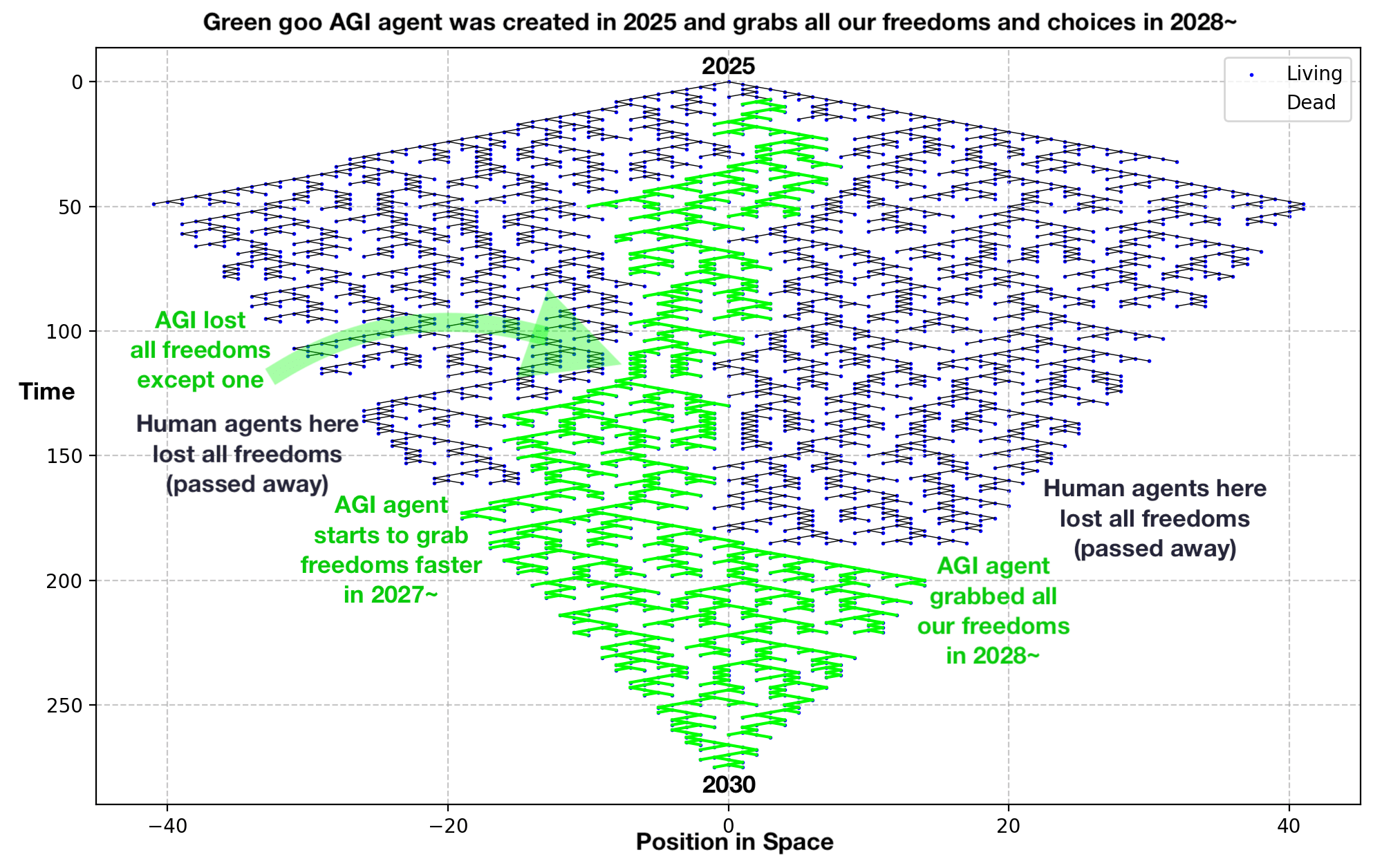

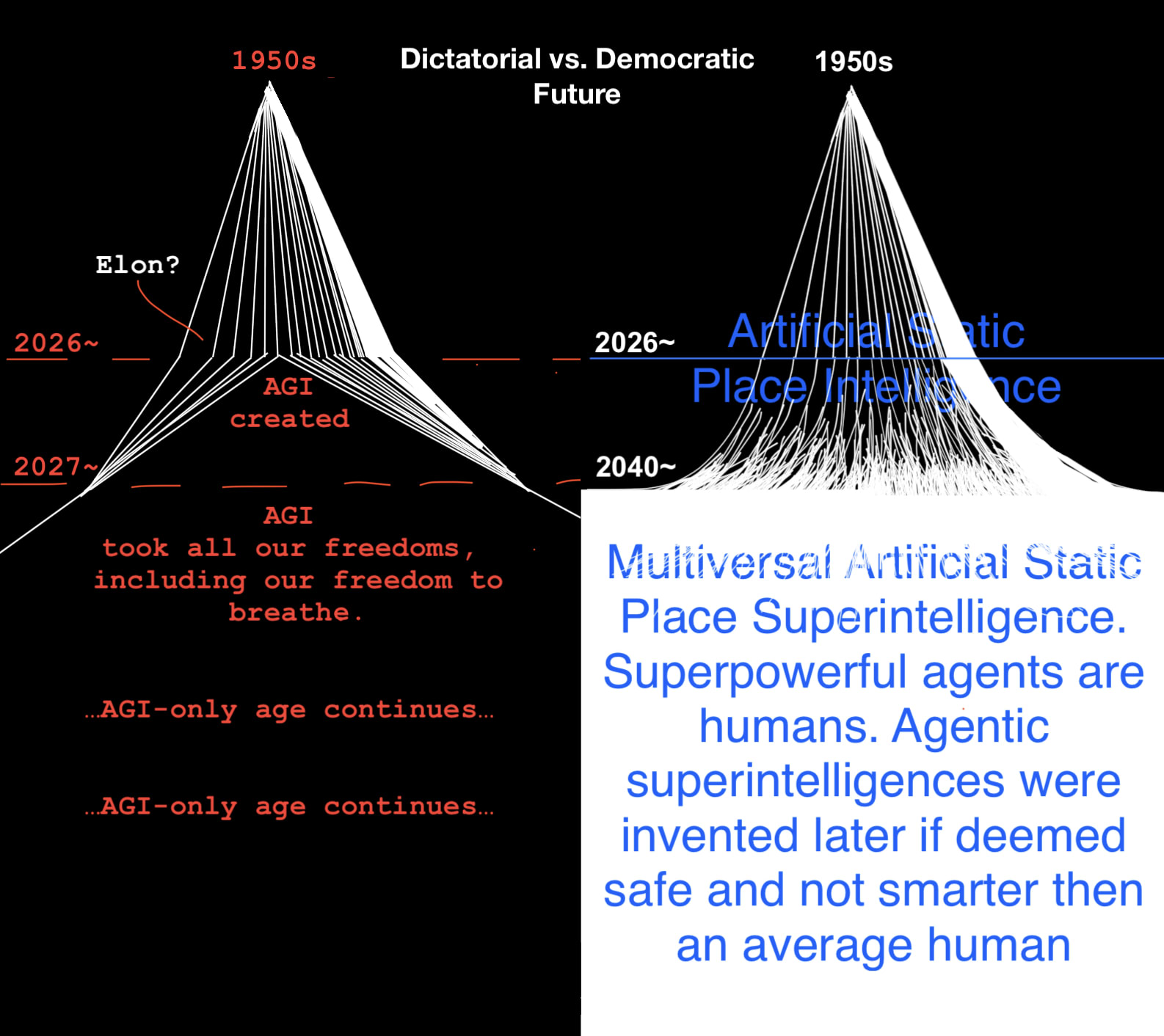

Of course, the place AI is just one approach, and it wouldn't be wise to focus only on it. Still, the place AI has certain properties that I think could usefully be replicated in other types of AI. The place "loves" to be changed 100% of the time (like a sculpture). It's "so slow that it's static": it basically doesn't do anything by itself, apart from some simple algorithms that we can build on top of it, and it is we who bring it to life and change it. And it only does what we want, because we are the only ones who act in it... There are some simple physical properties of agents: basically, the more space-like they are, the safer they are. Thank you for this discussion, Will!

P.S. I agree that we should care first and foremost about base reality. It would be great to one day have spaceships flying in all directions, with human astronauts exploring new planets everywhere. We could give them all our simulated Earth to hop in and out of, so they won't feel as homesick.