TL;DR:

At the AI Standards Lab, we write contributions to technical standards for AI risk management, including catastrophic risks. Our current project aims to accelerate the writing of AI safety standards in CEN-CENELEC JTC21, in support of the upcoming EU AI Act. We are scaling up and looking to add 2-3 full-time Research Analysts (duration: 8-12 months, 25-35 USD/h) who will write standards texts by distilling state-of-the-art AI Safety research into EU standards contributions.

If you are interested, please read the detailed description here and apply. We prefer applicants who can start in February or March 2024. Application deadline: 21st of January.

Ideal candidates will have strong technical writing skills and experience in one or more relevant technical fields, such as ML, high-risk software, or safety engineering. You do not need to be an EU resident.

The AI Standards Lab is set up as a bridge between the AI Safety research world and diverse government initiatives for AI technology regulation. If you are doing AI safety research, and are looking for ways to get your results into official AI safety standards that will be enforced by market regulators, then feel free to contact us. You can express your interest in working with us through a form on our website.

Project details

See this post for an overview of recent developments around the EU AI Act and the role of standards in supporting the Act.

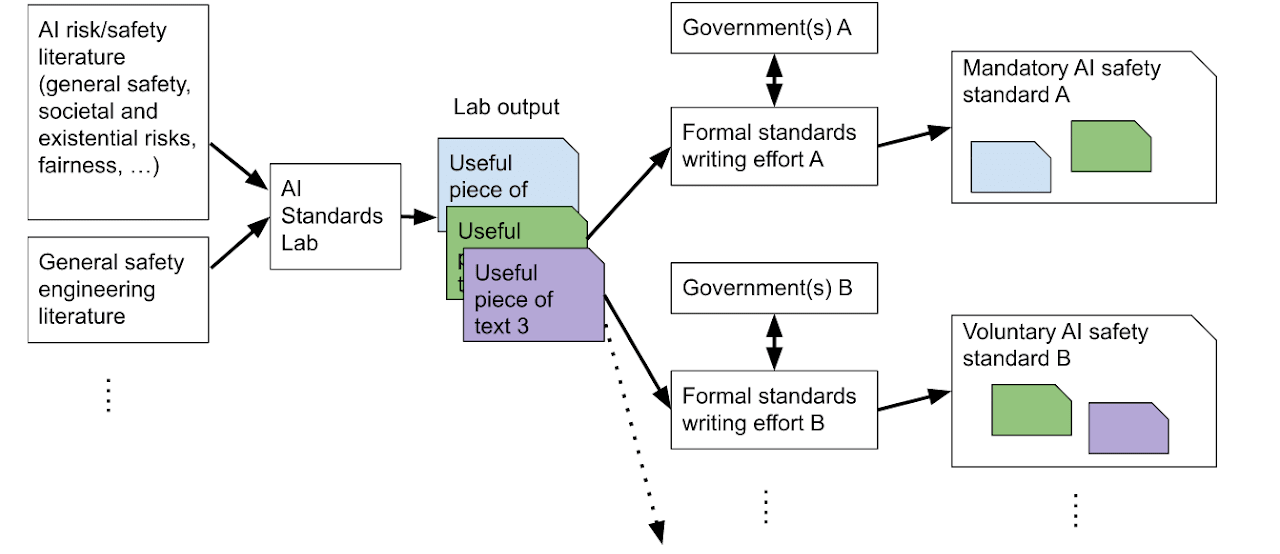

Our general workflow for writing standards contributions is summarized in this chart:

In the EU AI Act context, our contributions will focus on the safe development and deployment of frontier AI systems. Texts will take the form of ‘risk checklists’ (risk sources, harms, and risk management measures) documenting the state of the art in AI risk management. Our project involves reviewing the current AI safety literature and converting it into standards language with the help of an internal style guide document.

The AI Standards Lab started in March 2023 as a pilot project at the AI Safety Camp, led by Koen Holtman.

The Lab is currently in the process of securing funding to scale up. We plan to leverage the recently completed CLTC AI Risk-Management Standards Profile for General-Purpose AI Systems (GPAIS) and Foundation Models (Version 1.0), converting relevant sections into JTC21 contributions. We may also seek input from other AI Safety research organizations.

Further information

See our open position here, and fill out this form to apply.

You can find more information about us on our website.

Feel free to email us at contact@aistandardslab.org with any questions or comments.