Executive summary

- To determine the relative importance of focusing on various existential risks, it may be helpful to ask ourselves "out of all the possible universes where human extinction occurs this century, what percentage of extinction events will be proximally caused by X?"

- It is plausible (though by no means certain) that "most extinction events will be caused by something we haven't thought of yet."

- If so, this increases the relative value of risk exploration vs. known risk elimination.

- We would therefore want to set aside sufficient resources for risk exploration, study existential risks in general as opposed to one risk in particular, err on the side of caution, explore and monitor the technological frontier, and strive to expand our imagination.

If we are focusing on a distraction, what are we being distracted away from?

In a recent New Yorker article, Will MacAskill ponders:

“My No. 1 worry is: what if we’re focussed on entirely the wrong things?” he said. “What if we’re just wrong? What if A.I. is just a distraction?”

In order to determine that, one question we might want to ask ourselves is:

Out of all the possible universes where human extinction occurs this century, what percentage of extinction events will be proximally caused by X?

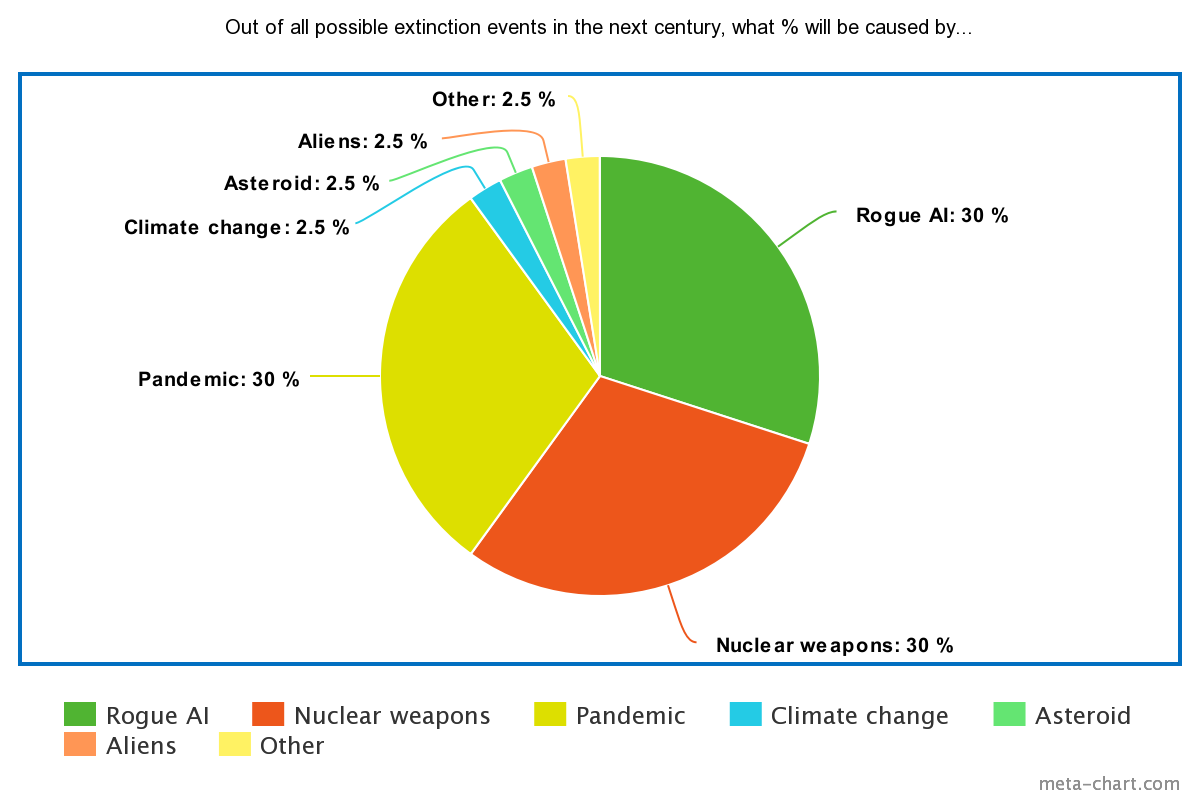

Introducing the Doom Pie aka the "How are you going to kill us all this time" chart

Your answer may look something like this:

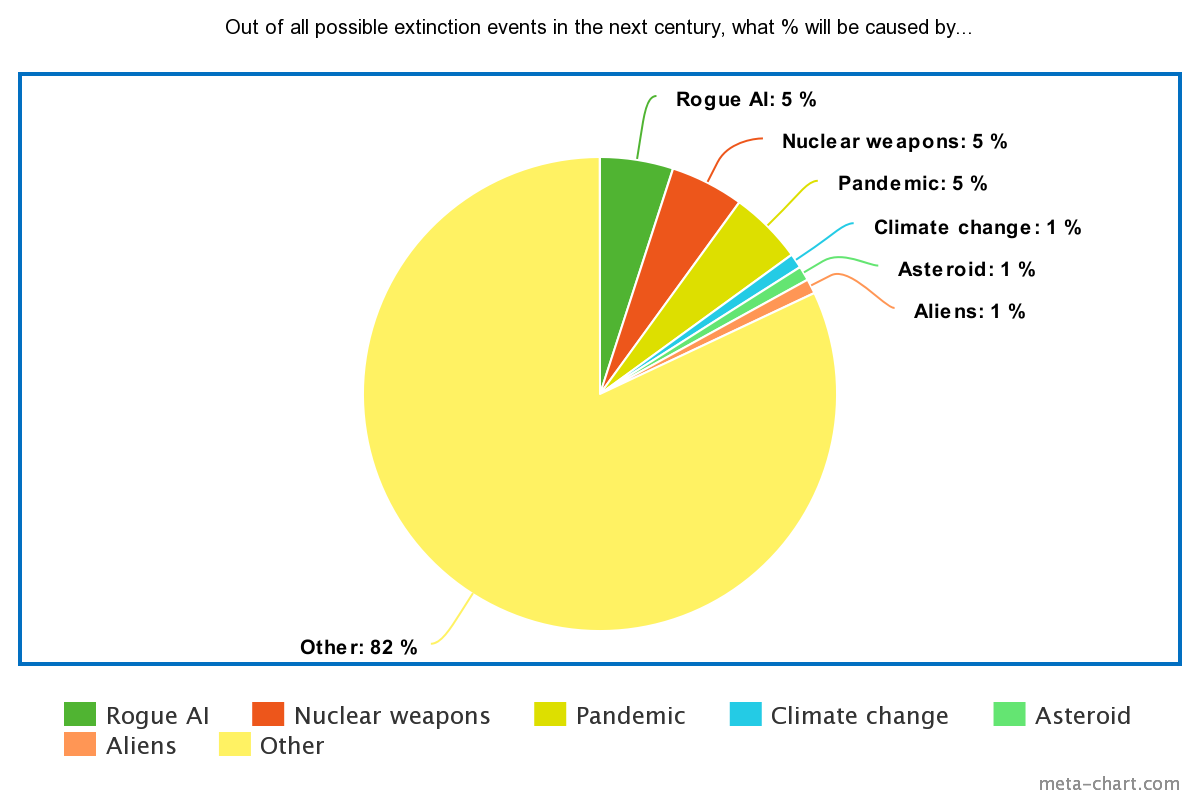

But what if instead it looks like this?

("Other" meaning both "unlisted" and "unknown".)

Do we really know so little?

Is the chart above plausible? One reason it may be: think of what would have happened if you had asked experts for their Doom Pie in 1930 (less than a century ago).

In 1930, the nuclear bomb hadn't yet been invented. The modern computer was still in the future, and science fiction was barely emerging as a genre. Leading lights of the day would probably have mentioned pandemics (with the Spanish Flu in recent memory) and war (though without the specifics of the nuclear weapon in mind) as the main risks to worry about.

Specific scenarios of a rogue AI or engineered pandemic would have been categorized as "Other" on their chart. And yet, here we are today, with many of us arguing that we should drop everything and single-mindedly focus on addressing them. Knowing this, how confident should we feel in our current judgment? What do you think the typical Doom Pie might look like in 2110 (should we make it that far)?

The value of uncovering vs. eliminating risk

Assuming our premise is plausible, let us make a few further assumptions for the sake of argument, and work through the consequences.

Imagine you are playing a new game. In this game:

1. the pie is an accurate representation of reality.

2. the total of possible extinction events is 100. The number can decrease but never increase.

3. each turn, you will roll the dice, and if you get a particular unlucky combination, 1 of the possible extinction events will randomly occur.

4. if you are still alive, each turn allows you to either "uncover" or "eliminate" 1 category of extinction event (for example, eliminate all 5 pandemic events at once). Uncovering a category costs 2 turns. Eliminating it only costs 1. You do not know the size of unknown categories in advance - only that regardless of their size, you can eliminate them in 1 turn once uncovered.

What should your strategy be? I am not a mathematician, but presumably you would have a strong incentive to spend your early turns (that is, your time) uncovering unknown risks, in case they represent a large percentage of the sample. Most of the expected value would then be found in "Uncover" moves.
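To make that intuition a little more concrete, here is a rough sketch comparing two orderings of moves. It rests on assumptions that are not in the rules above: the per-turn chance of extinction is taken to be `p_unlucky` times the fraction of events not yet eliminated, the dice are rolled before each move, and the category sizes (`known`, `unknown`) are made-up illustrative numbers. It is a toy calculation, not a solution to the game.

```python
UNCOVER_TURNS = 2    # uncovering a category costs 2 turns (rule 4)
ELIMINATE_TURNS = 1  # eliminating a category costs 1 turn (rule 4)


def survival_probability(plan, total_events=100, p_unlucky=0.05):
    """Probability of surviving every turn of `plan`.

    `plan` is a list of (action, category_size) steps. Assumption: each
    turn, extinction happens with probability
    p_unlucky * remaining_events / total_events.
    """
    remaining = total_events
    alive = 1.0
    for action, size in plan:
        turns = UNCOVER_TURNS if action == "uncover" else ELIMINATE_TURNS
        for _ in range(turns):
            alive *= 1.0 - p_unlucky * remaining / total_events
        if action == "eliminate":
            remaining -= size  # the category is gone once the turn is over
    return alive


# Hypothetical pie: four known categories (18 events) and one large
# unknown category holding the other 82 events.
known = [5, 5, 4, 4]
unknown = [82]

eliminate_known = [("eliminate", s) for s in known]
explore_unknown = [
    step for s in unknown for step in (("uncover", s), ("eliminate", s))
]

known_first = survival_probability(eliminate_known + explore_unknown)
explore_first = survival_probability(explore_unknown + eliminate_known)

print(f"known risks first:   {known_first:.3f}")
print(f"explore first:       {explore_first:.3f}")
```

Under these assumptions, exploring first gives the higher survival probability, because the single large unknown category dominates the hazard. Changing `unknown` to many small categories is a quick way to see how sensitive the ordering is to what "Other" actually contains.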

Also note that, if that hope turns out to be unfounded and the unknown categories are small, most of the moves required to beat the game will still be "Uncover" moves. Take the extreme scenario in which the "Other" territory comprises 82 categories, each representing an event with a 1% chance of occurring. In that scenario, beating the game requires 264 moves: 164 to uncover the 82 categories (2 turns each), 82 to eliminate them, and the remainder to eliminate the known risks. The 164 "Uncover" moves make up 62% of the total.
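The arithmetic behind those figures can be checked with a few lines of Python. One number here is inferred rather than stated: the 264 total implies that 18 turns go to eliminating the known risks (264 minus 164 minus 82), so `known_eliminate_moves=18` is an assumption read back from the total.

```python
UNCOVER_COST = 2    # turns to uncover one category
ELIMINATE_COST = 1  # turns to eliminate one category


def moves_to_beat_game(unknown_categories, known_eliminate_moves):
    """Return (uncover_moves, total_moves, uncover_share)."""
    uncover = unknown_categories * UNCOVER_COST
    eliminate = unknown_categories * ELIMINATE_COST + known_eliminate_moves
    total = uncover + eliminate
    return uncover, total, uncover / total


# 82 unknown single-event categories; 18 turns on known risks (inferred).
uncover, total, share = moves_to_beat_game(82, known_eliminate_moves=18)
print(uncover, total, f"{share:.0%}")  # 164 264 62%
```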

The model is of course both crude and highly flawed, but I would argue that most plausible refinements would increase the value of exploration even further. For instance, we might question why the total number of extinction events should be fixed. Perhaps it would be more realistic to add new unidentified risks at certain points in time. However, that would only make exploration even more valuable.

Possible implications for EA

If more existential risks than we think are currently unknown to us, what should we do about it?

Here is a tentative list to get the conversation started:

- We should set aside sufficient resources for risk exploration. This sounds obvious but is probably the main takeaway. Risk exploration should not be thought of as "meta" or self-serving, but as a core duty of EA, with resources to match.

- We should study existential risks in general as opposed to one existential risk in particular. What are their recurring properties? Can they be predicted and if so, how?

- We should err on the side of caution. Being able to quantify the degree to which we think we have fully mapped out the risk territory might help. But even if we somehow converge on the idea that, say, 20% of the Doom Pie is unknown to us, our confidence may still be misguided. We would do well to proceed as though the percentage were larger (how much larger is up for debate).

- We should explore and monitor the technological frontier. Most unknown existential risks are probably found at the technological frontier. Einstein was uniquely equipped to understand the risk of WW2 Germany developing a nuclear weapon. What could be a modern equivalent?

One useful contribution in that space might be to set up institutions bringing together the best scientists and inventors on a regular basis to monitor and assess emerging existential risks.

- We should expand our imagination. Storytelling and science fiction are powerful shortcuts to the unknown, often more accurate than base-rate forecasts. If someone can think of it, it very well might happen. Perhaps we should collect and categorize all extinction scenarios in fiction, no matter how strange they seem? Mass-produce new ones? Consider creating simulated worlds for the sole purpose of exploring potential causes of collapse?

What do you think? Am I overestimating the degree of our unknowledge? Am I misreading the consequences?

And perhaps more practically - what does your Doom Pie look like, and why?