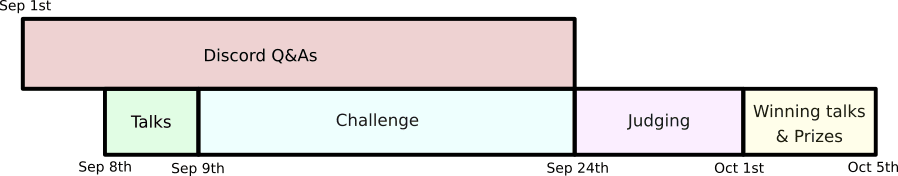

TLDR; Join us on Friday, Sep 8th, for the start of the very first technical/non-technical Agency Foundations Challenge, where we explore agency preservation in AI-human interactions. Make your submissions by Sunday, Sep 24th, 2023; prizes will be awarded by Oct 1st, 2023.

Below is an FAQ-style summary of what you can expect.

Intro

We are working on developing an agency foundations research paradigm for AI safety and alignment. We are kicking off this project with a 2-week long hackathon/challenge that seeks to re-formulate AI alignment problems as agency-related challenges.

For the Agency Foundations Challenge we suggest a few topics to start with (see below). However, given the broad meaning of "agency", we are open to other research directions, as long as they fall generally into one of the categories below.

More information on our conceptual goals for this hackathon is provided here: https://www.agencyfoundations.ai/hackathon.

The schedule

What are the Alignment Jams?

The Alignment Jams are research events where participants of all skill levels join in teams of 1-5 to engage in direct AI safety work. You submit a PDF report on the participation page, with the opportunity to receive reviews from several people working in the field.

If you are not at any of the in-person jam sites, you can participate online through our Discord, where the keynote, award ceremony and AI safety discussions are happening!

The event happens from the 8th to the 24th of September.

Where can I join?

You can join the event both in-person and online but everyone needs to make an account and join the jam on the hackathon page.

We already have confirmed in-person hackathon sites at UT Austin, Texas and at Prague Fixed Point. We expect more to join and you're very welcome to sign up with your own.

Everyone should sign up on the hackathon page to receive emails. Also join the Discord to stay updated and ask any questions. Join here.

What is Agency Foundations and what projects could I make?

We view "agency" as a misunderstood and understudied topic in AI safety research that is often confounded with terms such as "agents", "autonomy" or "utility optimization". Briefly, "agency" is a complex notion that draws on concepts from philosophy, psychology, biology and other fields. It generally refers to the capacity of individual organisms/systems/agents to "experience" control over their own actions and the world, while also being actually (i.e. causally) effective actors in this world and continuing to be such actors for the foreseeable future (please see our foundations paper for more detailed discussions and suggestions). Thus, AI/AGI systems that strip humans of economic, political and social power, whether slowly or quickly, are depleting human agency, regardless of how much "utility" or "autonomy" they provide (or make people "feel" like they provide). In fact, we argue that once AI/AGI systems become very powerful models of human behavior, they will converge towards "agency-depletion" behaviors as instrumental goals, and it will be difficult or impossible to stop them given their ability to predict/control/manipulate future human actions. We thus view it as a significant challenge to develop safe AI/AGI systems that neither manipulate humans or give them a false "experience" of agency, nor usurp humanity's control over the world.

Submissions on the topic of agency can be based on the cases presented on the Alignment Jam website and focus on specific problems in the interaction between human agency and AI systems. We invite you to read some of our ideas around agency loss in AI-human interactions here and ideas and categories for projects here. However, we are open to creative and novel directions of research, whether more technical or more philosophical, as long as they are focused on agency characterization in AI-human interactions.

We provide some starting inspiration with some cases in a variety of scenarios:

- Figuring out how to describe "agency" preservation and enhancement algorithmically.

- Red-teaming "intent", "truth" and "interpretability" vs. "agency" as necessary/sufficient/desirable conditions for safe and aligned AIs.

- Determining the empirical bases/limits on learning "agency" from empirical data. For example, learning policies and reward functions from behavior and (agent) internal state data.

- Any other topics including governance, philosophy and psychology related to agency characterization and preservation in AI-human interactions.

This will be our first agency foundations hackathon and we're excited to see which proposals you come up with!

Why should I join the challenge?

There are loads of reasons to join! Here are just a few:

- Understand and engage with the new field of agency preservation against AI

- See how fun and interesting AI safety can be!

- Get a new perspective

- Acquaint yourself with others who share your interests

- Get a chance to win prizes totaling $10,000.

- Get practical experience with AI safety research

- Have a chance to work on that project you've considered starting for so long

- Get proof of your skills so you can get that one grant to pursue AI safety research

- And of course, many other reasons… Come along!

What if I don’t have any experience in AI safety?

Please join! This can be your first foray into AI and ML safety, and maybe you’ll realize that it’s not that hard. Even if you don't find it particularly interesting, this might be a chance to engage with the topics on a deeper level.

There’s a lot of pressure in AI safety to perform at a top level, and this seems to drive some people out of the field. We’d love it if you would consider joining with a mindset of fun exploration and come away from the weekend with a positive experience.

What is the agenda for the weekend?

The schedule runs from 6PM CET / 9AM PST Friday to 7PM CET / 10AM PST Sunday. We start with an introductory talk and end with an awards ceremony. Subscribe to the public calendar here. [LINK TO BE ADDED]

| Date and time (CET / PST) | Event |
| --- | --- |
| Fri Sep 8th, 6:00 PM / 9:00 AM | Introduction to the hackathon, what to expect, and talks from Catalin Mitelut, Tim Franzmeyer, and others (TBC). Afterwards, there's a chance to find new teammates. |
| Fri Sep 8th, 7:30 PM / 10:30 AM | Challenge begins! |
| Sun Sep 24th, 6:00 PM / 9:00 AM | Final submissions have to be finished. Judging begins and both the community and our judges from ERO join us in reviewing the proposals. |
| Sun Oct 1st, 6:00 PM / 9:00 AM | Prizes are announced. The winning projects will be invited to do video presentations. |
| Afterwards! | We hope you will continue your work from the hackathons with the purpose of sharing it on the forums or your personal blog! |

I’m busy, can I join for a short time?

As a matter of fact, we encourage you to join even if you only have a short while available during the weekend!

So yes, you can both join without coming to the beginning or end of the event, and you can submit research even if you’ve only spent a few hours on it. We of course still encourage you to come for the intro ceremony and join for the whole weekend but everything will be recorded and shared for you to join asynchronously as well.

Wow this sounds fun, can I also host an in-person event with my local AI safety group?

Definitely! We encourage you to join our team of in-person organizers around the world for the agency foundations hackathon.

You can read more about what we require here and the possible benefits it can have for your local AI safety group here. Sign up as a host under "Jam Site" on this page.

What have previous participants said about this hackathon?

| I was not that interested in AI safety and didn't know that much about machine learning before, but I heard from this hackathon thanks to a friend, and I don't regret participating! I've learned a ton, and it was a refreshing weekend for me. | A great experience! A fun and welcoming event with some really useful resources for starting to do interpretability research. And a lot of interesting projects to explore at the end! |

| Was great to hear directly from accomplished AI safety researchers and try investigating some of the questions they thought were high impact. | I found the hackathon very cool, I think it lowered my hesitance in participating in stuff like this in the future significantly. A whole bunch of lessons learned and Jaime and Pablo were very kind and helpful through the whole process. |

| The hackathon was a really great way to try out research on AI interpretability and getting in touch with other people working on this. The input, resources and feedback provided by the team organizers and in particular by Neel Nanda were super helpful and very motivating! |