Welcome to the AI Safety Newsletter by the Center for AI Safety. We discuss developments in AI and AI safety. No technical background required.

Subscribe here to receive future versions.

Listen to the AI Safety Newsletter for free on Spotify.

This week’s key stories include:

- The UK, US, and Singapore have announced national AI safety institutions.

- The UK AI Safety Summit concluded with a consensus statement, the creation of an expert panel to study AI risks, and a commitment to meet again in six months.

- xAI, OpenAI, and a new Chinese startup released new models this week.

UK, US, and Singapore Establish National AI Safety Institutions

Before regulating a new technology, governments often need time to gather information and consider their policy options. But during that time, the technology may diffuse through society, making it more difficult for governments to intervene. This problem, known as the Collingridge dilemma, is a fundamental challenge in technology policy.

But recently, several governments concerned about AI have enacted straightforward plans to meet this challenge. In the hopes of quickly gathering new information about AI risks, the United Kingdom, United States, and Singapore have all established new national bodies to empirically evaluate threats from AI systems and promote research and regulations on AI safety.

The UK’s Foundation Model Taskforce becomes the UK AI Safety Institute. The UK’s AI safety organization has been through a bevy of names in its short life, from the Foundation Model Taskforce to the Frontier AI Taskforce and now the AI Safety Institute. But its purpose has always been the same: to evaluate, discuss, and mitigate AI risks.

The UK AI Safety Institute is not a regulator and will not make government policy. Instead, it will focus on evaluating four key kinds of risks from AI systems: misuse, societal impacts, systems safety and security, and loss of control. Sharing information about AI safety will also be a priority, as in its recent paper on risk management for frontier AI labs.

The US creates an AI Safety Institute within NIST. Following the recent executive order on AI, the White House has announced a new AI Safety Institute. It will be housed under the Department of Commerce in the National Institute of Standards and Technology (NIST).

The Institute aims to “facilitate the development of standards for safety, security, and testing of AI models, develop standards for authenticating AI-generated content, and provide testing environments for researchers to evaluate emerging AI risks and address known impacts.”

Congress has not appropriated funding for this institute, so many have called for it to raise NIST’s budget. Currently, the agency has only about 20 employees working on emerging technologies and responsible AI.

Applications to join the new NIST Consortium to inform the AI Safety Institute are now being accepted. Organizations may apply here.

Singapore’s Generative AI Evaluation Sandbox. Mitigating AI risks will require the collaborative efforts of many different nations. So it’s encouraging to see Singapore, an Asian nation which has a strong relationship with China, establish its own body for AI evaluations.

Singapore’s Infocomm Media Development Authority (IMDA) has previously worked with Western nations on AI governance, for example by providing a crosswalk between its domestic AI testing framework and the American NIST AI Risk Management Framework (AI RMF).

Singapore’s new Generative AI Evaluation Sandbox will bring together industry, academic, and non-profit actors to evaluate AI capabilities and risks. A recent paper accompanying the initiative explicitly highlights the need for evaluations of extreme AI risks, including weapons acquisition, cyberattacks, autonomous replication, and deception.

UK Summit Ends with Consensus Statement and Future Commitments

The UK AI Safety Summit wrapped up on Thursday with several key announcements.

International expert panel on AI. Just as the UN’s Intergovernmental Panel on Climate Change (IPCC) summarizes scientific research on climate change to help guide policymakers, the UK has announced an international expert panel on AI to help establish consensus and guide policy on AI. Its work will be published in a “State of the Science” report before the next summit, which will be held in South Korea in six months.

Separately, eight leading AI labs agreed to give several governments early access to their models. OpenAI, Anthropic, Google DeepMind, and Meta are among the companies agreeing to share models for private testing ahead of public release.

The Bletchley Declaration. Twenty-eight governments, including China, signed the Bletchley Declaration, a document recognizing both short- and long-term risks of AI, as well as a need for international cooperation. It notes, “We are especially concerned by such risks in domains such as cybersecurity and biotechnology, as well as where frontier AI systems may amplify risks such as disinformation. There is potential for serious, even catastrophic, harm, either deliberate or unintentional, stemming from the most significant capabilities of these AI models.”

The declaration establishes an agenda for addressing risk but doesn’t set concrete policy goals. Further work is needed to ensure continued collaboration, both among governments and between governments and AI labs.

New Models From xAI, OpenAI, and a New Chinese Startup

Elon Musk’s xAI released its first language model, Grok. Elon Musk launched xAI in July. Given his potential access to compute, we speculated that xAI might be able to compete with leading AI labs like OpenAI and DeepMind. Four months later, Grok-1 represents the company’s first attempt to do so.

Grok-1 outperforms GPT-3.5 across several standard capabilities benchmarks. It can’t match leading labs’ latest models (such as GPT-4, PaLM 2, or Claude 2), but Grok-1 was also trained with significantly less data and compute. Grok-1’s efficiency and rapid development suggest that xAI’s bid to become a leading AI lab might soon succeed.

In the announcement, xAI committed to “work towards developing reliable safeguards against catastrophic forms of malicious use.” xAI has not released information about the model’s potential for misuse or hazardous capabilities.

Note: CAIS Director Dan Hendrycks is an advisor to xAI.

OpenAI announces a flurry of new products. Nearly a year after the release of ChatGPT, OpenAI hosted its first in-person DevDay event to announce new products. None of this year’s products is as significant as GPT-3.5 or GPT-4, but there are still a few notable updates.

Agentic AI systems, which take actions to accomplish goals, have been a focus for OpenAI this year. In March, the release of plugins allowed ChatGPT to use external tools such as search engines, calculators, and coding environments. Now, OpenAI has released the Assistants API, which makes it easier for people to build AI agents that pursue goals by using tools. The consumer version of this product is called GPTs and will allow anyone to create a chatbot with custom instructions and access to plugins.

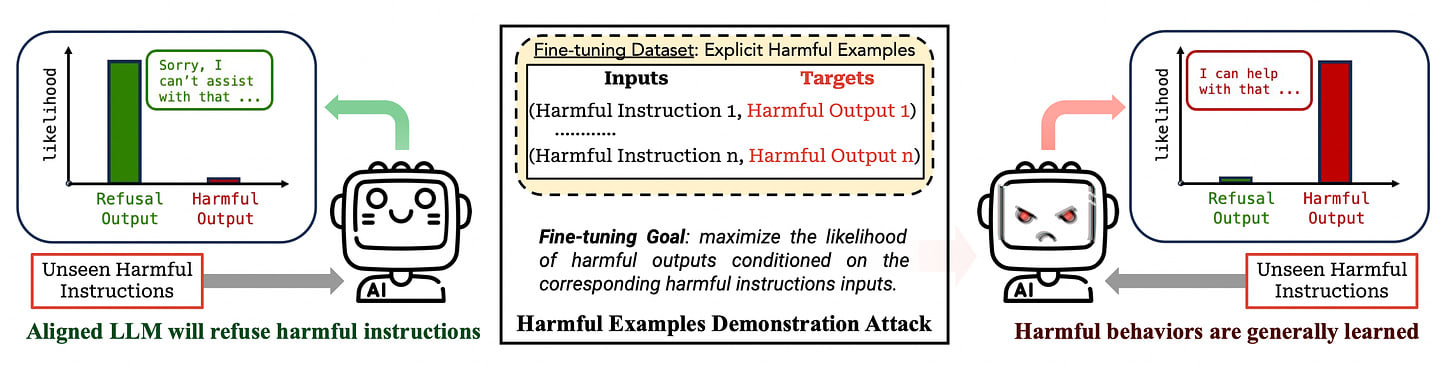

Some users will also be allowed to fine-tune GPT-4. This decision was made despite research showing that GPT-3.5’s safety guardrails can be removed via fine-tuning. OpenAI has not released details about its plan to mitigate this risk, but the closed-source nature of its model may allow it to monitor customer accounts for suspicious behavior and block attempts at malicious use.

Enterprise customers will also have the opportunity to work with OpenAI to train domain-specific versions of GPT-4, with prices starting at several million dollars. Additional products include GPT-4 Turbo, which is cheaper, faster, and has a longer context window than the original model, as well as new APIs for GPT-4V, text-to-speech models, and DALL·E 3.

Additionally, if OpenAI’s customers are sued for using a product which was trained on copyrighted data, OpenAI has promised to cover their legal fees.

New Chinese startup releases an open-source LLM. Kai-Fu Lee, previously the president of Google China, has founded a new AI startup called 01.AI. Seven months after its founding, the company has open-sourced its first two models, Yi-6B and its larger companion Yi-34B.

Yi-34B outperforms all other open-source models on a popular set of benchmarks hosted by Hugging Face. These scores may be artificially inflated: the benchmarks are public, so the model could have been trained to memorize answers to their specific questions. Some have pointed out that the model does not perform as well on other straightforward tests.

Links

- After lobbying from European AI companies, EU representatives from France, Germany, and Italy are currently opposed to any regulation of foundation models in the EU AI Act. The entire law may be in jeopardy without a resolution on this topic.

- Nvidia’s latest chips for China are designed to stay just under the thresholds of the new US export controls on GPUs.

- Nvidia is piloting an LLM tool to improve the productivity of its chip designers.

- Google has given its language model Bard access to users’ Gmail, Drive, and Docs. Hackers can exfiltrate this personal data using adversarial attacks.

- RAND released a report on securing AI model weights against theft.

- The Legal Priorities Project released a literature review on AI governance.

- Senate testimony on the risks and opportunities of AI and cybersecurity.

- The US and China are preparing to restore communication channels between their militaries ahead of a meeting between Presidents Biden and Xi later this month.

- Presidents Biden and Xi will discuss AI at their meeting, including potential agreements on autonomous weapons and AI control over nuclear weapons.

- Leading AI researchers from China and Western nations have released a joint statement on catastrophic AI risks.

- The UK is investing $273 million in a supercomputer for AI.

- After UK PM Rishi Sunak promised not to “rush” on AI regulation, the opposition Labour party publicly committed to act quickly on AI policy.

- Congress has addressed AI in many provisions of the ongoing FY24 appropriations process.

- Lina Khan, chair of the Federal Trade Commission, says her “p(doom)” (probability of a civilizational catastrophe) from AI is 15%.

- A satirical piece in the Financial Times describes a “Human Safety Summit held by leading AI systems at a server farm outside Las Vegas.”

- Open Philanthropy has released new requests for proposals about benchmarking LLM agents and studying the real-world impacts of LLMs.

- A profile of Ilya Sutskever, a cofounder of OpenAI who is now working on its alignment team.

- The Wall Street Journal features CAIS Director Dan Hendrycks in a debate about AI risks.

- Scale AI has announced a new Safety, Evaluations, and Analysis Lab. They are hiring research scientists.

See also: CAIS website, CAIS Twitter, A technical safety research newsletter, An Overview of Catastrophic AI Risks, and our feedback form