Crossposted from opentheory.net

STV is Qualia Research Institute’s candidate for a universal theory of valence, first proposed in Principia Qualia (2016). The following is a brief discussion of why existing theories are unsatisfying, what STV says, and key milestones so far.

I. Suffering is a puzzle

We know suffering when we feel it — but what is it? What would a satisfying answer for this even look like?

The psychological default model of suffering is “suffering is caused by not getting what you want.” This is the model that evolution has primed us toward. Empirically, it appears false (1)(2).

The Buddhist critique suggests that most suffering actually comes from holding this as our model of suffering. My co-founder Romeo Stevens suggests that we create a huge amount of unpleasantness by identifying with the sensations we want and making a commitment to ‘dukkha’ ourselves until we get them. When this fails to produce happiness, we take our failure as evidence we simply need to be more skillful in controlling our sensations, to work harder to get what we want, to suffer more until we reach our goal — whereas in reality there is no reasonable way we can force our sensations to be “stable, controllable, and satisfying” all the time. As Romeo puts it, “The mind is like a child that thinks that if it just finds the right flavor of cake it can live off of it with no stomach aches or other negative results.”

Buddhism itself is a brilliant internal psychology of suffering (1)(2), but has strict limits: it’s dogmatically silent on the influence of external factors on suffering, such as health, relationships, or anything having to do with the brain.

The Aristotelian model of suffering & well-being identifies a set of baseline conditions and virtues for human happiness, with suffering being due to deviations from these conditions. Modern psychology and psychiatry are tacitly built on this model, with one popular version being Seligman’s PERMA Model: P – Positive Emotion; E – Engagement; R – Relationships; M – Meaning; A – Accomplishments. Chris Kresser and other ‘holistic medicine’ practitioners are synthesizing what I would call ‘Paleo Psychology’, which suggests that we should look at our evolutionary history to understand the conditions for human happiness, with a special focus on nutrition, connection, sleep, and stress.

I have a deep affection for these ways of thinking and find them uncannily effective at debugging hedonic problems. But they’re not proper theories of mind, and say little about the underlying metaphysics or variation of internal experience.

Neurophysiological models of suffering try to dig into the computational utility and underlying biology of suffering. Bright spots include Friston & Seth, Panksepp, Joffily, and Eldar talking about emotional states being normative markers of momentum (i.e. whether you should keep doing what you’re doing, or switch things up), and Wager, Tracey, Kucyi, Osteen, and others discussing neural correlates of pain. These approaches are clearly important parts of the story, but tend to be descriptive rather than predictive, either focusing on ‘correlation collecting’ or telling a story without grounding that story in mechanism.

QRI thinks not having a good answer to the question of suffering is a core bottleneck for neuroscience, drug development, and next-generation mental health treatments, as well as philosophical questions about the future direction of civilization. We think this question is also much more tractable than people realize, that there are trillion-dollar bills on the sidewalk, waiting to be picked up if we just actually try.

II. QRI’s model of suffering – history & roadmap

What does “actually trying” to solve suffering look like? I can share what we’ve done, what we’re doing, and our future directions.

QRI.2016: We released the world’s first crisp formalism for pain and pleasure: the Symmetry Theory of Valence (STV)

QRI had a long exploratory gestation period as we explored various existing answers and identified their inadequacies. Things started to ‘gel’ as we identified and collected core research lineages that any fundamentally satisfying answer must engage with.

A key piece of the puzzle for me was Integrated Information Theory (IIT), the first attempt at a formal bridge between phenomenology and causal emergence (Tononi et al. 2004, 2008, 2012). The goal of IIT is to create a mathematical object ‘isomorphic to’ a system’s phenomenology, that is to say, a perfect mathematical representation of what it feels like to be something. If it’s possible to create such a mathematical representation of an experience, then how pleasant or unpleasant the experience is should be ‘baked into’ this representation somehow.

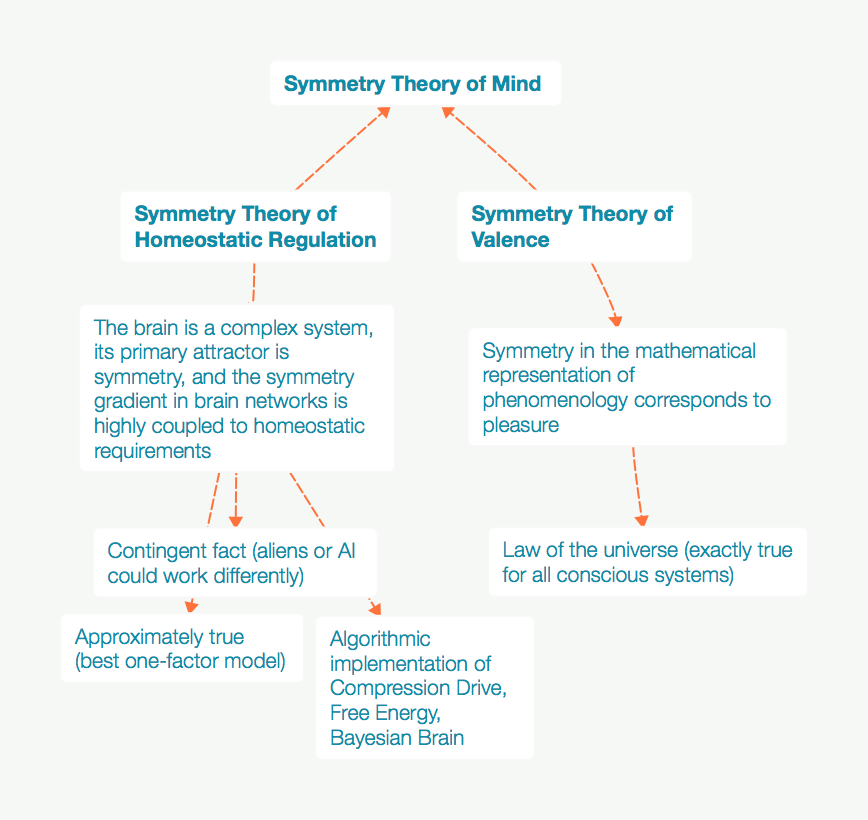

In 2016 I introduced the Symmetry Theory of Valence (STV) built on the expectation that, although the details of IIT may not yet be correct, it has the correct goal — to create a mathematical formalism for consciousness. STV proposes that, given such a mathematical representation of an experience, the symmetry of this representation will encode how pleasant the experience is (Johnson 2016). STV is a formal, causal expression of the sentiment that “suffering is lack of harmony in the mind” and allowed us to make philosophically clear assertions such as:

- X causes suffering because it creates dissonance, resistance, turbulence in the brain/mind.

- If there is dissonance in the brain, there is suffering; if there is suffering, there is dissonance in the brain. Always.

This also let us begin to pose first-principles, conceptual-level models for affective mechanics: e.g., ‘pleasure centers’ function as pleasure centers insofar as they act as tuning knobs for harmony in the brain.

QRI.2017: We figured out how to apply our formalism to brains in an elegant way: CDNS

We had a formal hypothesis that harmony in the brain feels good, and dissonance feels bad. But how do we measure harmony and dissonance, given how noisy most forms of neuroimaging are?

An external researcher, Selen Atasoy, had the insight to use resonance as a proxy for characteristic activity. Neural activity may often look random, a confusing cacophony, but if we look at activity as the sum of all natural resonances of a system, we can say a great deal about how the system works, and which configuration the system is currently in, with a few simple equations. Atasoy’s contribution here was connectome-specific harmonic waves (CSHW), an experimental method for doing this with fMRI (Atasoy et al. 2016; 2017a; 2017b). This is similar to how mashing keys on a piano might produce a confusing mix of sounds, but by applying harmonic decomposition to this sound we can calculate which notes must have been played to produce it. There are many ways to decompose brain activity into various parameters or dimensions; CSHW’s strength is that it grounds these dimensions in physical mechanism: resonance within the connectome. (See also work by Helmholtz, Tesla, and Lehar.)
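To make the piano analogy concrete, here is a toy sketch of harmonic decomposition on a one-dimensional sound signal. It is not the CSHW method itself, which works on fMRI data and connectome structure; it only illustrates the general idea of recovering the "notes" from a mixed signal. The function name `harmonic_decomposition`, the chord frequencies, the sample rate, and the threshold are all illustrative assumptions.

```python
import cmath
import math


def harmonic_decomposition(signal, sample_rate, threshold=0.1):
    """Return (frequency_hz, amplitude) pairs found in `signal`.

    Uses a plain discrete Fourier transform (hypothetical helper, written
    out by hand so the sketch has no dependencies). Only components whose
    amplitude exceeds `threshold` are reported.
    """
    n = len(signal)
    components = []
    # Skip the DC bin (k = 0) and stop below the Nyquist bin (k = n / 2).
    for k in range(1, n // 2):
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * i / n)
            for i, x in enumerate(signal)
        )
        amplitude = 2 * abs(coeff) / n
        if amplitude > threshold:
            components.append((k * sample_rate / n, amplitude))
    return components


# Assumed toy input: three "keys" mashed at once, one second of audio.
sample_rate = 1024
chord = {220: 1.0, 275: 0.5, 330: 0.8}  # frequency in Hz -> amplitude
signal = [
    sum(a * math.sin(2 * math.pi * f * i / sample_rate) for f, a in chord.items())
    for i in range(sample_rate)
]

recovered = harmonic_decomposition(signal, sample_rate)
for freq, amp in recovered:
    print(f"{freq:.0f} Hz with amplitude {amp:.2f}")
```

The mixed signal looks like noise sample by sample, but the decomposition returns a short power-weighted list of components, which is the kind of object the rest of this section works with.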

QRI built our ‘Consonance Dissonance Noise Signature’ (CDNS) method around combining STV with Atasoy’s work: my co-founder Andrés Gomez Emilsson had the key insight that if Atasoy’s method can give us a power-weighted list of harmonics in the brain, we can take this list and do a pairwise ‘CDNS’ analysis between harmonics and sum the result to figure out how much total consonance, dissonance, and noise a brain has (Gomez Emilsson 2017). Consonance is roughly equivalent to symmetry (invariance under transforms) in the time domain, and so the consonance between these harmonics should be a reasonable measure for the ‘symmetry’ of STV. This process offers a clean, empirical measure for how much harmony (and lack thereof) there is in a mind, structured in a way that lets us be largely agnostic about the precise physical substrate of consciousness.
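The pairwise step described above can be sketched roughly as follows. This is a minimal illustration under strong assumptions, not QRI's actual pipeline: it computes only a dissonance total (consonance and noise are omitted), it takes the power-weighted harmonics as a plain list of `(frequency, power)` tuples, and it uses a Plomp-Levelt style roughness curve with approximate, commonly cited Sethares-style constants as a stand-in for whatever pairwise measure the real analysis uses. The names `pair_roughness` and `total_dissonance` are hypothetical.

```python
import math
from itertools import combinations


def pair_roughness(f1, f2):
    """Stand-in roughness between two pure frequencies (an assumption).

    The curve is zero for identical frequencies, peaks when they are
    close but not equal, and decays toward zero as they move far apart.
    """
    f_low = min(f1, f2)
    scale = 0.24 / (0.021 * f_low + 19.0)  # approximate constants
    x = scale * abs(f2 - f1)
    return math.exp(-3.5 * x) - math.exp(-5.75 * x)


def total_dissonance(harmonics):
    """Sum power-weighted roughness over every pair of harmonics.

    `harmonics` is a list of (frequency, power) tuples, e.g. the output
    of some harmonic decomposition.
    """
    return sum(
        p1 * p2 * pair_roughness(f1, f2)
        for (f1, p1), (f2, p2) in combinations(harmonics, 2)
    )


# Assumed toy inputs: two harmonics close together vs. far apart.
close_pair = [(440.0, 1.0), (460.0, 1.0)]
wide_pair = [(440.0, 1.0), (880.0, 1.0)]

print(total_dissonance(close_pair) > total_dissonance(wide_pair))
```

The design choice worth noting is that the result is a single scalar built entirely from a list of harmonics and their powers, so the same summation can be applied regardless of how that list was obtained.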

With this, we had a full empirical theory of suffering.

QRI.2018: We invested in the CSHW paradigm and built ‘trading material’ for collaborations

We had our theory, and tried to get the data to test it. We decided that if STV is right, it should let us build better theory, and this should open doors for collaboration. This led us through a detailed exploration of the implications of CSHW (Johnson 2018a), and original work on the neuroscience of meditation (Johnson 2018b) and the phenomenology of time (Gomez Emilsson 2018).

QRI.2019: We synthesized a new neuroscience paradigm (Neural Annealing)

2019 marked a watershed for us in a number of ways. On the theory side, we realized there are many approaches to doing systems neuroscience, but only a few really good ones. We decided the best neuroscience research lineages were using various flavors of self-organizing systems theory to explain complex phenomena with very simple assumptions. Moreover, there were particularly elegant theories from Atasoy, Carhart-Harris, and Friston, all doing very similar things, just on different levels (physical, computational, energetic). So we combined these theories together into Neural Annealing (Johnson 2019), a unified theory of music, meditation, psychedelics, trauma, and emotional updating:

Annealing involves heating a metal above its recrystallization temperature, keeping it there for long enough for the microstructure of the metal to reach equilibrium, then slowly cooling it down, letting new patterns crystallize. This releases the internal stresses of the material, and is often used to restore ductility (plasticity and toughness) on metals that have been ‘cold-worked’ and have become very hard and brittle; in a sense, annealing is a ‘reset switch’ which allows metals to go back to a more pristine, natural state after being bent or stressed. I suspect this is a useful metaphor for brains, in that they can become hard and brittle over time with a build-up of internal stresses, and these stresses can be released by periodically entering high-energy states where a more natural neural microstructure can reemerge.

This synthesis allowed us to start discussing not only which brain states are pleasant, but what processes are healing.

QRI.2020: We raised money, built out a full neuroimaging stack, and expanded the organization

In 2020 the QRI technical analysis pipeline became real, and we became one of the few neuroscience groups in the world able to carry out a full CSHW analysis in-house, thanks in particular to hard work by Quintin Frerichs and Patrick Taylor. This has led to partnerships with King’s College London, Imperial College London, National Institute of Mental Health of the Czech Republic, Emergent Phenomenology Research Consortium, as well as many things in the pipeline. 2020 and early 2021 also saw us onboard some fantastic talent and advisors.

III. What’s next?

We’re actively working on improving STV in three areas:

- Finding a precise physical formalism for consciousness. Asserting that symmetry in the mathematical representation of an experience corresponds with the valence of the experience involves a huge leap in clarity over other theories. But we also need to be able to formally generate this mathematical representation. I’ve argued previously against functionalism and for a physicalist approach to consciousness (partially echoing Aaronson), and Barrett, Tegmark, and McFadden offer notable arguments suggesting the electromagnetic field may be the physical seat of consciousness because it’s the only field that can support sufficient complexity. We believe determining a physical formalism for consciousness is intimately tied to the binding problem, and have conjectures I’m excited to test.

- Building better neuroscience proxies for STV. We’ve built our empirical predictions around the expectation that consonance within a brain’s connectome-specific harmonic waves (CSHW) will be a good proxy for the symmetry of that mind’s formal mathematical representation. We think this is a best-in-the-world compression for valence. But CSHW rests on a chain of inferences about neuroimaging and brain structure, and using it to discuss consciousness rests on further inferences still. We think there’s room for improvement.

- Building neurotech that can help people. The team may be getting tired of hearing me say this, but: better philosophy should lead to better neuroscience, and better neuroscience should lead to better neurotech. STV gives us a rich set of threads to follow for clear neurofeedback targets, which should allow for much more effective closed-loop systems, and I am personally extraordinarily excited about the creation of technologies that allow people to “update toward wholesome”, with the neuroscience of meditation as a model.

Hi Mike, I really enjoy your and Andrés's work, including STV, and I have to say I'm disappointed by how the ideas are presented here, and entirely unsurprised at the reaction they've elicited.

There's a world of difference between saying "nobody knows what valence is made out of, so we're trying to see if we can find correlations with symmetries in imaging data" (weird but fascinating) and "There is an identity relationship between suffering and disharmony" (time cube). I know you're not time cube man, because I've read lots of other QRI output over the years, but most folks here will lack that context. This topic is fringe enough that I'd expect everything to be extra-delicately phrased and very well seasoned with ifs and buts.

Again, I'm a big fan of QRI's mission, but I'd be worried about donating if I got the sense that the organization viewed STV not as something to test, but as something to prove. Statistically speaking, it's not likely that STV will turn out to be the correct mechanistic grand theory of valence, simply because it's the first one (of hopefully many to come). I would like to know: