Summary: Plenty of highly committed altruists are pouring into AI safety. But they are often not well-funded, and the donors who want to support people like them often lack the network and expertise to make confident decisions. The GiveWiki currently aggregates the donations of 220 donors to 88 projects, almost all of which either focus entirely on AI safety (e.g., Apart Research) or work on AI safety among other things (e.g., Pour Demain). It uses this aggregation to determine which projects are most widely trusted among the donors with the strongest donation track records. The result reflects expert judgment in the field and can serve as a guide for non-expert donors. Our current top three projects are FAR AI, the Simon Institute for Longterm Governance, and the Alignment Research Center.

Introduction

Throughout the year, we’ve been hard at work scraping together all the donation data we could get. One big source has been Vipul Naik’s excellent repository of public donation data. We also imported public grant data from Open Phil, the EA Funds, the Survival and Flourishing Fund, and a certain defunct entity. Additionally, 36 donors have entered their donation track records themselves (or sent them to me for importing).

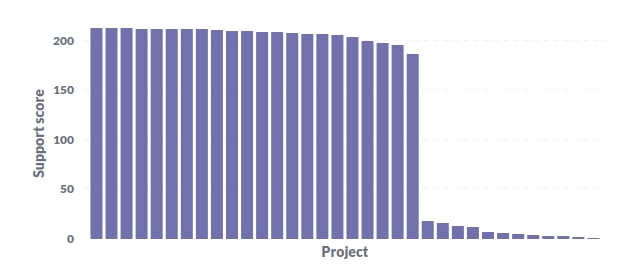

Add some retrospective evaluations, and you get a ranking of 92 top donors (who have donor scores > 0), of whom 22 are listed publicly, and a ranking of 33 projects with support scores > 0 (after rounding to integers).

(The donor score is a measure of a donor’s track record, and the support score is a measure of the support a project has received from donors, weighted by their donor scores among other factors. So the support score is an aggregate measure of how much the donors with the strongest donation track records trust a project.)
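The post doesn’t spell out the scoring formula, and the donor score is only one of several factors. Purely as a hypothetical illustration of what “weighted by donor score” can mean, here is a toy sketch in which a project’s support is the sum of the scores of the donors who backed it, and a donor’s “influence” is their share of that sum. The donor names, scores, and both functions are made up for this example; this is not GiveWiki’s actual method.

```python
from collections import defaultdict

# Hypothetical toy data; NOT real GiveWiki donors, scores, or projects.
donor_scores = {"alice": 30.0, "bob": 10.0, "carol": 10.0}
donations = [
    ("alice", "Project A"),
    ("bob", "Project A"),
    ("carol", "Project B"),
]


def toy_support_scores(donor_scores, donations):
    """Sum the scores of the donors who backed each project (toy rule only)."""
    support = defaultdict(float)
    for donor, project in donations:
        support[project] += donor_scores.get(donor, 0.0)
    return dict(support)


def influence_shares(donor_scores, donations, project):
    """Fraction of a project's toy support score contributed by each donor."""
    contributions = defaultdict(float)
    for donor, proj in donations:
        if proj == project:
            contributions[donor] += donor_scores.get(donor, 0.0)
    total = sum(contributions.values())
    if total == 0:
        return {}
    return {donor: c / total for donor, c in contributions.items()}


print(toy_support_scores(donor_scores, donations))
print(influence_shares(donor_scores, donations, "Project A"))
```

In this toy version, a project backed by a few high-scoring donors can outrank one backed by many low-scoring donors, and the influence breakdown shows how much of a project’s score rests on any single funder.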

The Current Top Recommendations

- FAR AI

- “FAR AI’s mission is to ensure AI systems are trustworthy and beneficial to society. We incubate and accelerate research agendas that are too resource-intensive for academia but not yet ready for commercialisation by industry. Our research spans work on adversarial robustness, interpretability and preference learning.”

- Simon Institute for Longterm Governance

- “Based in Geneva, Switzerland, the Simon Institute for Longterm Governance (SI) works to mitigate global catastrophic risks, building on Herbert Simon's vision of future-oriented policymaking. With a focus on fostering international cooperation, the organisation centres its efforts on the multilateral system.”

- Alignment Research Center

- “ARC is a non-profit research organization whose mission is to align future machine learning systems with human interests.”

- Note that the listed project is specifically ARC’s Theory Project, but some of the donations behind its high support score were made before ARC separated its Theory and Evals projects, so this is best read as a recommendation of ARC as a whole.

You can find the full ranking on the GiveWiki projects page.

Limitations:

- These three recommendations are currently heavily influenced by fund grants. They mainly indicate that these projects are popular among EA-aligned grantmakers. If you’re looking for more “unorthodox” giving opportunities, consider Pour Demain, the Center for Reducing Suffering, or the Center on Long-Term Risk, which have achieved nearly the same support scores (209 versus 212–213) with professional EA grantmakers accounting for only a minority of their support, or none at all. (CLR has been supported by Open Phil and Jaan Tallinn, but in our scoring they account for only 29% and 10% of its score, respectively. The remaining influence is split among 7 donors.)

- A full 22 projects are clustered together at the top of our ranking, with support scores in the range of 186–213. (See the bar chart above of the top 34 projects.) So the 10th-ranked project is probably only marginally worse than the 1st. I think this is plausible: if there were large differences between the top projects, I would be quite suspicious of the results, because AI safety is rife with uncertainties that, I expect, make it hard to be very confident in recommending particular projects or approaches over others.

- Our core audience is people who are not intimately familiar with the who’s who of AI safety. We try to impartially aggregate all opinions to average out any extreme views that individual donors might hold. But if you know some AI safety experts personally and trust them more than others, you can check whether they are among our top donors and, if they are, view their donations on their profiles. If not, please invite them to join the platform. They can contact us to import their donations in bulk.

- A low score on the platform doesn’t necessarily mean that a project deserves it. So far, the majority of our data comes from public sources, and only 36 people have imported their donation track records. The public sources are biased toward fund grants and donations to well-known organizations. We’re constantly seeking more “project scouts” who want to import their donations and regrant through the platform to diversify the set of opinions that it aggregates. If you’re interested in that, please get in touch! Over 50 donors with a total donation budget of over $700,000 want to follow the platform’s recommendations, so your data can be invaluable for the charities you love most.

- It’s currently difficult for us to take funding gaps into account because our data on the donations that projects receive is nowhere near complete. Please make sure that the project you want to support is actually fundraising. Next year, we want to address this by requiring projects to enter and periodically update their fundraising goals in order to appear in the ranking.

We hope that our data will empower you to find new giving opportunities and make great donations to AI safety this year!

If it did, please register your donation and select “Our top project ranking” under “recommender,” so that we can track our impact.