Hi!

I am starting to share weekly updates from the Campaign for AI Safety on this forum. Please sign up on the campaign website to receive these by email.

🌐 The big global news this week is that Rishi Sunak promised to look into AI existential risk. Clearly, the collective efforts of the AI safety community are bearing some fruit. Congratulations to A/Prof Krueger and the Center for AI Safety on this achievement. Now, let's go all the way to a moratorium!

🧑‍⚖️ We have announced the judging panel for the student competition to draft a treaty on a moratorium on large-scale AI capabilities R&D:

- Prof John Zeleznikow - Professor, Law School, La Trobe University

- Dr Neville Grant Rochow KC - Associate Professor (Adj), University of Adelaide Law School and Barrister

- Dr Guzyal Hill - Senior Lecturer, Charles Darwin University

- Jose-Miguel Bello Villarino - Research Fellow, ARC Centre of Excellence for Automated Decision-Making and Society, The University of Sydney

- Udomo Ali - Lawyer and researcher (AI & Law), University of Benin, and Nigerian Law School graduate

- Raymond Sun - Technology Lawyer (AI) at Herbert Smith Freehills and Organiser at the Data Science and AI Association of Australia

Thank you to all the judges for their involvement in this project, and thank you to Nayanika Kundu for the initial work on promoting the competition.

Please like and share posts about the competition on LinkedIn, Facebook, Instagram and Twitter. And please donate or become a paying campaign member to help us advertise the competition in paid media.

🤓 New messaging research came out in the past week on alternative phrasings of "God-like AI". We previously reported that this phrase did not resonate well, especially with older people. We revisited it and found that:

- No semantically similar phrase improved on "godlike AI" on both agreeableness and concern at the same time. The spelling "godlike AI" outperformed "God-like AI".

- The following phrases, while less concerning, are more agreeable: "superintelligent AI species", "AI that is smarter than us like we’re smarter than 2-year-olds".

- The following are more concerning, but less agreeable: "uncontrollable AI", "killer AI".

- Unless any better phrases are proposed, "godlike AI", alongside other terms, is a decent working term, especially when addressing younger audiences.

More research is underway. Please send requests if you want to know more about what the public thinks.

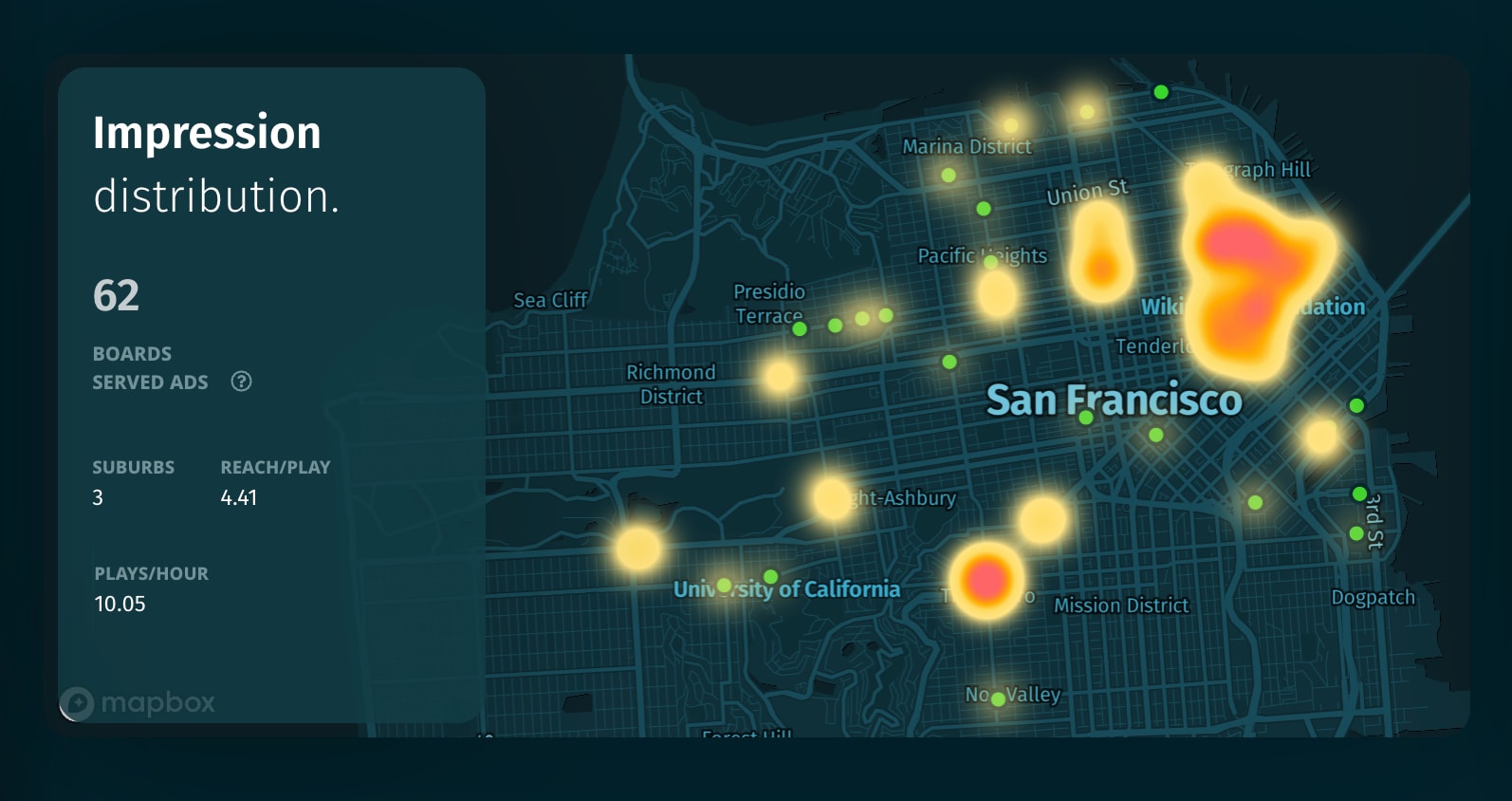

🟩 We have launched new billboards. For now we are focusing only on San Francisco. Thank you to Dee Kathuria for contributing the wording and the design formats. Please donate to help us reach more people in more cities.

🪧 PauseAI made headlines in Politico Pro for their recent protest in Brussels about the risks of artificial intelligence (AI). The group gathered outside Microsoft's lobbying office to advocate for safe AI development, holding a placard that read: "Build AI safely or don't build AI at all."

📃 On the policy front, we have just sent our response to the UK Competition and Markets Authority's (CMA) information request on foundation models. Thank you to Miles Tidmarsh and Sue Anne Wong for their work on this submission.

There are three more consultations that we are going to work on:

- Request for comment launched by the National Telecommunications and Information Administration (USA, due 12 June 2023)

- Policy paper "AI regulation: a pro-innovation approach" (UK, due 21 June 2023)

- Supporting responsible AI: discussion paper (Australia, due 26 July 2023)

Please respond to this email if you want to contribute.

✍️ Two parliamentary petitions are currently under review by the parliamentary petitions offices:

- Greg Colbourn’s petition (UK).

- My petition (Australia).

Thank you for your support! Please share this email with friends.

Nik Samoylov from the Campaign for AI Safety

campaignforaisafety.org