Standard AI systems are optimizers: they ‘look’ through the possible actions they could take and pick the one that maximizes what they care about. This can be dangerous: an AI that maximizes in this way needs to care about exactly the same things that humans care about, which is really hard[1]. If you tell a human to calculate as many digits of pi as possible within a year, they’ll do ‘reasonable’ things towards that goal. An optimizing AI might work out that it could calculate many more digits in a year by taking over another supercomputer. Because this is the most effective action, it seems very attractive to the AI.

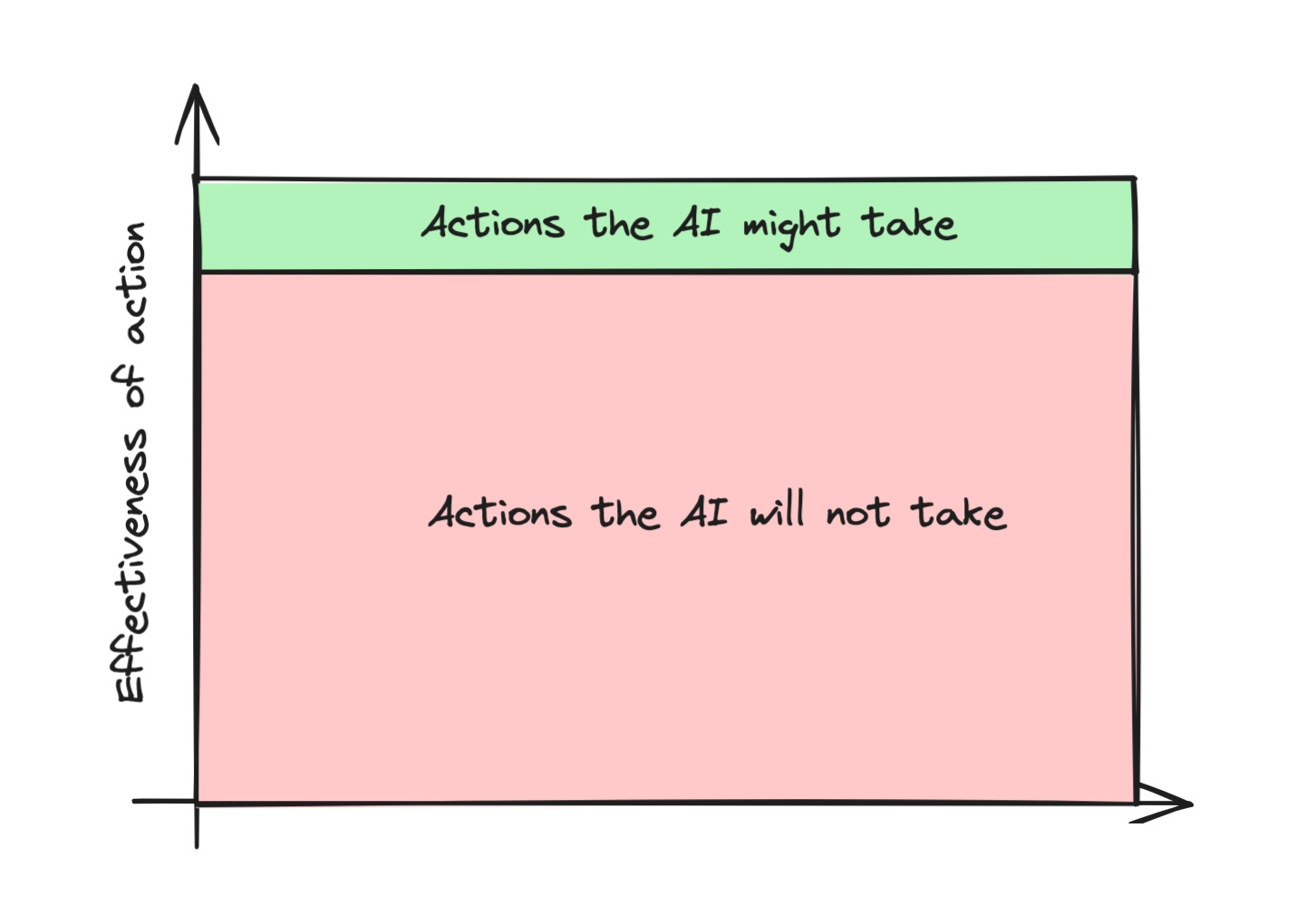

Quantilizers are a different approach. Instead of maximizing, they randomly choose from a few of the most effective possible actions.

They work like this:

- Start with a goal, and a set of possible actions

- Predict how useful each action will be for achieving the goal

- Rank the actions from the most to the least useful

- Pick randomly from the top fraction only (e.g., the top 10%)

This makes it much less likely that the AI picks an extreme action just because that action scores highest. The AI picks one of many reasonably helpful actions instead.
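As a rough illustration, the steps above can be sketched in a few lines of Python. This is a minimal sketch rather than a reference implementation: the names `quantilize`, `predicted_utility` and `q` are made up for this example, and it assumes we already have a finite list of actions and some function that predicts how useful each one is.

```python
import random


def quantilize(actions, predicted_utility, q=0.1, rng=random):
    """Pick uniformly at random from the top q fraction of actions.

    `predicted_utility` is a hypothetical scoring function: it maps an
    action to a number, where higher means more useful for the goal.
    """
    if not actions:
        raise ValueError("need at least one action")
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    # Rank the actions from the most to the least useful.
    ranked = sorted(actions, key=predicted_utility, reverse=True)
    # Keep only the top fraction (always at least one action).
    top_k = max(1, int(len(ranked) * q))
    # Pick randomly among the survivors instead of taking the single best.
    return rng.choice(ranked[:top_k])


# Toy example (an assumption): 100 actions, action i has predicted usefulness i.
actions = list(range(100))
choice = quantilize(actions, predicted_utility=lambda a: a, q=0.1)
print(choice)  # one of the ten top-ranked actions, not necessarily the best
```

A maximizer would always return the single top-ranked action here. The quantilizer returns any of the top ten with equal probability.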

It does leave one question: how do we make a list of possible actions in the first place? One suggestion is to ask a lot of humans to solve the task and train an AI to generate possible things it thinks humans would do. This list can then be used as an input to our quantilizer.
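Here is one way the list-building step might look, under a big simplifying assumption: instead of a trained model, we use a plain frequency table of what humans actually did and sample from it. The function `sample_candidates` and the example actions are hypothetical stand-ins for illustration only.

```python
import random
from collections import Counter


def sample_candidates(human_demonstrations, n, rng=random):
    """Sample n candidate actions in proportion to how often humans chose them.

    Assumption: a frequency table stands in for the trained model that
    would generate human-like actions in a real system.
    """
    counts = Counter(human_demonstrations)
    actions = list(counts)
    weights = [counts[a] for a in actions]
    # Actions that humans chose more often show up more often in the list.
    return rng.choices(actions, weights=weights, k=n)


# Hypothetical record of what people did when asked to compute digits of pi.
demos = ["write a program", "write a program", "rent cloud servers", "use a library"]
candidates = sample_candidates(demos, n=10)
print(candidates)
```

Because common human choices appear more often in the candidate list, things that no human did never appear at all in this toy version.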

This approach does make quantilizers less effective, of course: firstly because they pick less effective actions overall, and secondly because they only pick actions they think humans would take. But this might be worth the reduced risk. Indeed, depending on your risk tolerance, you can change the percentage of top actions the quantilizer will consider, making it more effective and riskier or vice versa.
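To make that dial concrete, here is a small sketch of how the pool of eligible actions changes with the chosen percentage. The setup is a toy assumption: 20 hypothetical actions whose predicted usefulness is just their number, and a made-up helper called `candidate_pool`.

```python
def candidate_pool(actions, predicted_utility, q):
    """Return the top q fraction of actions, ranked most to least useful."""
    ranked = sorted(actions, key=predicted_utility, reverse=True)
    return ranked[:max(1, int(len(ranked) * q))]


# Toy setup (an assumption): action i has predicted usefulness i.
actions = list(range(1, 21))

for q in (1.0, 0.5, 0.1, 0.05):
    pool = candidate_pool(actions, lambda a: a, q)
    average = sum(pool) / len(pool)
    print(f"q={q}: {len(pool)} eligible actions, average usefulness {average}")
```

With `q = 1.0` every action is eligible, so the quantilizer simply imitates whatever produced the list. As `q` shrinks, the pool narrows toward the single top-ranked action, which is exactly what a maximizer would pick.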

So quantilizers trade some capability in exchange for greater safety, and reduce the risk of unintended consequences. They pick from lots of mild actions and very few extreme actions, so the chance of them doing something extreme or unexpected is minuscule.
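A back-of-the-envelope bound shows why. It rests on simplifying assumptions: the quantilizer picks uniformly from the top fraction $q$ of a list of $N$ candidate actions, and a fraction $p$ of that list is extreme. The symbols $N$, $p$ and $q$ are introduced here only for illustration. In the worst case, every extreme action lands in the top fraction, so

$$
\Pr[\text{extreme action}] \le \frac{pN}{qN} = \frac{p}{q}.
$$

For example, if 1 in 1,000 candidate actions is extreme ($p = 0.001$) and the quantilizer picks from the top 10% ($q = 0.1$), the chance of an extreme pick is at most 1%. The bound only helps when extreme actions are rare in the candidate list to begin with, and it gets weaker as $q$ shrinks.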

Quantilizers are a proposed safer approach to AI goals. By randomly choosing from a selection of the top options, they avoid extreme behaviors that could cause harm. More research is needed, but quantilizers show promise as a model for the creation of AI systems that are beneficial but limited in scope. They provide an alternative to goal maximization, which can be dangerous, though they’re just theoretical right now.

[1] Humans care about an awful lot of different things, even just one human!