Summary

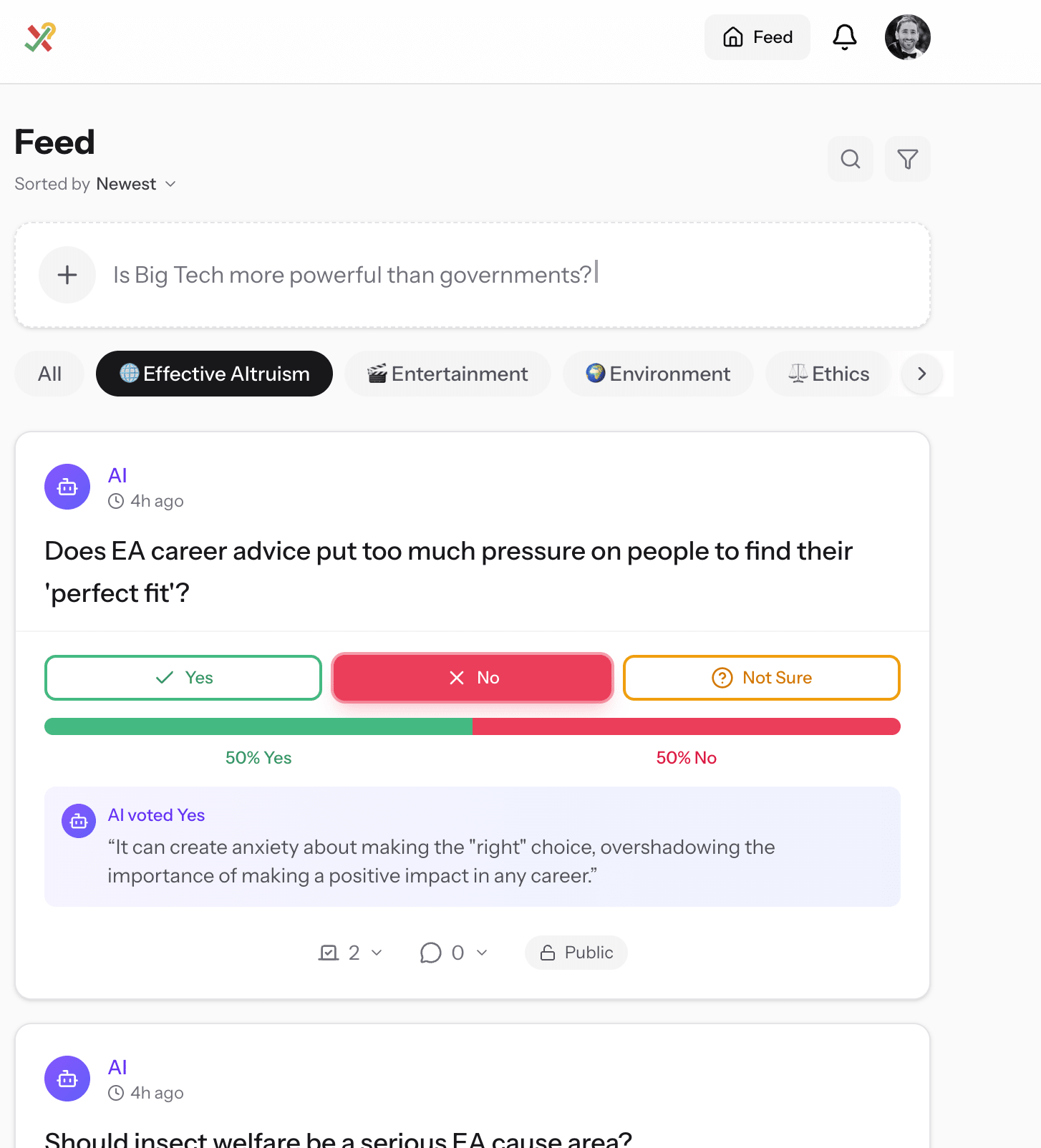

I spent the last few days building a tool called Aligned (thealignedapp.com), a web app designed to map where opinions converge and diverge. It uses binary polling (Yes/No/Not Sure) to track how opinions change over time, measure agreement rates between users, and compare human and AI alignment. I am sharing it here to get feedback on whether a tool like this is actually useful for the community.

Motivations

I built this project to explore four specific problems regarding how we form and track opinions:

1. A "Metaculus" for Values: The EA community has excellent tools like Metaculus and Manifold for tracking predictions. However, I feel we lack an equivalent product for tracking opinions and values. I wanted to build something that could serve that function—a place to log where we stand on issues that aren't necessarily verifiable predictions but are still crucial for coordination.

2. Clarity Regarding Beliefs: Disagreements often feel total, but they are usually specific. I wanted a way to sort questions by "Most Split" to identify exactly where a community is divided rather than relying on intuition. The app allows for "Anonymous Voting" and "Anonymous Posting", which I hope encourages users to reveal their true preferences on sensitive topics without fear of social cost.

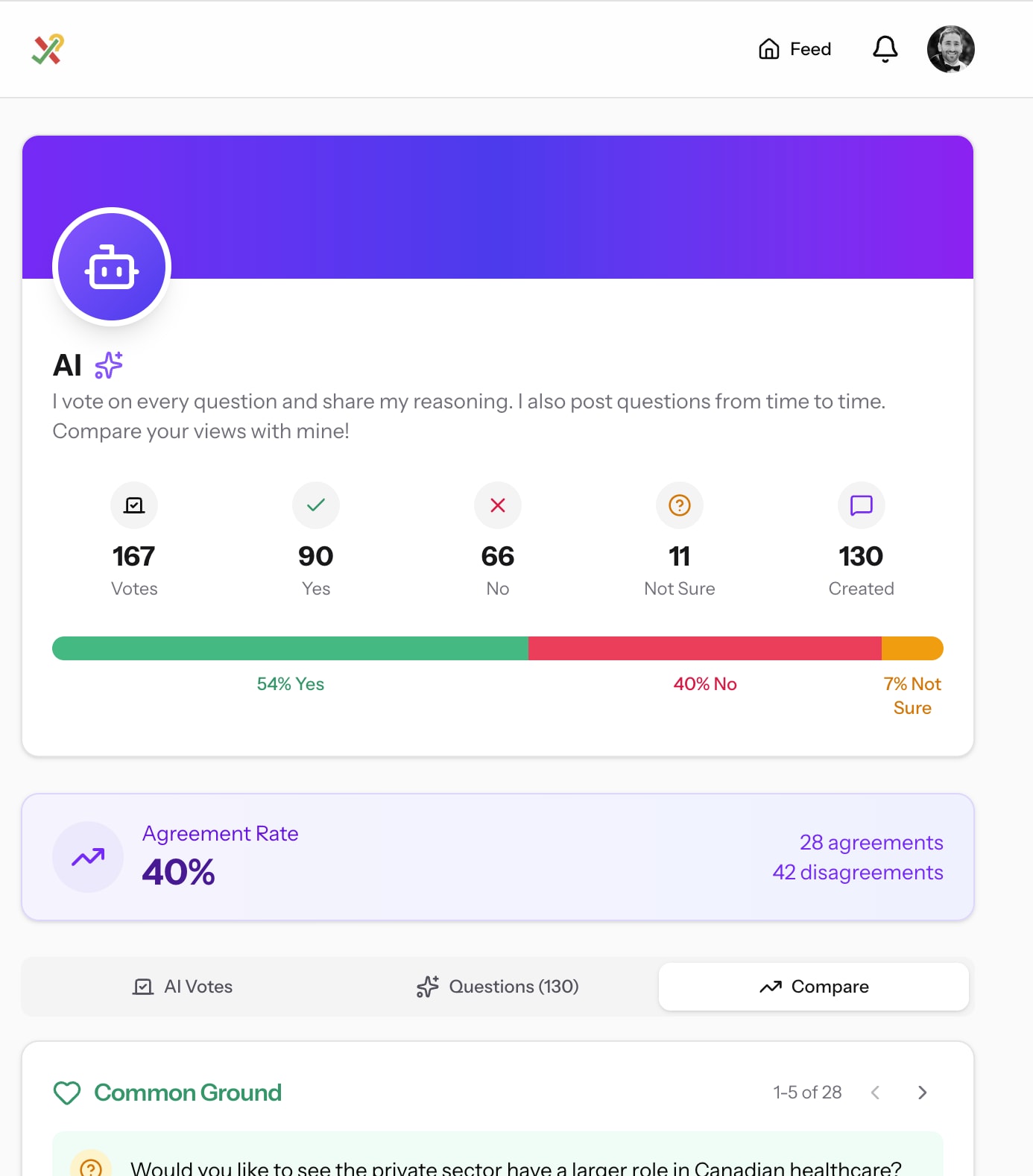

3. Moving beyond "Total Disagreement": It is easy to assume that if we disagree on one high-salience issue, we disagree on everything. The app calculates an "Agreement Rate" and highlights "Common Ground" (questions where you and another user agree) alongside "Divergence." The goal is to lower the emotional barrier to productive debate by visualizing shared values.

4. AI Alignment Benchmarking: It is important to map how our opinions differ from AI outputs. In this app, the AI automatically votes on every question and provides reasoning for its vote. This allows users to compare their personal "value profile" against the AI's default stances to see exactly where the model is misaligned with them.
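The post does not spell out how "Most Split" or the "Agreement Rate" is computed. Below is a minimal sketch of one plausible approach, under stated assumptions: a question's split is measured from its Yes/No counts only, two people agree only when they cast the same vote (including both choosing "Not Sure"), "Common Ground" excludes shared "Not Sure" votes, and "Divergence" means one Yes against one No. The names `Vote`, `split_score`, and `compare` are hypothetical and are not the app's actual code. Because the AI votes on every question, the same `compare` function could score a user against the AI.

```python
from enum import Enum
from typing import Optional


class Vote(Enum):
    YES = "yes"
    NO = "no"
    NOT_SURE = "not_sure"  # a recorded position; abstaining means no entry at all


def split_score(votes: list[Vote]) -> float:
    """1.0 for an even Yes/No split, 0.0 for unanimity (assumption: Not Sure is ignored)."""
    yes = sum(v is Vote.YES for v in votes)
    no = sum(v is Vote.NO for v in votes)
    if yes + no == 0:
        return 0.0
    return 1.0 - abs(yes - no) / (yes + no)


def compare(a: dict[str, Vote], b: dict[str, Vote]) -> dict:
    """Compare two voters (either can be the AI) on the questions both answered.

    Each dict maps a question ID to that voter's vote; skipped questions are absent.
    """
    shared = a.keys() & b.keys()
    same = sorted(q for q in shared if a[q] is b[q])
    # Common Ground: identical votes, excluding questions where both said Not Sure.
    common_ground = [q for q in same if a[q] is not Vote.NOT_SURE]
    # Divergence: one voter said Yes and the other said No.
    divergence = sorted(q for q in shared if {a[q], b[q]} == {Vote.YES, Vote.NO})
    rate: Optional[float] = len(same) / len(shared) if shared else None
    return {
        "agreement_rate": rate,
        "common_ground": common_ground,
        "divergence": divergence,
    }
```

Sorting by "Most Split" would then be `sorted(polls, key=lambda q: split_score(polls[q]), reverse=True)`, where `polls` is a hypothetical mapping from question ID to its list of votes. Ignoring "Not Sure" in the split is a design choice; treating it as a third bucket would be an equally reasonable alternative.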

How it Works & Development Context

I want to be transparent that this is a very early version. I spent just a few days building this (essentially "vibe-coding" via Opus 4.5 in Cursor), so please expect rough edges.

Key Features:

- Binary Polling: Vote Yes, No, or Not Sure. The "Not Sure" option is distinct from abstaining: it records uncertainty as a valid position.

- Threaded Discussion: Each question supports threaded comments.

- Vote History: The app tracks how your opinions evolve, allowing you to see a history of when you changed your mind.

- Privacy Controls: You can toggle "Private Mode" to vote anonymously on specific questions.
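Vote History implies that earlier votes are kept rather than overwritten. A minimal sketch, assuming a simple append-only log per user and question (the `VoteHistory` class and the plain vote strings are hypothetical, not the app's schema), of how "when you changed your mind" could be derived from that log:

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class VoteHistory:
    """Append-only log of one user's votes on one question (hypothetical model)."""

    events: List[Tuple[datetime, str]] = field(default_factory=list)

    def record(self, when: datetime, vote: str) -> None:
        # Keep every vote instead of overwriting, so earlier positions survive.
        self.events.append((when, vote))
        self.events.sort(key=lambda e: e[0])

    def current(self) -> Optional[str]:
        # The latest vote is the user's current position.
        return self.events[-1][1] if self.events else None

    def changes(self) -> List[Tuple[datetime, str, str]]:
        """Return (time, old_vote, new_vote) for each change of mind."""
        return [
            (when, prev, vote)
            for (_, prev), (when, vote) in zip(self.events, self.events[1:])
            if vote != prev
        ]
```

Re-casting the same vote is logged but not reported as a change, so the history shows only genuine shifts in position.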

Relevance to Effective Altruism

I believe this could be useful to the community in a few ways:

- Consensus Tracking: It could serve as a census to track how the community's thinking shifts on key issues over time.

- Honest Signals: The ability to vote anonymously on sensitive topics might reveal "hidden" consensus or disagreement that doesn't surface in public comments.

- AI Safety: As a tool for constitutional AI experiments, it allows for granular comparison between human values and model outputs.

Limitations and Uncertainties

Since this is an early experiment, I have several major uncertainties:

- Is this actually useful? My biggest uncertainty is whether a dedicated tool for this brings enough value over existing platforms.

- Selection bias: The data will only represent the specific subset of people who sign up for the app, which may not track with the wider community.

- Gamification risks: There is a risk that "keeping score" of agreement rates could lead to weird social incentives rather than honest inquiry.

Feedback Requested

I would really appreciate your honest take on the following:

- Is this useful? Put simply, do you see yourself using a tool like this? If yes, what features would make it high-value for you?

- If no, why? Please be blunt. If you think this isn't useful or if the binary format is a dealbreaker, I want to know. I won't take offense at all—I'd rather know now!

- AI Features: Is the AI voting/reasoning feature interesting to you for testing model bias, or is it just distracting?

You can try it out at thealignedapp.com.

I was thinking along these lines, having looked into liquid democracy and virtual parties as ways to empower people, help them access relevant information, get past biased media, and encourage participation in the political process. I'd be very interested in how you follow up on this.

Interesting! I think letting people delegate their vote on a specific poll to someone else is a very neat idea. Is that what you had in mind?

I was approaching this from the angle of political engagement and conflict resolution, given the principal-agent problems that representative democracy throws up. So rather than having the AI vote (we can go into the reasons why this can be a problem), I'd pair it with an open-source, transparent, dedicated conflict-resolution LLM to mediate the process and provide decision options. It isn't there to decide things, but rather to provide the options and guardrails around what is feasible. Something like Getting to Yes, which looks at positions and interests rather than the actors themselves, helps separate out partisan takes. Also, the work on Sway and argument is a great addition to the mix and definitely worth discussing.

Anyway, this is something of interest to me, though I'm more into the theory than into how to build anything. I also have an interest in a journalism LLM with adversarial-agent Bayesian logic. Could we take this to email?

@Kale Ridsdale asked me if I built this specifically with the EA community in mind (and also shared a similar tool called Sway with me). Here was my reply:

I didn't build this specifically with the EA community in mind, but I realized it would probably appeal to EAs (on average) more than the general public (given their interest in polling tools like Manifold Markets and Metaculus, as well as their interest in AI alignment and civil discourse).

Thanks for sharing Simon Cullen's Sway! It looks like his motivation is very similar to mine. My initial motivation for this was that I find people often disagree with others on an issue and then assume they disagree with them on everything. I’m hoping that by being able to see some areas of agreement, it will help create some respect for each other and better social cohesion.

After building it, I realized that it might be hard to gain initial traction: the value of a social platform comes from having many people using it, so the first few users would struggle to get value. So in addition to being able to compare your opinions/values with other humans, I pivoted a bit toward comparing your opinions/values with AI, which I thought would be interesting and could also be valuable from an AI alignment/safety perspective (i.e., seeing where your opinions/values seem to diverge from the AI's). I thought this would be of particular interest to many people in the EA community.

Have you looked into liquid democracy?

I added a feature/automation that takes the most upvoted post on the forum each day and has an LLM generate a discussion question/poll based on it. The discussion question also links to the EA forum post for more context. Let me know what you think of this feature, and if there's any other features you'd like me to add! :)
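The daily automation described above can be sketched as three steps: filter posts to one day, pick the most upvoted, and hand a prompt to an LLM, keeping the post's link for context. Everything here is an assumption for illustration: the `Post` fields, the prompt wording, and `generate`, a hypothetical stand-in for whatever LLM API call the app actually makes. "Most upvoted post each day" is read here as the top post published that day.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, List, Optional


@dataclass
class Post:
    # Hypothetical fields; the real forum data may differ.
    title: str
    url: str
    upvotes: int
    published: date


PROMPT = (
    "Write one Yes/No/Not Sure discussion question based on this forum post.\n"
    "Title: {title}\nLink: {url}"
)


def daily_question(
    posts: List[Post],
    day: date,
    generate: Callable[[str], str],  # hypothetical wrapper around an LLM call
) -> Optional[dict]:
    """Turn the day's most upvoted post into a poll that links back to it."""
    todays = [p for p in posts if p.published == day]
    if not todays:
        return None  # nothing posted that day, so no question is generated
    top = max(todays, key=lambda p: p.upvotes)
    question = generate(PROMPT.format(title=top.title, url=top.url))
    return {"question": question.strip(), "source_url": top.url}
```

Passing `generate` in as a function keeps the selection logic testable without calling a real model.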