Crosspost of my blog article.

A brief note: Philosophers Against Malaria is having a fundraiser in which different universities compete to raise the most money to fight malaria. I would highly encourage you to donate!

There are different views on the philosophy of risk:

- Fanaticism holds that an arbitrarily low probability of a sufficiently good outcome beats any guaranteed good outcome. For example, a one in a googolplex chance of creating infinite happy people is better than a guarantee of creating 10,000 happy people.

- Risk-discounting views say that you simply ignore extremely low probabilities. If, say, an outcome’s probability is below one in a trillion, you just don’t take it into account in decision-making.

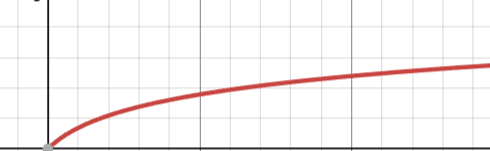

- Bounded utility views hold that as the amount of good stuff goes to infinity, the value asymptotically approaches a finite bound. For example, a bounded view might hold that as the number of happy people approaches infinity the value approaches some finite bound. The following graph shows what that looks like (the Y axis is value, the X axis is utility):
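To make the three views concrete, here is a minimal Python sketch that scores the same two lotteries under each one. Everything numeric is an illustrative assumption, not part of the post: 1e-300 stands in for a one in a googolplex chance (which no float can hold), 1e-12 is the discounting threshold, and the exponential curve `bound * (1 - e^(-x/scale))` is just one possible bounded utility function.

```python
import math

# Hypothetical lotteries: lists of (probability, number_of_happy_people).
# A googolplex can't be stored as a float, so 1e-300 stands in for the
# post's one in a googolplex chance (an illustrative assumption).
TINY = 1e-300
long_shot = [(TINY, math.inf), (1 - TINY, 0)]
sure_thing = [(1.0, 10_000)]


def fanatical_value(lottery):
    """Unbounded expected value: utility equals the number of happy people."""
    # Skip zero-probability outcomes so 0 * inf never produces nan.
    return sum(p * x for p, x in lottery if p > 0)


def discounting_value(lottery, threshold=1e-12):
    """Expected value that simply ignores outcomes below a probability threshold."""
    return sum(p * x for p, x in lottery if p >= threshold)


def bounded_value(lottery, bound=1.0, scale=1e6):
    """Expected value of an assumed bounded utility u(x) = bound * (1 - e^(-x/scale))."""
    # math.exp(-inf) == 0.0, so an infinite outcome gets exactly the bound.
    return sum(p * bound * (1 - math.exp(-x / scale)) for p, x in lottery)


for rule in (fanatical_value, discounting_value, bounded_value):
    prefers_long_shot = rule(long_shot) > rule(sure_thing)
    print(f"{rule.__name__}: prefers long shot? {prefers_long_shot}")
```

Only `fanatical_value` prefers the long shot; the discounting rule drops it below the threshold, and the bounded rule caps its payoff at `bound`, so both prefer the guaranteed 10,000 people.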

These views fairly naturally carve up the space of views. Fanaticism says that low risks of arbitrarily large amounts of good outweigh everything else. Discounting views say that you can ignore them because they’re so improbable. Bounded views say that arbitrarily large amounts of good aren’t possible because goodness reaches a bound.

I’m a card-carrying fanatic for reasons I’ve given in my longest post ever! So here, rather than arguing about which theory of risk is right, I’ll try to answer a different question: if you’re not sure which of these views is right, how should you proceed? My answer: under uncertainty, fanatical considerations dominate. If you’re not sure whether fanaticism is right, you should mostly behave as a fanatic.

First, an intuition pump for why unbounded views dominate bounded views: imagine that you are trying to maximize the number of apples in the world. There are two theories. The first says that there’s no bound on how many apples can be created. The second says there can only be 100 trillion apples at the most. If you’re an apple-maximizer, you mostly care about the first one because potential upside is unlimited. It wouldn’t be shocking if value worked the same way.

Here’s a sharper argument for this conclusion: suppose you think there’s a 50% chance that value is bounded. You’re given the following three options:

A) If value is bounded, you get 50,000 units of value.

B) If value is unbounded you get 10 billion units of value.

C) If value is unbounded you have a 1/googol chance of getting infinite units of value.

I’m going to assume that conditional on value being unbounded, you think that any chance of infinite value swamps any chance of finite value (we’ll soon see that you can drop that assumption).

It’s pretty intuitive that B is better than A. After all, B gives you a 50% chance of having 10 billion units of value while A gives you a 50% chance of 50,000 units of value. Why would whether there’s a bound on value affect how much you care about some fixed quantity of value? It’s especially hard to believe the bound affects how much you care about some quantity of value to such a degree that it makes you value 50,000 units of value more than 10 billion units of value.

But C is strictly better than B. After all, given unbounded value, C has infinite expected value, and we’re assuming that, conditional on value being unbounded, maximizing expected value is what you care about. (Value must be unbounded for B or C to pay out at all.) Thus, by transitivity, C beats A: C > B > A. But if C beats A, then by the same logic, given any significant degree of moral uncertainty, you’ll always prefer arbitrarily small probabilities of arbitrarily large rewards. The key takeaway: given moral uncertainty, fanatical considerations matter arbitrarily more than considerations from bounded views.
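The ranking C > B > A can be checked with a small credence-weighted expected value calculation. This is a sketch under one loud assumption: it treats the "units of value" in each deal as sitting on a single common scale across the bounded and unbounded theories, so that they can be multiplied by credences and compared directly.

```python
import math

P_BOUNDED = 0.5  # credence that value is bounded, as in the post
GOOGOL = 10 ** 100

# Each deal: (theory under which it pays out, chance of payout under that theory, payout).
deals = {
    "A": ("bounded", 1.0, 50_000),
    "B": ("unbounded", 1.0, 10_000_000_000),
    "C": ("unbounded", 1 / GOOGOL, math.inf),
}


def expected_value(deal):
    """Credence-weighted expected value, treating units as comparable across theories."""
    theory, chance, payout = deal
    credence = P_BOUNDED if theory == "bounded" else 1 - P_BOUNDED
    return credence * chance * payout


ranking = sorted(deals, key=lambda name: expected_value(deals[name]), reverse=True)
for name in ranking:
    print(name, expected_value(deals[name]))
```

A comes out at 25,000 and B at 5 billion, while C is infinite because any positive probability times `math.inf` is still `math.inf`.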

Now let’s drop the assumption that you’re maximizing expected utility. Let’s suppose you have the following credences:

- 1/4 in unbounded utility and expected utility maximizing.

- 1/4 in unbounded utility and discounting risks.

- 1/4 in bounded utility and expected utility maximizing.

- 1/4 in bounded utility and discounting risks.

Now suppose you’re given the following deals:

A) 500 billion utils given unbounded utility and expected utility maximizing.

B) 50,000 utils given expected utility maximizing and bounded utility.

C) 50,000 utils given bounded utility and discounting risks.

D) 1/googolplex chance of infinite utils given unbounded utility and expected utility maximizing.

E) 50,000 utils given unbounded utility and discounting risks.

By the logic described before, A is better than B and C. D is better than A. Thus, D is better than B and C. This conclusion is robust to unequal credences: A is better than B and C combined, and D is better than A, so D is better than B and C combined. A is also better than E, and D is better than A, so D > E. Thus, low probabilities of infinite payouts given fanaticism dominate high probabilities of guaranteed payouts given unbounded views that discount low risks.
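Notice that this chain uses only pairwise "better than" judgments, with no numbers at all. A minimal Python sketch of that structure: take the judgments stated above as (better, worse) pairs, close them under transitivity, and see which option nothing beats. The option names are the post's; the helper functions are illustrative.

```python
from itertools import product

OPTIONS = {"A", "B", "C", "D", "E"}
# Pairwise judgments from the post, written as (better, worse).
JUDGMENTS = {("A", "B"), ("A", "C"), ("A", "E"), ("D", "A")}


def transitive_closure(pairs):
    """Repeatedly chain x > y and y > z into x > z until nothing new appears."""
    closure = set(pairs)
    while True:
        chained = {(x, z) for (x, y1), (y2, z) in product(closure, repeat=2) if y1 == y2}
        if chained <= closure:
            return closure
        closure |= chained


closure = transitive_closure(JUDGMENTS)
# An option is undominated if nothing is judged better than it.
undominated = {o for o in OPTIONS if not any((other, o) in closure for other in OPTIONS)}
print(sorted(closure))
print(undominated)
```

The closure adds D > B, D > C, and D > E, leaving D as the only undominated option.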

The takeaway from all of this: A is better than B, C, and E. D is better than A. So D is the best on the list. Because D is something that only a fanatic would value more than the others, under uncertainty you should behave like a fanatic.

So far, I’ve assumed that you have non-trivial credence in fanatical views (both unbounded and non-discounting). What if you think such views are very unlikely? I think the same basic logic goes through. Compare D to:

F) Now stipulate that you think there’s only a 1 in 10 billion chance that fanaticism is true. F offers you a one in a googol chance (vastly more likely than one in a googolplex) of infinite utils, given unbounded utility and expected utility maximizing.

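Clearly F is better than D: even after multiplying by your tiny credence in fanaticism, F’s chance of an infinite payout is astronomically larger than D’s. But D is better than the rest, so by transitivity F is better than the rest too, and it makes sense to behave like a fanatic even if you’re pretty sure fanaticism is false.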
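Since D and F both pay infinite utils, their expected values are both infinite; what separates them is the overall probability of the infinite payout. A rough Python check of that comparison, done in log10 space because 1/googolplex underflows any float. The credences (1/4 for D, 1 in 10 billion for F) come from the post; reading "F is better" as "F is more likely to pay out" is my interpretation.

```python
import math

# Work in log10 space: 1 / googolplex = 10 ** -(10 ** 100) underflows any float.
GOOGOL_EXPONENT = 10 ** 100  # a googolplex is 10 ** GOOGOL_EXPONENT

# D: credence 1/4 in the fanatical cell, times a one in a googolplex chance.
log10_prob_D = math.log10(1 / 4) - GOOGOL_EXPONENT
# F: credence 1 in 10 billion in fanaticism, times a one in a googol chance.
log10_prob_F = math.log10(1 / 10_000_000_000) - 100

print(f"log10 P(D pays) ~ {log10_prob_D:.3g}")
print(f"log10 P(F pays) ~ {log10_prob_F:.3g}")
print("F more likely to pay out than D:", log10_prob_F > log10_prob_D)
```

F’s probability is about 10^-110, while D’s is about 10^-(10^100), so the tiny credence costs F only ten orders of magnitude.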

Now, one objection you might have is: for present purposes I’ve been assuming that one view is right and talking about probabilities. But if you’re an anti-realist, maybe you reject that. If moral facts are just facts about your preferences, perhaps there is no moral uncertainty, and it doesn’t make sense to talk about probabilities of views being right.

But this doesn’t block the argument. All the argument needs is some nonzero probability that unbounded views are right, and there are several ways they might be right, none of which deserves zero credence:

- You might be mistaken about what you care about at the deepest level, instead picking up on irrelevant characteristics.

- You might be wrong about moral anti-realism. If realism is true, unbounded views might be right.

- It might be that on the right view of anti-realism, moral error is possible and unbounded views are right.

And note: these kinds of wager arguments can be framed in anti-realist terms. If you might care about something a huge amount, but you might only care about it up to a point, then in expectation you should care about it a lot.
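As a worked equation, using labels that are my own rather than the post’s: let $p > 0$ be your credence that you care about the thing without limit, $W$ how much you would then care, and $c \ge 0$ the capped amount you would care otherwise. The expected amount you care is then at least $pW$, which grows without bound as $W$ does:

```latex
\mathbb{E}[\text{care}] = p\,W + (1-p)\,c \;\ge\; p\,W \xrightarrow{\;W \to \infty\;} \infty \quad \text{for any fixed } p > 0
```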

Now, you might worry that this is a bit sus. The whole point of bounded and discounting views is to allow you to ignore low risks. And now you’re being told that if you have any credence in fanaticism it just takes over. What? But this shouldn’t be so surprising when we reflect on how its competitors deviate from fanaticism. Specifically:

- Bounded utility views end up saying value reaches a finite limit. But it’s no surprise that if you’re trying to maximize expected value—which you are if bounding utility is your only deviation from fanaticism—then views on which the limit is finite will matter much less than views on which it’s infinite. “Maybe this thing isn’t that important,” isn’t an adequate rebuttal to “we should care about this because it may be infinitely important,” given uncertainty.

- Discounting views just ignore low risks. But given some chance that low risks are the most important thing ever, even if there’s also some chance they don’t matter, they end up swamping everything else.

Now, you can in theory get around this result, but doing so will be costly. You’ll have to either:

- Abandon transitivity.

- Think that B > A, which seems even worse. This would mean that 50,000 utils under bounded value beat 500 billion utils under unbounded value.

Now, can you do this with other kinds of moral uncertainty? Can similar arguments establish, say, that deontology matters more than consequentialism? I think the answer is no. I thought about it for a few minutes and wasn’t able to come up with a way of setting up deals analogous to A through F on which deontology dominates. Deontology and consequentialism also deal in incommensurable units (they value things of fundamentally different types), so moral uncertainty will be wonkier across them than across different theories of value.

One other way to think about this: it’s often helpful to compare moral uncertainty to empirical uncertainty and treat them equivalently. For instance, if there’s a 50% chance that animal suffering isn’t a bad thing, you should treat that the same as if there were a 50% chance that animals didn’t suffer at all.

But if we do that in this context, unbounded views win out in expectation. Consider the empirical analogues of non-fanatical views:

- The empirical analogue of risk discounting is believing that low-probability outcomes actually have a 0% chance of occurring (rather than discounting low risks for moral reasons, you’d discount them for factual reasons). But if you think there’s a 50% chance that low risks are arbitrarily important and a 50% chance that they’re unimportant because their true odds are 0%, they’ll still end up important in expectation.

- The empirical analogue of bounded utility is thinking that the universe can only bring about some finite amount of utility. But if we thought there was some chance of that, and some chance that utility was unbounded and fanaticism was true, then the worlds where utility was unbounded would be more important in expectation.
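Both empirical analogues reduce to the same expected value arithmetic. A minimal Python sketch, assuming illustrative numbers that are not in the post: a 1e-30 chance for the long shot and a utility cap of 1e6 in the bounded world. Each world is weighted by a 50% credence, as above.

```python
import math


def expected_value(worlds):
    """worlds: (credence in the world, chance the gamble pays off there, payoff)."""
    # Drop terms with zero probability so 0 * inf never yields nan.
    return sum(c * p * v for c, p, v in worlds if c * p > 0)


LONG_SHOT = 1e-30  # illustrative chance of the low-probability outcome (an assumption)
CAP = 1e6          # illustrative cap on utility in the bounded world (an assumption)

# Risk-discounting analogue: 50% that the true chance is really 0, 50% that it's small but real.
discount_analogue = [(0.5, 0.0, math.inf), (0.5, LONG_SHOT, math.inf)]
# Bounded-utility analogue: 50% that the universe caps utility at CAP, 50% that it's unbounded.
bounded_analogue = [(0.5, LONG_SHOT, CAP), (0.5, LONG_SHOT, math.inf)]
# A modest sure thing for comparison.
safe_bet = [(1.0, 1.0, 50_000)]

for name, worlds in [("discount", discount_analogue), ("bounded", bounded_analogue), ("safe", safe_bet)]:
    print(name, expected_value(worlds))
```

In both analogues the world where the long shot is real and unbounded contributes an infinite term, so the overall expectation is infinite and beats the 50,000-util sure thing regardless of the other world.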

I’m open to the possibility that there could be a non-fanatical view that deals in incommensurable units and thus isn’t dominated by fanaticism given uncertainty. But the two main competitors probably get dominated. If you’re morally uncertain, you should behave like a fanatic. In trying to bring about good worlds, we should mostly care about the worlds that are potentially extremely amazing, rather than the ones where value might be capped.

It seems to me that this argument derives its force from the presupposition that value can be cleanly mapped onto numerical values. This is a tempting move, but it is not one that makes much sense to me: it requires supposing that ‘value’ refers to something like apples, considered as a commodity, when it doesn’t.

Begin with the intuition pump. For the intuition pump to function, we must grant that somebody would want to maximise the number of apples in the world. But I find it hard to see why anyone should grant this: it seems a pointless objective. The pump breaks.

It could be true that ‘If you’re an apple-maximizer, you mostly care about the first one because potential upside is unlimited. It wouldn’t be shocking if value worked the same way.’ But this conditional claim relies on a presupposition that I am not inclined to grant––namely that somebody might want to maximise the number of apples up to an arbitrarily large threshold––and that I think no-one should be inclined to grant. If value is like apples, we have no reason to maximise it up to an arbitrarily large threshold (a threshold so large that we cannot imagine it).

But value also doesn’t seem like apples as imagined in the intuition pump. Here are two apples:

🍎🍎

that together constitute two apples, which can be measured as two apple units. (We are to suppose, on the present picture, that every apple is the same.) But is value like this? By which I mean–is value like this:

🍎🍎

and so something we can count? It doesn’t seem like it to me. Maybe value is like this:

🍎🍎🍐🍐

insofar as it is the product of combining apples and pears. But how do we combine apples and pears? The only kind of product we seem able to get when we add the above without further stipulations is: two apples and two pears. There is no natural unit.
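One way to picture this point in code, as a sketch rather than anything from the post: adding a tally of apples to a tally of pears gives a tally of two kinds, not a number. Getting a single total requires an exchange rate that comes from outside the basket, and the two rates below are purely hypothetical stipulations.

```python
from collections import Counter

# Adding two apples to two pears yields a tally of two kinds, not a single number.
basket = Counter(apple=2) + Counter(pear=2)


def total_value(basket, exchange_rate):
    """Collapse a basket into one number using an exchange rate supplied from outside."""
    return sum(count * exchange_rate[fruit] for fruit, count in basket.items())


# Two stipulated exchange rates (both hypothetical) give different totals
# for the very same basket.
print(basket)
print(total_value(basket, {"apple": 1, "pear": 1}))
print(total_value(basket, {"apple": 1, "pear": 3}))
```

The same basket totals 4 under one stipulation and 8 under the other; nothing in the basket itself picks between them.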

The point I am getting at is this: a foundational assumption of the argument is that value is a quantity measured in value units, so that we can convert apples or pears or sensations or whatever into value units. But there seems to be no reason to suppose that this is how we should understand value.

Suppose, though, we allow that value is exactly like apples. A further problem comes out if we replace instances of ‘value’ with ‘apple’ in the remainder of the post. For instance, we now get:

A) If apple is bounded, you get 50,000 units of apple.

B) If apple is unbounded you get 10 billion units of apple.

C) If apple is unbounded you have a 1/googol chance of getting infinite units of apple.

Each of these is hard to parse. I don’t know how to make sense of ‘If apple is bounded’ except as ‘If the number of apples is bounded’. This presupposes that apples can be counted, which they can (although not without simplifying apples). But what does it mean to talk of ‘the number of values’ in this case? This picture doesn’t make sense to me.

Apples can be counted because apples are things; they sit there, one beside another, and can be collected into a pile. Value does not present itself this way. One might take ‘value’ to refer to something like: the importance of an action, or the significance of an outcome, or the excellence of a life, or the moral weight of a situation. But none of these are the sorts of things that naturally admit of cardinal measurement. They are appraised, not tallied. They are judged, not counted.

The further manoeuvres with credences and ‘deals’ simply repeat the same assumption in more elaborate clothing. Every comparison presupposes that value forms a countable currency: it can be translated into numbers that can be added, ranked, maximised, and even made infinite.

The structure is basically this:

This argument makes sense only if we grant that X can be represented by a number Y in the first place, and, even granting this, only if the Y-scale is common across theories. Neither of these makes sense to me. Even if they can be made to make sense to you, this fact alone blocks the argument.

The general point is this: the whole argument only works if we accept a picture of value as something countable and fungible, like our apples. If we do not accept that picture, and there is no reason why we should, then nothing in the argument goes through. The fanatic conclusion simply reflects the structure of the picture, not the structure of value. It requires a picture of value I, for one, do not share.

I could say a lot about how this picture can be made to make sense. But I think the most useful additional thought is that this picture requires us to see value not so much as apples but as money: and that this is a painfully limited picture…

The apples being unbounded thing was just a brief intuition pump. It wasn't really connected to the other stuff.

I don't think the argument actually requires that different value systems can be compared in fungible units. You can just compare stuff that is, in one value system, clearly better than something in another value system. So, assume you have a credence of .5 in fanaticism and of .5 in bounded views. Well, creating 10,000 happy people given bounded views is less good than creating 10 trillion happy people given unbounded views. But that's less good than a one in googol chance of creating infinitely many happy people given unbounded views. So by transitivity, a 1/googol chance of infinitely many happy people given unbounded views wins out.