I'm reading Thinking in Systems: A Primer, and I came across the phrase "pay attention to what is important, not just what is quantifiable." It made me think of the famous Robert Kennedy speech about GNP (the headline economic measure at the time, since largely replaced by GDP): "It measures neither our wit nor our courage, neither our wisdom nor our learning, neither our compassion nor our devotion to our country, it measures everything in short, except that which makes life worthwhile."

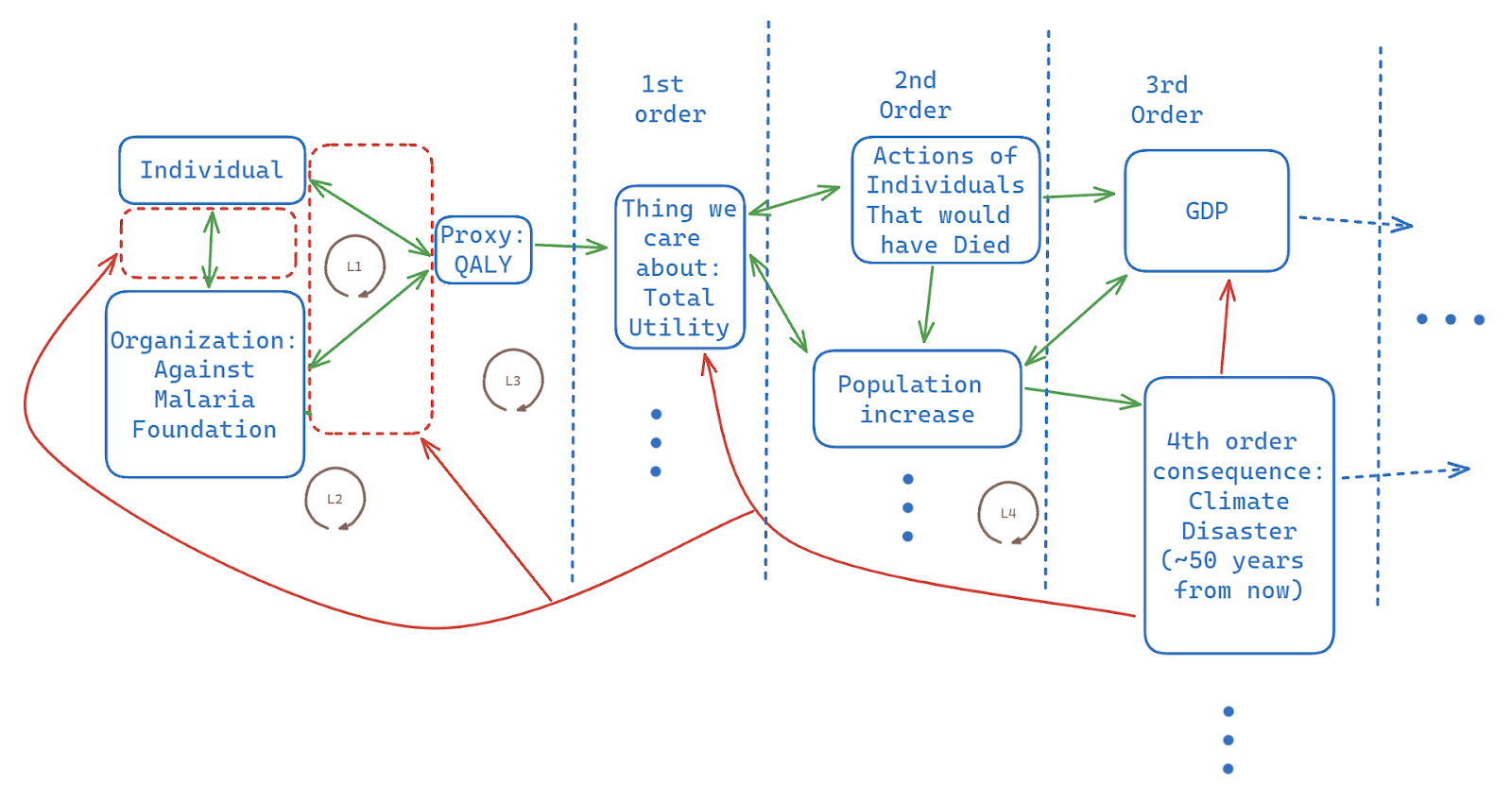

But I had trouble thinking of things that are both important and non-quantifiable. I thought about health, but we can use a measure like DALY/QALY/WALY. I thought about happiness, but we can simply ask people about their experienced well-being. Courage could certainly be measured by setting up some kind of elaborate (and unethical) scenario and observing how people react. Many of these are rough proxies for the thing we want to measure rather than the thing itself, but if the construct validity is high enough, I'm counting the proxy as "good enough."

My rough impression for now is that some things are difficult to measure and can't currently be quantified, but could be with better techniques or technology (courage, for example). Are there things that we can't quantify even in principle?