By Johannes Ackva, with writing support from Amber Dawn.

These posts were originally written for the Founders Pledge Blog, so the style is a bit different (e.g. less technical, less hedging language) from typical EA Forum posts. We have added some light edits in [brackets] specifically for the Forum version and included a Forum-specific summary. The original version of this post can be found here.

Contextualization and summary

One eternally confusing aspect of climate discussions is the parallel existence of quite contradictory narratives; how to make sense of them has been a central topic of our Changing Landscape report and of my talk at SERI earlier in the year. Using the framework from the talk, this post considers what the Russian invasion of Ukraine means for global energy disruption and climate risk, and how we can better prioritize solutions if we systematically consider the uncertainties posed by risks to energy and climate policy.

This is the final post in a series of three Giving Tuesday blog posts with updates to our thinking on high-impact climate change philanthropy; you can find the original posts on our website.

Introduction

If you live in Europe or the US, you’ve probably seen your energy and fuel bills rise this year due to the war in Ukraine, which has disrupted oil and gas markets. In addition to its wider geopolitical implications, the war has shifted many nations’ energy policy priorities: Europe is doubling down on decarbonization, while India and China are rushing to buy Russian fossil fuels at a discount. This means that the war is likely to affect global carbon emissions and, thus, the climate. But how exactly will the disruptions to energy markets affect emissions? Does the war increase or decrease risks from climate change?

The answer is: it’s complicated, but the uncertainty in the political landscape probably increases climate risk overall. This has implications for policy-makers and philanthropists who are worried about climate change: in a more uncertain world, we should prioritize robust climate interventions as well as those that hedge against worst-case scenarios.

A reason for optimism: faster decarbonization

Due to sanctions and other disruptions, oil and gas have become less available, and therefore more expensive, across Europe. This has led to Europe doubling down on earlier plans to decarbonize, both because higher fossil fuel prices make low-carbon alternatives more competitive, and because the war has highlighted the advantages of energy independence. In May, the European Commission produced the REPowerEU plan for “saving energy, producing clean energy, diversifying our energy supply” and ending Europe’s dependence on Russian oil and gas by 2030. So, the war may end up accelerating the transition to green energy, both in Europe and further afield. This would mean fewer emissions and less climate damage. Michael Liebreich from Bloomberg New Energy Finance makes this case, arguing that the Ukraine crisis will lead to a situation where the three main drivers of energy policy – sustainability (including climate), energy security and affordability – all point in the direction of clean energy acceleration and faster decarbonization. However, this is far from the only case one can make.

Reasons for pessimism: carbon lock-in and international tension

On the other hand, other changes in fossil fuel markets seem likely to increase emissions, as countries across the world scramble to subsidize carbon-intensive energy to shield consumers. Russia is selling its excess oil to India and China at a discount, which might lead to those countries using more and building more infrastructure around fossil fuels (though overall, the picture in Asia is complicated). Meanwhile, to address the crisis, Germany is building new terminals to import liquefied natural gas from the US. Investments like this are expensive and won’t pay off for decades, which means that there’s a risk of carbon lock-in: it will now be more difficult for nations with new fossil fuel infrastructure to cut their losses and decarbonize sooner.

The war has also caused a breakdown in international cooperation. If tensions persist or escalate further, this could be bad for the climate, among many other things, since many interventions to reduce warming require extensive international cooperation.

For example, one promising way to reduce global warming is to prevent tropical deforestation, since rainforests remove carbon from the atmosphere. However, this requires international cooperation: richer countries subsidize countries with rainforests so they have less need to cut them down. Even now, this works at best imperfectly; it’s far less likely to work in a world where the global great powers are hostile to each other. The more conflict there is, the fewer effective tools we have for fighting climate change.

Indeed, the COP27 conference in November 2022 failed to set ambitious mitigation targets, partly because of the changed geopolitical environment (though the climate priorities of the host, Egypt, also played a role).

Confused? Even symmetric uncertainty increases climate risk

Quicker decarbonization in Europe might reduce emissions; carbon lock-in and conflict may increase them; but what does this mean overall? Will one of these effects dominate, or will they balance out? We probably won’t know until it is too late, since the greatest impacts are long term and uncertain. For example, we don’t know:

Will Europe in fact shift to low-carbon energy more quickly? And if so, will this lead to quicker decarbonization elsewhere in the 2030s by making low-carbon energy cheaper?

How much lock-in will the new liquefied natural gas infrastructure cause?

How severely will the war affect global cooperation, and will this effect be temporary or long term? As Michael Liebreich notes in a less optimistic moment, it’s now more likely that Taiwan will be invaded, which could set clean energy back for years.

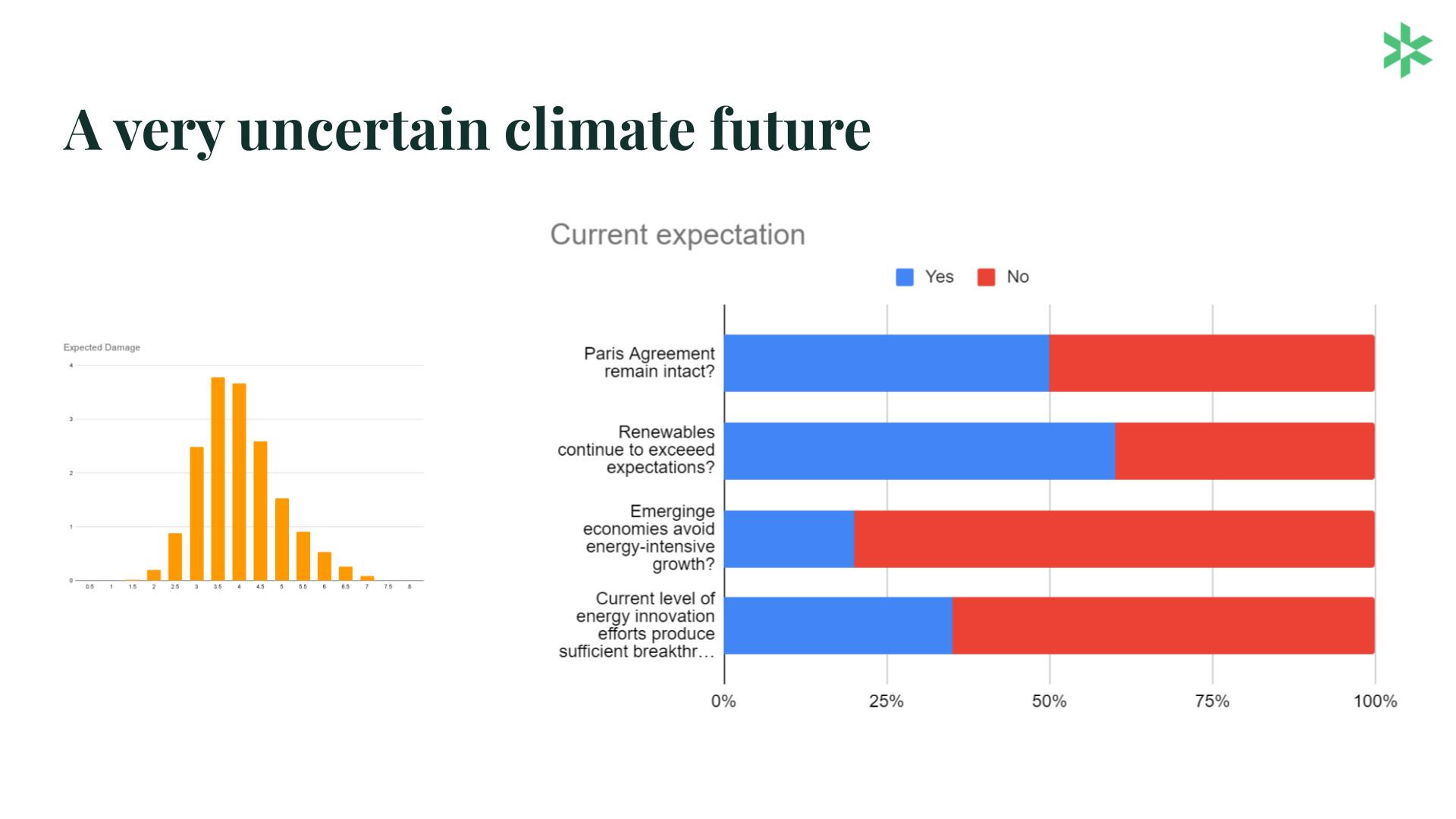

We are thus in a difficult situation: we need to make decisions even though we do not know how these large, irreducible uncertainties will resolve. Despite this, we can predict that the Ukraine war has likely increased climate risk overall. This holds even if we’re not sure whether the positive or negative considerations will dominate: more uncertainty about climate outcomes tends to increase risk even when the uncertainty cuts both ways.

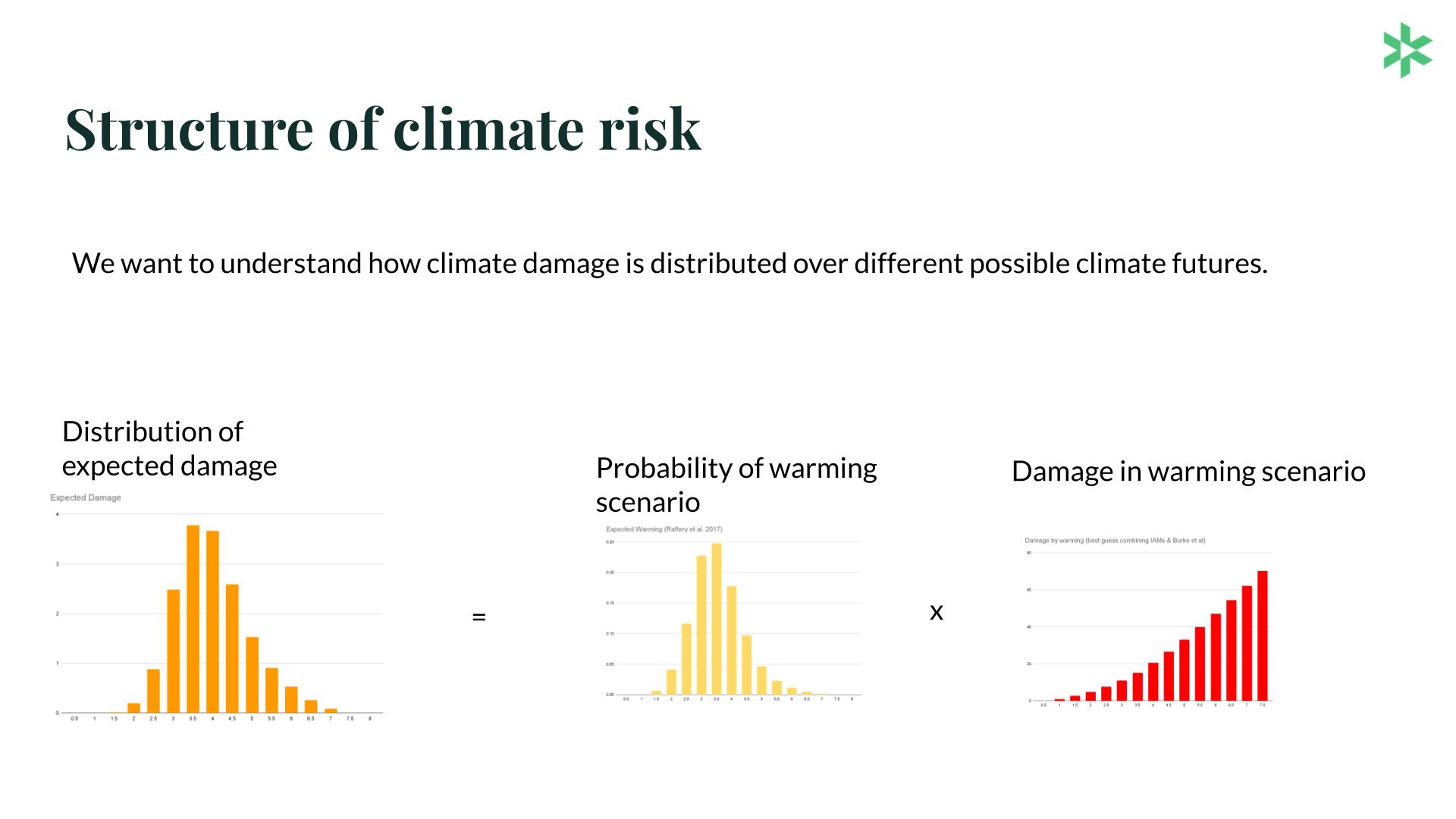

The idea of expected climate damage helps us understand this. We currently know that the planet is heating up, but we don’t know how much warmer it will get. Many different scenarios are possible, and each scenario is predicted to cause different amounts of damage. We can calculate expected climate damage (represented by the orange bar chart below) by multiplying the probability that each degree of warming will happen (yellow) by the amount of damage caused by that degree of warming (red).
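In symbols (notation introduced here for illustration, not taken from our report): if $p_i$ is the probability that warming ends up at level $T_i$ and $D(T_i)$ is the damage at that level, each warming level contributes $p_i \, D(T_i)$ to expected damage, and total expected damage is the sum of those contributions:

$$
\mathbb{E}[D] \;=\; \sum_i p_i \, D(T_i), \qquad \text{with } \sum_i p_i = 1.
$$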

As you might expect, more global warming is likely to cause more damage. Less intuitively, the amount of damage increases nonlinearly with warming. We might think that since 6 degrees of warming is twice as much as 3 degrees, 6 degrees will cause twice as much damage. In fact, on plausible estimates, it’s predicted to cause about five times as much damage.
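To see what “about five times as much damage” implies, here is a minimal sketch that assumes, purely for illustration, a power-law damage function D(T) = T^k. The exponent k is an assumption chosen so that doubling warming multiplies damage by five (k = log2 5, roughly 2.32); it is not the damage function used in our research, and real damage estimates need not follow a power law.

```python
import math

# Illustrative assumption: damage follows a power law D(T) = T**k,
# with k picked so that doubling warming multiplies damage by 5.
DAMAGE_RATIO_ON_DOUBLING = 5.0
EXPONENT = math.log2(DAMAGE_RATIO_ON_DOUBLING)  # roughly 2.32


def damage(warming_c: float) -> float:
    """Hypothetical damage, in arbitrary units, at `warming_c` degrees of warming."""
    return warming_c ** EXPONENT


if __name__ == "__main__":
    for t in (2.0, 3.0, 4.0, 6.0):
        print(f"{t:.0f} degrees -> damage {damage(t):.1f}")
    # Twice the warming, roughly five times the damage:
    print(f"6 vs 3 degrees: {damage(6.0) / damage(3.0):.2f}x")  # 5.00x
```

With a linear damage function the 6-versus-3 ratio would be exactly 2; any exponent above 1 makes damage grow faster than warming, which is what drives the argument that follows.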

See my talk at the Stanford Existential Risk Initiative (SERI) Conference 2022 for a more detailed discussion of this issue.

This means that expected climate damage increases with uncertainty, because the higher degrees of warming are predicted to be so much more damaging than the lower ones.[1]

Currently, it’s pretty unlikely that we’ll get less than 2 degrees of warming, or more than 4 degrees. If the war makes both of these outcomes more likely, you might think that climate risk stays the same, since the war could make things either better or worse. But because higher degrees of warming are so much more damaging, an increase in the chance of those higher degrees outweighs a comparable increase in the chance of optimistic scenarios.
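A toy calculation makes this concrete. The sketch below compares two made-up warming distributions with the same mean (3 degrees); the second widens the first symmetrically, adding probability both below 2 degrees (1.5) and above 4 degrees (4.5). The probabilities and the power-law damage function are illustrative assumptions, not estimates from our research. With linear damage, the wider distribution has the same expected damage; with convex damage, its expected damage is higher, a standard consequence of convexity (the same logic behind Jensen’s inequality).

```python
import math
from collections.abc import Callable

# Illustrative assumption: convex (power-law) damage, doubling warming -> 5x damage.
EXPONENT = math.log2(5.0)


def convex_damage(t: float) -> float:
    return t ** EXPONENT


def linear_damage(t: float) -> float:
    return t


def expected(damage: Callable[[float], float], dist: dict[float, float]) -> float:
    """Probability-weighted damage over warming levels (degrees C)."""
    if not math.isclose(sum(dist.values()), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * damage(t) for t, p in dist.items())


def mean_warming(dist: dict[float, float]) -> float:
    return sum(p * t for t, p in dist.items())


# Made-up distributions. "wider" moves 0.05 of probability from 2 to 1.5 degrees
# and 0.05 from 4 to 4.5 degrees: the better and worse tails grow equally,
# so mean warming stays at 3 degrees.
baseline = {2.0: 0.25, 3.0: 0.50, 4.0: 0.25}
wider = {1.5: 0.05, 2.0: 0.20, 3.0: 0.50, 4.0: 0.20, 4.5: 0.05}

if __name__ == "__main__":
    # Same mean and same linear expected damage; convex expected damage is higher for "wider".
    print(f"mean warming:  {mean_warming(baseline):.2f} vs {mean_warming(wider):.2f}")
    print(f"linear damage: {expected(linear_damage, baseline):.2f} vs {expected(linear_damage, wider):.2f}")
    print(f"convex damage: {expected(convex_damage, baseline):.2f} vs {expected(convex_damage, wider):.2f}")
```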

Philanthropists should value solutions that are robust and hedgy

This might sound abstract and theoretical, but it actually has profound implications for what we ought to do if we want interventions against climate change to have the greatest possible impact.

To understand this, consider the following two facts:

First, we are not yet – and might never be – in a situation where governments prioritize climate policy when they make decisions that gravely affect emissions. When push comes to shove, energy security and affordability concerns will dominate climate concerns. This does not mean we should be fatalistic – sometimes energy security and climate concerns point in the same direction, and decarbonization policies become more popular than they otherwise would have been thanks to their energy security benefits. Indeed, this is the primary reason for optimism about the war’s climate implications discussed above.

But it does mean that much of what we care about as climate philanthropists is beyond our control and depends on exogenous circumstances, such as geopolitical conditions, global economic growth and energy demand. The current shifts in climate trajectories, both positive and negative, are driven not by climate philanthropy or even climate policy, but by large-scale geopolitical and economic shocks.

Second, we can predict what worlds with high climate risk are likely to look like.

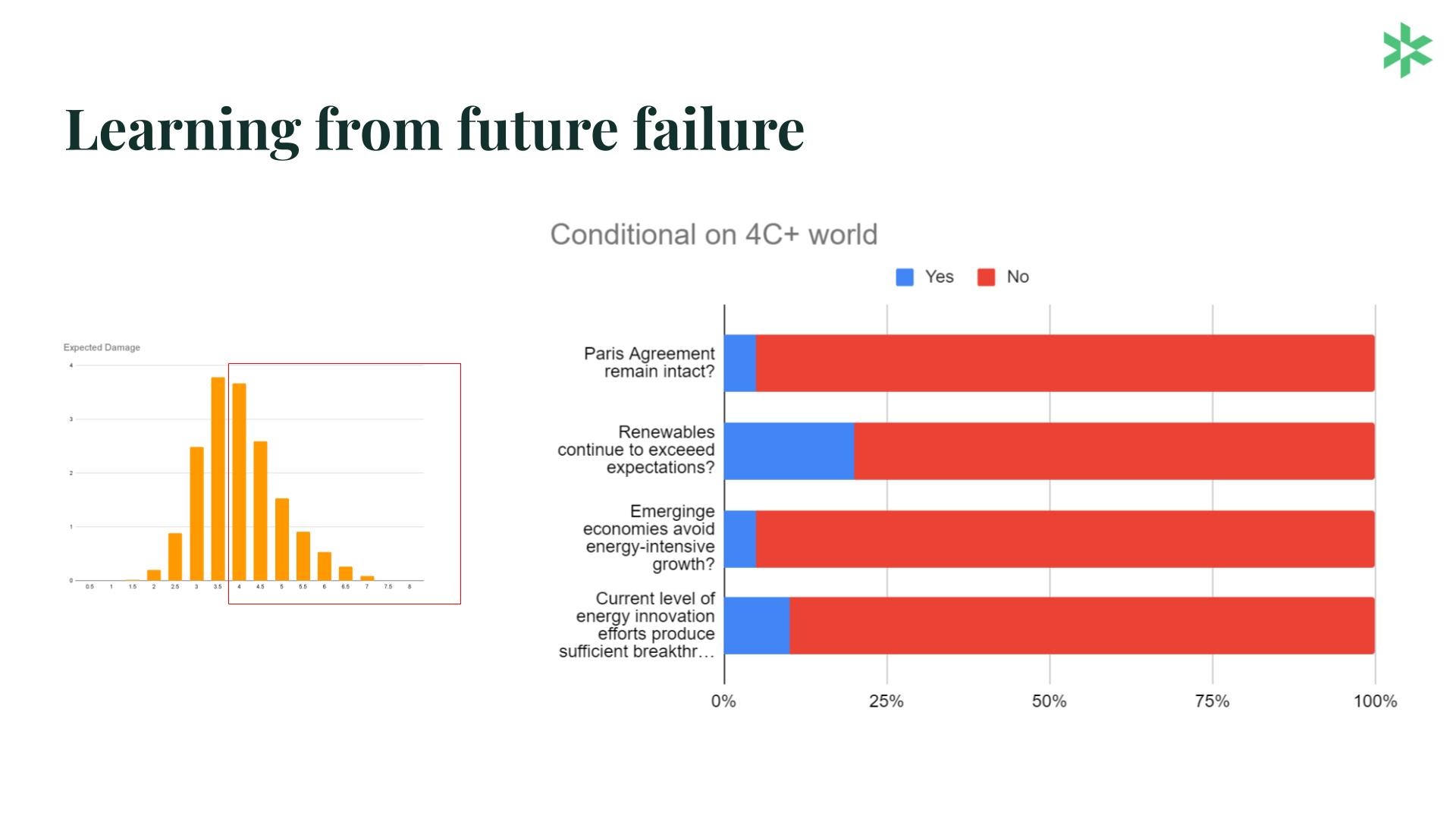

For example, in possible future worlds where the invasion of Ukraine did increase climate risk, we can predict that international climate policy has been derailed, countries have walked back domestic climate policy targets, and changes in energy policy have turned out to be dominated by additional exploitation of domestic fossil fuels rather than an accelerated clean energy transition.

We can also imagine futures that have changed for the better, for example a future where the world sought greater independence from autocratic fossil fuel suppliers. However, given the nonlinear relationship between warming and damage, the more pessimistic future accounts for most of the change in climate risk.

It is thus the kind of future we should pay most attention to when seeking to minimize climate damage. As we wrote last year (p.65, emphases added):

"The one [background condition affecting climate progress] we are worried about the most is intense geopolitical competition between great powers or even a great power war, [...]. This is our main argument against being relatively optimistic on decarbonization progress, the main way in which we could end up in 3+ degree worlds are those where current momentum whittles down."

While we are hopefully still far from a great power war, it is clear that geopolitical tensions have increased significantly and there is a very real risk of international collaboration (on climate) breaking down.

Importantly, the scenario literature on climate futures, along with other work, clearly points to conditions that are likely to be present on a high-emissions pathway, in particular:

- a breakdown of international cooperation (around climate or more broadly);

- a relative failure of intermittent renewables (wind and solar) to decarbonize the entire energy system;

- energy-intensive growth, particularly in emerging economies;

- potentially a technology-fueled growth explosion.

As absurd as it might sound, we should thus learn from future failure and pursue plans that are robust – working under likely failure conditions – or, even better, that have a hedging quality – addressing likely failure conditions.

Figure: Key uncertainties without further knowledge about the severity of climate damage, compared with key uncertainties knowing we are in a high-damage world.

[While a risk-informed perspective has some obvious implications for which conditions to be robust to and which failure modes to hedge against (such as those listed here), we believe there are likely many more, and we would welcome suggestions on what to research further.]

An example of a robust plan is advancing clean energy legislation such as the Inflation Reduction Act. This makes sense on the grounds of both national self-interest and green industrial policy, and doesn’t rely on international collaboration to become feasible or effective. Some interventions that hedge against high-damage worlds are advanced geothermal power, advanced nuclear power, and other alternatives to solar and wind: these protect against worlds where intermittent renewables “fail”, one of the conditions where climate damage is high.

Among its many awful consequences, the war in Ukraine shows us this: when we choose climate interventions, it’s really important to remember that our best-laid plans can easily be thwarted. In the worst future worlds, we can’t rely on optimistic assumptions. We can’t trust that a few favored climate solutions will work, or that rival nations will be willing and able to cooperate on the most effective interventions. Rather, climate philanthropists should prioritize more robust solutions: solutions that will work even if lots of other things go wrong. While these interventions might not be needed in luckier worlds, in the scariest possible futures, where climate risks are the highest and severe damage is the most likely, we’ll be grateful for them.

About Founders Pledge

Founders Pledge is a community of over 1,700 tech entrepreneurs finding and funding solutions to the world’s most pressing problems. Through cutting-edge research, world-class advice, and end-to-end giving infrastructure, we empower members to maximize their philanthropic impact by pledging a meaningful portion of their proceeds to charitable causes. Since 2015, our members have pledged over $7 billion and donated more than $700 million globally. As a non-profit, we are grateful to be community supported. Together, we are committed to doing immense good. founderspledge.com.

[1] Note that this does not hold under all possible conditions: if the shift in probability towards more positive outcomes were sufficiently larger than the shift towards more negative scenarios, it could outweigh the greater importance (additional expected damage) of higher probability mass on more negative scenarios. However, this seems unlikely in the current situation, where significant negative and positive effects exist and there is no consensus that the overall balance of considerations points towards a primarily positive change.
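A toy illustration of this caveat, using made-up probabilities and a hypothetical convex (power-law) damage function: starting from a baseline, both variants move 2 percentage points of probability from 3 to 5 degrees, and additionally move either a small (2 points) or a large (15 points) amount from 3 to 2 degrees. In this sketch, expected damage falls only when the shift towards the better outcome is large enough.

```python
import math

# Illustrative assumption: convex (power-law) damage, doubling warming -> 5x damage.
EXPONENT = math.log2(5.0)


def expected_damage(dist: dict[float, float]) -> float:
    """Sum over warming levels (degrees C) of probability x hypothetical damage."""
    if not math.isclose(sum(dist.values()), 1.0):
        raise ValueError("probabilities must sum to 1")
    return sum(p * t ** EXPONENT for t, p in dist.items())


# Made-up distributions over warming outcomes (degrees C -> probability).
baseline = {2.0: 0.25, 3.0: 0.50, 4.0: 0.25}
# Both variants move 0.02 of probability from 3 to 5 degrees (worse) ...
small_good_shift = {2.0: 0.27, 3.0: 0.46, 4.0: 0.25, 5.0: 0.02}  # ... and 0.02 from 3 to 2 degrees
large_good_shift = {2.0: 0.40, 3.0: 0.33, 4.0: 0.25, 5.0: 0.02}  # ... and 0.15 from 3 to 2 degrees

if __name__ == "__main__":
    base = expected_damage(baseline)
    # Small good shift: expected damage rises. Large good shift: it falls.
    for name, dist in [("small good shift", small_good_shift), ("large good shift", large_good_shift)]:
        print(f"{name}: change in expected damage {expected_damage(dist) - base:+.2f}")
```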