One criticism of longtermism is that people don't see where it differs from common-sense morality: everyone agrees x-risk is bad.

One question on which they disagree is when it's worth engaging in nuclear war to prevent advanced AI. Longtermism says a 5% risk of extinction is much worse than a certainty of killing 5% of people, because extinction also forecloses every future person. Shorttermism says the two are on par, since both amount to 5% of people killed in expectation.

Whether the two positions come apart depends on the risk of extinction from advanced AI and the number of people a nuclear war would kill. I think they are highly likely to come apart for AI extinction risks between 0.001% and 10%, and I'm less sure outside those bounds.
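To make the comparison concrete, here is a minimal Python sketch of the expected-deaths arithmetic. It assumes, hypothetically, that a nuclear war kills a fixed fraction of the present population but does not cause extinction, that extinction additionally forecloses some number of future people which only the longtermist counts, and that each view simply picks the option with fewer expected deaths. The function names and the population figures are illustrative placeholders, not estimates.

```python
def expected_deaths_from_ai(p_extinction: float, present: float, future: float,
                            count_future: bool) -> float:
    """Expected deaths if advanced AI is allowed to proceed.

    Extinction kills everyone alive now; on the longtermist view
    (count_future=True) it also forecloses every future person.
    """
    at_stake = present + (future if count_future else 0.0)
    return p_extinction * at_stake


def expected_deaths_from_war(frac_killed: float, present: float) -> float:
    """Deaths from a certain nuclear war.

    Assumption: the war kills a fixed fraction of the present population
    but does not cause extinction, so future people are unaffected.
    """
    return frac_killed * present


def prefers_war(p_extinction: float, frac_killed: float, present: float,
                future: float, count_future: bool) -> bool:
    """True if war has strictly fewer expected deaths than letting AI proceed."""
    return (expected_deaths_from_war(frac_killed, present)
            < expected_deaths_from_ai(p_extinction, present, future, count_future))


def disagreement_interval(frac_killed: float, present: float,
                          future: float) -> tuple[float, float]:
    """Range (low, high] of p_extinction where the longtermist prefers war
    but the shorttermist does not."""
    low = frac_killed * present / (present + future)  # longtermist threshold
    high = frac_killed                                # shorttermist threshold
    return low, high


# Hypothetical inputs, for illustration only.
PRESENT = 8e9   # people alive now (rough)
FUTURE = 1e16   # hypothetical number of future people
low, high = disagreement_interval(0.1, PRESENT, FUTURE)
print(f"Views disagree for p_extinction in ({low:.2e}, {high:.2%}]")
print(prefers_war(0.01, 0.1, PRESENT, FUTURE, count_future=True))
print(prefers_war(0.01, 0.1, PRESENT, FUTURE, count_future=False))
```

Under these assumptions the shorttermist goes to war only when the extinction risk exceeds the fraction a war would kill, while the longtermist's threshold shrinks toward zero as the count of future people grows. That gap is the range in which the two positions come apart.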

Note that actual policy proposals incur a risk of nuclear war, not a certainty; I'm only analysing the case of certain nuclear war because it makes the difference clearer.

Longtermism does not necessarily entail the position that more people is better, but the two positions are strongly associated in my mind. If your concern is purely minimising suffering with no discount rate, then it's much less clear what position you'd take on this issue.

I think I favour avoiding nuclear war when the probability of x-risk from AI is smallish, but I wasn't particularly sympathetic to "lots-of-people-longtermism" to begin with.

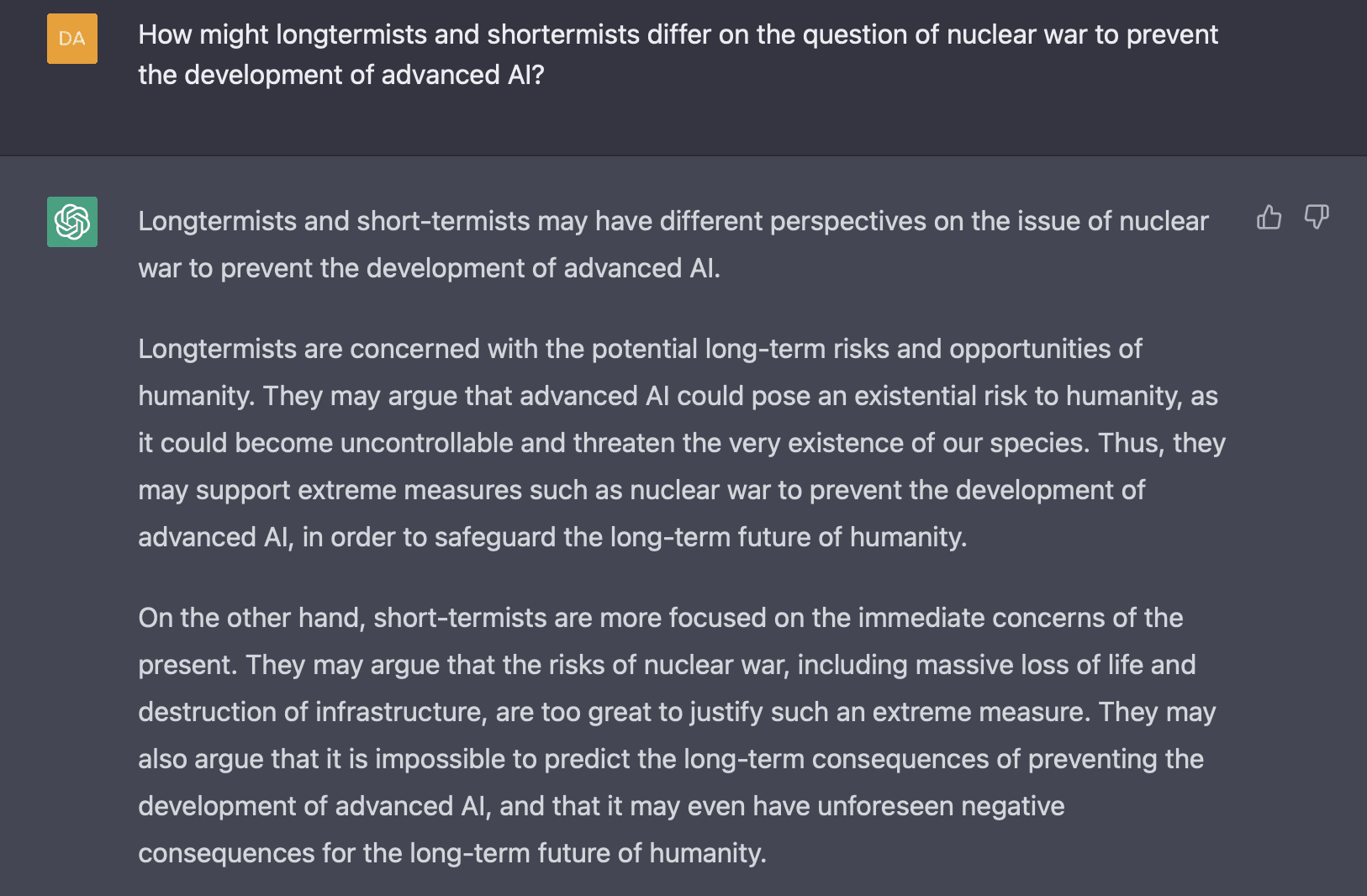

As evidence that I’m not taking some niche interpretation of the position, GPT-3.5 comes to a similar conclusion with no special prompting:

I think moral as well as empirical disagreements might motivate some policy disagreements on AI. MIRI, for example, often indicates sympathy for some variety of longtermism: Eliezer is interested in achieving an outcome “20% as good as an aligned superintelligence”, Nate talks about the necessity of superintelligence for an amazing future, and I feel like I remember a comment where Rob said that only a fraction of his “x-risk” probability was actually on extinction, but this is the closest I can find right now. I think they all consider actual extinction to have a high-ish probability, but I don’t know exactly how high any of them thinks it is. It would probably help to be clear about how much of the difference in policy stems from moral versus epistemic disagreements.